Optimization of GPU-Based Surround View on TI's Tda2x

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

OMAP2430 Applications Processor

TM Technology for Innovators OMAP2430 applications processor All-in-One Key features entertainment • Advanced Imaging, Video and Audio Accelerator (IVA™ 2) boosts video for 3G mobile performance in mobile phones by up to 4X and imaging performance by up to 1.5X phones • Delivering a multimedia experience with consumer electronics quality to the handset • Multi-engine parallel processing architecture for supporting complex usage scenarios • Built-in M-Shield™ mobile security technology enables value-added services and terminal security • Support for all major High Level Operating Systems (HLOS) aids applications development PRODUCT BULLETIN Leading imaging The new OMAP2430 processor from Texas Instruments (TI) delivers a new and video level of multimedia performance to third-generation (3G) mobile phones and performance other handheld systems. Leveraging TI’s proven OMAP™ 2 architecture, the OMAP2430 features an advanced imaging, video and audio accelerator (IVA™ 2) that provides a 4X improvement in video processing and 1.5X improvement in image processing over previously available solutions for mobile phones. The processor’s high video performance enables advanced codec algorithms that promote higher compression ratios allowing networks to support more data, bringing down costs for service providers and allowing them to deploy revenue- generating services such as mobile digital TV and mobile-to-mobile gaming. Support for these services, plus high-resolution, high-speed decode of standard video compression algorithms, brings a TV-like viewing quality and the familiar features of consumer electronics to mobile communications. The OMAP2430 is optimized for the complex applications characteristic of 3G wireless communications. Offering even higher performance than first-generation OMAP 2 processors while at a lower cost, the OMAP2430 processor provides the ultimate balance between multimedia performance, flexibility, power and cost. -

GS40 0.11-Μm CMOS Standard Cell/Gate Array

GS40 0.11-µm CMOS Standard Cell/Gate Array Version 1.0 January 29, 2001 Copyright Texas Instruments Incorporated, 2001 The information and/or drawings set forth in this document and all rights in and to inventions disclosed herein and patents which might be granted thereon disclosing or employing the materials, methods, techniques, or apparatus described herein are the exclusive property of Texas Instruments. No disclosure of information or drawings shall be made to any other person or organization without the prior consent of Texas Instruments. IMPORTANT NOTICE Texas Instruments and its subsidiaries (TI) reserve the right to make changes to their products or to discontinue any product or service without notice, and advise customers to obtain the latest version of relevant information to verify, before placing orders, that information being relied on is current and complete. All products are sold subject to the terms and conditions of sale supplied at the time of order acknowledgement, including those pertaining to warranty, patent infringement, and limitation of liability. TI warrants performance of its semiconductor products to the specifications applicable at the time of sale in accordance with TI’s standard warranty. Testing and other quality control techniques are utilized to the extent TI deems necessary to support this war- ranty. Specific testing of all parameters of each device is not necessarily performed, except those mandated by government requirements. Certain applications using semiconductor products may involve potential risks of death, personal injury, or severe property or environmental damage (“Critical Applications”). TI SEMICONDUCTOR PRODUCTS ARE NOT DESIGNED, AUTHORIZED, OR WAR- RANTED TO BE SUITABLE FOR USE IN LIFE-SUPPORT DEVICES OR SYSTEMS OR OTHER CRITICAL APPLICATIONS. -

Integrated Step-Down Converter with SVID Interface for Intel® CPU

Product Order Technical Tools & Support & Folder Now Documents Software Community TPS53820 SLUSE33 –FEBRUARY 2020 Integrated Step-Down Converter with SVID Interface for Intel® CPU Power Supply 1 Features 3 Description The TPS53820 device is D-CAP+ mode integrated 1• Single chip to support Intel VR13.HC SVID POL applications step-down converter for low current SVID rails of Intel CPU power supply. It provides up to two outputs to • Two outputs to support VCCANA (5.5 A) and power the low current SVID rails such as VCCANA P1V8 (4 A) (5.5 A) and P1V8 (4 A). The device employs D-CAP+ • D-CAP+™ Control for Fast Transient Response mode control to provide fast load transient • Wide Input Voltage (4.5 V to 15 V) performance. Internal compensation allows ease of use and reduces external components. • Differential Remote Sense • Programmable Internal Loop Compensation The device also provides telemetry, including input voltage, output voltage, output current and • Per-Phase Cycle-by-Cycle Current Limit temperature reporting. Over voltage, over current and • Programmable Frequency from 800 kHz to 2 MHz over temperature protections are provided as well. 2 • I C System Interface for Telemetry of Voltage, The TPS53820 device is packaged in a thermally Current, Output Power, Temperature, and Fault enhanced 35-pin QFN and operates between –40°C Conditions and 125°C. • Over-Current, Over-Voltage, Over-Temperature (1) protections Table 1. Device Information • Low Quiescent Current PART NUMBER PACKAGE BODY SIZE (NOM) • 5 mm × 5 mm, 35-Pin QFN, PowerPAD Package TPS53820 RWZ (35) 5 mm × 5 mm (1) For all available packages, see the orderable addendum at 2 Applications the end of the data sheet. -

Notes to Portfolio of Investments—March 31, 2021 (Unaudited)

Notes to portfolio of investments—March 31, 2021 (unaudited) Shares Value Common stocks: 99.36% Communication services: 9.92% Interactive media & services: 8.14% Alphabet Incorporated Class C † 4,891 $ 10,117,669 Facebook Incorporated Class A † 21,120 6,220,474 16,338,143 Wireless telecommunication services: 1.78% T-Mobile US Incorporated † 28,582 3,581,039 Consumer discretionary: 10.99% Automobiles: 1.91% General Motors Company † 66,899 3,844,017 Internet & direct marketing retail: 3.59% Amazon.com Incorporated † 2,329 7,206,112 Multiline retail: 1.69% Dollar General Corporation 16,717 3,387,199 Specialty retail: 3.80% Burlington Stores Incorporated † 15,307 4,573,732 Ulta Beauty Incorporated † 9,936 3,071,913 7,645,645 Consumer staples: 2.81% Food & staples retailing: 1.60% Sysco Corporation 40,883 3,219,127 Household products: 1.21% Church & Dwight Company Incorporated 27,734 2,422,565 Financials: 7.38% Capital markets: 4.85% CME Group Incorporated 7,970 1,627,713 Intercontinental Exchange Incorporated 24,575 2,744,536 S&P Global Incorporated 9,232 3,257,696 The Charles Schwab Corporation 32,361 2,109,290 9,739,235 Insurance: 2.53% Chubb Limited 13,181 2,082,203 Marsh & McLennan Companies Incorporated 24,610 2,997,498 5,079,701 Health care: 14.36% Biotechnology: 1.11% Alexion Pharmaceuticals Incorporated † 14,516 2,219,642 Health care equipment & supplies: 6.29% Align Technology Incorporated † 5,544 3,002,242 Boston Scientific Corporation † 79,593 3,076,269 See accompanying notes to portfolio of investments Wells Fargo VT Opportunity -

4-Phase, 140-A Reference Design for Intel® Stratix® 10 GX Fpgas Using

TI Designs: PMP20176 Four-Phase, 140-A Reference Design for Intel® Stratix® 10 GX FPGAs Using TPS53647 Description Features This reference design focuses on providing a compact, • All Ceramic Output Capacitors high-performance, multiphase solution suitable for • D-CAP+™ Modulator for Superior Current-Sharing powering Intel®Stratix® 10 GX FPGAs with a specific Capabilities and Transient Response focus on the 1SG280-1IV variant. Integrated PMBus™ • Peak Efficiency of 91.5% at 400 kHz, VIN = 12 V, allows for easy output voltage setting and telemetry of V = 0.9 V, I = 60 A key design parameters. The design enables OUT OUT programming, configuration, smart VID adjustment, • Excellent Thermal Performance Under No Airflow and control of the power supply, while providing Conditions monitoring of input and output voltage, current, power, • Overvoltage, Overcurrent, and Overtemperature and temperature. TI's Fusion Digital Power™ Designer Protection is used for programming, monitoring, validation, and • PMBus Compatibility for Output Voltage Setting characterization of the FPGA power design. and Telemetry for VIN, VOUT, IOUT, and Temperature • PMBus and Pinstrapping Programming Options Resources Applications PMP20176 Design Folder • FPGA Core Rail Power TPS53647 Product Folder CSD95472Q5MC Product Folder • Ethernet Switches Fusion Digital Power • Firewalls and Routers Product Folder Designer • Telecom and Base Band Units • Test and Measurement ASK Our E2E Experts CSD95472Q5MC Smart Power Stage CSD95472Q5MC Smart Power Stage TPS53647 ® Four-Phase Stratix 10 GX Controller Core Rail CSD95472Q5MC Smart Power Stage Onboard Load Generator CSD95472Q5MC Smart Power Intel® Load Stage Slammers Copyright © 2017, Texas Instruments Incorporated An IMPORTANT NOTICE at the end of this TI reference design addresses authorized use, intellectual property matters and other important disclaimers and information. -

GS30 Product Overview

GS30 0.15-µm CMOS Standard Cell/Gate Array Version 1.0 February, 2001 Copyright Texas Instruments Incorporated, 2001 The information and/or drawings set forth in this document and all rights in and to inventions disclosed herein and patents which might be granted thereon disclosing or employing the materials, methods, techniques, or apparatus described herein are the exclusive property of Texas Instruments. No disclosure of information or drawings shall be made to any other person or organization without the prior consent of Texas Instruments. IMPORTANT NOTICE Texas Instruments and its subsidiaries (TI) reserve the right to make changes to their products or to discontinue any product or service without notice, and advise customers to obtain the latest version of relevant information to verify, before placing orders, that information being relied on is current and complete. All products are sold subject to the terms and conditions of sale supplied at the time of order acknowledgement, including those pertaining to warranty, patent infringement, and limitation of liability. TI warrants performance of its semiconductor products to the specifications applicable at the time of sale in accordance with TI’s standard warranty. Testing and other quality control techniques are utilized to the extent TI deems necessary to support this war- ranty. Specific testing of all parameters of each device is not necessarily performed, except those mandated by government requirements. Certain applications using semiconductor products may involve potential risks of death, personal injury, or severe property or environmental damage (“Critical Applications”). TI SEMICONDUCTOR PRODUCTS ARE NOT DESIGNED, AUTHORIZED, OR WAR- RANTED TO BE SUITABLE FOR USE IN LIFE-SUPPORT DEVICES OR SYSTEMS OR OTHER CRITICAL APPLICATIONS. -

Texas Instruments Incorporated

A Progressive Digital Media business COMPANY PROFILE Texas Instruments Incorporated REFERENCE CODE: AFDA1C52-BBF2-41FA-A70D-DE34086443A9 PUBLICATION DATE: 20 Oct 2017 www.marketline.com COPYRIGHT MARKETLINE. THIS CONTENT IS A LICENSED PRODUCT AND IS NOT TO BE PHOTOCOPIED OR DISTRIBUTED Texas Instruments Incorporated TABLE OF CONTENTS TABLE OF CONTENTS Company Overview ........................................................................................................3 Key Facts.........................................................................................................................3 SWOT Analysis ...............................................................................................................4 Texas Instruments Incorporated Page 2 © MarketLine Texas Instruments Incorporated Company Overview Company Overview COMPANY OVERVIEW Texas Instruments Incorporated (Texas Instruments, TI or 'the company') is a designer and producer of semiconductors. The company primarily provides analog integrated circuits (ICs) and embedded processors. TI sells its products to original equipment manufacturers (OEMs), original design manufacturers (ODMs), contract manufacturers and distributors through direct sales force and third-party sales representatives. It has business presence across the Americas, Asia, Europe, the Middle East and Africa. The company is headquartered in Dallas, Texas, the US. The company reported revenues of (US Dollars) US$13,370 million for the fiscal year ended December 2016 (FY2016), an increase of 2.8% -

Microsoft OMAP

TM R EAL W ORLD S IGNAL P ROCESSING Texas Instruments OMAP™ platform and Linux® OS Texas Instruments’ (TI) OMAP™ platform delivers a comprehensive family of processors, software and support providing a wide range of real-time, multimedia-rich capabilities for 2.5G and 3G mobile devices. The industry- leading combination of high-performance and power-efficient processing enables compelling applications such as MMS, video and audio content, speech recognition, advanced security, 3D interactive gaming, m-commerce, location-based services, Java™ and productivity that will attract users to next-generation devices and services. Linux® is a full-featured, Open Source operating system that is quickly becoming the OS of choice for many advanced consumer electronics applications, including 2.5G and 3G mobile devices. With full networking capabilities and a large array of development tools, middleware and applications, Linux offers many choices and flexibility. In addition, Linux offers developers convenience and more control and visibility compared to proprietary OSs: • Vendor independence – OEMs have the choice to work with a commercial Linux vendor like MontaVista or develop system software entirely themselves through Open Source. OEMs can also do a combination of both. Linux code source is freely available for application and platform development to anyone who wants to develop with it. • Customization and optimization – most of the Linux kernel and operating systems code is readily available in source form. OEMs and developers can easily leverage this open source base to tailor Linux to their particular applications; it also serves as in-depth system documentation. In short, open source Linux gives OEMs unprecedented control over their technological destiny. -

Printmgr File

Portfolio of investments—September 30, 2020 (unaudited) Shares Value Common Stocks: 98.71% Communication Services: 8.49% Interactive Media & Services: 7.01% Alphabet Incorporated Class C † 4,933 $ 7,249,537 Facebook Incorporated Class A † 19,758 5,174,620 12,424,157 Wireless Telecommunication Services: 1.48% T-Mobile US Incorporated † 22,930 2,622,275 Consumer Discretionary: 10.13% Automobiles: 1.82% General Motors Company 108,981 3,224,748 Internet & Direct Marketing Retail: 3.81% Amazon.com Incorporated † 2,144 6,750,877 Multiline Retail: 1.28% Dollar General Corporation 10,826 2,269,346 Specialty Retail: 3.22% Burlington Stores Incorporated † 16,250 3,348,963 Ulta Beauty Incorporated † 10,548 2,362,541 5,711,504 Consumer Staples: 2.61% Food & Staples Retailing: 1.51% Sysco Corporation 42,958 2,672,847 Household Products: 1.10% Church & Dwight Company Incorporated 20,884 1,957,040 Financials: 9.20% Capital Markets: 5.18% CME Group Incorporated 9,434 1,578,403 Intercontinental Exchange Incorporated 33,260 3,327,663 S&P Global Incorporated 7,447 2,685,388 The Charles Schwab Corporation 43,771 1,585,823 9,177,277 Consumer Finance: 1.19% Discover Financial Services 36,582 2,113,708 Insurance: 2.83% Chubb Limited 17,361 2,015,959 Marsh & McLennan Companies Incorporated 26,126 2,996,652 5,012,611 Health Care: 16.49% Biotechnology: 0.99% Alexion Pharmaceuticals Incorporated † 15,411 1,763,481 Health Care Equipment & Supplies: 5.52% Align Technology Incorporated † 5,029 1,646,293 Boston Scientific Corporation † 74,552 2,848,632 See accompanying notes to portfolio of investments. -

AR8031 to DP83867 and DP83869 System Rollover

(1) Application Report SNLA333–September 2019 AR8031 to DP83867 and DP83869 System Rollover Vanjari, Vibhu ABSTRACT This application report outlines the necessary and potential steps for replacing the Qualcomm Atheros AR8031 10/100/1000 Mb/s Ethernet PHY with either TI’s DP83867CR/CS/E/IR/IS or DP83869HM. Contents 1 Purpose ....................................................................................................................... 1 2 Required Changes........................................................................................................... 1 3 Potential Changes ........................................................................................................... 4 4 Informational Changes ...................................................................................................... 6 Appendix A Pinout Mapping ..................................................................................................... 9 1 Purpose While the Qualcomm Atheros AR8031 Ethernet PHY has many similarities with the TI Ethernet PHY products, the DP83867 and DP83869 offer several features that improve performance and offer greater system customization. This system rollover document outlines how to replace the Qualcomm Atheros AR8031 PHY with either TI’s DP83867CR/CS/E/IR/IS or DP83869HM by comparing differences including: • Required external components • Pin functions • Feature set • Register operation The impact to a design is dependent on PHY configuration and features used. 2 Required Changes This section describes -

Apple V. Fintiv IPR2020-00019

[email protected] Paper No. 11 571-272-7822 Entered: March 20, 2020 UNITED STATES PATENT AND TRADEMARK OFFICE ____________ BEFORE THE PATENT TRIAL AND APPEAL BOARD ____________ APPLE INC., Petitioner, v. FINTIV, INC., Patent Owner. ____________ Case IPR2020-00019 Patent 8,843,125 B2 ___________ Before WILLIAM M. FINK, Vice Chief Administrative Patent Judge, and LINDA E. HORNER and LYNNE E. PETTIGREW, Administrative Patent Judges. FINK, Vice Chief Administrative Patent Judge. ORDER Conduct of the Proceeding Supplemental Briefing on Discretionary Denial 35 U.S.C. § 314(a) and 37 C.F.R. § 42.5(a) IPR2020-00019 Patent 8,843,125 B2 I. INTRODUCTION Petitioner, Apple, Inc., filed a Petition in this case on October 28, 2019, challenging certain claims of U.S. Patent No. 8,843,125 B2 (Ex. 1001, “the ’125 patent”) owned by Patent Owner, Fintiv, Inc. Paper 1 (“Pet.”). Patent Owner filed a Preliminary Response on February 15, 2020. Paper 10 (“Prelim. Resp.”). In its Preliminary Response, Patent Owner requests that the Board apply its discretion under 35 U.S.C. § 314(a) to deny institution of the requested proceeding due to the advanced state of a parallel district court litigation in which the same issues have been presented and trial has been set for November 16, 2020. Prelim. Resp. 22‒26 (citing NHK Spring Co. v. Intri-Plex Techs., Inc., IPR2018-00752, Paper 8 (PTAB Sept. 12, 2018) (precedential, designated May 7, 2019)). Although Petitioner addressed the issue briefly in the Petition, at that time no trial date had been set. See Pet. 7. -

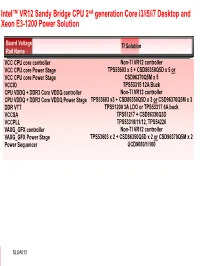

Intel VR12 Sandy Bridge CPU Power Solution

Intel™ VR12 Sandy Bridge CPU 2nd generation Core i3/i5/i7 Desktop and Xeon E3-1200 Power Solution Board Voltage TI Solution Rail Name VCC CPU core controller Non-TI VR12 controller VCC CPU core Power Stage TPS53603 x 5 + CSD86350Q5D x 5 or VCC CPU core Power Stage CSD96370Q5M x 5 VCCIO TPS53315 12A Buck CPU VDDQ + DDR3 Core VDDQ controller Non-TI VR12 controller CPU VDDQ + DDR3 Core VDDQ Power Stage TPS53603 x3 + CSD86350Q5D x 3 or CSD96370Q5M x 3 DDR VTT TPS51200 3A LDO or TPS53317 6A buck VCCSA TPS51217 + CSD86330Q3D VCCPLL TPS53310/11/12, TPS54226 VAXG_GFX controller Non-TI VR12 controller VAXG_GFX Power Stage TPS53603 x 2 + CSD86350Q5D x 2 or CSD96370Q5M x 2 Power Sequencer UCD9080/1/160 SlideSLUA613 1 IMPORTANT NOTICE Texas Instruments Incorporated and its subsidiaries (TI) reserve the right to make corrections, modifications, enhancements, improvements, and other changes to its products and services at any time and to discontinue any product or service without notice. Customers should obtain the latest relevant information before placing orders and should verify that such information is current and complete. All products are sold subject to TI’s terms and conditions of sale supplied at the time of order acknowledgment. TI warrants performance of its hardware products to the specifications applicable at the time of sale in accordance with TI’s standard warranty. Testing and other quality control techniques are used to the extent TI deems necessary to support this warranty. Except where mandated by government requirements, testing of all parameters of each product is not necessarily performed.