Arturo Miguel Batista Rodrigues Codificaç˜Ao De V´Ideo Com Um

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Color Models

Color Models Jian Huang CS456 Main Color Spaces • CIE XYZ, xyY • RGB, CMYK • HSV (Munsell, HSL, IHS) • Lab, UVW, YUV, YCrCb, Luv, Differences in Color Spaces • What is the use? For display, editing, computation, compression, …? • Several key (very often conflicting) features may be sought after: – Additive (RGB) or subtractive (CMYK) – Separation of luminance and chromaticity – Equal distance between colors are equally perceivable CIE Standard • CIE: International Commission on Illumination (Comission Internationale de l’Eclairage). • Human perception based standard (1931), established with color matching experiment • Standard observer: a composite of a group of 15 to 20 people CIE Experiment CIE Experiment Result • Three pure light source: R = 700 nm, G = 546 nm, B = 436 nm. CIE Color Space • 3 hypothetical light sources, X, Y, and Z, which yield positive matching curves • Y: roughly corresponds to luminous efficiency characteristic of human eye CIE Color Space CIE xyY Space • Irregular 3D volume shape is difficult to understand • Chromaticity diagram (the same color of the varying intensity, Y, should all end up at the same point) Color Gamut • The range of color representation of a display device RGB (monitors) • The de facto standard The RGB Cube • RGB color space is perceptually non-linear • RGB space is a subset of the colors human can perceive • Con: what is ‘bloody red’ in RGB? CMY(K): printing • Cyan, Magenta, Yellow (Black) – CMY(K) • A subtractive color model dye color absorbs reflects cyan red blue and green magenta green blue and red yellow blue red and green black all none RGB and CMY • Converting between RGB and CMY RGB and CMY HSV • This color model is based on polar coordinates, not Cartesian coordinates. -

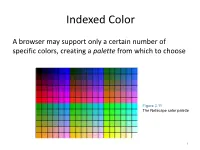

Indexed Color

Indexed Color A browser may support only a certain number of specific colors, creating a palette from which to choose Figure 3.11 The Netscape color palette 1 QUIZ How many bits are needed to represent this palette? Show your work. 2 How to digitize a picture • Sample it → Represent it as a collection of individual dots called pixels • Quantize it → Represent each pixel as one of 224 possible colors (TrueColor) Resolution = The # of pixels used to represent a picture 3 Digitized Images and Graphics Whole picture Figure 3.12 A digitized picture composed of many individual pixels 4 Digitized Images and Graphics Magnified portion of the picture See the pixels? Hands-on: paste the high-res image from the previous slide in Paint, then choose ZOOM = 800 Figure 3.12 A digitized picture composed of many individual pixels 5 QUIZ: Images A low-res image has 200 rows and 300 columns of pixels. • What is the resolution? • If the pixels are represented in True-Color, what is the size of the file? • Same question in High-Color 6 Two types of image formats • Raster Graphics = Storage on a pixel-by-pixel basis • Vector Graphics = Storage in vector (i.e. mathematical) form 7 Raster Graphics GIF format • Each image is made up of only 256 colors (indexed color – similar to palette!) • But they can be a different 256 for each image! • Supports animation! Example • Optimal for line art PNG format (“ping” = Portable Network Graphics) Like GIF but achieves greater compression with wider range of color depth No animations 8 Bitmap format Contains the pixel color -

Compression for Great Video and Audio Master Tips and Common Sense

Compression for Great Video and Audio Master Tips and Common Sense 01_K81213_PRELIMS.indd i 10/24/2009 1:26:18 PM 01_K81213_PRELIMS.indd ii 10/24/2009 1:26:19 PM Compression for Great Video and Audio Master Tips and Common Sense Ben Waggoner AMSTERDAM • BOSTON • HEIDELBERG • LONDON NEW YORK • OXFORD • PARIS • SAN DIEGO SAN FRANCISCO • SINGAPORE • SYDNEY • TOKYO Focal Press is an imprint of Elsevier 01_K81213_PRELIMS.indd iii 10/24/2009 1:26:19 PM Focal Press is an imprint of Elsevier 30 Corporate Drive, Suite 400, Burlington, MA 01803, USA Linacre House, Jordan Hill, Oxford OX2 8DP, UK © 2010 Elsevier Inc. All rights reserved. No part of this publication may be reproduced or transmitted in any form or by any means, electronic or mechanical, including photocopying, recording, or any information storage and retrieval system, without permission in writing from the publisher. Details on how to seek permission, further information about the Publisher’s permissions policies and our arrangements with organizations such as the Copyright Clearance Center and the Copyright Licensing Agency, can be found at our website: www.elsevier.com/permissions . This book and the individual contributions contained in it are protected under copyright by the Publisher (other than as may be noted herein). Notices Knowledge and best practice in this fi eld are constantly changing. As new research and experience broaden our understanding, changes in research methods, professional practices, or medical treatment may become necessary. Practitioners and researchers must always rely on their own experience and knowledge in evaluating and using any information, methods, compounds, or experiments described herein. -

MAC Basics, Terminology, File Formats, Color Models

MAC Basics, Terminology, File Formats, Color Models Mac Short Cuts: command + c = Copy command + v = Paste command + i = Get Info (le version, date, manufacturer, etc.) option + drag = Copy folders and items on the same “drive” command + option + esc = Force quit (forces application to quit) also Finder > Apple > Force Quit space bar = in Finder Window gives you a quick look at les (without opening the le) Mac Operating System: Additional information on Mac OSX http://www.apple.com/ndouthow/mac/#tutorial=anatomy (video introduction to OS) Terminology: Bit- The smallest unit in computing. It can have a value of 1 or 0. Byte - A (still small) unit of information made up of 8 bits. Kilobyte (KB) - A unit of approximately 1000 bytes (1024 to be exact). Most download sites use kilobytes when they give le sizes. Megabyte (MB) - A unit of approximately one million bytes (1,024 KB). Gigabyte (GB) - Approximately 1 billion bytes (1024 MB). Most hard drive sizes are listed in gigabytes. Context: • 3 1/2" floppy drive holds 1.44 Megabytes (1,474 KB). [NOW OBSOLETE] • CD Rom holds up to 700 Megabytes (around 450 3.5 floppies). • 20 Gig hard drive will hold the same amount of info as 31 CD ROMs or 14,222 of the 3.5 floppy disks. • a typical page of text is around 4KB. File Formats: Graphics le formats dier in the way they represent image data (as pixels or vectors), in compression techniques, and which Photoshop features they support. With a few exceptions (for instance Large Document Format (PSB), Photoshop Raw, and TIFF), most file formats (withing Photoshop) cannot support documents larger than 2 GB. -

Creating Pseudocolored Images in Photoshop

If you would like, instead, to color these images in Photoshop, and Creating Pseudocolored Images in Downloaded from to make changes to the pseudocolor (and to the image itself), you can Photoshop do so by following these steps: Jerry Sedgewick 1. Make the grayscale image Indexed Color. Under Image on University of Minnesota the menu, choose Mocfe then Indexed Color. Indexed colored images [email protected] contain 256 grayscale or color values, as many as contained in an https://www.cambridge.org/core The method for creating pseudocolor in Photoshop can be done 8-bit image. in few steps, but the image will not be colored in the conventional way. This assumes that the image Is grayscale. If the image is in color, The conventional means for producing pseudocolor comes by using then change the image to grayscale first. For variously colored images, a look up table (LUT). This LUT is an overlay for making a range of such as those collected on a brightfield microscope, simply change the colors according to choices provided by the software. The colors are image to RGB: under Image on the menu, choose Mode then RGB matched to levels of brightness and darkness in the image (grayscale Color. In this scenario, the green channel is chosen as the grayscale pixel values), typically from a short to long wavelengths. In that sce- image, this most matches what we see by eye. nario, violet colors are matched to the black and near-black pixels and If the image contains shades of one color only (such as fluores- red to those dose to absolute white. -

Color in PDF/A-1

PDF/A Competence Center TechNote 0002: Color in PDF/A-1 The PDF/A-1 requirements related to color handling may be confusing to users and developers who are not familiar with color management concepts and ICC profiles. This TechNote describes the affected objects in PDF, details the require- ments of PDF/A-1 with respect to color handling, and provides recommenda- tions for color strategies in common situations. Contrary to popular belief, specifying an RGB value for, say, black does not accu- rately specify any color, but merely instructs a particular device to render the darkest possible color – which will be different on different devices. Similarly, 50% red in RGB may look like a clearly specified color, but it fails to define the red base color to use. Again, the visual results will be different on multiple out- put devices. These problems can be solved by using device-independent color specifications. Since PDF/A-1 is targeted at long-time preservation of the document’s visual appearance, device-independent color specification methods are required. PDF/A-1 makes use of several PDF concepts for specifying color without any de- pendency on actual output devices. 2008-03-14 page 1 PDF/A Competence Center 1 PDF Color Concepts 1.1 Color Spaces in PDF PDF supports various color spaces which can be grouped as follows: • Device color spaces refer to a specific output technology, and cannot be used to specify color values such that colors are reproducible in a predictable way across a range of output devices: DeviceGray, DeviceRGB, DeviceCMYK. -

Graphics File Formats Reference - Webopedia.Com Page 1 of 5

Graphics File Formats Reference - Webopedia.com Page 1 of 5 Enter a term... Term of the Day Recent Terms Did You Know? Quick Reference All Categories Home > Quick Reference > Graphics File Formats Graphics File Formats Related Terms graphics file formats » Tweet 0 Data Formats: File Formats and T Extensions » By: Vangie Beal server (Spo Posted: 05-13-2005 , Last Updated: 06-24-2010 Data Formats and Their File Exte A Quick Reference S » Data Formats and Their File Exte T » Data Formats and Their File Exte A » format » Data Formats and Their File Exte G » Data Formats and Their File Exte R » Data Formats and Their File Exte I » Data Formats and Their File Exte P » Data Formats and Their File Exte V » Data Formats and Their File Exte J » Data Formats and Their File Exte C » Graphics (n.) Refers to any computer device or program that makes a computer capable of displaying and manipulating pictures. T also refers to the images themselves. http://www.webopedia.com/quick_ref/graphics_formats.asp 20/09/2011 Graphics File Formats Reference - Webopedia.com Page 2 of 5 File Format A format for encoding information in a file. Each different type of file has a different file format. The file format specifies fir whether the file is a binary or ASCII file, and second, how the information is organized. Common Graphic & Image File Formats Some of the most used and common graphics formats used today ate TIFF, JPEG, and GIF. The Tagged Image File For (TIFF) is widely used in business, offices, and commercial printing environments. -

Computer Labs: the BMP File Format

Computer Labs: The BMP file format 2o MIEIC Pedro F. Souto ([email protected]) November 26, 2018 Digital Image File Formats XPM as we have defined has some limitations I Good only for indexed modes I It is not standard I Need to manually edit the XPMs bmp has been used by many LCOM projects in recent years I It is relatively simple, and you can use some libraries available on the Web to load bmp files I It is supported by some well know graphics editors, e.g. GIMP Example: Using C Arrays to Store XPMs static char *pic1[] = { "32 13 4", /* number of columns and rows, in pixels, and colors */ ". 0", /* ’.’ denotes color value 0 */ "x 2", /* ’x’ denotes color value 2 */ "o 14", /* .. and so on */ "+ 4", "................................", /* the map */ "..............xxx...............", "............xxxxxxx.............", ".........xxxxxx+xxxxxx..........", "......xxxxxxx+++++xxxxxxx.......", "....xxxxxxx+++++++++xxxxxxx.....", "....xxxxxxx+++++++++xxxxxxx.....", "......xxxxxxx+++++xxxxxxx.......", ".........xxxxxx+xxxxxx..........", "..........ooxxxxxxxoo...........", ".......ooo...........ooo........", ".....ooo...............ooo......", "...ooo...................ooo...." }; Question How many elements does an XPM array have? The BMP File Structure Bitmap File Header File metadata: Bitmap Info Header/DIB Header Pixmap and pixel format metadata I There are several versions, the most recent one is v5 Color Table/RGB Quad Array i.e. color palette I Used mainly for indexed color representations Pixel Array the pixmap Other structures with extra information or for padding are optional. Image with the BMP file fomat , via Wikipedia Bitmap File Header typedef struct tagBITMAPFILEHEADER { WORD bfType; // this is 2 bytes DWORD bfSize; // this is 4 bytes WORD bfReserved1; WORD bfReserved2; DWORD bfOffBits; }; Type Must be ’B”M’, in ASCII I But there other format versions Size Size of file OffBits Pixmap array offset (in file) Bitmap Info Header I Contains metadata about: Pixmap e.g. -

Digital Image Technology

Digital Image Technology Digital Image Technology Seminar Notes Douglas Dixon Manifest Technology® LLC May 2005 www.manifest-tech.com 5/2005 Copyright 2004-2005 Douglas Dixon, All Rights Reserved - www.manifest-tech.com Page 1 Digital Image Technology Digital Images • Image Characteristics – Image Types: Vector / raster, Graphics / photos, Animation – Resolution: Display, photos, scanners/ printers; DPI – Color Depth: Monochrome, gray, indexed, full; Color spaces • Image Compression – Image File Formats – Web, Photos, Print • Image / Photo Processing – Scanning, Printing – Image Editing and Enhancement 5/2005 Copyright 2004-2005 Douglas Dixon, All Rights Reserved - www.manifest-tech.com Page 2 1 Digital Image Technology Image Types • Vector / object / line art – PowerPoint, Adobe Illustrator – Graphics shapes, type, styles / attributes – Logos, for print, scale-independent • Graphics / drawings / logos – MS Paint – limited colors, flat – GIF files • Full-color / photos / continuous-tone – Grayscale and color – Adobe Photoshop – Scanned, digital photos, painting effects • Animations – Animated GIF – flip-book – Flash – scripted, programmed 5/2005 Copyright 2004-2005 Douglas Dixon, All Rights Reserved - www.manifest-tech.com Page 3 Digital Image Technology Image Resolutions • Resolution Handheld / 160 – Pixels – width x height phone 320 – Video – dots x lines PC display VGA 640 x 480 – PC – Display Properties XGA 1024 x 768 / 800 SXGA 1280 x 1024 • Capture UXGA 1600 x 1200 – Sampled – Sample rate Television NTSC 720 x 480 – Camera lens and imager High Def 720p 1280 X 720 – Scanner 1080i 1920 X 1080 Digital Cam 1 mpix ~ 1024 x 1024 • View: Perceived Resolution 2 mpix ~ 2048 x 1024 – Display size 5 mpix ~ 2500 x 1900 – Pixel size/structure 7 mpix ~ 3000 x 2400 – Viewing distance 8 mpix ~ 3264 x 2448 • Aspect Ratio 5/2005 Copyright 2004-2005 Douglas Dixon, All Rights Reserved - www.manifest-tech.com Page 4 2 Digital Image Technology Sampling: DPI vs Resolution • Scanner – Sampling rate – DPI – Dots Per Inch – 4800 x 2400, 600 x 1200, optical res vs. -

Color, Graphics and Hardware Monitors and Display

Color, graphics and hardware Monitors and Display •No two monitors display the same image in exactly the same way 1. Gamma settings - hardware setting on a monitor that controls the brightness of the pixels that display. • MAC displays lighter, PC displays a bit darker • You can “correct” for gamma settings by lightening or darkening images. 2. Color Calibration on different monitors • Everyone’s monitor will display differently • particularly if monitor has been calibrated for a special purpose. • There is no consistent color calibration that you can count on! 3. Different Display Technology: • CRT (Cathode Ray Tube) • LCD (Liquid Crystal Dislpay) • Plasma • They use different technologies and will display differently; some differences are subtle, others are not. 4. Monitor Resolution Settings: • people choose the size of the pixel they want to display. • The higher the resolution, the smaller the pixel. • Images “look” bigger on lower settings than on higher settings. Common settings are (4:3 ratio): 640 x 480, 800 x 600, 1024 x 768 • Laptops generally have a different aspect ratio for their display than traditional CRT monitors. • Be very careful when designing sites that your pages aren’t too wide for other displays. Image File Formats - standardized way to organize and store information - image files composed of either pixel or vector - vector (geometric): rasterized for display - pixel: grid, numbers for brightness, color, etc Image File Size - dimensions of image: pixels X pixels ( in X in ) - image resolution: amount of pixels -

Comparison of LSB Steganography in GIF and BMP Images

International Journal of Soft Computing and Engineering (IJSCE) ISSN: 2231-2307, Volume-3, Issue-4, September 2013 Comparison of LSB Steganography in GIF and BMP Images Eltyeb E.Abed Elgabar, Haysam A. Ali Alamin Abstract- Data encryption is not the only safe way to protect A. Basic terms data from penetration given the tremendous development in the • Cover-object, c: the original object where the message information age particularly in the field of speed of the processors has to be embedded. Cover-text, cover-image,... and the cryptanalysis. In some cases, we need new technologies or • Message, m: the message that has to be embedded in the methods to protect our secret data. There has emerged a set of new cover-object. It is also called stego-message or in the technologies that provides protection with or without data encryption technology such as data hiding. The word watermarking context mark or watermark. steganography literally means ‘covered writing’. It is derived from • Stego-object, s: The cover object, once the message has the Greek words “stegos” meaning “cover”, and “grafia” been embedded. meaning “writing”. Steganography functions to hide a secret • Stego-key, k: The secret shared between A and B to message embedded in media such as text, image, audio and video. embed and retrieve the message There are a lot of algorithms used in the field of information hiding in the media; the simplest and best known technique is B. The steganographic process Least Significant Bit (LSB). • Embedding function, E: is a function that maps the In this paper we have made a comparison and of the (LSB) tripled cover-object c, message m and stego-key k to a algorithm using the cover object as an image. -

Using Colorways

WHITE PAPER | ONYX 11 Textile Using ColorWays onyxgfx.com Introduction Colorways is a new tool for ONYX 11 and Thrive 11 Textile editions. Colorways is part of the Patterns tools in Job Editor. This tool makes it easy to replace the colors in a pattern. The set of replaced colors (colorways) can be applied to other jobs or automated using a Quick Set. Colorways supports specific file formats, indexed color TIFF, separation TIFF and multichannel PSD. This document will explain the basics of each file type and using them in Colorways. Colorways and Digital Printing Important Terms and Definitions Colorway: An Industry term that refers to a set of colors used in a pattern. Lab (L*a*b*): A device independent color space that includes all human perceivable colors. An example of a colorway is camouflage. The pattern is identical, but the colorways differ based on the type of environment. In ONYX Colorways, a single image file is used and the print operator applies a colorway based on the desired output. Designing Files for Colorways There are many design applications that can create files that are supported in Colorways. This document will use Adobe Photoshop to describe how to create each file type. Description of Indexed Color Indexed Color is a color mode used in textile work. Indexed Color produces images with a limited number of colors. It was designed to conserve file size while preserving the visual quality of the original image. File size reduction is accomplished by limiting the Figure 1: Indexed Color options in Adobe Photoshop number of colors and storing them in a color lookup table (CLUT).