Bump Mapping



Recommended publications


Steep Parallax Mapping

I3D 2005 Posters Session. Steep Parallax Mapping. Morgan McGuire* (Brown University), Max McGuire (Iron Lore Entertainment).

[Figure labels: True Surface, Bump Surface; panels: Bump Mapping, Parallax Mapping, Steep Parallax Mapping.]
Fig. 1: A single polygon with a high-frequency bump map.
Fig. 2: The viewer perceives an intersection at (P) from shading, although the true intersection occurred at (I).

Abstract. We propose a new bump mapping scheme that can produce parallax, self-occlusion, and self-shadowing for arbitrary bump maps yet is efficient enough for games when implemented in a pixel shader and uses existing data formats.

Related Work. Let E and L be unit vectors from the eye and light in a local tangent space. At a pixel, let 2-vector s be the texture coordinate (i.e. where the eye ray hits the true, flat surface) and t be the coordinate of the texel where the ray would have hit the surface if it were actually displaced by the bump map. Shading is computed by Phong illumination, using the normal to the scalar bump map surface B at t and the color of the texture map at t.

We choose t to be the first $t_i$ for which $NB[t_i]_\alpha > 1.0 - i/n$ and then shade as with other bump mapping methods:

    float step = 1.0 / n;
    vec2 dt = E.xy * bumpScale / (n * E.z);
    float height = 1.0;
    vec2 t = texCoord.xy;
    vec4 nb = texture2DLod(NB, t, LOD);
    // Step along the eye ray until it falls below the stored height.
    while (nb.a < height) {
        height -= step;
        t += dt;
        nb = texture2DLod(NB, t, LOD);
    }
    // ... Shade using N = nb.rgb
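The ray march in the excerpt above can be mirrored on the CPU to see how the loop behaves. The following is a minimal Python sketch, not the authors' shader: the function name `steep_parallax_lookup`, the `sample_height` callback (standing in for the alpha channel of the NB texture), and the iteration cap are assumptions made for illustration. Heights are assumed to lie in [0, 1].

```python
from typing import Callable, Tuple


def steep_parallax_lookup(
    sample_height: Callable[[float, float], float],
    tex_coord: Tuple[float, float],
    eye: Tuple[float, float, float],
    bump_scale: float = 0.05,
    n: int = 16,
) -> Tuple[float, float]:
    """Return the texture coordinate where the marched ray meets the height field.

    sample_height(u, v) is a hypothetical stand-in for reading NB's alpha channel.
    eye is the tangent-space eye vector E from the excerpt; eye[2] must be nonzero.
    """
    step = 1.0 / n
    ex, ey, ez = eye
    # Per-step texture-coordinate offset, as in dt = E.xy * bumpScale / (n * E.z).
    du = ex * bump_scale / (n * ez)
    dv = ey * bump_scale / (n * ez)

    height = 1.0
    u, v = tex_coord
    surface = sample_height(u, v)
    steps = 0
    # The cap on steps is an added safety guard, not part of the excerpt's loop.
    while surface < height and steps < n:
        height -= step
        u += du
        v += dv
        surface = sample_height(u, v)
        steps += 1
    return u, v
```

With a constant height of 0.5 and n = 4, the ray height drops 1.0, 0.75, 0.5 and the loop stops after two steps, so the returned coordinate is offset by two increments of dt.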

Texture Mapping

Texture Mapping. Slides from Rosalee Wolfe, DePaul University. http://www.siggraph.org/education/materials/HyperGraph/mapping/r_wolfe/r_wolfe_mapping_1.htm

1. Mapping techniques add realism and interest to computer graphics images. Texture mapping applies a pattern of color to an object. Bump mapping alters the surface of an object so that it appears rough, dented or pitted. In this example, the umbrella, background, beachball and beach blanket have texture maps. The sand has been bump mapped. These and other mapping techniques are the subject of this slide set.

2. When creating image detail, it is cheaper to employ mapping techniques than it is to use myriads of tiny polygons. The image on the right portrays a brick wall, a lawn and the sky. In actuality the wall was modeled as a rectangular solid, and the lawn and the sky were created from rectangles. The entire image contains eight polygons. Imagine the number of polygons it would require to model the blades of grass in the lawn! Texture mapping creates the appearance of grass without the cost of rendering thousands of polygons.

3. Knowing the difference between world coordinates and object coordinates is important when using mapping techniques. In object coordinates the origin and coordinate axes remain fixed relative to an object no matter how the object’s position and orientation change. Most mapping techniques use object coordinates. Normally, if a teapot’s spout is painted yellow, the spout should remain yellow as the teapot flies and tumbles through space. When using world coordinates, the pattern shifts on the object as the object moves through space.

Towards a Set of Techniques to Implement Bump Mapping

Towards a set of techniques to implement Bump Mapping. Márcio da Silva Camilo, Bernardo Nogueira S. Hodge, Rodrigo Pereira Martins, Alexandre Sztajnberg. Departamento de Informática e Ciências da Computação, Universidade Estadual do Rio de Janeiro. {pmacstronger, bernardohodge, rodrigomartins, alexszt}@ime.uerj.br

Abstract. The revolution of three-dimensional video games led to an intense development of graphical techniques and hardware. Texture-mapping hardware is now able to generate interactive computer-generated imagery with high levels of per-pixel detail. Nevertheless, traditional single-texture techniques are not able to simulate bumped surfaces decently. More traditional bump mapping techniques, such as Emboss bump mapping, most times give an undesirable or ‘fake’ appearance to wrinkles in surfaces. Bump mapping can be applied to different types of applications, ranging from computer games and 3D environment simulations to architectural projects, among others. In this paper we will examine a method that can be applied in 3D game engines, which uses texture- ...

... irregular lighting appearance without modeling its irregular patterns as true geometric perturbations, increasing the model’s polygon count, hence decreasing applications’ performance.

There are several other techniques for implementing bump mapping. Some of them, such as Emboss Mapping, do not result in a good appearance for some surfaces, in general because they use too simplistic an approximation of the light calculation [4]. Others, such as Blinn’s original bump mapping idea, are computationally expensive to calculate in real time [7]. Dot3 bump mapping gives a great final result on surface appearance and is feasible on today’s hardware in a single rendering pass. It is based on a mathematical model of lighting intensity and reflection calculated for each pixel on a surface to be rendered.
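The per-pixel lighting that Dot3 bump mapping relies on can be illustrated with a small Python sketch. It is not taken from the paper: the function names are hypothetical, and it assumes the common convention that a normal-map texel stores a tangent-space normal with each 8-bit channel mapped from [0, 255] to [-1, 1]. Only the diffuse term (the dot product that gives the technique its name) is shown.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def decode_normal(rgb: Tuple[int, int, int]) -> Vec3:
    """Map 8-bit RGB channels to a unit normal (assumed [0, 255] -> [-1, 1] encoding)."""
    x, y, z = (c / 255.0 * 2.0 - 1.0 for c in rgb)
    length = math.sqrt(x * x + y * y + z * z)
    return (x / length, y / length, z / length)


def dot3_diffuse(rgb: Tuple[int, int, int], light_dir: Vec3) -> float:
    """Diffuse intensity N . L for one texel, clamped at zero.

    light_dir is a nonzero tangent-space vector pointing from the surface to the light.
    """
    n = decode_normal(rgb)
    light_len = math.sqrt(sum(c * c for c in light_dir))
    l = tuple(c / light_len for c in light_dir)
    return max(0.0, sum(a * b for a, b in zip(n, l)))
```

A texel of (128, 128, 255) decodes to a normal pointing almost straight out of the surface, so a light directly overhead gives an intensity close to 1 and a light behind the surface gives 0.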

INTEGRATING PIXOLOGIC ZBRUSH INTO AN AUTODESK MAYA PIPELINE

Clemson University TigerPrints, All Theses, 8-2011. Micah Guy, Clemson University. Part of the Computer Sciences Commons.

Recommended Citation: Guy, Micah, "INTEGRATING PIXOLOGIC ZBRUSH INTO AN AUTODESK MAYA PIPELINE" (2011). All Theses. 1173. https://tigerprints.clemson.edu/all_theses/1173

A Thesis Presented to the Graduate School of Clemson University In Partial Fulfillment of the Requirements for the Degree Master of Fine Arts in Digital Production Arts, by Micah Carter Richardson Guy, August 2011. Accepted by: Dr. Timothy Davis, Committee Chair; Dr. Donald House; Michael Vatalaro.

ABSTRACT. The purpose of this thesis is to integrate the use of the relatively new volumetric pixel program, Pixologic ZBrush, into an Autodesk Maya project pipeline. As ZBrush is quickly becoming the industry standard in advanced character design in both film and video game work, the goal is to create a succinct and effective way for Maya users to utilize ZBrush files. Furthermore, this project aims to produce a final film of both valid artistic and academic merit. The resulting work produced a guide that followed a Maya-created film project where ZBrush was utilized in the creation of the character models, as well as noting the most useful formats and resolutions with which to approach each file.

LEAN Mapping

LEAN Mapping. Marc Olano∗ (Firaxis Games), Dan Baker† (Firaxis Games).

Figure 1: In-game views of a two-layer LEAN map ocean with sun just off screen to the right, and artist-selected shininess equivalent to a Blinn-Phong specular exponent of 13,777: (a) near, (b) mid, and (c) far. Note the lack of aliasing, even with an extremely high power.

Abstract. We introduce Linear Efficient Antialiased Normal (LEAN) Mapping, a method for real-time filtering of specular highlights in bump and normal maps. The method evaluates bumps as part of a shading computation in the tangent space of the polygonal surface rather than in the tangent space of the individual bumps. By operating in a common tangent space, we are able to store information on the distribution of bump normals in a linearly-filterable form compatible with standard MIP and anisotropic filtering hardware. The necessary textures can be computed in a preprocess or generated in real-time on the GPU for time-varying normal maps.

1 Introduction. For over thirty years, bump mapping has been an effective method for adding apparent detail to a surface [Blinn 1978]. We use the term bump mapping to refer to both the original height texture that defines surface normal perturbation for shading, and the more common and general normal mapping, where the texture holds the actual surface normal. These methods are extremely common in video games, where the additional surface detail allows a rich visual experience without complex high-polygon models.

Unfortunately, bump mapping has serious drawbacks with filtering ...

Texture Mapping

Texture Mapping. University of Texas at Austin, CS384G - Computer Graphics, Fall 2010, Don Fussell.

Reading
Required: Watt, intro to Chapter 8 and intros to 8.1, 8.4, 8.6, 8.8.
Recommended: Paul S. Heckbert. Survey of texture mapping. IEEE Computer Graphics and Applications 6(11): 56-67, November 1986.
Optional: Watt, the rest of Chapter 8. Woo, Neider, & Davis, Chapter 9. James F. Blinn and Martin E. Newell. Texture and reflection in computer generated images. Communications of the ACM 19(10): 542-547, October 1976.

What adds visual realism?
Geometry only; Phong shading; Phong shading + Texture maps.

Texture mapping
Texture mapping (Woo et al., fig. 9-1). Texture mapping allows you to take a simple polygon and give it the appearance of something much more complex. Due to Ed Catmull, PhD thesis, 1974. Refined by Blinn & Newell, 1976. Texture mapping ensures that “all the right things” happen as a textured polygon is transformed and rendered.

Non-parametric texture mapping
With “non-parametric texture mapping”: texture size and orientation are fixed; they are unrelated to size and orientation of polygon; gives cookie-cutter effect.

Parametric texture mapping
With “parametric texture mapping,” texture size and orientation are tied to the

IMAGE-BASED MODELING TECHNIQUES FOR ARTISTIC RENDERING

Clemson University TigerPrints, All Theses, 5-2010. Bynum Murray iii, Clemson University. Part of the Fine Arts Commons.

Recommended Citation: Murray iii, Bynum, "IMAGE-BASED MODELING TECHNIQUES FOR ARTISTIC RENDERING" (2010). All Theses. 777. https://tigerprints.clemson.edu/all_theses/777

A Thesis Presented to the Graduate School of Clemson University In Partial Fulfillment of the Requirements for the Degree Master of Arts, Digital Production Arts, by Bynum Edward Murray III, May 2010. Accepted by: Timothy Davis, Ph.D., Committee Chair; David Donar, M.F.A.; Tony Penna, M.F.A.

ABSTRACT. This thesis presents various techniques for recreating and enhancing two-dimensional paintings and images in three-dimensional ways. The techniques include camera projection modeling, digital relief sculpture, and digital impasto. We also explore current problems of replicating and enhancing natural media and describe various solutions, along with their relative strengths and weaknesses. The importance of artistic skill in the implementation of these techniques is covered, along with implementation within the current industry applications Autodesk Maya, Adobe Photoshop, Corel Painter, and Pixologic Zbrush. The result is a set of methods for the digital artist to create effects that would not otherwise be possible.

Real-Time Rendering of Bumpmap Shadows Taking Account of Surface Curvature

Real-time Rendering of Bumpmap Shadows Taking Account of Surface Curvature.

Koichi Onoue, The University of Tokyo, 5-1-5 Kashiwanoha, Kashiwa, Chiba, Japan. Phone: +81.4.7136.3946, Fax: +81.4.7136.3943.
Nelson Max, University of California, Davis, P. O. Box 808, L-560, Livermore, CA 94551, U.S.A. Phone: +1.925.422.4074, Fax: +1.925.422.6287.
Tomoyuki Nishita, The University of Tokyo, 5-1-5 Kashiwanoha, Kashiwa, Chiba, Japan. Phone: +81.4.7136.3942, Fax: +81.4.7136.3943.

Abstract. The bump-mapping technique is often used to represent bumps on objects such as bark on trees and craters on the moon. In order to render shadows cast by bumps, the horizon map method was proposed. The horizon map is a table which has, for each of a small collection of azimuthal directions, slopes from each viewpoint on the bump map (height field) to the corresponding horizon point, which is the highest viewable point seen from that viewpoint.

... faces cast by other objects [4]. Forsyth [5] used a three-dimensional texture to implement Max’s method in graphics hardware. However, his method did not take account of surface curvature. Shadows of the bumps on bump-mapped surfaces are called bumpmap shadows in the rest of this paper.

In this paper, we propose to render bumpmap shadows more precisely by taking account of surface curvature. When bump mapping is used, surfaces facing away from ...
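The horizon map described in the abstract above can be sketched for a flat height field. The Python below is an illustrative brute-force version, not the paper's method (which additionally accounts for surface curvature): the function names, the integer grid-step representation of an azimuthal direction, and the choice to clamp the horizon at slope 0 when nothing rises above a point are all assumptions. Texel spacing is taken to be 1.

```python
import math
from typing import List, Tuple


def horizon_slopes(height: List[List[float]], direction: Tuple[int, int]) -> List[List[float]]:
    """For each texel, the largest slope to any texel along one grid direction.

    height is indexed as height[row][col]; direction is a nonzero (dcol, drow) step.
    """
    dx, dy = direction
    if dx == 0 and dy == 0:
        raise ValueError("direction must be nonzero")
    rows, cols = len(height), len(height[0])
    step_len = math.hypot(dx, dy)
    result = [[0.0] * cols for _ in range(rows)]
    for y in range(rows):
        for x in range(cols):
            best = 0.0  # assumed floor: a flat horizon when nothing is higher
            k = 1
            cx, cy = x + dx, y + dy
            while 0 <= cx < cols and 0 <= cy < rows:
                slope = (height[cy][cx] - height[y][x]) / (k * step_len)
                best = max(best, slope)
                k += 1
                cx += dx
                cy += dy
            result[y][x] = best
    return result


def is_lit(horizon_slope: float, light_elevation: float) -> bool:
    """A texel is lit when the light's elevation angle (radians) clears its horizon."""
    return math.tan(light_elevation) >= horizon_slope
```

One such table would be stored per azimuthal direction; at shading time the table nearest the light's azimuth is consulted and the light's elevation is compared against the stored slope.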

Digital Ornament

Digital Ornament. John Elys, University of Wales Institute Cardiff / University of Lincoln.

Abstract. Gaming software has a history of fostering development of economical and creative methods to deal with hardware limitations. Traditionally the visual representation of gaming software has been a poor offspring of high-end visualization. In a twist of irony, this paper proposes that game production software leads the way into a new era of physical digital ornament. The toolbox of the rendering engine evolved rapidly between 1974 and 1985, and today, 20 years later, it is still the main component of all visualization programs. The development of the bump map is of particular interest; its evolution into a physical displacement map provides untold opportunities for the appropriation of the 2D image to a physical 3D object. To expose the creative potential of the displacement map, a wide scope of existing displacement usage has been identified: Top2maya is a scientific appropriation, Caruso St John Architects an architectural precedent, and Tord Boonje’s use of 2D digital pattern provides us with an artistic production precedent. Current gaming technologies give us an indication of how the resolution of displacement is set to enter an unprecedented level of geometric detail. As modernity was inspired by the machine age, we should be led by current technological advancement and appropriate its usage. It is about a move away from the simplification of structure and form to one that deals with the real possibilities of expanding the dialogue of surface topology. Digital Ornament is a kinetic process rather than a static one; its intentions lie in returning the choice of bespoke materials back to the Architect, Designer and Artist.

Real-Time Shell Space Rendering of Volumetric Geometry

Real-Time Shell Space Rendering of Volumetric Geometry. Nico Ritsche∗

Abstract. This work introduces a new technique for real-time rendering of arbitrary volumetric geometry into a polygonal mesh’s shell space. The shell space is a layer of variable thickness on top or below the polygonal mesh. The technique computes view ray shell geometry intersections in a pixel shader. In addition to arbitrary volumetric shell geometry, represented as volume textures, it can handle also the less general case of height-field shell geometry. To minimize the number of ray tracing steps, a 3D distance map is used for skipping empty space in the shell volume. The shell volume is defined by a pre-computed tetrahedra mesh. The tetrahedra subdivide prisms extruded from the polygonal base mesh. A vertex shader computes tetrahedron face plane distances for generating the per-pixel tetrahedron thickness using non-linear interpolation. The technique includes local lighting and overcomes shortcomings of previous shell space rendering approaches such as high storage requirements and involved per-vertex computations [Wang et al. 2004] or low shell geometry depth complexity and mapping distortions [Policarpo and Oliveira 2006]. Additionally, rendering artifacts for shallow view angles common to many related techniques are reduced. Furthermore, methods for generating a geometry volume texture and the corresponding distance map from a polygonal mesh are presented.

Figure 1: Chair shell geometry applied to a teapot base mesh under local illumination, rendered with the technique described in this work.

... towards highly programmable and high-performance vertex and pixel shading units. Recent commodity graphics hardware is capable ...

An Efficient 2.5D Shadow Detection Algorithm for Urban Planning And

International Journal of Geo-Information. Article. An Efficient 2.5D Shadow Detection Algorithm for Urban Planning and Design Using a Tensor Based Approach.

Sukriti Bhattacharya 1,*, Christian Braun 2 and Ulrich Leopold 2
1 Department for IT for Innovative Services, Luxembourg Institute of Science and Technology (LIST), L-4362 Esch-sur-Alzette, Luxembourg
2 Department for Environmental Research and Innovation, Luxembourg Institute of Science and Technology (LIST), L-4362 Esch-sur-Alzette, Luxembourg
* Corresponding author

Abstract: Urbanization is leading us to a more chaotic state where healthy living becomes a prime concern. The high-rise buildings influence the urban setting with a high shadow rate on surroundings that can have no positive impact on the general neighborhood. Nevertheless, shadows are the main factor of defeatist virtual settings, they are expensive to render in real-time. This paper investigates how the amount of sunlight varies by season and how seasons can indicate the time of year to understand how shadows vary in length at different times of the day and how they change over the seasons. We propose a novel efficient (fast and scalable) algorithm to calculate a 2.5D cast-shadow map from a given LiDAR-derived Digital Surface Model (DSM). We present a proof-of-concept demonstration to examine the technical practicability of the introduced algorithm. Tensor-based techniques such as singular value decomposition, tensor unfolding are examined and deployed to represent the multidimensional data. The proposed method exploits horizon mapping ideas and extends the method to a modern graphics algorithm (Bresenham’s line drawing algorithm) to account

Citation: Bhattacharya, S.; Braun, C.; Leopold, U.

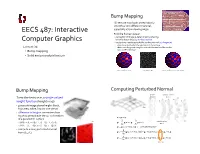

Bump and Normal Mapping; Procedural Textures

EECS 487: Interactive Computer Graphics. Lecture 26: Bump mapping; Solid and procedural texture.

Bump Mapping
A 2D texture map looks unrealistically smooth across different materials, especially at low viewing angles. Fool the human viewer:
• perception of shape is determined by shading, which is determined by the surface normal
• use a texture map to perturb the surface normal per fragment
• does not actually alter the geometry of the surface
• shade each fragment using the perturbed normal as if the surface were a different shape
[Figure panels: Sphere w/Diffuse Texture; Swirly Bump Map; Sphere w/Diffuse Texture & Bump Map]

Bump Mapping
Treat the texture as a single-valued height function (height map):
• grayscale image stores height: black, high area; white, low (or vice versa)
• difference in heights determines how much to perturb n in the (u, v) directions of a parametric surface
• $\partial b/\partial u = b_u = (h[s+1, t] - h[s-1, t])/ds$
• $\partial b/\partial v = b_v = (h[s, t+1] - h[s, t-1])/dt$
• compute a new, perturbed normal from $(b_u, b_v)$

Computing Perturbed Normal (Blinn)
[Figure labels: smooth surface $p(u,v)$ with normal $n$; wrinkle function; wrinkled surface $p'(u,v)$ with normal $n'$; TP3]
$n = p_u \times p_v$, where $p_u = \frac{\partial}{\partial u} p(u,v)$ and $p_v = \frac{\partial}{\partial v} p(u,v)$
$p' = p(u,v) + b(u,v)\,n$ (perturbed surface)
$p'_u = \frac{\partial}{\partial u}\bigl(p(u,v) + b(u,v)\,n\bigr) = p_u + b_u n + b(u,v)\,n_u \approx p_u + b_u n$
$p'_v = \frac{\partial}{\partial v}\bigl(p(u,v) + b(u,v)\,n\bigr) = p_v + b_v n + b(u,v)\,n_v \approx p_v + b_v n$
The terms $b(u,v)\,n_u$ and $b(u,v)\,n_v$ are dropped on the assumption that the bump height $b(u,v)$ is small.
[Slide annotation, truncated in the excerpt: 0: n ⊥ p]

Bump Map vs.
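The finite-difference step in the lecture excerpt above can be tried out on a small height map. The Python sketch below is illustrative only: it assumes a tangent-space frame in which $p_u = (1, 0, 0)$, $p_v = (0, 1, 0)$ and $n = (0, 0, 1)$, so that $n' = p'_u \times p'_v = (1, 0, b_u) \times (0, 1, b_v) = (-b_u, -b_v, 1)$. The function name and the clamp-to-edge border handling are assumptions; `ds` and `dt` follow the slide and denote the full distance between the two sampled texels.

```python
import math
from typing import List, Tuple


def perturbed_normal(
    h: List[List[float]], s: int, t: int, ds: float = 1.0, dt: float = 1.0
) -> Tuple[float, float, float]:
    """Unit tangent-space normal at texel (s, t) of height map h[t][s]."""
    rows, cols = len(h), len(h[0])

    def height_at(si: int, ti: int) -> float:
        # Clamp-to-edge addressing at the borders (an assumed convention).
        si = min(max(si, 0), cols - 1)
        ti = min(max(ti, 0), rows - 1)
        return h[ti][si]

    # Central differences, as in b_u = (h[s+1, t] - h[s-1, t]) / ds.
    b_u = (height_at(s + 1, t) - height_at(s - 1, t)) / ds
    b_v = (height_at(s, t + 1) - height_at(s, t - 1)) / dt

    # n' = (1, 0, b_u) x (0, 1, b_v) = (-b_u, -b_v, 1), then normalize.
    nx, ny, nz = -b_u, -b_v, 1.0
    length = math.sqrt(nx * nx + ny * ny + nz * nz)
    return (nx / length, ny / length, nz / length)
```

On a flat height map the result is the unperturbed normal (0, 0, 1); on a ramp that rises in s, the normal tilts toward negative s, which is what makes the slope appear lit from that side.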