An Analysis of Factive Cognitive Verb Complementation Patterns Used by ELT Students

Total pages: 16

File type: PDF, size: 1020 KB


Recommended publications


Lexical Ambiguity • Syntactic Ambiguity • Semantic Ambiguity • Pragmatic Ambiguity

Welcome to the course! Introduction to Natural Language Processing (NLP)
Professors: Marta Gatius Vila, Horacio Rodríguez Hontoria
Hours per week: 2h theory + 1h laboratory
Web page: http://www.cs.upc.edu/~gatius/engpln2017.html

Main goal: understand the fundamental concepts of NLP
• Most well-known techniques and theories
• Most relevant existing resources
• Most relevant applications

Content
1. Introduction to Language Processing
2. Applications
3. Language models
4. Morphology and lexicons
5. Syntactic processing
6. Semantic and pragmatic processing
7. Generation

Assessment
• Exams: mid-term exam (November); end-of-term exam (final exams period, covering all the course contents)
• Development of two programs, in groups of two or three students
Course grade = max(midterm exam × 0.15 + final exam × 0.45, final exam × 0.6) + assignments × 0.4

Related (or the same) disciplines:
• Computational Linguistics, CL
• Natural Language Processing, NLP
• Linguistic Engineering, LE
• Human Language Technology, HLT

Linguistic Engineering (LE)
• LE consists of the application of linguistic knowledge to the development of computer systems able to recognize, understand, interpret and generate human language in all its forms.
• LE includes:
  • Formal models (representations of knowledge of language at the different levels)
  • Theories and algorithms
  • Techniques and tools
  • Resources (lingware)
  • Applications

Linguistic knowledge levels
– Phonetics and phonology. Language models
– Morphology: meaningful components of words. Lexicon: "doors" is plural
– Syntax: structural relationships between words.
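As a worked check of the grading rule above, here is a minimal Python sketch. It assumes the three marks share one scale (for example 0-10); the function name and that scale are illustrative assumptions, not taken from the course page. Because of the `max`, the mid-term can only help: whenever the final exam mark is at least the mid-term mark, 0.6 × final wins.

```python
def course_grade(midterm: float, final: float, assignments: float) -> float:
    """Course grade under the stated rule (hypothetical helper name).

    Assumes all three marks are on the same scale, e.g. 0-10.
    """
    # Exam component: the better of (weighted mid-term + final) or the final alone.
    exam_part = max(midterm * 0.15 + final * 0.45, final * 0.6)
    # The programming assignments always count for 40%.
    return exam_part + assignments * 0.4


if __name__ == "__main__":
    # A weak mid-term (4) is ignored: 0.6 * 8 = 4.8 beats 0.15 * 4 + 0.45 * 8 = 4.2.
    print(round(course_grade(4, 8, 7), 2))  # 7.6
```

Taking the maximum of the two weightings, rather than a fixed average, is what lets a strong final exam replace a weak mid-term.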

Conference Abstracts

EIGHTH INTERNATIONAL CONFERENCE ON LANGUAGE RESOURCES AND EVALUATION. Held under the Patronage of Ms Neelie Kroes, Vice-President of the European Commission, Digital Agenda Commissioner. MAY 23-24-25, 2012, ISTANBUL LÜTFI KIRDAR CONVENTION & EXHIBITION CENTRE, ISTANBUL, TURKEY.

CONFERENCE ABSTRACTS. Editors: Nicoletta Calzolari (Conference Chair), Khalid Choukri, Thierry Declerck, Mehmet Uğur Doğan, Bente Maegaard, Joseph Mariani, Asuncion Moreno, Jan Odijk, Stelios Piperidis. Assistant Editors: Hélène Mazo, Sara Goggi, Olivier Hamon. © ELRA – European Language Resources Association. All rights reserved.

Title: LREC 2012 Conference Abstracts. Distributed by: ELRA – European Language Resources Association, 55-57, rue Brillat Savarin, 75013 Paris, France. Tel.: +33 1 43 13 33 33; Fax: +33 1 43 13 33 30; www.elra.info and www.elda.org. Copyright by the European Language Resources Association. ISBN 978-2-9517408-7-7, EAN 9782951740877. No part of this book may be reproduced in any form without the prior permission of the European Language Resources Association.

Introduction of the Conference Chair, Nicoletta Calzolari: I wish first to express to Ms Neelie Kroes, Vice-President of the European Commission, Digital Agenda Commissioner, the gratitude of the Program Committee and of all LREC participants for her Distinguished Patronage of LREC 2012. Even if every time I feel we have reached the top, this 8th LREC is continuing the tradition of breaking previous records: for this edition we received 1013 submissions and accepted 697 papers, after review by an impressive 715 colleagues.

Corpus Linguistics: a Practical Introduction

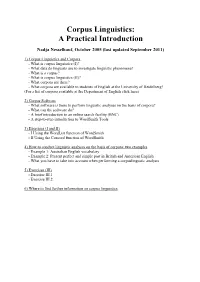

Corpus Linguistics: A Practical Introduction
Nadja Nesselhauf, October 2005 (last updated September 2011)

1) Corpus Linguistics and Corpora
- What is corpus linguistics (I)?
- What data do linguists use to investigate linguistic phenomena?
- What is a corpus?
- What is corpus linguistics (II)?
- What corpora are there?
- What corpora are available to students of English at the University of Heidelberg?
2) Corpus Software
- What software is there to perform linguistic analyses on the basis of corpora?
- What can the software do?
- A brief introduction to an online search facility (BNC)
- A step-by-step introduction to WordSmith Tools
3) Exercises (I and II)
- I: Using the WordList function of WordSmith
- II: Using the Concord function of WordSmith
4) How to conduct linguistic analyses on the basis of corpora: two examples
- Example 1: Australian English vocabulary
- Example 2: Present perfect and simple past in British and American English
- What you have to take into account when performing a corpus-linguistic analysis
5) Exercises (III)
- Exercise III.1
- Exercise III.2
6) Where to find further information on corpus linguistics

1) Corpus Linguistics and Corpora
What is corpus linguistics (I)?
Corpus linguistics is a method of carrying out linguistic analyses. As it can be used for the investigation of many kinds of linguistic questions, and as it has been shown to have the potential to yield highly interesting, fundamental, and often surprising new insights about language, it has become one of the most widespread methods of linguistic investigation in recent years.
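Exercises I and II rely on two basic corpus operations: a frequency word list and a concordance (key word in context, KWIC). WordSmith Tools is a standalone program, so the Python sketch below is not its implementation; it only illustrates the two ideas. The regex tokenizer, the function names and the context width are illustrative assumptions.

```python
import re
from collections import Counter

# Deliberately simple tokenizer (an assumption): lowercase runs of letters/apostrophes.
TOKEN_RE = re.compile(r"[a-z']+")


def tokenize(text: str) -> list[str]:
    return TOKEN_RE.findall(text.lower())


def word_list(text: str) -> list[tuple[str, int]]:
    """Frequency list, most frequent first (ties keep first-occurrence order)."""
    return Counter(tokenize(text)).most_common()


def concordance(text: str, node: str, width: int = 3) -> list[str]:
    """KWIC lines: up to `width` tokens either side of each hit of `node`."""
    tokens = tokenize(text)
    lines = []
    for i, tok in enumerate(tokens):
        if tok == node.lower():
            left = " ".join(tokens[max(0, i - width):i])
            right = " ".join(tokens[i + 1:i + 1 + width])
            # Right-align the left context so the node words line up vertically.
            lines.append(f"{left:>30} [{tok}] {right}")
    return lines


if __name__ == "__main__":
    sample = "I have seen it. She saw it yesterday. Have you seen the film?"
    print(word_list(sample)[:3])  # [('have', 2), ('seen', 2), ('it', 2)]
    for line in concordance(sample, "seen"):
        print(line)
```

Aligning the node word in a fixed column is what makes concordance lines quick to scan, for instance when checking which contexts favour the present perfect over the simple past.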

A New Venture in Corpus-Based Lexicography: Towards a Dictionary of Academic English

A New Venture in Corpus-Based Lexicography: Towards a Dictionary of Academic English
Iztok Kosem and Ramesh Krishnamurthy

1. Introduction
This paper asserts the increasing importance of academic English in an increasingly Anglophone world, and looks at the differences between academic English and general English, especially in terms of vocabulary. The creation of wordlists has played an important role in trying to establish the academic English lexicon, but these wordlists are not based on appropriate data, or are implemented inappropriately. There is as yet no adequate dictionary of academic English, and this paper reports on new efforts at Aston University to create a suitable corpus on which such a dictionary could be based.

2. Academic English
The increasing percentage of academic texts published in English (Swales, 1990; Graddol, 1997; Cargill and O'Connor, 2006) and the increasing numbers of students (both native and non-native speakers of English) at universities where English is the language of instruction (Graddol, 2006) testify to the important role of academic English. At the same time, research has shown that there is a significant difference between academic English and general English. The research has focussed mainly on vocabulary: the lexical differences between academic English and general English have been thoroughly discussed by scholars (Coxhead and Nation, 2001; Nation, 2001, 1990; Coxhead, 2000; Schmitt, 2000; Nation and Waring, 1997; Xue and Nation, 1984), and Coxhead and Nation (2001: 254–56) list the following four distinguishing features of academic vocabulary: "1. Academic vocabulary is common to a wide range of academic texts, and generally not so common in non-academic texts.
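The first feature quoted above combines two measurable ideas: range (how many disciplines a word occurs in) and relative frequency compared with general English. The Python sketch below shows one way to operationalise them. The function names, the thresholds and the per-million normalisation are illustrative assumptions; this is not the procedure used by Coxhead and Nation or by the Aston project.

```python
from collections import Counter


def per_million(counts: Counter, word: str) -> float:
    """Relative frequency of `word`, in occurrences per million tokens."""
    total = sum(counts.values())
    return counts[word] * 1_000_000 / total if total else 0.0


def academic_candidates(academic: dict[str, list[str]], general: list[str],
                        min_range: int, min_ratio: float) -> list[str]:
    """Words found in at least `min_range` disciplines and at least `min_ratio`
    times more frequent (per million) in academic than in general text.

    `academic` maps a discipline name to its token list; `general` is a token list.
    """
    per_discipline = {name: Counter(toks) for name, toks in academic.items()}
    academic_all: Counter = Counter()
    for counts in per_discipline.values():
        academic_all.update(counts)
    general_counts = Counter(general)

    selected = []
    for word in academic_all:
        # Range: number of disciplines in which the word appears at all.
        word_range = sum(1 for counts in per_discipline.values() if word in counts)
        if word_range < min_range:
            continue
        general_freq = per_million(general_counts, word)
        # A word absent from the general corpus gets an infinite ratio.
        ratio = (per_million(academic_all, word) / general_freq
                 if general_freq else float("inf"))
        if ratio >= min_ratio:
            selected.append(word)
    return sorted(selected)


if __name__ == "__main__":
    # Tiny invented corpora, for illustration only.
    academic = {
        "biology": "the data indicate a significant effect".split(),
        "law": "the cases indicate a significant precedent".split(),
        "history": "the sources indicate a significant shift".split(),
    }
    general = "the cat and the dog saw a big show a significant one".split()
    print(academic_candidates(academic, general, min_range=3, min_ratio=1.5))
    # ['indicate', 'significant']
```

The range filter removes discipline-specific words such as "precedent", while the frequency ratio removes words like "the" that are common everywhere.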

Compilation of a Spontaneous Speech Corpus of Putonghua Chinese for Use in Teaching It as a Second Language to Spanish Speakers

Universidad Autónoma de Madrid, Department of General Linguistics, Modern Languages, Logic and Philosophy of Science, Theory of Literature and Comparative Literature, Computational Linguistics Laboratory. Compilation of a spontaneous speech corpus of Putonghua Chinese for use in teaching it as a second language to Spanish speakers. DONG Yang. Doctoral thesis supervised by Dr. Antonio Moreno Sandoval, 2011.

Acknowledgements. First of all, I would like to thank the supervisor of this thesis, Dr. Antonio Moreno Sandoval, especially for accepting me to carry out this thesis under his supervision. I would also like to thank him for the trust and patience he has placed in this work, as well as for his constant encouragement and his comments and advice, which have been so valuable for my thesis. Thanks to the scholarship granted by the Spanish Agency for International Cooperation (Agencia Española de Cooperación Internacional), I was able to pursue my studies in the Doctoral Programme at the Faculty of Philosophy and Letters of the Universidad Autónoma de Madrid and devote myself fully to the thesis. To Dr. Taciana Fisac, coordinator of the Doctoral Programme "España y Latinoamérica Contemporáneas" (Contemporary Spain and Latin America) and professor at the Centre for East Asian Studies of the Universidad Autónoma de Madrid, I extend my sincere thanks for her availability, generosity and unconditional help at all times. It was she who organized and promoted this Doctoral Programme of the Universidad Autónoma de Madrid, in collaboration with Beijing Foreign Studies University. I would like to thank the members of the Computational Linguistics Laboratory of the Universidad Autónoma de Madrid for the help they have offered me during these years of learning. It has been a very important source of professional support.

Corpus Studies in Applied Linguistics

Pietilä, P. & O-P. Salo (eds.) 1999. Multiple Languages – Multiple Perspectives. AFinLA Yearbook 1999. Publications de l'Association Finlandaise de Linguistique Appliquée 57. pp. 105–134.

CORPUS STUDIES IN APPLIED LINGUISTICS
Kay Wikberg, University of Oslo; Stig Johansson, University of Oslo; Anna-Brita Stenström, University of Bergen; Tuija Virtanen, University of Växjö

Three samples of corpora and corpus-based research of great interest to applied linguistics are presented in this paper. The first is the Bergen Corpus of London Teenage Language, a project which has already resulted in a number of investigations of how young Londoners use their language. It has also given rise to a related Nordic project, UNO, and to the project EVA, which aims at developing material for assessing the English proficiency of pupils in the compulsory school system in Norway. The second corpus is the English-Norwegian Parallel Corpus (Oslo), which has provided data for both contrastive studies and the study of translationese. Altogether it consists of about 2.6 million words and now also includes translations of English texts into German, Dutch and Portuguese. The third corpus, the International Corpus of Learner English, is a collection of advanced EFL essays written by learners representing 15 different mother tongues. By comparing linguistic features in the various subcorpora it is possible to find out about non-nativeness generally and about problems shared by students representing different languages.

1 INTRODUCTION
1.1 Corpus studies and descriptive linguistics
Corpus-based language research has now been with us for more than 20 years. The number of recent books dealing with corpus studies (cf.
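Comparing a feature across learner subcorpora of different sizes only works after normalising the counts. The Python sketch below assumes a native-speaker reference subcorpus (the passage does not name one), normalises hits per 10,000 words, and flags a feature as a possible sign of general non-nativeness when every learner group overuses it. The function names, the threshold of 1.5 and all counts are invented for illustration; they are not ICLE results.

```python
def per_base(hits: int, tokens: int, base: int = 10_000) -> float:
    """Normalised frequency: occurrences per `base` running words."""
    return hits * base / tokens


def relative_to_reference(hits: dict[str, int], sizes: dict[str, int],
                          reference: str) -> dict[str, float]:
    """Ratio of each subcorpus's normalised frequency to the reference's.

    Above 1 suggests overuse, below 1 underuse. Assumes the reference count is non-zero.
    """
    ref = per_base(hits[reference], sizes[reference])
    return {name: per_base(hits[name], sizes[name]) / ref
            for name in hits if name != reference}


def shared_overuse(ratios: dict[str, float], threshold: float = 1.5) -> bool:
    """True if every learner subcorpus uses the feature at least `threshold` times as often."""
    return all(r >= threshold for r in ratios.values())


if __name__ == "__main__":
    # Invented toy counts for a single feature in three subcorpora.
    hits = {"native": 50, "learners_L1_A": 120, "learners_L1_B": 81}
    sizes = {"native": 100_000, "learners_L1_A": 150_000, "learners_L1_B": 90_000}
    ratios = relative_to_reference(hits, sizes, reference="native")
    print(ratios)  # {'learners_L1_A': 1.6, 'learners_L1_B': 1.8}
    print(shared_overuse(ratios))  # True
```

A feature overused by only one mother-tongue group would instead point to transfer from that particular language, which is the distinction the passage draws between general non-nativeness and shared problems.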


CHAPTER II
REVIEW OF RELATED LITERATURE
In this chapter, the researcher presents the results of a review of related literature covering corpus-based analysis, children's short stories, verbs, and previous studies.

A. Corpus-Based Analysis in Children's Short Stories
Over the last five decades, work based on corpora has increased. A corpus (plural: corpora) is a collection of texts, written or spoken, that has been systematically gathered. A corpus can also be defined as a broad, principled set of naturally occurring examples of electronically stored language (Bennet, 2010, p. 2). In such studies, a corpus typically refers to a set of authentic machine-readable texts selected to describe or represent a condition or variety of a language (Grigaliuniene, 2013, p. 9). Likewise, Lindquist (2009) links the corpus to electronic storage, describing it as a collection of texts stored on some kind of digital medium, to be used by linguists for research purposes or by lexicographers in the production of dictionaries (Lindquist, 2009, p. 3). Nowadays, the word 'corpus' is most often associated with the term 'electronic corpus' in exactly this sense. McCarthy (2004, p. 1) also described a corpus as a collection of written or spoken texts, typically stored in a computer database. From these definitions we may infer that computers play a crucial role in corpus work: computers and software programs have allowed researchers to capture, store and handle vast quantities of data fairly quickly and cheaply.

Concreteness 25 3.1 Introduction

PDF hosted at the Radboud Repository of the Radboud University Nijmegen. The following full text is a publisher's version. For additional information about this publication, see http://hdl.handle.net/2066/93581. Please be advised that this information was generated on 2021-09-28 and may be subject to change.

Making choices: Modelling the English dative alternation. © 2012 by Daphne Theijssen. Cover photos by Harm Lourenssen. ISBN 978-94-6191-275-6.

Doctoral thesis to obtain the degree of doctor from Radboud University Nijmegen, on the authority of the Rector Magnificus, Prof. mr. S.C.J.J. Kortmann, according to the decision of the Council of Deans, to be defended in public on Monday, 18 June 2012 at 13.30 hours precisely by Daphne Louise Theijssen, born on 25 June 1984 in Uden.
Supervisor (promotor): Prof. dr. L.W.J. Boves
Co-supervisors (copromotoren): Dr. B.J.M. van Halteren, Dr. N.H.J. Oostdijk
Manuscript committee: Prof. Dr. A.P.J. van den Bosch; Prof. Dr. R.H. Baayen (Eberhard Karls Universität Tübingen, Germany & University of Alberta, Edmonton, Canada); Dr. G. Bouma (Rijksuniversiteit Groningen)

Nobody said it was easy / It's such a shame for us to part / Nobody said it was easy / No one ever said it would be this hard / Oh take me back to the start / I was just guessing at numbers and figures / Pulling your puzzles apart / Questions of science; science and progress / Do not speak as loud as my heart
(The Scientist – Coldplay)

Acknowledgements
When I tell people that I have written a dissertation, they often look at me with admiration.

1. Introduction

This is the accepted manuscript of the chapter MacLeod, N. and Wright, D. (2020). Forensic Linguistics. In S. Adolphs and D. Knight (eds), Routledge Handbook of English Language and Digital Humanities. London: Routledge, pp. 360-377.

Chapter 19: Forensic Linguistics

1. INTRODUCTION
One area of applied linguistics in which there have been increasing trends both in (i) utilising technology to assist in the analysis of text and in (ii) scrutinising digital data through the lens of traditional linguistic and discursive analytical methods is that of forensic linguistics. Broadly defined, forensic linguistics is an application of linguistic theory and method to any point at which there is an interface between language and the law. The field is popularly viewed as comprising three main elements: (i) the (written) language of the law, (ii) the language of (spoken) legal processes, and (iii) language analysis as evidence or as an investigative tool. The intersection between digital approaches to language analysis and forensic linguistics discussed in this chapter resides in element (iii), the use of linguistic analysis as evidence or to assist in investigations. Forensic linguists might take instructions from police officers to provide assistance with criminal investigations, or from solicitors for either side preparing a prosecution or defence case in advance of a criminal trial. Alternatively, they may undertake work for parties involved in civil legal disputes. Forensic linguists often appear in court to provide expert testimony as evidence for the side by which they were retained, though it should be kept in mind that the standards required here are much higher than those within investigative enterprises.

8th International Conference on Language Resources and Evaluation 2012

8th International Conference on Language Resources and Evaluation 2012 (LREC-2012)
Istanbul, Turkey, 21-27 May 2012
Volume 1 of 5
ISBN: 978-1-62276-504-1
Printed from e-media with permission by: Curran Associates, Inc., 57 Morehouse Lane, Red Hook, NY 12571. Some format issues inherent in the e-media version may also appear in this print version.
Copyright © (2012) by the Association for Computational Linguistics. All rights reserved. Printed by Curran Associates, Inc. (2012).
For permission requests, please contact the Association for Computational Linguistics at the address below: Association for Computational Linguistics, 209 N. Eighth Street, Stroudsburg, Pennsylvania 18360. Phone: 1-570-476-8006. Fax: 1-570-476-0860.
Additional copies of this publication are available from: Curran Associates, Inc., 57 Morehouse Lane, Red Hook, NY 12571 USA. Phone: 845-758-0400. Fax: 845-758-2634. Web: www.proceedings.com

TABLE OF CONTENTS
Volume 1
PaCo2: A Fully Automated Tool for Gathering Parallel Corpora from the Web ... 1
Iñaki San Vicente, Iker Manterola
Terra: A Collection of Translation Error-Annotated Corpora ... 7
Mark Fishel, Ondrej Bojar, Maja Popovic
A Light Way to Collect Comparable Corpora from the Web ... 15

Papers Index

Papers Index
SESSION O1 - Corpora for Machine Translation
Iñaki San Vicente and Iker Manterola, PaCo2: A Fully Automated tool for gathering Parallel Corpora from the Web ... 1
Mark Fishel, Ondřej Bojar and Maja Popović, Terra: a Collection of Translation Error-Annotated Corpora ... 7
Ahmet Aker, Evangelos Kanoulas and Robert Gaizauskas, A light way to collect comparable corpora from the Web ... 15
Volha Petukhova, Rodrigo Agerri, Mark Fishel, Sergio Penkale, Arantza del Pozo, Mirjam Sepesy Maucec, Andy Way, Panayota Georgakopoulou and Martin Volk, SUMAT: Data Collection and Parallel Corpus Compilation for Machine Translation of Subtitles ... 21
Daniele Pighin, Lluís Màrquez and Lluís Formiga, The FAUST Corpus of Adequacy Assessments for Real-World Machine Translation Output ... 29
SESSION O2 - Infrastructures and Strategies for LRs (1)
Stelios Piperidis, The META-SHARE Language Resources Sharing Infrastructure: Principles, Challenges, Solutions ... 36
Riccardo Del Gratta, Francesca Frontini, Francesco Rubino, Irene Russo and Nicoletta Calzolari, The Language Library: supporting community effort for collective resource production ... 43
Khalid Choukri, Victoria Arranz, Olivier Hamon and Jungyeul Park, Using the Interna-

Distributed Memory Bound Word Counting for Large Corpora

Democratic and Popular Republic of Algeria, Ministry of Higher Education and Scientific Research, Ahmed Draia University - Adrar, Faculty of Science and Technology, Department of Mathematics and Computer Science.
A thesis presented to fulfil the requirements of the Master's Degree in Computer Science, option: Intelligent Systems.
Title: Distributed Memory Bound Word Counting for Large Corpora
Prepared by: Bekraoui Mohamed Lamine & Sennoussi Fayssal Taqiy Eddine
Supervised by: Mr. Mediani Mohammed
Examination committee: President: CHOUGOEUR Djilali; Examiner: OMARI Mohammed; Examiner: BENATIALLAH Djelloul
Academic Year 2017/2018

Abstract: Statistical Natural Language Processing (NLP) has seen tremendous success in recent years, and its applications can be found in a wide range of areas. NLP tasks form the core of very popular services such as Google Translate, the recommendation systems of big commercial companies such as Amazon, and even the voice recognizers of the mobile world. Nowadays, most NLP applications are data-driven: language data is used to estimate statistical models, which are then used to make predictions about new, probably previously unseen, data. In its simplest form, computing any statistical model relies on the fundamental task of counting the small units that make up the data. With the expansion of the Internet and its intrusion into all aspects of human life, textual corpora have become available in very large amounts. This availability is very advantageous performance-wise, as it enlarges coverage and makes the model more robust both to noise and to unseen examples. On the other hand, training systems on large quantities of data raises a new challenge for hardware resources, as the model is very likely not to fit into main memory.
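The abstract motivates counting words when the full count table will not fit into main memory. One common way to bound memory is hash partitioning, sketched here in Python on a single machine. This is an illustrative assumption, not necessarily the method developed in the thesis. Pass 1 routes each token to one of several bucket files using a stable hash, so every occurrence of a word lands in the same bucket. Pass 2 counts one bucket at a time, so only that bucket's vocabulary is in memory. The function names and the bucket count are hypothetical.

```python
import os
import tempfile
import zlib
from collections import Counter
from typing import Iterable, Iterator


def partitioned_word_count(lines: Iterable[str], num_buckets: int,
                           workdir: str) -> Iterator[tuple[str, int]]:
    """Two-pass word count whose peak memory is roughly one bucket's vocabulary."""
    paths = [os.path.join(workdir, f"bucket_{i}.txt") for i in range(num_buckets)]
    # Pass 1: route every token to a bucket file chosen by a stable hash.
    files = [open(p, "w", encoding="utf-8") for p in paths]
    try:
        for line in lines:
            for token in line.split():
                # zlib.crc32 is deterministic across runs, unlike the salted str hash().
                bucket = zlib.crc32(token.encode("utf-8")) % num_buckets
                files[bucket].write(token + "\n")
    finally:
        for f in files:
            f.close()
    # Pass 2: count one bucket at a time. Its totals are final, because
    # no word is ever split across two buckets.
    for path in paths:
        with open(path, encoding="utf-8") as f:
            counts = Counter(line.rstrip("\n") for line in f)
        yield from counts.items()


def count_corpus(lines: Iterable[str], num_buckets: int = 4) -> dict[str, int]:
    """Demo wrapper that runs both passes in a temporary directory.

    It collects everything into one dict, so it only suits small inputs; a real
    memory-bound pipeline would stream the (word, count) pairs onward instead.
    """
    with tempfile.TemporaryDirectory() as tmp:
        return dict(partitioned_word_count(lines, num_buckets, tmp))


if __name__ == "__main__":
    print(count_corpus(["the cat sat", "the dog sat down"], num_buckets=3))
```

The number of buckets is the memory knob: more buckets means fewer distinct words per bucket. In a distributed setting, the same idea corresponds to sending each bucket to a different worker (the shuffle step of a MapReduce job) instead of to a local file.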