Russian Disinformation Attacks on Elections: Lessons from Europe

Total Pages: 16

File Type: pdf, Size: 1020Kb


Recommended publications


Trolls Can Sing and Dance in the Movies

Trolls can sing and dance in the movies. But let’s be clear! Internet trolls are not cute or funny! In Internet slang, a troll is a person who creates bad feelings on the Internet by starting quarrels or upsetting people, or by posting inflammatory, extraneous, or off-topic messages with the intent of provoking readers into an emotional outburst.

01. The Insult Troll
The insult troll is a pure hater, plain and simple. They will often pick on everyone and anyone, calling them names, accusing them of certain things, doing anything they can to get a negative emotional response from them. This type of trolling can be considered a serious form of cyberbullying.

02. The Persistent Debate Troll
This type of troll loves a good argument. They believe they're right, and everyone else is wrong. They write long posts and they're always determined to have the last word, continuing to comment until that other user gives up.

06. The Profanity and All-Caps Troll
This type of troll spews F-bombs and other curse words with his caps lock button on. In many cases, these types of trolls are just bored kids looking for something to do without needing to put too much thought or effort into anything.

07. The One Word Only Troll
There's always that one type of troll who just says "LOL" or "what" or "k" or "yes" or "no." They may not be the worst type of troll online, but when a serious or detailed topic is being discussed, their one-word replies are just a nuisance.

Online Russia, Today

Online Russia, today. How is Russia Today framing the events of the Ukrainian crisis of 2013 and what this framing says about the Russian regime’s legitimation strategies? The case of the Russian-language online platform of RT

Margarita Kurdalanova
24th of June 2016
Graduate School of Social Sciences
Authoritarianism in a Global Age
Adele Del Sordi
Dr. Andrey Demidov
Word count: 14 886

Table of Contents
Abstract
1. Introduction
2. Literature Review
2.1 Legitimacy and legitimation
2.2 Legitimation in authoritarian regimes
2.3 Media and authoritarianism
2.4 Propaganda and information warfare
3. Case study
3.1 The Russian-Ukrainian conflict of 2013

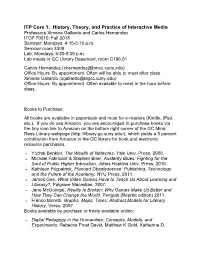

ITP Core 1: History, Theory, and Practice of Interactive Media Professors Ximena Gallardo and Carlos Hernandez ITCP 70010: Fall 2018 Seminar: Mondays, 4:15-6:15 P.M

ITP Core 1: History, Theory, and Practice of Interactive Media
Professors Ximena Gallardo and Carlos Hernandez
ITCP 70010: Fall 2018
Seminar: Mondays, 4:15-6:15 p.m. Seminar room 3309
Lab: Mondays, 6:30-8:30 p.m. Lab meets in GC Library Basement, room C196.01

Carlos Hernandez ([email protected]) Office Hours: By appointment. Often will be able to meet after class.
Ximena Gallardo ([email protected]) Office Hours: By appointment. Often available to meet in the hour before class.

Books to Purchase: All books are available in paperback and most for e-readers (Kindle, iPad, etc.). If you do use Amazon, you are encouraged to purchase books via the tiny icon link to Amazon on the bottom right corner of the GC Mina Rees Library webpage (http://library.gc.cuny.edu/), which yields a 5 percent contribution from Amazon to the GC library for book and electronic resource purchases.
• Yochai Benkler, The Wealth of Networks, Yale Univ. Press, 2006.
• Michael Fabricant & Stephen Brier, Austerity Blues: Fighting for the Soul of Public Higher Education, Johns Hopkins Univ. Press, 2016.
• Kathleen Fitzpatrick, Planned Obsolescence: Publishing, Technology and the Future of the Academy, NYU Press, 2011.
• James Gee, What Video Games Have to Teach Us About Learning and Literacy?, Palgrave Macmillan, 2007.
• Jane McGonigal, Reality Is Broken: Why Games Make Us Better and How They Can Change the World, Penguin (Reprint edition), 2011.
• Franco Moretti, Graphs, Maps, Trees: Abstract Models for Literary History, Verso, 2007.

Books available by purchase or freely available online:
• Digital Pedagogy in the Humanities: Concepts, Models, and Experiments, Rebecca Frost Davis, Matthew K Gold, Katherine D.

Online Harassment: a Legislative Solution

ONLINE HARASSMENT: A LEGISLATIVE SOLUTION
EMMA MARSHAK*

TABLE OF CONTENTS
I. INTRODUCTION
II. WHY IS ONLINE HARASSMENT A PROBLEM?
a. The Scope of the Problem
b. Economic Impact
i. Lost Business Opportunities
ii. Swatting
iii. Doxxing
III. CURRENT LAW
a. Divergent State Law
b. Elements of the Law
IV. LAW ENFORCEMENT AND INVESTIGATIVE PROBLEMS
a. Police Training
b. Investigative Resources
c. Prosecutorial Jurisdiction
V. SOLUTION
a. Proposed Legislation
b. National Evidence Laboratory
c. Training Materials
VI. CONCLUSION
VII. APPENDIX

I. INTRODUCTION
A journalist publishes an article; rape threats follow in the comments.1 An art curator has a conversation with a visitor to her gallery;

EU EAST STRATCOM Task Force

EU EAST STRATCOM Task Force
November 2015

The Eastern Partnership Review: “EU-related communication in Eastern Partnership countries”
• Difficulties with adjusting to the new realm of communication
The time for the “SOS-communication” or “Send-Out-Stuff” method of distributing information is now in the past, and communicators have to be innovative and creative in finding new, effective methods for disseminating their information.
• EU information is often too technical, full of jargon, lacks human language
Communicators are often tempted to take a shortcut and copy these texts directly into their communication materials without translating them into simple language that people can understand.
• EU-related communication work revolves around official visits and events
Very often EU-related communication work and the related coverage are driven by the activities of politicians, EU dignitaries and official events, which produces information that does not appeal to most of the target audiences. Not much attention is paid to the actual content: what this meeting signifies or what this initiative means for local people.

EU RESPONSE: European Council meeting (19 & 20 March 2015) – Conclusions
Image: © European Union

Objectives of the Task Force
• Effective communication and promotion of EU policies towards the Eastern Neighbourhood
• Strengthening of the overall media environment in the Eastern Neighbourhood and in EU Member States
• Improved EU capacity to forecast, address and respond to disinformation activities by external actors
Images: FLICKR, Creative

Minsk II a Fragile Ceasefire

Briefing, 16 July 2015
Ukraine: Follow-up of Minsk II
A fragile ceasefire

SUMMARY
Four months after leaders from France, Germany, Ukraine and Russia reached a 13-point 'Package of measures for the implementation of the Minsk agreements' ('Minsk II') on 12 February 2015, the ceasefire is crumbling. The pressure on Kyiv to contribute to a de-escalation and comply with Minsk II continues to grow. While Moscow still denies accusations that there are Russian soldiers in eastern Ukraine, Russian President Vladimir Putin publicly admitted in March 2015 to having invaded Crimea. There is mounting evidence that Moscow continues to play an active military role in eastern Ukraine. The multidimensional conflict is eroding the country's stability on all fronts. While the situation on both the military and the economic front is acute, the country is under pressure to conduct wide-reaching reforms to meet its international obligations. In addition, Russia is challenging Ukraine's identity as a sovereign nation state with a wide range of disinformation tools. Against this backdrop, the international community and the EU are under increasing pressure to react. In the following pages, the current status of the Minsk II agreement is assessed and other recent key developments in Ukraine and beyond examined. This briefing brings up to date that of 16 March 2015, 'Ukraine after Minsk II: the next level – Hybrid responses to hybrid threats?'.

In this briefing:
• Minsk II – still standing on the ground?
• Security-related implications of the crisis
• Russian disinformation

CEDC STRATCOM Expert Workshop “Combating Fake News and Disinformation Campaigns”

CEDC STRATCOM EXPERT WORKSHOP “COMBATING FAKE NEWS AND DISINFORMATION CAMPAIGNS”
Zagreb, VTC, 8 June 2021

Virtual expert workshop organized by the Croatian Presidency of the CEDC
Tuesday, 08 June 2021, 9:00 AM – 2:30 PM (UTC+1)

Format/Participants:
High-level representatives of CEDC member countries
Representatives of EEAS & NATO-accredited international military organizations
International & national experts

Besides its apparent advantages and opportunities, the digital communication era also opens a window of opportunity for different, not so benevolent actors to conduct malicious activities, resulting in an erosion of democratic values, polarization of politics, widespread confusion on important matters and general distrust in our institutions. Both state and non-state actors have exploited the COVID-19 pandemic to spread disinformation and propaganda, seeking to destabilise national and regional security. What is new about this phenomenon is that false and misleading information can now be disseminated over the Internet with much greater reach and at relatively low cost. Disinformation is a significant problem throughout our region and an increasingly important part of the way in which both domestic and foreign actors pursue their political objectives. Faced with the COVID-19 health crisis and uncertainty about the future trajectory of the pandemic and its impact on society as a whole, states, regional organisations and other stakeholders are under enormous

El Destacado

ADESyD “COMPARTIENDO (VISIONES DE) SEGURIDAD” (“Sharing (Visions of) Security”), Vol. 5, June 2019
Fifth ADESyD Congress, “Compartiendo (visiones de) Seguridad”
Co-editors: Dr. María Angustias Caracuel Raya, Dr. José Díaz Toribio, Dr. Elvira Sánchez Mateos, Dr. Alfredo Crespo Alcázar, Dr. María Teresa Sánchez González
Madrid, 27 November 2018

ASOCIACIÓN DE DIPLOMADOS ESPAÑOLES EN SEGURIDAD Y DEFENSA (ADESyD)
http://www.adesyd.es
Federación de Gremios de Editores de España
ISBN 978-84-09-11703-1
PDF publication
© ADESyD, 2019
Reproduction is authorized; citation of the source is appreciated.
Social media: @ADESyD2011, @SWIIS2011, Linkedin and Facebook
Edition date: 4 June 2019

Responsibility for the opinions, comments, analyses, and statements contained in this work lies exclusively with the authors who sign each of the papers. ADESyD does not adopt them as its own.

COMPARTIENDO (VISIONES DE) SEGURIDAD
This work, published online and freely accessible through the website www.adesyd.es, offers a coherent collection of papers prepared in line with the most current concepts in security and defence studies. It introduces the reader to a complex context, covering the most relevant and most debated issues in the areas of national security, international security, public security, and private security.

ASD-Covert-Foreign-Money.Pdf

Covert Foreign Money
Financial loopholes exploited by authoritarians to fund political interference in democracies
AUGUST 2020
AUTHORS: Josh Rudolph and Thomas Morley

© 2020 The Alliance for Securing Democracy
Please direct inquiries to The Alliance for Securing Democracy at The German Marshall Fund of the United States, 1700 18th Street, NW, Washington, DC 20009. T 1 202 683 2650 E [email protected]
This publication can be downloaded for free at https://securingdemocracy.gmfus.org/covert-foreign-money/.
The views expressed in GMF publications and commentary are the views of the authors alone.
Cover and map design: Kenny Nguyen
Formatting design: Rachael Worthington

Alliance for Securing Democracy
The Alliance for Securing Democracy (ASD), a bipartisan initiative housed at the German Marshall Fund of the United States, develops comprehensive strategies to deter, defend against, and raise the costs on authoritarian efforts to undermine and interfere in democratic institutions. ASD brings together experts on disinformation, malign finance, emerging technologies, elections integrity, economic coercion, and cybersecurity, as well as regional experts, to collaborate across traditional stovepipes and develop cross-cutting frameworks.

Authors
Josh Rudolph, Fellow for Malign Finance
Thomas Morley, Research Assistant

Contents
Executive Summary
Introduction and Methodology

National Strategy for Homeland Security 2007

National Strategy for Homeland Security
Homeland Security Council, October 2007

My fellow Americans,

More than 6 years after the attacks of September 11, 2001, we remain at war with adversaries who are committed to destroying our people, our freedom, and our way of life. In the midst of this conflict, our Nation also has endured one of the worst natural disasters in our history, Hurricane Katrina. As we face the dual challenges of preventing terrorist attacks in the Homeland and strengthening our Nation’s preparedness for both natural and man-made disasters, our most solemn duty is to protect the American people. The National Strategy for Homeland Security serves as our guide to leverage America’s talents and resources to meet this obligation.

Despite grave challenges, we also have seen great accomplishments. Working with our partners and allies, we have broken up terrorist cells, disrupted attacks, and saved American lives. Although our enemies have not been idle, they have not succeeded in launching another attack on our soil in over 6 years due to the bravery and diligence of many.

Just as our vision of homeland security has evolved as we have made progress in the War on Terror, we also have learned from the tragedy of Hurricane Katrina. We witnessed countless acts of courage and kindness in the aftermath of that storm, but I, like most Americans, was not satisfied with the Federal response. We have applied the lessons of Katrina to this Strategy to make sure that America is safer, stronger, and better prepared.

Address Munging: the Practice of Disguising, Or Munging, an E-Mail Address to Prevent It Being Automatically Collected and Used

Address Munging: the practice of disguising, or munging, an e-mail address to prevent it being automatically collected and used as a target by people and organizations that send unsolicited bulk e-mail.

Adware: or advertising-supported software, is any software package which automatically plays, displays, or downloads advertising material to a computer after the software is installed on it or while the application is being used. Some types of adware are also spyware and can be classified as privacy-invasive software. Adware is software designed to force pre-chosen ads to display on your system. Some adware is designed to be malicious and will pop up ads with such speed and frequency that they seem to be taking over everything, slowing down your system and tying up all of your system resources. When adware is coupled with spyware, it can be a frustrating ride, to say the least.

Backdoor: in a computer system (or cryptosystem or algorithm), a method of bypassing normal authentication, securing remote access to a computer, obtaining access to plaintext, and so on, while attempting to remain undetected. The backdoor may take the form of an installed program (e.g., Back Orifice), or could be a modification to an existing program or hardware device. A back door is a point of entry that circumvents normal security and can be used by a cracker to access a network or computer system. Usually back doors are created by system developers as shortcuts to speed access through security during the development stage and then are overlooked and never properly removed during final implementation.

Disinformation in the Cyber Domain: Detection, Impact, and Counter-Strategies1

24th International Command and Control Research and Technology Symposium
‘Managing Cyber Risk to Mission’

Disinformation in the Cyber Domain: Detection, Impact, and Counter-Strategies1

Ritu Gill, PhD, Defence R&D Canada, [email protected]
Judith van de Kuijt, MA, Netherlands Organization-Applied Scientific Research, [email protected]
Magnus Rosell, PhD, Swedish Defence Research Agency, [email protected]
Ronnie Johannson, PhD, Swedish Defence Research Agency, [email protected]

Abstract
The authors examined disinformation via social media and its impact on target audiences by conducting interviews with Canadian Armed Forces and Royal Netherlands Army subject matter experts. Given the pervasiveness and effectiveness of disinformation employed by adversaries, particularly during major national events such as elections, the EU-Ukraine Association Agreement, and the Malaysian Airlines Flight 17, this study assessed several aspects of disinformation including i) how target audiences are vulnerable to disinformation, ii) which activities are affected by disinformation, iii) what are the indicators of disinformation, and iv) how to foster resilience to disinformation in the military and society. Qualitative analyses of results indicated that in order to effectively counter disinformation the focus needs to be on identifying the military’s core strategic narrative and reinforcing the larger narrative in all communications rather than continuously allocating valuable resources to actively refute all disinformation. Tactical messages that are disseminated should be focused on supporting the larger strategic narrative. In order to foster resilience to disinformation for target audiences, inoculation is key; inoculation can be attained through education as part of pre-deployment training for military, as well as public service announcements via traditional formats and through social media for the public, particularly during critical events such as national elections.