Scalable Web-Embedded Volume Rendering


Load more

Recommended publications

-

Downtown Design Guidelines Is One Are an Adjunct to the City Development Code

DOWNTOWN ONTARIO DESIGN GUIDELINES A FOR UNIQUE ONTARIO'S HISTORIC EXPERIENCE MODEL COLONY: MULTI-CULTURAL URBAN A GUIDE FOR THE FUTURE THAT HONORS OUR PAST T OP E D D A 8 1 9 8 9 A 1 U G U S T CCC Contents x DOWNTOWN ONTARIO DESIGN GUIDELINES FOR ONTARIO'S HISTORIC MODEL COLONY: A UNIQUE MULTI-CULTURAL URBAN EXPERIENCE PRODUCED BY THE ARROYO GROUP, PLANNERS, ARCHITECTS & ASSOCIATED DISCIPLINES WITH PATRICK B. QUIGLEY & ASSOCIATES, LIGHTING CONSULTANT ADOPTED BY ONTARIO CITY COUNCIL ON AUGUST 18, 1998 D O W N T O W N O N T A R I O D E S I G N G U I D E L I N E S i AcknowledgementsAAA City of Ontario City Council Downtown Revitalization Partnership Consultant Team Gus Skropos, Mayor City Council Representatives: The Arroyo Group Alan Wapner, Mayor Pro Tem Alan Wapner, Mayor Pro Tem Larry Morrison, AIA, AICP, Principal Gary Ovitt, Council Member Gary Ovitt, Council Member Simran Malhotra, AIA, Associate Jim Bowman, Council Member Herb Barnes, Graphic Designer Jerry DuBois, Council Member Debra Dorst-Porada, Chairperson Rick Caughman, Vice Chairman Patrick B. Quigley & Associates City of Ontario Planning Commission Sue Luce, Director, Secretary/Director, Ontario Patrick Quigley, Principal Public Library James Maletic, Chairman Steve Alvarado, Vice President, Foothill Debra Dorst Porada, Vice Chairman Independent Bank Richard Gage, Commissioner Yvonne Borrowdale, Resident Alexandro Espinoza, Commissioner Kathleen Brugger, Chaffey College Bob Gregorek II, Commissioner Angie Salas Dark, Ontario Historical Society/ DeAna Hernandez, Commissioner Friends of Olde Town Ontario Gabe DeRocili, Commissioner Mike Fortunato, City Commercial Management, Inc. -

Publication DILA

o Quarante-quatrième année. – N 68 B ISSN 0298-2978 Mercredi 7 et jeudi 8 avril 2010 BODACCBULLETIN OFFICIEL DES ANNONCES CIVILES ET COMMERCIALES ANNEXÉ AU JOURNAL OFFICIEL DE LA RÉPUBLIQUE FRANÇAISE DIRECTION DE L’INFORMATION Standard......................................... 01-40-58-75-00 LÉGALE ET ADMINISTRATIVE Annonces....................................... 01-40-58-77-56 Accueil commercial....................... 01-40-15-70-10 26, rue Desaix, 75727 PARIS CEDEX 15 Abonnements................................. 01-40-15-67-77 www.dila.premier-ministre.gouv.fr (8h30à 12h30) www.bodacc.fr Télécopie........................................ 01-40-15-72-75 BODACC “B” Modifications diverses - Radiations Avis aux lecteurs Les autres catégories d’insertions sont publiées dans deux autres éditions séparées selon la répartition suivante Ventes et cessions .......................................... Créations d’établissements ............................ @ Procédures collectives .................................... ! BODACC “A” Procédures de rétablissement personnel .... Avis relatifs aux successions ......................... * Avis de dépôt des comptes des sociétés .... BODACC “C” Banque de données BODACC servie par les sociétés : Altares-D&B, EDD, Extelia, Questel, Tessi Informatique, Jurismedia, Pouey International, Scores et Décisions, Les Echos, Creditsafe, Coface services, Cartegie, La Base Marketing, Infolegale, France Telecom Orange, Telino et Maxisoft. Conformément à l’article 4 de l’arrêté du 17 mai 1984 relatif à la constitution et à -

Carbon Dioxide Reduction Through Urban Forestry: Guidelines for Professional and Volunteer Tree Planters

United States Carbon Dioxide Reduction Department of Agriculture Through Urban Forestry: Forest Service Guidelines for Professional and Volunteer Tree Planters Pacific Southwest Research Station General Technical Report E. Gregory McPherson James R. Simpson PSW-GTR-171 Publisher: Pacific Southwest Research Station Albany, California Forest Service Mailing address: U.S. Department of Agriculture PO Box 245, Berkeley CA 94701-0245 510 559-6300 http://www.psw.fs.fed.us/ techpub.html January 1999 Abstract McPherson, E. Gregory; Simpson, James R. 1999. Carbon dioxide reduction through urban forestry: Guidelines for professional and volunteer tree planters. Gen. Tech. Rep. PSW- GTR-171. Albany, CA: Pacific Southwest Research Station, Forest Service, U.S. Depart- ment of Agriculture; 237 p. Carbon dioxide reduction through urban forestry—Guidelines for professional and volunteer tree planters has been developed by the Pacific Southwest Research Station’s Western Center for Urban Forest Research and Education as a tool for utilities, urban foresters and arborists, municipalities, consultants, non-profit organizations and others to determine the effects of urban forests on atmospheric carbon dioxide (CO2) reduction. The calculation of CO2 reduction that can be made with the use of these Guidelines enables decision makers to incorporate urban forestry into their efforts to protect our global climate. With these Guidelines, they can: report current and future CO2 reductions through a standardized accounting process; evaluate the cost-effectiveness of urban forestry programs with CO2 reduction measures; compare benefits and costs of alternative urban forestry program designs; and produce educational materials that assess potential CO2 reduction benefits and provide information on tree selection, placement, planting, and stewardship. -

Rosemount Mciver Park Guidelines (PDF)

Rosemount-McIver Park Guidelines Sanford, North Carolina Adopted 4-5-2016 - Revised June 27, 2016 Revised 5-23-2016 page 32 item g Acknowledgments: This document was created by a citizen’s appointed committee by the Chairman of the Historic Preservation Commission. The document was submitted to the Historic Preservation Commission to review and revise as they felt appropriate. The Historic Preservation Commission has revised these guidelines from the original that were submitted to the Historic Preservation Staff on February 7, 2011. This document has been years in the making and during that time frame the citizen committee changed members numerous times. The City of Sanford thanks all citizens who participated on the Revision of the Rosemount McIver Park Historic Guidelines, as well as the Historic Preservation Commission. City of Sanford web site: http://www.sanfordnc.net/index.htm Historic Preservation web site: http://www.sanfordnc.net/historic_preservation/hpc.htm Contact information: Historic Preservation 226 Carthage Street Sanford, NC 27330 919-777-1406 [email protected] 2 3 4 I. INTRODUCTION 5 I. INTRODUCTION This document is governed by and interpreted by he UDO Uniformed Development Ordinance which can be referenced at the Planning Office or on line at www.sanfordnc.net. A. Statement of Philosophy The North Carolina State Legislature has stated in N.C.G.S. 160A-400.1 that “the historical heritage of our State is one of our most valued and important assets. The conservation and preservation of historic districts and landmarks stabilize and increase property values in their areas and strengthen the overall economy of the State.” For these reasons, the State authorized its cities and counties: 1. -

Openaircn-5G Project Current Activities

OpenAirCN-5G Project Current Activities Olivier CHOISY, b<>com Michel TREFCON, b<>com Luhan WANG, BUPT Tien-Thinh NGUYEN, EURECOM Outline . Introduction to OAI CN 5G project . Introduction to 5G Core Network . Current activities oMeetings, discussion between partners oCode generation for interfaces Demo oA prototype Implementation Demo . Roadmap Introduction . Open Air Interface Software Alliance o Core Network Software • Rel10/Rel14 Implementation • With ongoing development/maintenance . Initiative to start a 5G Core Implementation o Objectives : • Initiative to provide an Open source implementation of 5G Core Network as specified by 3GPP • Build a community to perform this implementation . How ? o New Software implementation (5G Skeleton then NFs) o Partners : Eurecom, b<>com, BUPT, Blackent, ng5T Introduction . Preliminary work (Eurecom/BUPT) Q3-2017 o HTTP/2, 5G SBA bus, … initial prototype . Initial workshop to start officially : 04/03/2018 (b<>com Paris) o Decision to start with a complete skeleton based on SBI/SBA 3GPP specifications (TS23.5xx,29.5xx) . 4 synchronization meetings with partners . Trello project follow-up . Progressive implication from partners expected . Q3/Q4 : initial SBA Interfaces implementation – on going Outline . Introduction to OAI CN 5G project . Introduction to 5G Core Network . Current activities oMeetings, discussion between partners oCode generation for interfaces Demo oPrototype Implementation Demo . Roadmap 5G – Service Trends and Usage Scenarios Service-Oriented 5G Core Network . Next Generation Network: To meet the needs of the range of services envisioned for 5G, with diverse performance requirements, across a wide variety of industries: Flexible, Scalable, and Customizable . 
Service Based Architecture o support a modularized service, flexible and adaptable, with fast deployment cycles and updates for launching services on demand in the network o a set of NFs providing services to other authorized NFs to access their services 5G System Architecture . -

Wal-Mart Draft EIR Part I

CITY OF SUISUN CITY COMMUNITY DEVELOPMENT DEPARTMENT PUBLIC NOTICE - NOTICE OF AVAILABILITY OF THE WAL-MART WALTERS ROAD PROJECT DRAFT ENVIRONMENTAL IMPACT REPORT (SCH# 2006072026) September 17, 2007 TO: RESPONSIBLE AGENCIES TRUSTEE AGENCIES OTHER INTERESTED PARTIES SUBJECT: NOTICE OF AVAILABILITY OF THE WAL-MART WALTERS ROAD PROJECT DRAFT ENVIRONMENTAL IMPACT REPORT (SCH# 2006072026 ) OVERVIEW: The City of Suisun City has prepared a Draft Environmental Impact Report (DEIR) to consider the potential environmental effects of the proposed Wal-Mart Walters Road Project (generally located on the northwest corner of Walters Road and State Route 12, Suisun City, CA). The proposed project requires the approval of a Site Plan and Architectural Application No. 06-08, Parcel Map 06-02, Sign Application No. 06-04, and Encroachment Permits to Local Streets. The project consists of approximately 230,000 square feet of commercial activities on approximately 20.1 acres. ENVIRONMENTAL IMPACTS: The DEIR found significant impacts related to Aesthetics, Light, and Glare, Air Quality, Biological Resources, Cultural Resources, Geology, Soils, and Seismicity, Hazards and Hazardous Materials, Hydrology and Water Quality, Land Use, Noise, Public Services and Utilities, Transportation, and Urban Decay. Many of these impacts were reduced to a less-than-significant level through the implementation of mitigation measures. However, even with implementation of applicable mitigation measures, the DEIR found that the project would still result in significant and unavoidable impacts to Aesthetics (Visual Character) Air Quality (Long-Term Operational Emissions, Cumulative Impacts, Greenhouse Gas Emissions), Noise (Construction Noise, Stationary Noise Sources, Operational Noise – Vehicular Sources), and Transportation (Intersections Operations, Long-Term Intersection Operations, Queuing). -

PRINT:OAS:Remain API Studio - Remain Software

5/18/2020 PRINT:OAS:Remain API Studio - Remain Software PRINT:OAS:Remain API Studio From Remain Software Welcome to the index of the Remain API Studio. OpenAPI Specification (formerly Swagger Specification) is an API description format for REST APIs. An OpenAPI file allows you to describe your entire API. This editor helps you easily create and edit your OpenAPI file. Contents 1 Import OpenAPI 2 Export OpenAPI 3 Schemas 3.1 Add New Schema 3.2 Update Schema 3.2.1 Update Schema Name/Description 3.2.2 Update Schema Properties/Attributes 3.3 Delete Schema 3.4 Import Schema 3.5 Extract Schema From JSON Sample/Payload 3.6 Composite Schema 3.6.1 Add Sub-Schema 3.6.2 Change Sub-Schema (composite) Keyword 3.7 Schema Properties 3.8 Additional Properties 4 Paths 4.1 Add Path 4.2 Edit Path 4.3 Delete Path 4.4 Duplicate Path 4.5 Copy|Cut Operation 4.6 Parameters 4.7 Add global parameter 4.8 Add a path/operation parameter 4.9 Edit/Delete parameter 5 Operations 5.1 Add Operation 5.2 Update Operation 5.3 Delete Operation 6 Request Bodies 6.1 Add A Global Request Body 6.2 Add An Operation Request Body 6.3 Add Request Body Content Type 6.4 Delete Request Body Content Type 6.5 Add Schema to Request Body Content Type 6.6 Update Schema to Request Body Content Type 6.7 Delete Request Body 6.8 Update Request Body 7 Responses 7.1 Add new Response 7.2 Delete a Response 7.3 Update Response 7.4 Add new Response Content Type 7.5 Update/Delete Response Content Type 7.6 Update Response Content Type Schema 8 Tags 8.1 Add Global Tag 8.2 Add Operation Tag 8.3 Update Tag -

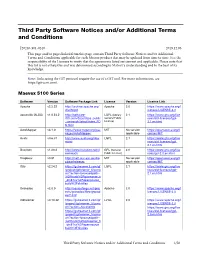

Third Party Software Notices And/Or Additional Terms and Conditions

Third Party Software Notices and/or Additional Terms and Conditions F20240-501-0240 2019.12.06 This page and/or pages linked from this page contain Third Party Software Notices and/or Additional Terms and Conditions applicable for each Matrox product that may be updated from time to time. It is the responsibility of the Licensee to verify that the agreements listed are current and applicable. Please note that this list is not exhaustive and was determined according to Matrox’s understanding and to the best of its knowledge. Note: Links using the GIT protocol require the use of a GIT tool. For more information, see https://git-scm.com/. Maevex 5100 Series Software Version Software Package Link License Version License Link Apache v2.2.22 http://archive.apache.org/ Apache 2.0 https://www.apache.org/l dist/httpd icenses/LICENSE-2.0 asoundlib (ALSA) v1.0.24.2 http://software- LGPL (Library 2.1 https://www.gnu.org/lice dl.ti.com/dsps/dsps_public General Public nses/old-licenses/lgpl- _sw/ezsdk/latest/index_FD License) 2.1.en.html S.html AutoMapper v3.1.0 https://www.nuget.org/pac MIT No version https://opensource.org/li kages/AutoMapper/ applicable censes/MIT Avahi v0.6.31 http://www.avahi.org/dow LGPL 2.1 https://www.gnu.org/lice nload nses/old-licenses/lgpl- 2.1.en.html Busybox v1.20.2 http://www.busybox.net/d GPL (General 2.0 https://www.gnu.org/lice ownloads Public License) nses/gpl-2.0.en.html Dropbear v0.51 http://matt.ucc.asn.au/dro MIT No version https://opensource.org/li pbear/releases applicable censes/MIT Glib v2.24.2 https://gstreamer.ti.com/gf -

Bicycle & Pedestrian Master Plan

BICYCLE & PEDESTRIAN MASTER PLAN Prepared by Buckeye Bicycle and Pedestrian Master Plan Prepared for: City of Buckeye Engineering Department 530 E. Monroe Avenue Buckeye, AZ 85326 Submitted by: Matrix Design Group 2020 North Central Avenue, Suite 1140 Phoenix, AZ 85004 Harrington Planning + Design 1921 S. Alma School Road, Suite 204 Mesa, AZ 85210 United Civil Group 2803 North 7th Avenue Phoenix, AZ 85007 September 2019 Page left intentionally blank. Table of Contents Chapter 1 Plan Development ................................................................................................... 1-1 Introduction ...................................................................................................................................................... 1-1 Plan Overview .................................................................................................................................................. 1-3 Planning Process .............................................................................................................................................. 1-3 Vision and Goals .............................................................................................................................................. 1-4 Project Management and Public Engagement ......................................................................................... 1-5 Chapter 2 Facts, Trends, and Benefits ...................................................................................... 2-1 Benefits of Bicycle and Pedestrian -

Spokane Park Board UFTC Meeting Minutes 3-31-15

Tree Committee Meeting of the City of Spokane Park Board Tuesday, March 31, 2015, 4:15 p.m. – 5:45 p.m. Willow Room, Woodland Center John A. Finch Arboretum Angel Spell – Urban Forester Committee Members: Park Board: Guest(s): X Pendergraft, Lauren – Chairperson Carrie Anderson A Potratz, Preston Nancy MacKerrow X Gifford, Guy Parks Staff: X Davis, Garth Angel Spell A Cash, Kevin Summary • Updates were given on six grants currently in-progress or recently completed. • Staff report was presented and discussed including year-to-date performance and upcoming events. • The Citizen Advisory Committee Report was delivered and discussed; items included early appearance of the Ips bark beetle and a Ponderosa pine tree replacement policy. • Angel presented a Research & Data Report which included metrics on the trees of Coeur d’Alene Park and two journal articles related to root systems in the urban environment. • Urban Forestry Financial Report was unavailable. MINUTES The meeting was called to order at 4:20 p.m. by Chairperson, Lauren Pendergraft. Introductions were made. Action Items: None Discussion Items: 1. Grants Update – Angel Spell Angel reported on the current status of six various grants, of which two are complete. 2. Staff Report – Angel Spell The progress-to-date of work done by Staff and upcoming events was presented and discussed. Also, the Utility Bill Donation Program has now been implemented online and funds have already been received. Angel expressed her appreciation for the success of the Arboretum Educational Series thus far this season. She also informed attendees of upcoming events. Inquiry concerning actions in response to past vandalism of neighborhood trees was discussed and the options provided by Spokane Municipal Code. -

4Th YEAR PROJECT EXPO 2019

DEPARTMENT OF COMPUTER SCIENCE th 4 YEAR PROJECT EXPO 2019 th Architecture Factory – May 8 – 17:00 to 19:00 1 Student Name: Andrew Kenneally Supervisor: Gerard MacSweeney Project Title: Operating System for Irish Primary and Secondary School Students Research Question: Is there an operating system which meets the needs of our public education system? Project Abstract: This Operating System provides in-depth logging, comprehensive parental controls, firewall rules to block illicit and adult content, close to bleeding edge software to protect from modern vulnerabilities, easy access to educational resources, an easy to use child friendly interface and an adult administrator login which allows for thorough monitoring and tweaking of each individual system. I plan to research the software, the market, the security and the various methods of distribution and present it as a research report. I will then develop a virtual machine to analyse the real world usage and uses of such a system and complete the report with my findings. The workstations will gather and send all the information required across a LAN /WAN to a physical machine accessed by a domain manager. This physical machine will not necessarily need to run the same OS as the workstations, but will be able to access the logs, diagnostics or remote terminal of any given workstation or classroom. Any data collected can then be further used to inform on a course of action regarding the OS or the hardware using it. For example; This data could be used to work on possible improvements and to examine any areas of inefficiency, malfunction or exploits. -

Structure, Function and Value of Street Trees in California, USA. Urban Forestry

Urban Forestry & Urban Greening 17 (2016) 104–115 Contents lists available at ScienceDirect Urban Forestry & Urban Greening j ournal homepage: www.elsevier.com/locate/ufug Structure, function and value of street trees in California, USA a,∗ b c E. Gregory McPherson , Natalie van Doorn , John de Goede a USDA Forest Service, Pacific Southwest Research Station, 1731 Research Park Dr., Davis, CA 95618, USA b USDA Forest Service, Pacific Southwest Research Station, 800 Buchanan St. Albany, CA 94710, USA c University of California Davis, Information Center for the Environment, Davis, CA 95616, USA a r t i c l e i n f o a b s t r a c t Article history: This study compiled recent inventory data from 929,823 street trees in 50 cities to determine trends in Received 9 December 2015 tree number and density, identify priority investments and create baseline data against which the effi- Received in revised form 22 March 2016 cacy of future practices can be evaluated. The number of street trees increased from 5.9 million in 1988 Accepted 30 March 2016 to 9.1 million in 2014, about one for every four residents. Street tree density declined from 65.6 to 46.6 Available online 16 April 2016 trees per km, nearly a 30% drop. City streets are at 36.3% of full stocking. State-wide, only London plane- × tree (Platanus hispanica) comprises over 10% of the total, suggesting good state-wide species diversity. Keywords: However, at the city scale, 39 communities were overly reliant on a single species. The state’s street trees Community forest remove 567,748 t CO2 (92,253 t se) annually, equivalent to taking 120,000 cars off the road.