The Norwegian State Railway System GTL (1976) Tor Olav Steine

Total Pages: 16

File Type: pdf, Size: 1020 KB

Recommended publications

Real-Time Operating Systems, Marco Cesati, Lecture R13: Real-Time Operating Systems II, Lecture Outline

Real-Time Operating Systems, Marco Cesati. Lecture R13: Real-Time Operating Systems II. 15 January 2013. Marco Cesati, Dipartimento di Ingegneria Civile e Ingegneria Informatica, Università degli Studi di Roma Tor Vergata. Embedded and real-time systems (SERT'13). Lecture outline: Common characteristics, VxWorks, LynxOS, QNX, eCos, Windows, Linux as an RTOS. What is this lecture about? In this lecture we briefly describe some of the most widespread real-time operating systems: 1 Common characteristics of RTOSes 2 VxWorks 3 LynxOS 4 QNX Neutrino 5 eCos 6 Windows Embedded CE 7 Linux as an RTOS. Common characteristics of the main RTOSes. Standards compliance: the APIs are generally proprietary, but RTOSes also offer compatibility (compliancy) or conformance (conformancy) with the Real-Time POSIX standard. Modularity and scalability: the kernel has a small size (footprint) and its features are configurable. Code size: often based on a microkernel. Speed and efficiency: low overhead for context switches, interrupt latency, and synchronization primitives. Non-interruptible code sections: generally very short and of predictable duration. "Separate" interrupt handling: short and predictable interrupt handler, long ISR

Principles of Distributed Embedded Systems (Embedded Systems - ECE), I M.Tech, I Semester (Autonomous-R18)

Presentation on Principles of Distributed Embedded Systems (Embedded Systems - ECE), I M.Tech, I Semester (Autonomous-R18). Prepared by Dr. S. Vinoth, Associate Professor. Unit I: Real-Time Environment. Topics: real-time computer system requirements; classification of real-time systems; simplicity; global time; internal and external clock synchronization; real-time model; real-time communication; temporal relations; dependability; power and energy awareness; event-triggered, rate-constrained, and time-triggered communication. What is an embedded system? What is a real-time system? A real-time system is any information processing system which has to respond to externally generated input stimuli within a finite and specified period: the correctness depends not only on the logical result but also on the time at which it was delivered, and failure to respond is as bad as the wrong response. The computer is a component in a larger engineering system, hence an embedded computer system. 99% of all processors are for the embedded systems market. Terminology: • Hard real-time: systems where it is absolutely imperative that responses occur within the required deadline, e.g. flight control systems. • Soft real-time: systems where deadlines are important but which will still function correctly if deadlines are occasionally missed, e.g. data acquisition systems. • Real real-time: systems which are hard real-time and in which the response times are very short, e.g. missile guidance systems. • Firm real-time: systems which are soft real-time but in which there is no benefit from late delivery of service. A single system may have hard, soft, and real real-time subsystems. In reality many systems will have a cost function associated with missing each deadline.

SINTRAN III NODAL Systems

The SINTRAN III NODAL System, Oslo University Version. T.B. Skaali, Institute of Physics, University of Oslo, POB 1048, Blindern, Oslo 3, Norway. Report 80-17, October 1980. Contents: 1. Introduction; 2. Basic Features of NODAL; 3. The NODILER Preinterpreter; 4. Implementation under SINTRAN III (4.1 Background NODAL; 4.2 Real Time NODAL Programs; 4.2.1 Interactive RT NODAL Programs; 4.2.2 File-driven RT NODAL Programs; 4.3 Oslo University Revisions to the NODAL System); 5. NODAL-SINTRAN III Functions (5.1 RT Program Control; 5.2 "Escape" Control Functions; 5.3 General RT Functions; 5.4 CAMAC Functions; 5.5 Magnetic Tape and Floppy Disc Handling; 5.6 Input/Output and I/O Buffer Functions; 5.7 Functions for Fast Input/Output of Integer Arrays; 5.8 SINTRAN III Monitor Calls - NODAL Functions); 6. Functions for Double Precision Integers; 7. Display Functions; 8. TEKPLOT Program Package; References; Appendix A: Examples on the RT Use of NODAL. Throughout the report, replace the characters Æ and Å with [ and ], respectively. Introduction: This report describes the Oslo University implementation of the NODAL system under the operating system SINTRAN III. At present, the NODAL system has been installed on two NORD-10/S computers at the Physics Department and on a NORD-100 at the Chemistry Department, University of Oslo.

Open Source Software in Everyday Life

Open Source Software in Everyday Life: Is That Possible? Welcome to the Xing Stammtisch. Alexander Demmler, 25.11.2016. © A. Demmler 2016 www.lacunasolutions.com. Desktop system market shares 2016. Web server systems. Use of OSS in US companies: Northbridge, a venture capital company. "Game changers disrupt existing markets. They also create new multi-billion dollar markets and have the potential to change the way we live and work." http://www.northbridge.com. What does open source mean? • Open source software (OSS) is professional software under an alternative model of development and distribution • Open source is not Linux, but Linux is also open source! • Open source means a measure of freedom: free choice, freedom over whether and how much to pay, freedom of use. What is the community? • The community consists of developers, consultants, users, and hobbyists; large companies (HP, IBM, SUN); and distributors such as SUSE, RedHat, Ubuntu, and others • The community jointly develops and maintains the software and provides support • The community is not a club of hackers, freaks, and other villains! The business model: free software; services (paid). Basic version; extensions; download on the WWW; service and support; online support; training; additional income; advertising and sponsors. Licensing: • Software code (sources) is disclosed: distribution and use are expressly permitted; modification (adaptation, extension) is permitted; source code must be disclosed • For historical reasons there are various license models (GPL, OSL, CPL, EPL, and others) • EU initiative to unify licenses: http://ec.europa.eu. CAUTION: rules apply here too! Computerwoche: http://www.computerwoche.de

Norsk Data AS, The Phenomenon (Brief)

Norsk Data AS, The Phenomenon (brief). Tor Olav Steine, with the help of former colleagues. Abstract. Norsk Data was a remarkable company that went in just 20 years from a glimmer in the eyes of some computer enthusiasts to become number 2 in stock value on the Oslo Stock Exchange. Then within a few years it collapsed, without obvious reason. How was this tremendous success possible and why did it collapse? NOTE: This brief history of the Norsk Data company is based upon a Springer Verlag publication resulting from the HINC3 Conference in Stockholm 2010. It is ONLY for personal use, and not allowed for distribution or publishing. Content: 1. The beginning; 2. The demanding customers; 3. The years of fast growth; 4. Crisis and downfall; 6. About the Author; 7. References

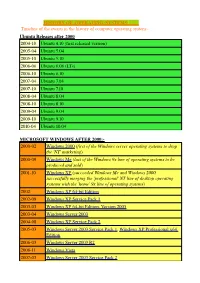

HISTORY of OPERATING SYSTEMS Timeline of The

HISTORY OF OPERATING SYSTEMS Timeline of the events in the history of computer operating systems: Ubuntu Releases after 2000: 2004-10 Ubuntu 4.10 (first released version) 2005-04 Ubuntu 5.04 2005-10 Ubuntu 5.10 2006-06 Ubuntu 6.06 (LTS) 2006-10 Ubuntu 6.10 2007-04 Ubuntu 7.04 2007-10 Ubuntu 7.10 2008-04 Ubuntu 8.04 2008-10 Ubuntu 8.10 2009-04 Ubuntu 9.04 2009-10 Ubuntu 9.10 2010-04 Ubuntu 10.04 MICROSOFT WINDOWS AFTER 2000: 2000-02 Windows 2000 (first of the Windows server operating systems to drop the 'NT' marketing) 2000-09 Windows Me (last of the Windows 9x line of operating systems to be produced and sold) 2001-10 Windows XP (succeeded Windows Me and Windows 2000, successfully merging the 'professional' NT line of desktop operating systems with the 'home' 9x line of operating systems) 2002 Windows XP 64-bit Edition 2002-09 Windows XP Service Pack 1 2003-03 Windows XP 64-bit Edition, Version 2003 2003-04 Windows Server 2003 2004-08 Windows XP Service Pack 2 2005-03 Windows Server 2003 Service Pack 1, Windows XP Professional x64 Edition 2006-03 Windows Server 2003 R2 2006-11 Windows Vista 2007-03 Windows Server 2003 Service Pack 2 2007-11 Windows Home Server 2008-02 Windows Vista Service Pack 1, Windows Server 2008 2008-04 Windows XP Service Pack 3 2009-05 Windows Vista Service Pack 2 2009-10 Windows 7 (22 October 2009), Windows Server 2008 R2 EVENTS IN THE HISTORY OF OS SINCE 1954: 1950s 1954 MIT's operating system made for UNIVAC 1103 1955 General Motors Operating System made for IBM 701 1956 GM-NAA I/O for IBM 704, based on General Motors

Pascal Newsletter

PASCAL USER'S GROUP. PASCAL NEWSLETTER, NUMBER 8. COMMUNICATIONS ABOUT THE PROGRAMMING LANGUAGE PASCAL BY PASCALERS. MAY, 1977. TABLE OF CONTENTS: POLICY; EDITOR'S CONTRIBUTION; HERE AND THERE WITH PASCAL (News; Conferences; Books and Articles; Applications); ARTICLES: "Development of a Pascal Compiler for the C.I.I. IRIS 50. A Partial History." - Olivier Lecarme; "A Further Defence of Formatted Input" - B. A. E. Meekings; "Proposals for Pascal" - George H. Richmond; "A Proposal for Increased Security in the Use of Variant Records" - William Barabash, Charles R. Hill, and Richard B. Kieburtz; "Update on UCSD Pascal Activities" - Kenneth L. Bowles; ALL PURPOSE COUPON. PASCAL USER'S GROUP POLICIES. Purposes - are to promote the use of the programming language Pascal as well as the ideas behind Pascal. Pascal is a practical, general purpose language with a small and systematic structure being used for: * teaching programming concepts * developing reliable "production" software * implementing software efficiently on today's machines * writing portable software. Membership - is open to anyone: particularly the Pascal user, teacher, maintainer, implementor, distributor, or just plain fan. Institutional memberships, especially libraries, are encouraged. Membership is per academic year ending June 30. Anyone joining for a particular year will receive all 4 quarterly issues of Pascal Newsletter for that year. (In other words, back issues are sent automatically.) First time members receive a receipt for membership; renewers do not, to save PUG postage.

Information Systems

Contents: Guest editorial, Tor M Jansen; Overview, Arve Meisingset; 1 CHILL – the international standard language for telecommunications programming, Kristen Rekdal; 2 Human-machine interface design for large systems, Arve Meisingset; 3 Reference models, Sigrid Steinholt Bygdås and Vigdis Houmb; 4 Formal languages, Astrid Nyeng; 5 Introduction to database systems, Ole Jørgen Anfindsen; 6 Software development methods and life cycle models, Sigrid Steinholt Bygdås and Magne Jørgensen; 7 A data flow approach to interoperability, Arve Meisingset; 8 The draft CCITT formalism for specifying human-machine interfaces, Arve Meisingset; 9 The CCITT specification and description language – SDL, Astrid Nyeng; 10 SDL-92 as an object oriented notation, Birger Møller-Pedersen; 11 An introduction to TMN, Ståle Wolland; 12 The structure of OSI management information, Anne-Grethe Kåråsen; 13 Network management systems in Norwegian Telecom, Knut Johannessen; 14 Centralised network management, Einar Ludvigsen; 15 The DATRAN and DIMAN tools, Cato Nordlund; 16 DIBAS – a management system for distributed databases, Eirik Arne Dahle and Helge Berg; 17 Data design for access control administration, Arve Meisingset. Telektronikk, Volume 89 No. 2/3 - 1993, ISSN 0085-7130. Editor: Ola Espvik, Tel. + 47 63 80 98 83. Feature editor: Arve Meisingset, Tel. + 47 63 80 91 15. Editorial assistant: Gunhild Luke. Editorial office: Telektronikk, Norwegian Telecom Research, P.O. Box 83, N-2007 Kjeller, Norway. Editorial board: Ole P Håkonsen, Senior Executive Vice President; Karl Klingsheim, Vice President, Research; Bjørn Løken, Vice President, Market and Product Strategies. Graphic design: Design Consult AS. Layout and illustrations: Gunhild Luke, Britt Kjus, Åse Aardal.

Timeline of Operating Systems - Wikipedia, the Free Encyclopedia

Timeline of operating systems - Wikipedia, the free encyclopedia http://en.wikipedia.org/wiki/Timeline_of_operating_systems Timeline of operating systems From Wikipedia, the free encyclopedia This article presents a timeline of events in the history of computer operating systems from 1951 to 2009. For a narrative explaining the overall developments, see the History of operating systems. Contents 1 1950s 2 1960s 3 1970s 4 1980s 5 1990s 6 2000s 7 See also 7.1 Category links 8 References 9 External links 1950s 1951 LEO I 'Lyons Electronic Office'[1] was the commercial development of EDSAC computing platform, supported by British firm J. Lyons and Co. 1954 MIT's operating system made for UNIVAC 1103[2] 1955 General Motors Operating System made for IBM 701[3] 1956 GM-NAA I/O for IBM 704, based on General Motors Operating System 1957 Atlas Supervisor (Manchester University) (Atlas computer project start) BESYS (Bell Labs), for IBM 7090 and IBM 7094 1958 University of Michigan Executive System (UMES), for IBM 704, 709, and 7090 1959 SHARE Operating System (SOS), based on GM-NAA I/O 1960s 1960 IBSYS (IBM for its 7090 and 7094) 1961 CTSS (MIT's Compatible Time-Sharing System for the IBM 7094) MCP (Burroughs Master Control Program) 1962 Atlas Supervisor (Manchester University) (Atlas computer commissioned) GCOS (GE's General Comprehensive Operating System, originally GECOS, General Electric Comprehensive

Contribution to the Annual Report 1987 Controls

EUROPEAN ORGANIZATION FOR NUCLEAR RESEARCH CERN/PS 88-04 [CO] 27.1.1988 Domains: CONTROLS Category: REF-MAN Status: FINAL CONTRIBUTION TO THE ANNUAL REPORT 1987 CONTROLS GROUP F. Perriollat Geneva, Switzerland Controls activities during 1987 have been dominated by the commissioning of the LEP pre-injector facility and the new antiproton source [AC complex]. The long shutdown was used to complete the interfacing of the ACOL equipment for the PS controls. This was done by adding one CAMAC Serial Loop to the AA Front-End ND-100CX computer. This loop was equipped with fourteen well filled CAMAC crates to connect the specific interface to the controls. Some facilities were also connected to the AA machine. This extension was mainly in the domain of instrumentation. The requested basic application software modules providing uniform access to the equipment were produced and tested. This covers both new modules and modification and extension of the already existing ones. These additions of software facilities to the AA Front-End Computer induced a shortage in virtual memory resource for the Nodal application programs. A drastic modification of the Real-time Nodal program environment was quickly implemented to bypass this shortage of resources by using the special instruction set of the ND-100CX computer. All this work allowed user application programs, mainly supplied by the AA team and the Operation crew, to be ready in time for the successful running-in of the new antiproton source complex. On-line and off-line modelling facilities have also been extensively used in the commissioning. The LPI complex started running early in 1987, and the time was used to complete the running-in to transfer the positron beam to the SPS according to design parameters.

System Software of the Cern Proton Synchrotron Control System

CERN 84-16, Proton Synchrotron Division, 20 December 1984. ORGANISATION EUROPÉENNE POUR LA RECHERCHE NUCLÉAIRE, CERN, EUROPEAN ORGANIZATION FOR NUCLEAR RESEARCH. SYSTEM SOFTWARE OF THE CERN PROTON SYNCHROTRON CONTROL SYSTEM. B.E. Carpenter, R. Cailliau, G. Cuisinier and W. Remmer. GENEVA 1984. © Copyright CERN, Genève, 1984. Literary and scientific copyrights reserved in all countries of the world. This report, or any part of it, may not be reprinted or translated without written permission of the copyright holder, the Director-General of CERN. However, permission will be freely granted for appropriate non-commercial use. If any patentable invention or registrable design is described in the report, CERN makes no claim to property rights in it but offers it for the free use of research institutions, manufacturers and others. CERN, however, may oppose any attempt by a user to claim any proprietary or patent rights in such inventions or designs as may be described in the present document.

The Founding, Fantastic Growth, and Fast Decline of Norsk Data AS

The Founding, Fantastic Growth, and Fast Decline of Norsk Data AS. Tor Olav Steine, formerly of Norsk Data AS. Abstract. Norsk Data was a remarkable company that in just twenty years went from a glimmer in the eyes of some computer enthusiasts to become number two in share value at the Oslo Stock Exchange. Within a few years thereafter, it collapsed, for no obvious reason. How was this tremendous success possible and why did the company collapse? Keywords: Collapse, computer, F16, industry, minicomputer, Norsk Data, Nord, simulator, success, Supermini. 1 The Beginning 1.1 FFI A combination of circumstances led to the founding of Norsk Data in June 1967. Several brilliant researchers came together at the Norwegian Defense Research Institute (FFI) in Kjeller outside Oslo, Norway. Under institute director Finn Lied, division director of telecommunications Karl Holberg initiated a digital group and appointed Yngvar Lundh as its project leader. Lars Monrad-Krohn was appointed leader of the digital lab. Yngvar Lundh had experience as a researcher at MIT (Cambridge, MA) during a stay in 1959, Monrad-Krohn followed with a similar appointment in 1962–64, while Per Bjørge, another brilliant computer developer, did his own MIT tour during 1966. These researchers were impressed with the fast development of computer technology at MIT [1, 2] and soon FFI was itself active in the field, building two military systems, SAM and SAM2. Because SAM2 was being built with the new Dual-In-Line (DIP) Integrated Circuit technology, it also had civil potential. In time, the idea of launching a spin-off company for the civilian market was born.