Thesis Final.Pdf

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Confirmation Bias in Criminal Cases

Moa Lidén Confirmation Bias in Criminal Cases Dissertation presented at Uppsala University to be publicly examined in Sal IV, Universitetshuset, Biskopsgatan 3, 753 10 Uppsala, Uppsala, Friday, 28 September 2018 at 10:15 for the degree of Doctor of Laws. The examination will be conducted in English. Faculty examiner: Professor Steven Penrod (John Jay College of Criminal Justice, City University New York). Abstract Lidén, M. 2018. Confirmation Bias in Criminal Cases. 284 pp. Uppsala: Department of Law, Uppsala University. ISBN 978-91-506-2720-6. Confirmation bias is a tendency to selectively search for and emphasize information that is consistent with a preferred hypothesis, whereas opposing information is ignored or downgraded. This thesis examines the role of confirmation bias in criminal cases, primarily focusing on the Swedish legal setting. It also examines possible debiasing techniques. Experimental studies with Swedish police officers, prosecutors and judges (Study I-III) and an archive study of appeals and petitions for new trials (Study IV) were conducted. The results suggest that confirmation bias is at play to varying degrees at different stages of the criminal procedure. Also, the explanations and possible ways to prevent the bias seem to vary for these different stages. In Study I police officers’ more guilt presumptive questions to apprehended than non-apprehended suspects indicate a confirmation bias. This seems primarily driven by cognitive factors and reducing cognitive load is therefore a possible debiasing technique. In Study II prosecutors did not display confirmation bias before but only after the decision to press charges, as they then were less likely to consider additional investigation necessary and suggested more guilt confirming investigation. -

Behavioural Sciences, Research Methods

ROUTLEDGE Behavioural Sciences and Research Methods Catalogue 2021 January - June New and Forthcoming Titles www.routledge.com Welcome THE EASY WAY TO ORDER Welcome to the January to June 2021 Behavioural Sciences and Book orders should be addressed to the Research Methods catalogue. Taylor & Francis Customer Services Department at Bookpoint, or the appropriate overseas offices. We welcome your feedback on our publishing programme, so please do not hesitate to get in touch – whether you want to read, write, review, adapt or buy, we want to hear from you, so please visit our website below or please contact your local sales representative for Contacts more information. UK and Rest of World: Bookpoint Ltd www.routledge.com Tel: +44 (0) 1235 400524 Email: [email protected] USA: Taylor & Francis Tel: 800-634-7064 Email: [email protected] Asia: Taylor & Francis Asia Pacific Tel: +65 6508 2888 Email: [email protected] China: Taylor & Francis China Prices are correct at time of going to press and may be subject to change without Tel: +86 10 58452881 notice. Some titles within this catalogue may not be available in your region. Email: [email protected] India: Taylor & Francis India Tel: +91 (0) 11 43155100 eBooks Partnership Opportunities at Email: [email protected] We have over 50,000 eBooks available across the Routledge Humanities, Social Sciences, Behavioural Sciences, At Routledge we always look for innovative ways to Built Environment, STM and Law, from leading support and collaborate with our readers and the Imprints, including Routledge, Focal Press and organizations they represent. Psychology Press. -

Book Problem-Solving Now

book Problem-solving now

Problem solving consists of using generic or ad hoc methods, in an orderly manner, for finding solutions to problems. Some of the problem-solving techniques developed and used in artificial intelligence, computer science, engineering, mathematics, or medicine are related to mental problem-solving techniques studied in psychology.

== Definition ==

The term problem-solving is used in many disciplines, sometimes with different perspectives, and often with different terminologies. For instance, it is a mental process in psychology and a computerized process in computer science. Problems can also be classified into two different types (ill-defined and well-defined) from which appropriate solutions are to be made. Ill-defined problems are those that do not have clear goals, solution paths, or expected solutions. Well-defined problems have specific goals, clearly defined solution paths, and clear expected solutions. These problems also allow for more initial planning than ill-defined problems. Being able to solve problems sometimes involves dealing with pragmatics (logic) and semantics (interpretation of the problem). The ability to understand what the goal of the problem is and what rules could be applied represents the key to solving the problem. Sometimes the problem requires some abstract thinking and coming up with a creative solution.

=== Psychology ===

In psychology, problem solving refers to a state of desire for reaching a definite 'goal' from a present condition that either is not directly moving toward the goal, is far from it, or needs more complex logic for finding a missing description of conditions or steps toward the goal. In psychology, problem solving is the concluding part of a larger process that also includes problem finding and problem shaping. -

Logic Made Easy: How to Know When Language Deceives

LOGIC MADE EASY ALSO BY DEBORAH J. BENNETT Randomness LOGIC MADE EASY How to Know When Language Deceives You DEBORAH J. BENNETT W. W. NORTON & COMPANY NEW YORK LONDON Copyright © 2004 by Deborah J. Bennett All rights reserved Printed in the United States of America First Edition For information about permission to reproduce selections from this book, write to Permissions, W. W. Norton & Company, Inc., 500 Fifth Avenue, New York, NY 10110 Manufacturing by The Haddon Craftsmen, Inc. Book design by Margaret M. Wagner Production manager: Julia Druskin Library of Congress Cataloging-in-Publication Data Bennett, Deborah J., 1950- Logic made easy : how to know when language deceives you / Deborah J. Bennett.— 1st ed. p. cm. Includes bibliographical references and index. ISBN 0-393-05748-8 1. Reasoning. 2. Language and logic. I. Title. BC177 .B42 2004 160—dc22 2003026910 W. W. Norton & Company, Inc., 500 Fifth Avenue, New York, N.Y. 10110 www.wwnorton.com W. W. Norton & Company Ltd., Castle House, 75/76 Wells Street, London W1T 3QT 1234567890 CONTENTS INTRODUCTION: LOGIC IS RARE: The mistakes we make; Logic should be everywhere; How history can help. 1 PROOF: Consistency is all I ask; Proof by contradiction; Disproof. 2 ALL: All S are P; Vice Versa; Familiarity—help or hindrance?; Clarity or brevity? 3 A NOT TANGLES EVERYTHING UP: The trouble with not; Scope of the negative; A and E propositions; When no means yes—the "negative pregnant" and double negative. 4 SOME IS PART OR ALL OF ALL: Some -

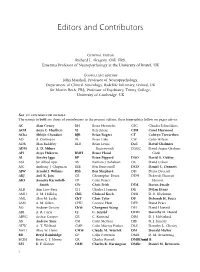

Editors and Contributors

Date : 9:8:2004 File Name: Prelims.3d 10/20 Editors and Contributors General editor Richard L. Gregory, CBE, FRS, Emeritus Professor of Neuropsychology at the University of Bristol, UK Consultant editors John Marshall, Professor of Neuropsychology, Department of Clinical Neurology, Radcliffe Infirmary, Oxford, UK Sir Martin Roth, FRS, Professor of Psychiatry, Trinity College, University of Cambridge, UK Key to contributor initials The names in bold are those of contributors to the present edition; their biographies follow on pages xiii–xv. AC Alan Cowey BH Beate Hermelin CSC Claudia Schmölders ACH Anya C. Hurlbert BJ Bela Julesz CSH Carol Haywood ACha Abhijit Chauduri BJR Brian Rogers CT Colwyn Trevarthen AD A. Dickinson BL Brian Lake CW Colin Wilson ADB Alan Baddeley BLB Brian Lewis Butterworth DaC David Chalmers ADM A. D. Milner DAGC David Angus Graham Cook AH Atiya Hakeem BMH Bruce Hood AI Ainsley Iggo BP Brian Pippard DAO David A. Oakley AJA Sir Alfred Ayer BS Barbara J. Sahakian DC David Cohen AJC Anthony J. Chapman BSE Ben Semeonoff DCD Daniel C. Dennett AJW Arnold J. Wilkins BSh Ben Shephard DD Diana Deutsch AKJ Anil K. Jain CE Christopher Evans DDH Deborah Duncan Honore AKS Annette Karmiloff-Smith CF Colin Fraser CFr Chris Frith DDS Doron Swade ALB Ann Low-Beer CH Charles Hannam DE Dylan Evans AMH A. M. Halliday ChK Christof Koch DEB D. E. Blackman AML Alan M. Leslie ChT Chris Tyler DF Deborah H. Fouts AMS A. M. Sillito CHU Corinne Hutt DFP David Pears AO Andrew Ortony ChW Chongwei Wang DH David Howard ARL A. -

Score Distribution Analysis, Artificial Intelligence, and Player Modeling for Quantitative Game Design

SCORE DISTRIBUTION ANALYSIS, ARTIFICIAL INTELLIGENCE, AND PLAYER MODELING FOR QUANTITATIVE GAME DESIGN DISSERTATION Submitted in Partial Fulfillment of the Requirements for the Degree of DOCTOR OF PHILOSOPHY (Computer Science) at the NEW YORK UNIVERSITY TANDON SCHOOL OF ENGINEERING by Aaron Isaksen May 2017 Approved: Department Chair Signature Date University ID: N18319753 Net ID: ai758 Approved by the Guidance Committee: Major: Computer Science Andy Nealen Assistant Professor of Computer Science New York University, Tandon School of Engineering Date Julian Togelius Associate Professor of Computer Science New York University, Tandon School of Engineering Date Frank Lantz Full Arts Professor and Director New York University, NYU Game Center Date Michael Mateas Professor of Computational Media and Director University of California at Santa Cruz Center for Games and Playable Media Date Leonard McMillan Associate Professor of Computer Science University of North Carolina at Chapel Hill Date Microfilm/Publishing Microfilm or copies of this dissertation may be obtained from: UMI Dissertation Publishing ProQuest CSA 789 E. Eisenhower Parkway P.O. Box 1346 Ann Arbor, MI 48106-1346 Vita Aaron Mark Isaksen Education Ph.D. in Computer Science Jan. 2014 - May 2017 New York University, Tandon School of Engineering, Game Innovation Lab - Pearl Brownstein Doctoral Research Award, 2017 - Outstanding Performance on Ph.D. Qualifying Exam, D. Rosenthal, MD Award, 2015 - Best paper in Artificial Intelligence and Game Technology, FDG 2015 M.S. -

Is Universal Suffrage Overrated?

Department of Political Science Major in International Relations, Global Studies Chair Global Justice Is Universal Suffrage Overrated? SUPERVISOR Prof. Marcello Di Paola CANDIDATE Pasquale Domingos Di Pace Student no. 632972 CO-SUPERVISOR Prof. Gianfranco Pellegrino Contents: Introduction. I. Chapter I: Are Citizens Politically Ignorant? I. Political Ignorance (I.I Lack of Political Knowledge; I.II Rational Ignorance). II. Cognitive Biases (II.I The Big Five Personality Traits; II.II Confirmation Bias; II.III Availability Bias; II.IV Peer Pressure and Authority; II.V Political Tribalism; II.VI Other Cognitive Biases). II. Chapter II: Epistocracy -

Information Processing and Constraint Satisfaction in Wason's Selection

Information Processing and Constraint Satisfaction in Wason’s Selection Task Genot, Emmanuel Published in: Cognition, reasoning, emotion, Action. CogSc-12. Proceedings of the ILCLI International Workshop on Cognitive Science. 2012 Link to publication Citation for published version (APA): Genot, E. (2012). Information Processing and Constraint Satisfaction in Wason’s Selection Task. In J. M. Larrazabal (Ed.), Cognition, reasoning, emotion, Action. CogSc-12. Proceedings of the ILCLI International Workshop on Cognitive Science. (pp. 153-162). University of the Basque Country Press. Total number of authors: 1 General rights Unless other specific re-use rights are stated the following general rights apply: Copyright and moral rights for the publications made accessible in the public portal are retained by the authors and/or other copyright owners and it is a condition of accessing publications that users recognise and abide by the legal requirements associated with these rights. • Users may download and print one copy of any publication from the public portal for the purpose of private study or research. • You may not further distribute the material or use it for any profit-making activity or commercial gain • You may freely distribute the URL identifying the publication in the public portal Read more about Creative commons licenses: https://creativecommons.org/licenses/ Take down policy If you believe that this document breaches copyright please contact us providing details, and we will remove access to the work immediately and investigate your claim. LUND UNIVERSITY PO Box 117 221 00 Lund +46 46-222 00 00 Download date: 04. Oct. 2021 Information Processing and Constraint Satisfaction in Wason’s Selection Task∗ Emmanuel J. -

Information Transmission in Communication Games Signaling with an Audience

City University of New York (CUNY) CUNY Academic Works Computer Science Technical Reports CUNY Academic Works 2013 TR-2013008: Information Transmission in Communication Games Signaling with an Audience Farishta Satari How does access to this work benefit you? Let us know! More information about this work at: https://academicworks.cuny.edu/gc_cs_tr/383 Discover additional works at: https://academicworks.cuny.edu This work is made publicly available by the City University of New York (CUNY). Contact: [email protected] Information Transmission in Communication Games Signaling with an Audience by Farishta Satari A dissertation submitted to the Graduate Faculty in Computer Science in partial fulfillment of the requirements for the degree of Doctor of Philosophy, The City University of New York. 2013 © 2013 Farishta Satari All rights reserved This manuscript has been read and accepted for the Graduate Faculty in Computer Science in satisfaction of the dissertation requirement for the degree of Doctor of Philosophy. THE CITY UNIVERSITY OF NEW YORK Abstract INFORMATION TRANSMISSION IN COMMUNICATION GAMES SIGNALING WITH AN AUDIENCE by Farishta Satari Adviser: Professor Rohit Parikh Communication is a goal-oriented activity where interlocutors use language as a means to achieve an end while taking into account the goals and plans of others. Game theory, being the scientific study of strategically interactive decision-making, provides the mathematical tools for modeling language use among rational decision makers. When we speak of language use, it is obvious that questions arise about what someone knows and what someone believes. Such a treatment of statements as moves in a language game has roots in the philosophy of language and in economics. -

Individual Differences and Basic Logic Ability Christopher R

James Madison University JMU Scholarly Commons Masters Theses The Graduate School Summer 2012 Individual differences and basic logic ability Christopher R. Runyon James Madison University Follow this and additional works at: https://commons.lib.jmu.edu/master201019 Part of the Psychology Commons Recommended Citation Runyon, Christopher R., "Individual differences and basic logic ability" (2012). Masters Theses. 308. https://commons.lib.jmu.edu/master201019/308 This Thesis is brought to you for free and open access by The Graduate School at JMU Scholarly Commons. It has been accepted for inclusion in Masters Theses by an authorized administrator of JMU Scholarly Commons. For more information, please contact [email protected]. Individual Differences and Basic Logic Ability Christopher R. Runyon A thesis submitted to the Graduate Faculty of JAMES MADISON UNIVERSITY In Partial Fulfillment of the Requirements for the degree of Master of Arts Department of Graduate Psychology August 2012 Acknowledgments I would like to thank Dr. Richard West for his help and willingness to allow me to choose an independent topic for my thesis, in spite of the extra work that it brought upon himself and the other members of my thesis committee. I also would like to thank Dr. Laine Bradshaw for her insightful and detailed comments on the methodological aspects of this paper. I am also grateful to Dr. Michael Hall, as he spent several hours assisting me with the construction of this manuscript over the summer months, far beyond the necessary obligations of a committee member. I also would like to thank Dr. Bill Knorpp, Dr. Thomas Adajian, and Dr. -

Beyond Reasoning; the Life, Times and Work

BEYOND REASONING This book tells the story of Peter Cathcart Wason, offering unique insights into the life of the pioneering research psychologist credited for establishing a whole new field of science: the psychological study of reasoning. And this was just one of the major contributions he made to psychology. Covering much more than Wason’s academic work, the author, Ken Manktelow, paints a vivid and personal portrait of the man. The book traces Wason’s eclectic family history, steeped in Liberal politics and aristocratic antecedents, before moving through his service in the Second World War and the life-changing injuries he sustained at the end of it, and on to his abortive first attempt at a career and subsequent extraordinary success as a psychologist. Following a chronological structure with each chapter dedicated to a significant transition period in Wason’s life, Manktelow expertly weaves together personal narratives with Wason’s evolving intellectual interests and major scientific discoveries, and in doing so simultaneously traces the worlds that vanished during the twentieth century. A brilliant biography of one of the most renowned figures in cognitive psychology, this book will be of interest not only to students and scholars in thinking and reasoning, but to anyone interested in the life and lasting contribution of this celebrated scholar. Ken Manktelow was formerly Professor of Psychology at the University of Wolverhampton, having begun his academic career with a PhD on reasoning, examined by Wason himself. He is the author