Remote Sensing Laboratories (University of Zurich) for His Fruitful Collaboration, Which Incited Me to Improve DART 3D Projection

Recommended publications

Automatic Generation of a 3D City Model

UNIVERSITY OF CASTILLA-LA MANCHA ESCUELA SUPERIOR DE INFORMÁTICA COMPUTER ENGINEERING DEGREE DEGREE FINAL PROJECT Automatic generation of a 3D city model David Murcia Pacheco June, 2017 Information Technology and Systems SPECIFIC TECHNOLOGY OF COMPUTER ENGINEERING Author: David Murcia Pacheco Director: Dr. Félix Jesús Villanueva Molina David Murcia Pacheco Ciudad Real – Spain E-mail: [email protected] Phone No.:+34 625 922 076 © 2017 David Murcia Pacheco Permission is granted to copy, distribute and/or modify this document under the terms of the GNU Free Documentation License, Version 1.3 or any later version published by the Free Software Foundation; with no Invariant Sections, no Front-Cover Texts, and no Back-Cover Texts. A copy of the license is included in the section entitled "GNU Free Documentation License". EXAMINING COMMITTEE: President: Member: Secretary: DEFENSE DATE: GRADE: PRESIDENT MEMBER SECRETARY Signed: Signed: Signed: Abstract This document collects all information related to the Degree Final Project (DFP) of the Computer Engineering Degree of the student David Murcia Pacheco, supervised by Dr. Félix Jesús Villanueva Molina. This work was developed during 2016 and 2017 in the Escuela Superior de Informática (ESI), in Ciudad Real, Spain. It is based on one of the subjects proposed by the faculty of this university for this year, called "Generación automática del modelo en 3D de una ciudad" ("Automatic generation of a 3D city model"). -

Oracle Spatial 11G Release 2 New Features Deep Dive Siva Ravada Director of Development, Oracle Spatial

Oracle Spatial 11g Release 2 New Features Deep Dive Siva Ravada, Director of Development, Oracle Spatial; Michael Smith, US Army Corps of Engineers Agenda • Introduction to Oracle Spatial • Spatial Engine • SQL Developer • GeoRaster • Network Data Model • Moving Objects • 3D Support Oracle Spatial Features – Oracle Locator: Feature of Oracle Database XE, SE, EE – Oracle Spatial: Priced option to Oracle Database EE – MapViewer*: Java application and map rendering feature of Fusion Middleware – Bundled Map Content: Major roads, administrative boundaries (city, county, state, country) - worldwide coverage from Navteq [Architecture diagram labels: HTTP, Fusion Middleware MapViewer, JDBC, Oracle Database, Oracle Locator, Oracle Spatial, Bundled Map Content] Supports all Geospatial Data types [Figure labels: Locations (points of interest), Polygons (admin, sales territories, high risk zones), Networks (roads, utilities), Imagery (satellite imagery), 3D data type (city models), Topology (data provider), LIDAR Data Type, TIN Data Type] Open Platform for Leading Applications and Tools [Figure labels: MapInfo, Leica, ADE, Bentley, Manifold] Oracle Spatial 11g Enabled Applications: Scrollable, Interactive Maps; Spatial Web Services; 3D, Point Clouds, and LIDAR; Geocoding & Routing; Oracle BI Dashboards; Raster Imagery Spatial Engine Spatial on Exadata • We achieve performance improvements based on the exploitation of the processing power, bandwidth, and parallelism of the Exadata machine • Parallel query and partitioning help improve Spatial performance • All the row level functions are also parallel enabled to take advantage of parallel query • Speedups of up to 40 times (compared to a 1-core CPU box) for the typical SDO_ANYINTERACT spatial query • Spatial predicates are not currently pushed into the Exadata storage nodes Spatial Index Parallel Creation on Exadata • Build a Local Spatial Index on Partitioned Table: each slave can build a different index partition in parallel. -

GRASS GIS Loves Lidar FOSS4G NA 2016

GRASS GIS loves lidar FOSS4G NA 2016 Vaclav Petras (Vashek), Anna Petrasova, Helena Mitasova Center for Geospatial Analytics May 5, 2016 available at wenzeslaus.github.io/grass-lidar-talks Vaclav Petras (NC State University) GRASS GIS loves lidar May 5, 2016 1 / 31 GRASS GIS • all in one: hydrology modeling, image segmentation, point clustering, ... • from small laptops to supercomputers: Raspberry Pi, Windows, Mac, GNU/Linux, GNU/Hurd, FreeBSD, IBM AIX • learn now, use forever: over 30 years of development and interface refinement • probably used more than you think: similarly to C/C++, it is often not mentioned but is somewhere in there • latest release 7.0.4, Sunday, May 1, 2016 CLI GUI Python Python versus CLI Documentation, Command Line (Shell, Bash, cmd.exe): r.in.lidar input=points.las \ output=elevation -e Python: from grass.script import run_command run_command('r.in.lidar', input="points.las", output="elevation", flags='e') Module GUI Graphical Modeler Points • collected by lidar • generated by Structure -

Sphinx Documentation Release 1.5.4

Sphinx Documentation Release 1.5.4 Georg Brandl Apr 02, 2017 Contents 1 Introduction 1.1 Conversion from other systems 1.2 Use with other systems 1.3 Prerequisites 1.4 Usage 2 First Steps with Sphinx 2.1 Install Sphinx 2.2 Setting up the documentation sources 2.3 Defining document structure 2.4 Adding content 2.5 Running the build 2.6 Documenting objects 2.7 Basic configuration 2.8 Autodoc 2.9 Intersphinx 2.10 More topics to be covered 3 Invocation of sphinx-quickstart 3.1 Structure options 3.2 Project basic options 3.3 Extension options 3.4 Makefile and Batchfile creation options 3.5 Project templating 4 Invocation of sphinx-build 4.1 Environment variables 4.2 Makefile options -

3D Point Clouds in PostgreSQL/PostGIS for Applications in GIS and Geodesy

3D Point Clouds in PostgreSQL/PostGIS for Applications in GIS and Geodesy Theresa Meyer and Ansgar Brunn University of Applied Sciences Wuerzburg-Schweinfurt, Roentgenring 8, Wuerzburg, Germany Keywords: 3D Point Clouds, Point Cloud Tiling, Geodatabase, GIS, 3D Applications. Abstract: Besides the common approach of an exclusively file based management of 3D point clouds, meanwhile it is possible to store and process this special type of massive geodata within spatial database systems. Users benefit from the general advantages of database solutions and especially from the potentials of a combined analysis of original 3D point clouds, 2D rasters, 3D voxel stacks and 2D and 3D vector data in order to gain valuable geoinformation. This paper describes the integration of 3D point clouds into an open source PostgreSQL/PostGIS database using the Pointcloud extension and functions of the Point Data Abstraction Library (PDAL). The focus is on performing three-dimensional spatial queries and the evaluation of different tiling methods for the organization of 3D point clouds into table rows, regarding memory space, performance of spatial queries and effects on interactions between point clouds and other GIS features within the database. A new approach for an optimized point cloud tiling, considering the individual geometric characteristic of a 3D point cloud, is presented. The results show that an individually selected storage structure for a point cloud is crucial for low memory consumption and high-performance 3D queries in PostGIS applications, taking account of its three-dimensional spatial extent and point density.
1 INTRODUCTION 3D point clouds are increasingly gaining importance for various applications mainly in fields of geodesy, geoinformatics, architecture and archaeology. [...] cloud formats enable a lossless transport of up to billions of 3D point coordinates (X,Y,Z) and dozens of additional point attributes such as Intensity, Return Number, Red, Green, Blue, NIR, Classification and -
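
The abstract above speaks of organizing a point cloud into table rows by tiling. The excerpt does not describe the authors' optimized tiling, so the following is only a minimal sketch of the simplest alternative, a regular square grid in the XY plane; the function names `tile_points` and `tile_bounds` and the `tile_size` parameter are hypothetical and are not part of PostGIS, the Pointcloud extension, or PDAL.

```python
from collections import defaultdict


def tile_points(points, tile_size):
    """Group (x, y, z) points into square XY tiles with edge length tile_size.

    Plain regular-grid tiling, shown for illustration only; it is NOT the
    optimized tiling proposed in the paper. Each returned tile would
    correspond to one patch, i.e. one table row.
    """
    tiles = defaultdict(list)
    for x, y, z in points:
        # Floor division keeps negative coordinates in the correct tile.
        key = (int(x // tile_size), int(y // tile_size))
        tiles[key].append((x, y, z))
    return dict(tiles)


def tile_bounds(patch):
    """Return the 3D bounding box (min corner, max corner) of one tile."""
    xs, ys, zs = zip(*patch)
    return (min(xs), min(ys), min(zs)), (max(xs), max(ys), max(zs))


sample = [(0.5, 0.5, 1.0), (1.5, 0.2, 2.0), (0.9, 0.1, 3.0), (-0.1, 0.0, 0.0)]
patches = tile_points(sample, tile_size=1.0)
```

With a grid like this, the tile size trades the number of rows against the number of points per row, which is the kind of storage-versus-query trade-off the abstract says the paper evaluates.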

Open Source Point Cloud Processing HISTORY

Open Source Point Cloud Processing HISTORY • Software APIs and tools for manipulating ASPRS LAS data • Started in 2007, first release in 2008 • 20+ releases since 2007 • BSD license OPEN SOURCE • Community-driven • Public source repository • Public bug tracker • Public mailing list • Liberal license SCOPE • Feature-rich • Shoulders (GDAL, LASzip, Boost) • Multi-platform (Win32/64, OS X, Linux) • Multi-language (C++ - C/Python/.NET) AUDIENCE • Software developers • System integrators • Processing pipelines - horizontal scaling SOFTWARE • Cadcorp SIS Desktop • SAGA GIS • Myriax Eonfusion • ERDAS LPS/eATE • LASERDATA LIS • Safe FME • LizardTech LiDAR Compressor • TopoDOT Point Cloud Processing Tool Suite http://trac.liblas.org/wiki/WhoUsesLibLAS PROJECT ACTIVITY • 11 committers • 119 mail list subscribers • 4-12 IRC members #liblas - irc.freenode.net • 60 bug tracking members • 200+ bugs filed SPONSORSHIP • Iowa Department of Natural Resources • LizardTech • US Army Corps Cold Regions Research and Engineering Laboratory FEATURES • Filtering • Transformation • Reprojection (GDAL) • Indexing and Tiling • Compression (LASzip) FORMAT SUPPORT • ASPRS LAS 1.0, 1.1, 1.2, 1.3 (points only) • LASzip - compressed LAS (read/write) • TerraSolid .bin (read) • Oracle Point Cloud (write) • ASCII (read/write) FILTERING $ las2las --input in.las \ --output out.las \ --drop-intensity ">=1000" \ --keep-scan-angle "<=15" \ --keep-classes 2 COLOR ASSIGNMENT $ las2las -i input.las \ --color-source image.img \ --output output.las \ --file-format 1.2 \ --point-format 3 \ --color-source-scale 256 \ --color-source-bands 3 1 2 REPROJECTION $ las2las --input epsg26915.las \ --output wgs84.las \ --scale 0.000001 \ 0.000001 \ 0.01 --a_srs EPSG:26915 \ --t_srs EPSG:4326 VERTICAL TRANSFORM $ las2las --input srs.las \ --t_srs EPSG:26915+5703 --output navd88.las ADDING VLRs $ las2las --input in.las \ --output out.las \ --add-vlr CUSTOM_VLR \ 42 "A VLR description" \ "myfile.vlr" PYTHON >>> from liblas import file >>> f = file.File('file.las', mode='r') >>> for p in f: .. -

Library Concept and Design for Lidar Data Processing

LIBRARY CONCEPT AND DESIGN FOR LIDAR DATA PROCESSING Nicolas David, Clément Mallet, Frédéric Bretar Institut Géographique National (IGN) – MATIS laboratory 2-4 avenue Pasteur 94165 Saint-Mandé, FRANCE http://recherche.ign.fr/labos/matis fi[email protected] KEY WORDS: lidar data, Point Cloud, Design, Data Management System, Processing ABSTRACT: Airborne Laser Scanning (ALS) is nowadays a very popular technology providing accurate altimetric data for remote sensing and mapping purposes. Therefore, many algorithms have been developed so far to process these data, depending on the application. Nevertheless, for researchers, it is still a challenging task to handle large amounts of heterogeneous data and adapt them for their specific aims and processes. This paper reports the thoughts and the strategy developed by the MATIS laboratory of the Institut Géographique National (IGN) about an efficient lidar library design in order to tackle these issues. The specification of an efficient and versatile lidar file format is discussed first. The standard and current lidar file formats are reviewed, and a new one, dedicated to raw data processing with high feature modularity, is presented. Besides, existing code components and libraries are reviewed with regard to their compatibility for research development. Modularity, availability and license conditions are here the main selection criteria. Then, different strategies for large data set handling are summarized and extended by a new solution, both based on lidar strip and raw sensor topology. These workflows are illustrated through a UML activity diagram dedicated to 2D spatial query. Finally, the current status of the implementation of this federative software as well as the perspectives of development are sketched. -

Ecosystems in Github and a Method for Ecosystem Identification Using

Ecosystems in GitHub and a Method for Ecosystem Identification using Reference Coupling Kelly Blincoe, Francis Harrison and Daniela Damian Software Engineering Global InterAction Lab University of Victoria Victoria, BC, Canada Email: [email protected], [email protected], [email protected] Abstract—Software projects are not developed in isolation. Recent research has shifted to studying software ecosystems, communities of projects that depend on each other and are developed together. However, identifying technical dependencies at the ecosystem level can be challenging. In this paper, we propose a new method, known as reference coupling, for detecting technical dependencies between projects. The method establishes dependencies through user-specified cross-references between projects. We use our method to identify ecosystems in GitHub-hosted projects, and we identify several characteristics of the identified ecosystems. We find that most ecosystems are centered around one project and are interconnected with other ecosystems. The predominant type of ecosystems are those that develop tools to support software development. We also found that the [...] To identify technical dependencies, we turn to cross-references on GitHub, a social code hosting service. GitHub encourages collaboration between users both within and across projects through its transparent interface and built-in social features. With GitHub Flavored Markdown, when a user cross-references another repository in a pull request, issue or commit comment a link to the other repository is automatically created. This introduces a way for developers to bring awareness across repositories. In this paper, we investigate whether these cross-references indicate a technical dependency between the two repositories by examining a set of these cross-references. -
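
The cross-references described above can be collected from comment text with a simple pattern match. This is only a rough sketch: the excerpt does not give the authors' extraction procedure or any thresholds, so the regular expression (based on GitHub's `owner/repo#number` and `owner/repo@sha` autolink forms), the function name `reference_edges`, and the sample comments are all assumptions made for illustration.

```python
import re
from collections import Counter

# Assumed pattern: "owner/repo#123" (issue or pull request) or
# "owner/repo@<sha>" (commit), the forms GitHub turns into links.
CROSS_REF = re.compile(
    r"\b([A-Za-z0-9][A-Za-z0-9-]*/[A-Za-z0-9._-]+)(?:#\d+|@[0-9a-f]{7,40})"
)


def reference_edges(comments):
    """Count cross-repository references.

    comments: iterable of (source_repo, comment_text) pairs.
    Returns a Counter mapping (source_repo, target_repo) to the number of
    references; references to the source repository itself are ignored.
    """
    edges = Counter()
    for source_repo, text in comments:
        for target in CROSS_REF.findall(text):
            if target.lower() != source_repo.lower():
                edges[(source_repo, target)] += 1
    return edges


# Hypothetical comments, invented for this example.
sample_comments = [
    ("alice/app", "Blocked by bob/lib#42 and see alice/app#7"),
    ("alice/app", "Fixed upstream in bob/lib@1a2b3c4d"),
    ("carol/tool", "no references here"),
]
edges = reference_edges(sample_comments)
```

The resulting edge counts form a directed graph between repositories; grouping that graph into ecosystems is a separate step that the excerpt does not detail.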

User Guide

CloudCompare User Manual for version 2.4 Authors: DGM, AB, RM www.danielgm.net/cc Table of contents Introduction 0.1 Overview 0.2 License 0.3 Installing the binary (Windows) 0.4 Compiling the project 1 Interface 1.1 Main window 1.2 Database 1.2.1 Navigation tree 1.2.2 Selecting entities 1.2.3 Advanced interactions with the navigation tree 1.2.4 Special entities 1.2.4.1 The octree 1.2.4.2 2D labels and graphical annotation 1.3 Displaying entities 1.3.1 3D views 1.3.1.1 Overview 1.3.1.2 Interacting with a 3D view 1.3.1.3 Using multiple 3D views 1.3.1.4 Camera link 1.3.2 Display options 1.3.2.1 Viewing Tools toolbar 1.3.2.2 -

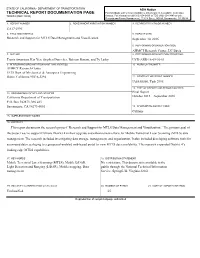

TECHNICAL REPORT DOCUMENTATION PAGE TR0003 (REV 10/98)

STATE OF CALIFORNIA • DEPARTMENT OF TRANSPORTATION TECHNICAL REPORT DOCUMENTATION PAGE TR0003 (REV 10/98) ADA Notice: For individuals with sensory disabilities, this document is available in alternate formats. For information call (916) 654-6410 or TDD (916) 654-3880 or write Records and Forms Management, 1120 N Street, MS-89, Sacramento, CA 95814. 1. REPORT NUMBER: CA17-2996 2. GOVERNMENT ASSOCIATION NUMBER: 3. RECIPIENT'S CATALOG NUMBER: 4. TITLE AND SUBTITLE: Research and Support for MTLS Data Management and Visualization 5. REPORT DATE: September 30, 2016 6. PERFORMING ORGANIZATION CODE: AHMCT Research Center, UC Davis 7. AUTHOR: Travis Swanston, Kin Yen, Stephen Donecker, Bahram Ravani, and Ty Lasky 8. PERFORMING ORGANIZATION REPORT NO.: UCD-ARR-16-09-30-01 9. PERFORMING ORGANIZATION NAME AND ADDRESS: AHMCT Research Center, UCD Dept. of Mechanical & Aerospace Engineering, Davis, California 95616-5294 10. WORK UNIT NUMBER: 11. CONTRACT OR GRANT NUMBER: IA65A0560, Task 2996 12. SPONSORING AGENCY AND ADDRESS: California Department of Transportation, P.O. Box 942873, MS #83, Sacramento, CA 94273-0001 13. TYPE OF REPORT AND PERIOD COVERED: Final Report, October 2015 – September 2016 14. SPONSORING AGENCY CODE: Caltrans 15. SUPPLEMENTARY NOTES: 16. ABSTRACT: This report documents the research project “Research and Support for MTLS Data Management and Visualization.” The primary goal of the project was to support Caltrans District 4 in their upgrade and enhancement efforts for Mobile Terrestrial Laser Scanning (MTLS) data management. The research included investigating data storage, management, and organization. It also included developing software tools for automated data cataloging to a geospatial-enabled web-based portal to view MTLS data availability. -

POLITECNICO DI TORINO Repository ISTITUZIONALE

POLITECNICO DI TORINO Repository ISTITUZIONALE The Analysis of Open Source Software and Data for Establishment of GIS Services Throughout the Network in a Mapping Organization at National or International Level Original: The Analysis of Open Source Software and Data for Establishment of GIS Services Throughout the Network in a Mapping Organization at National or International Level / JAFARI SALIM, Mehrdad. - (2014). Availability: This version is available at: 11583/2540693 since: Publisher: Politecnico di Torino Published DOI:10.6092/polito/porto/2540693 Terms of use: Other type of access. This article is made available under terms and conditions as specified in the corresponding bibliographic description in the repository Publisher copyright 04 August 2020 POLITECNICO DI TORINO Doctorate school PhD in Environment and Territory - Environmental Protection and Management XXV cycle Final Dissertation The analysis of open source software and Data for establishment of GIS services throughout the network in a mapping organization at National or International level MEHRDAD JAFARI SALIM Tutor: Prof. Piero Boccardo FEB 2014 TABLE OF CONTENTS INTRODUCTION 1. Spatial Data Infrastructure Components and Considerations -

Pragmatic Remote Sensing a Hands-On Approach to Processing

Pragmatic Remote Sensing: A Hands-on Approach to Processing J. Inglada (1), E. Christophe (2) (1) CENTRE D’ÉTUDES SPATIALES DE LA BIOSPHÈRE, TOULOUSE, FRANCE (2) CENTRE FOR REMOTE IMAGING, SENSING AND PROCESSING, NATIONAL UNIVERSITY OF SINGAPORE This content is provided under a Creative Commons Attribution 3.0 Unported License IGARSS 2010, Honolulu Table of Contents 1. General introduction 2. Pre-processing 2.1 Geometric corrections 2.2 Radiometric corrections 3. Feature extraction 4. Image classification 5. Change detection Introduction to the Orfeo Toolbox: Applications and library Why? Common problems • Reading images • Accessing metadata • Implementing state of the art algorithms ⇒ to be able to extract the most information, we need to use the best of what is available: data, algorithms, ... What, When, Why, How What is Orfeo Toolbox (OTB)? In the frame of CNES ORFEO Program Goal: Make the development of new algorithms and their validation easier • C++ library: provide many algorithms (pre-processing, image analysis) with a common interface • Open-source: free to