Distributed Transactions Can Scale

Recommended publications

Big Data Velocity in Plain English

Big Data Velocity in Plain English. John Ryan, Data Warehouse Solution Architect.

Table of Contents: The Requirement; What’s the Problem?; Components Needed (Data Capture, Transformation, Storage and Analytics); The Traditional Solution; The NewSQL Based Solution; NewSQL Advantage; Thank You; About the Author.

The Requirement

The assumed requirement is the ability to capture, transform and analyse data at potentially massive velocity in real time. This involves capturing data from millions of customers or electronic sensors, and transforming and storing the results for real time analysis on dashboards. The solution must minimise latency (the delay between a real world event and its impact upon a dashboard) to under a second. Typical applications include:

• Monitoring Machine Sensors: Using embedded sensors in industrial machines or vehicles, typically referred to as the Internet of Things (IoT). For example, Progressive Insurance uses real time speed and vehicle braking data to help classify accident risk and deliver appropriate discounts. Similar technology is used by logistics giant FedEx, which uses SenseAware technology to provide near real-time parcel tracking.
• Fraud Detection: To assess the risk of credit card fraud prior to authorising or declining the transaction. This can be based upon a simple report of a lost or stolen card or, more likely, an analysis of aggregate spending behaviour, aligned with machine learning techniques.
• Clickstream Analysis: Producing real time analysis of user web site clicks to dynamically deliver pages, recommended products or services, or deliver individually targeted advertising.

What’s the Problem?

The primary challenge for real time systems architects is the potentially massive throughput required, which could exceed a million transactions per second.

ACID Compliant Distributed Key-Value Store

ACID Compliant Distributed Key-Value Store. Lakshmi Narasimhan Seshan, Rajesh Jalisatgi, Vijaeendra Simha G A.

Abstract: Built a fault-tolerant and strongly-consistent key/value storage service using the existing RAFT implementation. Add ACID transaction capability to the service using a 2PC variant. Build on the raftexample[1] (available as part of etcd) to add atomic transactions. This supports sharding of keys and concurrent transactions on the sharded KV store. By implementing a transactional distributed KV store, we gain working knowledge of different protocols and the complexities of making them work together.

Keywords: ACID, Key-Value, 2PC, sharding, 2PL Transaction

I. INTRODUCTION

Distributed KV stores have become a norm with the advent of microservices architecture and the NoSql revolution. Initially KV Store discarded ACID properties [...]

[...] thought that would be easier to implement. All components run http and grpc endpoints. Http endpoints are for debugging/configuration. Grpc endpoints are used for communication between components.

Replica Manager (RM): RM is the service discovery part of the system. RM is initiated with each of the Replica Servers available in the system. Users have to instantiate the RM with the number of shards that the user wants the keys to be split into. Currently this number cannot be changed dynamically while RM is running. New Replica Servers cannot be added or removed once RM is up and running. However, Replica Servers can go down and come back, and RM can detect this. Each Shard Leader updates its information to RM, while the DTM leader updates its information to the RM.

Lecture 15: March 28 15.1 Overview 15.2 Transactions

CMPSCI 677 Distributed and Operating Systems, Spring 2019. Lecture 15: March 28. Lecturer: Prashant Shenoy.

15.1 Overview

This section covers the following topics: Distributed Transactions: ACID Properties, Concurrency Control, Serializability, Two-Phase Locking.

15.2 Transactions

Transactions are used widely in databases as they provide atomicity (all or nothing property). Atomicity is the property in which operations inside a transaction all execute successfully or none of the operations execute. Thus, if a process crashes during a transaction, all operations that happened during that transaction must be undone. Transactions are usually implemented using locks. Without locks there would be interleaving of operations from two transactions, causing overwrites and inconsistencies. It has to look like all instructions/operations of one transaction are executed at once, while ensuring other external instructions/operations are not executing at the same time. This is especially important in real-world applications such as banking applications.

15.2.1 ACID

Most transaction systems will provide the following properties:

• Atomicity: All or nothing (all instructions execute or none; cannot have some instructions execute and some do not).
• Consistency: Transaction takes system from one consistent (valid) state to another.
• Isolated: Transaction executes as if it is the only transaction in the system. Ensures that the concurrent execution of transactions results in a system state that would be obtained if transactions were executed sequentially (serializability).
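The all-or-nothing behaviour and the use of locks described in the lecture excerpt above can be illustrated with a minimal Python sketch. It is not taken from the lecture notes: the `Bank` class, the `InsufficientFunds` exception and the account names are hypothetical, and a single in-process lock stands in for a real lock manager.

```python
import threading


class InsufficientFunds(Exception):
    """Hypothetical error raised when a transfer would overdraw an account."""


class Bank:
    """Toy in-memory store; names and structure are illustrative assumptions."""

    def __init__(self, balances):
        self.balances = dict(balances)
        self._lock = threading.Lock()  # one coarse lock serialises all transfers

    def transfer(self, src, dst, amount):
        # Holding the lock prevents operations of two transfers from interleaving.
        with self._lock:
            snapshot = dict(self.balances)  # state to restore on failure
            try:
                self.balances[src] -= amount
                if self.balances[src] < 0:
                    raise InsufficientFunds(src)
                self.balances[dst] += amount
            except Exception:
                # Undo every change made so far: all or nothing.
                self.balances = snapshot
                raise


bank = Bank({"alice": 100, "bob": 0})
bank.transfer("alice", "bob", 30)
print(bank.balances)
```

A coarse lock like this gives isolation only by running transfers one at a time; the two-phase locking covered in the lecture allows more concurrency by locking individual items instead.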

Distributed Transactions

Distributed Systems 600.437: Distributed Transactions. Department of Computer Science, The Johns Hopkins University. Yair Amir, Fall 16 / Lecture 6.

Further reading:
• Concurrency Control and Recovery in Database Systems. P. A. Bernstein, V. Hadzilacos, and N. Goodman. Addison Wesley, 1987.
• Transaction Processing: Concepts and Techniques. Jim Gray and Andreas Reuter. Morgan Kaufmann Publishers, 1993.

Transaction Processing System

[Figure: a transaction processing system (TPS) with clients, messages to the outside world, real actions (firing a missile), and a database.]

Basic Definition

Transaction: a collection of operations on the physical and abstract application state, with the following properties:
• Atomicity.
• Consistency.
• Isolation.
• Durability.
The ACID properties of a transaction.

Atomicity

Changes to the state are atomic:
- A jump from the initial state to the result state without any observable intermediate state.
- All or nothing (Commit / Abort) semantics.
- Changes include:
  - Database changes.
  - Messages to outside world.
  - Actions on transducers (testable / untestable).

Consistency

- The transaction is a correct transformation of the state. This means that the transaction is a correct program.

Isolation

Even though transactions execute concurrently, it appears to the outside observer as if they execute in some serial order. Isolation is required to guarantee consistent input, which is needed for a consistent program to provide consistent output.

Durability

- Once a transaction completes successfully (commits), its changes to the state survive failures (what is the failure model?).

PS Non-Standard Database Systems Overview

PS Non-Standard Database Systems: Overview. Daniel Kocher, March 2019.

This document gives a brief overview on three categories of non-standard database systems (DBS) in the context of this class. For each category, we provide you with a motivation, a discussion about the key properties, a list of commonly used implementations (proprietary and open-source/freely-available), and recommendation(s) for your project. For your convenience, we also recap some relevant terms (e.g., ACID, OLTP, ...).

Terminology

OLTP: Online transaction processing. OLTP systems are highly optimized to process (1) short queries (operating only on a small portion of the database) and (2) small transactions (inserting/updating few tuples per relation). Typically, OLTP includes insertions, updates, and deletions. The main focus of OLTP systems is on low response time and high throughput (in terms of transactions per second). Databases in OLTP systems are highly normalized [5].

OLAP: Online analytical processing. OLAP systems analyze complex data (usually from a data warehouse). Typically, queries in an OLAP system may run for a long time (as opposed to queries in OLTP systems) and the number of transactions is usually low. Furthermore, typical OLAP queries are read-only and touch a large portion of the database (e.g., scan or join large tables). The main focus of OLAP systems is on low response time. Databases in OLAP systems are often de-normalized intentionally [5].

ACID: Traditional relational database systems guarantee ACID properties:
– Atomicity: All or no operation(s) of a transaction are reflected in the database.
– Consistency: Isolated execution of a transaction preserves consistency of the database.

A Study of the Availability and Serializability in a Distributed Database System

A STUDY OF THE AVAILABILITY AND SERIALIZABILITY IN A DISTRIBUTED DATABASE SYSTEM. David Wai-Lok Cheung. B.Sc., Chinese University of Hong Kong, 1971; M.Sc., Simon Fraser University, 1985.

A THESIS SUBMITTED IN PARTIAL FULFILLMENT OF THE REQUIREMENTS FOR THE DEGREE OF DOCTOR OF PHILOSOPHY in the School of Computing Science. © David Wai-Lok Cheung 1988. SIMON FRASER UNIVERSITY, January 1988. All rights reserved. This thesis may not be reproduced in whole or in part, by photocopy or other means, without permission of the author.

APPROVAL

Name: David Wai-Lok Cheung
Degree: Doctor of Philosophy
Title of Thesis: A Study of the Availability and Serializability in a Distributed Database System
Examining Committee:
Chairperson: Dr. Binay Bhattacharya
Senior Supervisor: Dr. Tiko Kameda
Dr. Arthur Lee Liestman
Dr. Wo-Shun Luk
Dr. Jia-Wei Han
External Examiner: Toshihide Ibaraki, Department of Applied Mathematics and Physics, Kyoto University, Japan
Date Approved: January 15, 1988

PARTIAL COPYRIGHT LICENSE

I hereby grant to Simon Fraser University the right to lend my thesis, project or extended essay (the title of which is shown below) to users of the Simon Fraser University Library, and to make partial or single copies only for such users or in response to a request from the library of any other university, or other educational institution, on its own behalf or for one of its users. I further agree that permission for multiple copying of this work for scholarly purposes may be granted by me or the Dean of Graduate Studies. It is understood that copying or publication of this work for financial gain shall not be allowed without my written permission.

ABSTRACT

Replication of data objects enhances the reliability and availability of a distributed database system.

Performance Analysis of Blockchain Platforms

UNLV Theses, Dissertations, Professional Papers, and Capstones, August 2018.

Performance Analysis of Blockchain Platforms. Pradip Singh Maharjan.

Follow this and additional works at: https://digitalscholarship.unlv.edu/thesesdissertations. Part of the Computer Sciences Commons.

Repository Citation: Maharjan, Pradip Singh, "Performance Analysis of Blockchain Platforms" (2018). UNLV Theses, Dissertations, Professional Papers, and Capstones. 3367. http://dx.doi.org/10.34917/14139888

This Thesis is protected by copyright and/or related rights. It has been brought to you by Digital Scholarship@UNLV with permission from the rights-holder(s). You are free to use this Thesis in any way that is permitted by the copyright and related rights legislation that applies to your use. For other uses you need to obtain permission from the rights-holder(s) directly, unless additional rights are indicated by a Creative Commons license in the record and/or on the work itself. This Thesis has been accepted for inclusion in UNLV Theses, Dissertations, Professional Papers, and Capstones by an authorized administrator of Digital Scholarship@UNLV. For more information, please contact [email protected].

PERFORMANCE ANALYSIS OF BLOCKCHAIN PLATFORMS. By Pradip S. Maharjan. Bachelor of Computer Engineering, Tribhuvan University, Institute of Engineering, Pulchowk Campus, Nepal, 2012. A thesis submitted in partial fulfillment of the requirements for the Master of Science in Computer Science. Department of Computer Science, Howard R. Hughes College of Engineering, The Graduate College, University of Nevada, Las Vegas, August 2018. © Pradip S. Maharjan, 2018. All Rights Reserved.

Thesis Approval. The Graduate College, The University of Nevada, Las Vegas. May 4, 2018. This thesis prepared by Pradip S. [...]

Evaluating and Comparing Oracle Database Appliance Performance Updated for Oracle Database Appliance X8-2-HA

Evaluating and Comparing Oracle Database Appliance Performance: Updated for Oracle Database Appliance X8-2-HA. ORACLE WHITE PAPER | JANUARY 2020

DISCLAIMER

The performance results in this paper are intended to give an estimate of the overall performance of the Oracle Database Appliance X8-2-HA system. The results are not a benchmark, and cannot be used to characterize the relative performance of Oracle Database Appliance systems from one generation to another, as the workload characteristics and other environmental factors including firmware, OS, and database patches, which includes Spectre/Meltdown fixes, will vary over time. For an accurate comparison to another platform, you should run the same tests, with the same OS (if applicable) and database versions, patch levels, etc. Do not rely on older tests or benchmark runs, as changes made to the underlying platform in the interim may substantially impact results.

Table of Contents

Introduction and Executive Summary
  Audience
  Objective
Oracle Database Appliance Deployment Architecture
Oracle Database Appliance Configuration Templates
What is Swingbench?
  Swingbench Download and Setup
Configuring Oracle Database Appliance for Testing
  Configuring Oracle Database Appliance System for Testing
Benchmark Setup
  Database Setup
  Schema Setup
  Workload Setup and Execution
Benchmark Results
  Workload Performance
  Database and Operating System Statistics
  Average CPU Busy
  REDO Writes
  Transaction Rate
Important Considerations for Performing the Benchmark
Conclusion
Appendix A: Swingbench configuration files
Appendix B: The loadgen.pl file
Appendix C: The generate_awr.sh script
References

Introduction and Executive Summary

Oracle Database Appliance X8-2-HA is a highly available Oracle database system.

An Alternative Model to Overcoming Two Phase Commit Blocking Problem

International Journal of Computer (IJC), ISSN 2307-4523 (Print & Online). © Global Society of Scientific Research and Researchers. http://ijcjournal.org/

An Alternative Model to Overcoming Two Phase Commit Blocking Problem

Hassan Jafar Sheikh Salah (a, *), Dr. Richard M. Rimiru (b), Dr. Michael W. Kimwele (c)
(a, b, c) Computer Science, Jomo Kenyatta University of Agriculture and Technology, Kenya

Abstract

In distributed transactions, the atomicity requirement of the atomic commitment protocol should be preserved. The two-phase commit (2PC) protocol is widely used to ensure that transactions in a distributed environment are atomic. However, the algorithm has a main drawback: it is blocking. The system gets stuck when participating sites or the coordinator itself crash. To address this problem, a number of algorithms related to the 2PC protocol have been proposed, such as a backup coordinator and a technique whereby the algorithm passes through 3PC during failure. However, both algorithms face limitations such as multiple site failures and backup coordinator failures. Therefore, we proposed an alternative model that overcomes the blocking problem by combining the two. The algorithm was simulated using the Bitronix transaction manager (BTM) with the Eclipse IDE and MySQL. In this paper, we assessed the performance of the alternative model and found that this algorithm handled site and coordinator failures in distributed transactions using hybridization (a combination of two algorithms) with minimal communication messages.
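To make the blocking problem in the abstract above concrete, here is a minimal Python sketch of plain two-phase commit. It is an illustration under stated assumptions, not the alternative model the paper proposes: the `Participant` class, its `will_commit` flag and the `two_phase_commit` function are hypothetical names, and all messages are modelled as in-process method calls with no failures or timeouts.

```python
from enum import Enum


class Vote(Enum):
    COMMIT = "commit"
    ABORT = "abort"


class Participant:
    """Hypothetical participant site; will_commit simulates its local outcome."""

    def __init__(self, name, will_commit=True):
        self.name = name
        self.will_commit = will_commit
        self.state = "init"

    def prepare(self):
        # Phase 1: vote. A site that votes COMMIT may no longer abort on its own.
        if self.will_commit:
            self.state = "prepared"
            return Vote.COMMIT
        self.state = "aborted"
        return Vote.ABORT

    def commit(self):
        self.state = "committed"

    def abort(self):
        self.state = "aborted"


def two_phase_commit(participants):
    """Coordinator logic: commit only if every participant votes COMMIT."""
    # Phase 1: collect votes from all participants.
    votes = [p.prepare() for p in participants]
    decision = Vote.COMMIT if all(v is Vote.COMMIT for v in votes) else Vote.ABORT
    # Phase 2: broadcast the decision.
    for p in participants:
        if decision is Vote.COMMIT:
            p.commit()
        else:
            p.abort()
    return decision


sites = [Participant("site1"), Participant("site2", will_commit=False)]
print(two_phase_commit(sites), [p.state for p in sites])
```

The blocking window sits between the two phases: if the coordinator crashed after collecting votes but before broadcasting the decision, every participant left in the `prepared` state would have to wait, since it can neither commit nor abort unilaterally.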

Transaction Management in the R* Distributed Database Management System

Transaction Management in the R* Distributed Database Management System. C. MOHAN, B. LINDSAY, and R. OBERMARCK, IBM Almaden Research Center.

This paper deals with the transaction management aspects of the R* distributed database system. It concentrates primarily on the description of the R* commit protocols, Presumed Abort (PA) and Presumed Commit (PC). PA and PC are extensions of the well-known, two-phase (2P) commit protocol. PA is optimized for read-only transactions and a class of multisite update transactions, and PC is optimized for other classes of multisite update transactions. The optimizations result in reduced intersite message traffic and log writes, and, consequently, a better response time. The paper also discusses R*'s approach toward distributed deadlock detection and resolution.

Categories and Subject Descriptors: C.2.4 [Computer-Communication Networks]: Distributed Systems (distributed databases); D.4.1 [Operating Systems]: Process Management (concurrency; deadlocks; synchronization); D.4.7 [Operating Systems]: Organization and Design (distributed systems); D.4.5 [Operating Systems]: Reliability (fault tolerance); H.2.0 [Database Management]: General (concurrency control); H.2.2 [Database Management]: Physical Design (recovery and restart); H.2.4 [Database Management]: Systems (distributed systems; transaction processing); H.2.7 [Database Management]: Database Administration (logging and recovery)

General Terms: Algorithms, Design, Reliability

Additional Key Words and Phrases: Commit protocols, deadlock victim selection

1. INTRODUCTION

R* is an experimental, distributed database management system (DDBMS) developed and operational at the IBM San Jose Research Laboratory (now renamed the IBM Almaden Research Center) [18, 20]. In a distributed database system, the actions of a transaction (an atomic unit of consistency and recovery [13]) may occur at more than one site.

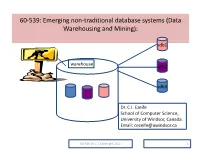

60-539: Emerging Non-Traditional Database Systems (Data Warehousing and Mining)

60-539: Emerging non-traditional database systems (Data Warehousing and Mining). Dr. C.I. Ezeife, School of Computer Science, University of Windsor, Canada. Email: [email protected]. © 2021.

[Figure: source databases sdb1, sdb2 and sdb3 feeding a warehouse.]

PART I (DATABASE MANAGEMENT SYSTEM OVERVIEW)

DBMS (PART 1) OVERVIEW

• Components of a DBMS
• DBMS Data model
• Data Definition and Manipulation Language
• File Organization Techniques
• Query Optimization and Evaluation Facility
• Database Design and Tuning
• Transaction Processing
• Concurrency Control
• Database Security and Integrity Issues

DBMS OVERVIEW (What are?)

• What is a database? It is a collection of data, typically describing the activities of one or more related organizations, e.g., a University, an airline reservation or a banking database.
• What is a DBMS? A DBMS is a set of software for creating, querying, managing and keeping databases. Examples of DBMSs are DB2, Informix, Sybase, Oracle, MySQL, Microsoft Access (relational).
• Alternative to Databases: Storing all data for university, airline and banking information in separate files and writing a separate program for each data file.
• What is a Data Warehouse? A subject-oriented, historical, non-volatile database integrating a number of data sources.
• What is Data Mining? Data analysis for finding interesting trends or patterns in large datasets to guide decisions about future activities.

DBMS OVERVIEW (Evolution of Information Technology)

• 1960s and earlier: Primitive file processing: data are collected in files and manipulated with programs in Cobol and other languages. Disadvantage: any change in storage structure of data requires changing the program (i.e., no logical or physical data independence).

Implementing Distributed Transactions

Implementing Distributed Transactions. Chapter 24.

Distributed Transaction

• A distributed transaction accesses resource managers distributed across a network.
• When resource managers are DBMSs we refer to the system as a distributed database system.

[Figure: an application program connected to a DBMS at Site 1 and a DBMS at Site 2.]

Distributed Database Systems

• Each local DBMS might export
  – stored procedures, or
  – an SQL interface.
• In either case, operations at each site are grouped together as a subtransaction and the site is referred to as a cohort of the distributed transaction.
  – Each subtransaction is treated as a transaction at its site.
• Coordinator module (part of TP monitor) supports ACID properties of distributed transaction.
  – Transaction manager acts as coordinator.

ACID Properties

• Each local DBMS
  – supports ACID properties locally for each subtransaction (just like any other transaction that executes there);
  – eliminates local deadlocks.
• The additional issues are:
  – Global atomicity: all cohorts must abort or all commit.
  – Global deadlocks: there must be no deadlocks involving multiple sites.
  – Global serialization: distributed transaction must be globally serializable.

Atomic Commit Protocol

[Figure: an application, a transaction manager (coordinator) and resource managers (cohorts), with labelled steps (1) tx_begin, (3) xa_reg, (4) tx_commit, (5) atomic commit protocol.]

Global Atomicity

• All subtransactions of a distributed transaction must commit or all must abort.
• An atomic commit protocol, initiated by a coordinator (e.g., the transaction manager), ensures this.
  – Coordinator [...]