UC Berkeley CS61C Fall 2018 Final Exam Answers

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Virtual Memory

Chapter 4 Virtual Memory

Linux processes execute in a virtual environment that makes it appear as if each process had the entire address space of the CPU available to itself. This virtual address space extends from address 0 all the way to the maximum address. On a 32-bit platform, such as IA-32, the maximum address is 2^32 − 1 or 0xffffffff. On a 64-bit platform, such as IA-64, this is 2^64 − 1 or 0xffffffffffffffff.

While it is obviously convenient for a process to be able to access such a huge address space, there are really three distinct, but equally important, reasons for using virtual memory.

1. Resource virtualization. On a system with virtual memory, a process does not have to concern itself with the details of how much physical memory is available or which physical memory locations are already in use by some other process. In other words, virtual memory takes a limited physical resource (physical memory) and turns it into an infinite, or at least an abundant, resource (virtual memory).
2. Information isolation. Because each process runs in its own address space, it is not possible for one process to read data that belongs to another process. This improves security because it reduces the risk of one process being able to spy on another process and, e.g., steal a password.
3. Fault isolation. Processes with their own virtual address spaces cannot overwrite each other’s memory. This greatly reduces the risk of a failure in one process triggering a failure in another process. That is, when a process crashes, the problem is generally limited to that process alone and does not cause the entire machine to go down.

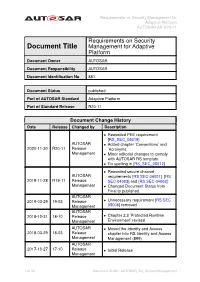

Requirements on Security Management for Adaptive Platform AUTOSAR AP R20-11

Requirements on Security Management for Adaptive Platform AUTOSAR AP R20-11

Document Title: Requirements on Security Management for Adaptive Platform
Document Owner: AUTOSAR
Document Responsibility: AUTOSAR
Document Identification No: 881
Document Status: published
Part of AUTOSAR Standard: Adaptive Platform
Part of Standard Release: R20-11

Document Change History

| Date | Release | Changed by | Description |
|---|---|---|---|
| 2020-11-30 | R20-11 | AUTOSAR Release Management | Reworded PEE requirement [RS_SEC_05019]; added chapter ’Conventions’ and ’Acronyms’; minor editorial changes to comply with AUTOSAR RS template |
| 2019-11-28 | R19-11 | AUTOSAR Release Management | Fix spelling in [RS_SEC_05012]; reworded secure channel requirements [RS SEC 04001], [RS SEC 04003] and [RS SEC 04003]; changed Document Status from Final to published |
| 2019-03-29 | 19-03 | AUTOSAR Release Management | Unnecessary requirement [RS SEC 05006] removed |
| 2018-10-31 | 18-10 | AUTOSAR Release Management | Chapter 2.3 ’Protected Runtime Environment’ revised |
| 2018-03-29 | 18-03 | AUTOSAR Release Management | Moved the Identity and Access chapter into RS Identity and Access Management (899) |
| 2017-10-27 | 17-10 | AUTOSAR Release Management | Initial Release |

Disclaimer

This work (specification and/or software implementation) and the material contained in it, as released by AUTOSAR, is for the purpose of information only. AUTOSAR and the companies that have contributed to it shall not be liable for any use of the work. The material contained in this work is protected by copyright and other types of intellectual property rights. The commercial exploitation of the material contained in this work requires a license to such intellectual property rights.

Harnessing Numerical Flexibility for Deep Learning on Fpgas.Pdf

WHITE PAPER: FPGA Inline Acceleration
Harnessing Numerical Flexibility for Deep Learning on FPGAs

Authors: Andrew C. Ling, Mohamed S. Abdelfattah, Andrew Bitar, David Han, Roberto Dicecco, Suchit Subhaschandra, Chris N Johnson, Dmitry Denisenko, Josh Fender

Abstract

Deep learning has become a key workload in the data center and the edge, leading to a race for dominance in this space. FPGAs have shown they can compete by combining deterministic low latency with high throughput and flexibility. In particular, FPGAs' bit-level programmability can efficiently implement arbitrary precisions and numeric data types critical in the fast evolving field of deep learning. In this paper, we explore FPGA minifloat implementations (floating-point representations with non-standard exponent and mantissa sizes), and show the use of a block-floating-point implementation that shares the exponent across many numbers, reducing the logic required to perform floating-point operations. The paper shows this technique can significantly improve FPGA performance with no impact to accuracy, reduce logic utilization by 3X, and memory bandwidth and capacity required by more than 40%.†

Introduction

Deep neural networks have proven to be a powerful means to solve some of the most difficult computer vision and natural language processing problems since their successful introduction to the ImageNet competition in 2012 [14]. This has led to an explosion of workloads based on deep neural networks in the data center and the edge [2].

One of the key challenges with deep neural networks is their inherent computational complexity, where many deep nets require billions of operations to perform a single inference.
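The block-floating-point idea in the abstract (one exponent shared across many numbers) can be illustrated with a small C sketch. This is not the white paper's implementation: the function name `bfp_quantize`, the 8-bit signed mantissa width, and the round-half-away-from-zero rule are all assumptions chosen for illustration, and the inputs are assumed to be finite, normal-range floats.

```c
#include <stddef.h>
#include <stdint.h>

/*
 * Hypothetical block-floating-point quantizer (illustrative only).
 * Stores n floats as 8-bit signed mantissas that share one exponent,
 * so that x[i] is approximately mant[i] * 2^exponent.
 * Returns the shared exponent.
 */
int bfp_quantize(const float *x, size_t n, int8_t *mant) {
    /* Find the largest magnitude in the block. */
    float max_abs = 0.0f;
    for (size_t i = 0; i < n; i++) {
        float a = x[i] < 0.0f ? -x[i] : x[i];
        if (a > max_abs) max_abs = a;
    }
    if (max_abs == 0.0f) {
        for (size_t i = 0; i < n; i++) mant[i] = 0;
        return 0;
    }

    /* Pick a power-of-two scale that places max_abs in [64, 128). */
    int exponent = 0;
    float scale = 1.0f;
    while (max_abs * scale >= 128.0f) { scale *= 0.5f; exponent++; }
    while (max_abs * scale < 64.0f)   { scale *= 2.0f; exponent--; }

    /* Scale, round half away from zero, and clamp to the int8 range. */
    for (size_t i = 0; i < n; i++) {
        float v = x[i] * scale;
        int q = (int)(v + (v >= 0.0f ? 0.5f : -0.5f));
        if (q > 127) q = 127;
        if (q < -127) q = -127;
        mant[i] = (int8_t)q;
    }
    return exponent;
}
```

For the block {1.0, 0.5, -0.25, 0.0} the sketch returns a shared exponent of -6 with mantissas 64, 32, -16, and 0. Because every element uses the same exponent, per-element work reduces to integer arithmetic on the mantissas, which is the logic saving the abstract refers to.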

The Hexadecimal Number System and Memory Addressing

APPENDIX C The Hexadecimal Number System and Memory Addressing

Understanding the number system and the coding system that computers use to store data and communicate with each other is fundamental to understanding how computers work. Early attempts to invent an electronic computing device met with disappointing results as long as inventors tried to use the decimal number system, with the digits 0–9. Then John Atanasoff proposed using a coding system that expressed everything in terms of different sequences of only two numerals: one represented by the presence of a charge and one represented by the absence of a charge. The numbering system that can be supported by the expression of only two numerals is called base 2, or binary; it was invented by Ada Lovelace many years before, using the numerals 0 and 1. Under Atanasoff’s design, all numbers and other characters would be converted to this binary number system, and all storage, comparisons, and arithmetic would be done using it. Even today, this is one of the basic principles of computers. Every character or number entered into a computer is first converted into a series of 0s and 1s. Many coding schemes and techniques have been invented to manipulate these 0s and 1s, called bits for binary digits.

The most widespread binary coding scheme for microcomputers, which is recognized as the microcomputer standard, is called ASCII (American Standard Code for Information Interchange). (Appendix B lists the binary code for the basic 127-character set.) In ASCII, each character is assigned an 8-bit code called a byte.

Midterm-2020-Solution.Pdf

HONOR CODE
Questions Sheet.

A. Lets C. [6 Points]
1. What type of address (heap, stack, static, code) does each value evaluate to: Book1, Book1->name, Book1->author, &Book2? [4]
2. Will all of the print statements execute as expected? If NO, write the print statement which will not execute as expected. [2]

B. Mystery [8 Points]
3. When the above code executes, which line is modified? How many times? [2]
4. What is the value of register a6 at the end? [2]
5. What is the value of register a4 at the end? [2]
6. In one sentence, what is this program calculating? [2]

C. C-to-RISC V Tree Search; Fill in the blanks below [12 points]

D. RISCV - The MOD operation [8 points]
19. The data segment starts at address 0x10000000. What are the memory locations modified by this program and what are their values?

E. Floating Point [8 points]
20. What is the smallest nonzero positive value that can be represented? Write your answer as a numerical expression in the answer packet. [2]
21. Consider some positive normalized floating point number where p is represented as: What is the distance (i.e. the difference) between p and the next-largest number after p that can be represented? [2]
22. Now instead let p be a positive denormalized number described as p = 2^y × 0.significand. What is the distance between p and the next largest number after p that can be represented? [2]
23. Sort the following minifloat numbers. [2]

F. Numbers. [5]
24. What is the smallest number that this system can represent 6 digits (assume unsigned)? [1]
25.

2018-19 MAP 160 Byte File Layout Specifications

2018-19 MAP 160 Byte File Layout Specifications

OVERVIEW:

A) ISAC will provide an Eligibility Status File (ESF) record for each student to all schools listed as a college choice on the student’s Student Aid Report (SAR). The ESF records will be available daily as Record Type = 7. ESF records may be retrieved via the File Extraction option in MAP.

B) Schools will transmit Payment Requests to ISAC via File Transfer Protocol (FTP) using the MAP 160-byte layout and identify these with Record Type = 4.

C) When payment requests are processed, ISAC will provide payment results to schools through the MAP system. The payment results records can be retrieved in the 160-byte format using the MAP Payment Results File Extraction Option. MAP results records have a Record Type = 5. The MAP Payment Results file contains some eligibility status data elements. Also, the same student record may appear on both the Payment Results and the Eligibility Status extract files. Schools may also use the Reports option in MAP to obtain payment results.

D) To cancel Payment Requests, the school with the current Payment Results record on ISAC's Payment Database must transmit a matching record with MAP Payment Request Code = C, with the Requested Award Amount field equal to zero and the Enrollment Hours field equal to 0 along with other required data elements. These records must be transmitted to ISAC as Record Type = 4.

E) Summary of Data Element Changes, revision (highlighted in grey) made to the 2018-19 layout. NONE

F) The following 160-byte record layout will be used for transmitting data between schools and ISAC.

POINTER (IN C/C++) What Is a Pointer?

POINTER (IN C/C++)

What is a pointer?
A variable in a program is something with a name, the value of which can vary. The compiler and linker handle this by assigning a specific block of memory within the computer to hold the value of that variable.
• The left side is the value in memory.
• The right side is the address of that memory.

Dereferencing:
• int bar = *foo_ptr; // copy the value foo_ptr points to into bar
• *foo_ptr = 42; // set foo to 42; bar keeps the value it copied earlier
• To dereference ted, go to memory address 1776; the value stored there is 25, which is what we need.

Differences between & and *
& is the reference operator and can be read as "address of".
* is the dereference operator and can be read as "value pointed by".
A variable referenced with & can be dereferenced with *.
• andy = 25;
• ted = &andy;
All expressions below are true (assuming andy is stored at address 1776):
• andy == 25 // true
• &andy == 1776 // true
• ted == 1776 // true
• *ted == 25 // true

How to declare a pointer?
• Type + "*" + name of variable.
• Example: int * number;
• char * c;
• number or c is a variable that is called a pointer variable.

How to use a pointer?
• int foo;
• int *foo_ptr = &foo;
• foo_ptr is declared as a pointer to int. We have initialized it to point to foo.
• foo occupies some memory. Its location in memory is called its address. &foo is the address of foo.

Assignment and pointer:
• int *foo_pr = 5; // wrong: 5 is a value, not an address
• int foo = 5;
• int *foo_pr = &foo; // correct way

Change the pointer to the next memory block:
• int foo = 5;
• int *foo_pr = &foo;
• foo_pr++;

Pointer arithmetic (sizes are those of the platform the notes assume; long is 8 bytes on many 64-bit systems)
• char *mychar; // sizeof 1 byte
• short *myshort; // sizeof 2 bytes
• long *mylong; // sizeof 4 bytes
• mychar++; // increase by 1 byte
• myshort++; // increase by 2 bytes
• mylong++; // increase by 4 bytes

Incrementing a pointer is different from incrementing the value it points to:
• *P++; // read the value at P, then advance the pointer to the next element
• (*P)++; // get the value from the address of P, then increase that value by 1

Arrays:
• int array[] = {45,46,47};
• We can refer to the first element in the array by writing *array or array[0].
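The pointer rules in the notes above can be checked with a short C sketch. The function names (`short_stride`, `read_then_advance`, `increment_target`) are hypothetical and exist only for this illustration; the sketch measures the stride with `sizeof` rather than assuming a fixed size, since type sizes vary by platform.

```c
#include <stddef.h>

/* Byte distance covered by advancing a short pointer by one element. */
size_t short_stride(void) {
    short buf[2] = {0, 0};
    short *p = buf;
    short *q = p + 1;                       /* where p++ would land */
    return (size_t)((char *)q - (char *)p); /* measured in bytes */
}

/* *p++ reads the current element, then advances the pointer. */
int read_then_advance(void) {
    int array[] = {45, 46, 47};
    int *p = array;
    int first = *p++;        /* first is array[0]; p now points at array[1] */
    return first * 100 + *p; /* combine both reads into one result */
}

/* (*p)++ increments the pointed-to value; the pointer itself does not move. */
int increment_target(void) {
    int array[] = {45, 46, 47};
    int *p = array;
    (*p)++;
    return array[0];
}
```

With the array {45, 46, 47}, `read_then_advance()` returns 4546 (45 read first, then 46 after the pointer moved), and `increment_target()` returns 46. `short_stride()` returns `sizeof(short)`, which is 2 on most current platforms.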

Common Object File Format (COFF)

Application Report SPRAAO8, April 2009
Common Object File Format

ABSTRACT

The assembler and link step create object files in common object file format (COFF). COFF is an implementation of an object file format of the same name that was developed by AT&T for use on UNIX-based systems. This format encourages modular programming and provides powerful and flexible methods for managing code segments and target system memory. This appendix contains technical details about the Texas Instruments COFF object file structure. Much of this information pertains to the symbolic debugging information that is produced by the C compiler. The purpose of this application note is to provide supplementary information on the internal format of COFF object files.

Topics
1 COFF File Structure
2 File Header Structure
3 Optional File Header Format
4 Section Header Structure
5 Structuring Relocation Information
6 Symbol Table Structure and Content

Bits and Bytes

BITS AND BYTES

To understand how a computer works, you need to understand the BINARY SYSTEM. The binary system is a numbering system that uses only two digits: 0 and 1. Although this may seem strange to humans, it fits the computer perfectly! A computer chip is made up of circuits. For each circuit, there are two possibilities: an electric current flows through the circuit (ON), or an electric current does not flow through the circuit (OFF). The number 1 represents an “on” circuit. The number 0 represents an “off” circuit. The two digits, 0 and 1, are called bits. The word bit comes from binary digit:

Binary digit = bit

Every time the computer “reads” an instruction, it translates that instruction into a series of bits (0’s and 1’s). In most computers every letter, number, and symbol is translated into eight bits, a combination of eight 0’s and 1’s. For example, the letter A is translated into 01000001. The letter B is 01000010. Every single keystroke on the keyboard translates into a different combination of eight bits. A group of eight bits is called a byte. Therefore, a byte is a combination of eight 0’s and 1’s.

Eight bits = 1 byte

The capacity of computer memory and of storage such as USB devices and DVDs is measured in bytes. For example, a Word file might be 35 KB while a picture taken by a digital camera might be 4.5 MB. Hard drives normally are measured in GB or TB:

Kilobyte (KB): approximately 1,000 bytes
Megabyte (MB): approximately 1,000,000 (million) bytes
Gigabyte (GB): approximately 1,000,000,000 (billion) bytes
Terabyte (TB): approximately 1,000,000,000,000 (trillion) bytes

The binary code that computers use is called the ASCII (American Standard Code for Information Interchange) code.
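The letter-to-bits translation described above can be reproduced with a short C sketch. The helper name `byte_to_bits` is hypothetical, and the sketch assumes the compiler uses an ASCII-compatible character set, as virtually all current ones do.

```c
/*
 * Hypothetical helper: write the eight bits of c into out as the
 * characters '0' and '1', most significant bit first, followed by
 * a terminating NUL. out must have room for 9 chars.
 */
void byte_to_bits(unsigned char c, char out[9]) {
    for (int i = 0; i < 8; i++) {
        /* 0x80 is binary 10000000; shifting it right tests each bit in turn. */
        out[i] = (c & (0x80u >> i)) ? '1' : '0';
    }
    out[8] = '\0';
}
```

Calling `byte_to_bits('A', buf)` fills `buf` with "01000001", and `byte_to_bits('B', buf)` gives "01000010", matching the two examples in the text.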

Virtual Memory - Paging

Virtual memory - Paging
Johan Montelius, KTH, 2020

The process: [Figure: memory layout for a 32-bit Linux process; labels: code (.text), data, heap, stack, kernel; addresses 0x00000000, 0xC0000000, 0xffffffff]

Segments, a possible solution: [Figure: processes in virtual space; address translation by MMU (base and bounds); physical memory]

One problem: External fragmentation: areas of free space in physical memory that are hard to utilize. Solution: allocate larger segments ... which leads to internal fragmentation.

Another problem: We’re reserving physical memory that is not used. [Figure: the used part of a process's virtual space (code) mapped to physical memory, with the rest of the reserved physical memory marked "not used?"]

Let’s try again: It’s easier to handle fixed size memory blocks. Can we map a process virtual space to a set of equal size blocks? An address is interpreted as a virtual page number (VPN) and an offset.

Remember the segmented MMU: [Figure: the virtual address is split into an index and an offset; the index selects an entry in the segment table; if the offset is within bounds, it is added to form the physical address, otherwise the MMU raises an exception]

The paging MMU: [Figure: the virtual address is split into a VPN and an offset; the VPN is looked up in the page table; if the page is available, the physical address is formed, otherwise the MMU raises an exception]

The MMU: [Figure: virtual address → Segmentation (within bounds, else exception) → linear address → Paging (page available, else exception) → physical address]

A note on the x86 architecture: The x86-32 architecture supports both segmentation and paging. A virtual address is translated to a linear address using a segmentation table. The linear address is then translated to a physical address by paging. Linux and Windows do not use segmentation to separate code, data, or stack. The x86-64 (the 64-bit version of the x86 architecture) has dropped many features for segmentation.
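The paging step in the slides above (split the address into a VPN and an offset, look the VPN up, raise an exception if the page is not available) can be sketched in C. The slides do not state a page size or a page-table format, so the 4 KiB page size, the single-level table, and the names `struct pte`, `vpn_of`, `offset_of`, and `translate` are all assumptions made for illustration.

```c
#include <stdint.h>

#define PAGE_SHIFT 12u                        /* assumption: 4 KiB pages */
#define PAGE_MASK  ((1u << PAGE_SHIFT) - 1u)

/* Hypothetical page-table entry: a frame number plus a "present" flag. */
struct pte {
    uint32_t frame;
    int present;
};

/* Upper bits of the address select the page. */
uint32_t vpn_of(uint32_t vaddr) { return vaddr >> PAGE_SHIFT; }

/* Lower bits are the byte offset inside the page. */
uint32_t offset_of(uint32_t vaddr) { return vaddr & PAGE_MASK; }

/*
 * Translate vaddr through a flat page table with n_entries entries.
 * Returns 0 and stores the physical address in *paddr on success,
 * or -1 when the page is not available (the "exception" path).
 */
int translate(const struct pte *table, uint32_t n_entries,
              uint32_t vaddr, uint32_t *paddr) {
    uint32_t vpn = vpn_of(vaddr);
    if (vpn >= n_entries || !table[vpn].present) {
        return -1;
    }
    *paddr = (table[vpn].frame << PAGE_SHIFT) | offset_of(vaddr);
    return 0;
}
```

Under these assumptions, the address 0xC0001234 has VPN 0xC0001 and offset 0x234. If page 1 maps to frame 7, the virtual address 0x1ABC translates to the physical address 0x7ABC: the offset passes through unchanged and only the page number is replaced.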

Virtual Memory in X86

Fall 2017 :: CSE 306
Virtual Memory in x86
Nima Honarmand

x86 Processor Modes
• Real mode: walks and talks like a really old x86 chip
  • State at boot
  • 20-bit address space, direct physical memory access
  • 1 MB of usable memory
  • No paging
  • No user mode; processor has only one protection level
• Protected mode: standard 32-bit x86 mode
  • Combination of segmentation and paging
  • Privilege levels (separate user and kernel)
  • 32-bit virtual address
  • 32-bit physical address
  • 36-bit if Physical Address Extension (PAE) feature enabled
• Long mode: 64-bit mode (aka amd64, x86_64, etc.)
  • Very similar to 32-bit mode (protected mode), but bigger address space
  • 48-bit virtual address space
  • 52-bit physical address space
  • Restricted segmentation use
• Even more obscure modes we won’t discuss today

xv6 uses protected mode w/o PAE (i.e., 32-bit virtual and physical addresses)

Virt. & Phys. Addr. Spaces in x86
• Both RAM and hardware devices (disk, NIC, etc.) are connected to the system bus
• Mapped to different parts of the physical address space by the BIOS
• You can talk to a device by performing read/write operations on its physical addresses
• Devices are free to interpret reads/writes in any way they want (driver knows)
[Figure: Processor (Core, MMU, Cache) with Virtual Addr, Physical Addr, and Data paths; System Interconnect (Bus) connecting DRAM (Memory), Network Card, …, Disk; legend: all addrs virtual / all addrs physical]

Virt-to-Phys Translation in x86
[Figure: 0xdeadbeef (Virtual Address) → Segmentation → 0x0eadbeef (Linear Address) → Paging → 0x6eadbeef (Physical Address); Protected/Long mode only]
• Segmentation cannot be disabled!
• But can be made a no-op (a.k.a.

Address Translation

CS 152 Computer Architecture and Engineering
Lecture 8 - Address Translation
John Wawrzynek
Electrical Engineering and Computer Sciences, University of California at Berkeley
http://www.eecs.berkeley.edu/~johnw
http://inst.eecs.berkeley.edu/~cs152
9/27/2016 CS152, Fall 2016

CS152 Administrivia
§ Lab 2 due Friday
§ PS 2 due Tuesday
§ Quiz 2 next Thursday!

Last time in Lecture 7
§ 3 C’s of cache misses: Compulsory, Capacity, Conflict
§ Write policies: write back, write-through, write-allocate, no write allocate
§ Multi-level cache hierarchies reduce miss penalty
  – 3 levels common in modern systems (some have 4!)
  – Can change design tradeoffs of L1 cache if known to have L2
§ Prefetching: retrieve memory data before CPU request
  – Prefetching can waste bandwidth and cause cache pollution
  – Software vs hardware prefetching
§ Software memory hierarchy optimizations: loop interchange, loop fusion, cache tiling

Bare Machine
[Figure: pipeline (PC, Inst. Cache, Decode, Execute, Memory/Data Cache, Writeback) connected by physical addresses to the Memory Controller and Main Memory (DRAM)]
§ In a bare machine, the only kind of address is a physical address

Absolute Addresses
EDSAC, early 50’s
§ Only one program ran at a time, with unrestricted access to entire machine (RAM + I/O devices)
§ Addresses in a program depended upon where the program was to be loaded in memory
§ But it was more convenient for programmers to write location-independent subroutines
How