The Turning, Stretching and Boxing Technique: a Direction Worth Looking Towards

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

EVGA Geforce GT 730

EVGA GeForce GT 730 Part Number: 01G-P3-3736-KR Speed up your PC experience when you upgrade from integrated graphics to the new NVIDIA GeForce GT 730 dedicated card. Enjoy all your videos and pictures at HD resolutions, with faster video editing, faster photo editing, and faster web browsing compared to integrated graphics. Discover faster gaming performance than integrated graphics, making all your gameplay richer and smoother. You can even get the latest drivers and optimize game settings with a single click using GeForce Experience. The GeForce GT 730 is everything you need for a better, faster PC experience. SPECIFICATIONS KEY FEATURES REQUIREMENTS Base Clock: 700 MHZ NVIDIA PhysX technology 300 Watt or greater power supply with a Memory Clock: 3200 MHz Effective PCI Express 2.0 support minimum of 20 Amp on the +12 volt rail.**** CUDA Cores: 96 NVIDIA SMX Engine PCI Express, PCI Express 2.0 or PCI Express Bus Type: PCI-E 2.0 NVIDIA Adaptive Vertical Sync 3.0 compliant motherboard with one graphics Memory Detail: 1024MB GDDR5 Microsoft DirectX 12 API (feature level 11_0) slot. Memory Bit Width: 128 Bit Support Windows 8 32/64bit, Windows 7 32/64bit, Windows Vista 32/64bit, Windows XP 32/64bit Memory Bandwidth: 51.2 GB/s NVIDIA 3D Vision Ready*** NVIDIA CUDA Technology DIMENSIONS OpenGL 4.4 Support OpenCL Support Height: 4.376in - 111.15mm NVIDIA FXAA Technology Length: 6.096in - 154.84mm NVIDIA TXAA Technology NVIDIA PureVideo HD Technology **Support for HDMI includes GPU-accelerated Blu-ray 3D support (Blu-ray 3D playback requires the purchase of a compatible software player from CyberLink, ArcSoft, Corel, or Sonic), x.v.Color, HDMI Deep Color, and 7.1 digital surround sound. -

Rendering with 3D Vision Stereo Or Mono: More Case Studies

Outline NVIDIA 3D Vision™ Stereoscopic driver & HW display solutions Stereoscopy Basics Definitions and equations Rendering with 3D Vision What & how to render in stereo mode Issues and Solutions Issues encountered in real games and our solutions gameworks.nvidia.com What does it offer? How it works? gameworks.nvidia.com 3D Movies 120 Hz LCDs Dimensionalized Experience 3D Pictures 3D DLP HDTVs 3D Games 3D Projectors The programmability of the GPU allows NVIDIA to import any 3D data format and decode, convert, or transform the data for viewing on a 3D-Ready displays. 3D Webcast Anaglyph 3D gameworks.nvidia.com NVIDIA 3D Vision Stereo Support GeForce Stereo Driver Vista & Win7 D3D9 / D3D10 Quadro GeForce features Professional OpenGL Stereo Quad Buffer Multiple synchronized stereo displays Multi-platform 3D Vision and many other stereo displays gameworks.nvidia.com NVIDIA 3D Vision NVIDIA 3D Vision Solutions NVIDIA 3D Vision Discover NVIDIA 3D Vision Bundled with select NVIDIA GPUs for a sneak Sold as a complete kit for full HD Availability peak at stereoscopic 3D stereoscopic 3D 3D Glasses type NVIDIA optmized anaglyph (red/cyan) Wireless Shutter glasses Anaglyph with optimized color and image 3D Mode Page flip 120 Hz & checkerboard pattern 3D processing on the GPU Color Fidelity Limited Color Full Color Display requirements All desktop LCD and CRT displays 3D-Vision-Ready displays NVIDIA GeForce GPU GeForce 8 series and higher GeForce 8 series and higher Microsoft Windows Vista Microsoft Windows Vista Operating System Microsoft Windows 7 Microsoft Windows 7 View 3D pictures Y Y Watch 3D movies Y Y Play real-time 3D games Y Y 3D consumer applicaiton Y Y gameworks.nvidia.com NVIDIA 3D Vision How It Works 3D game data is sent to stereoscopic driver The driver takes the 3D game data and renders each scene twice – once for the left eye and once for the right eye. -

NVIDIA's Opengl Functionality

NVIDIANVIDIA ’’ss OpenGLOpenGL FunctionalityFunctionality Session 2127 | Room A5 | Monday, September, 20th, 16:00 - 17:20 San Jose Convention Center, San Jose, California Mark J. Kilgard • Principal System Software Engineer – OpenGL driver – Cg (“C for graphics”) shading language • OpenGL Utility Toolkit (GLUT) implementer • Author of OpenGL for the X Window System • Co-author of Cg Tutorial Outline • OpenGL’s importance to NVIDIA • OpenGL 3.3 and 4.0 • OpenGL 4.1 • Loose ends: deprecation, Cg, further extensions OpenGL Leverage Cg Parallel Nsight SceniX CompleX OptiX Example of Hybrid Rendering with OptiX OpenGL (Rasterization) OptiX (Ray tracing) Parallel Nsight Provides OpenGL Profiling Configure Application Trace Settings Parallel Nsight Provides OpenGL Profiling Magnified trace options shows specific OpenGL (and Cg) tracing options Parallel Nsight Provides OpenGL Profiling Parallel Nsight Provides OpenGL Profiling Trace of mix of OpenGL and CUDA shows glFinish & OpenGL draw calls OpenGL In Every NVIDIA Business OpenGL on Quadro – World class OpenGL 4 drivers – 18 years of uninterrupted API compatibility – Workstation application certifications – Workstation application profiles – Display list optimizations – Fast antialiased lines – Largest memory configurations: 6 gigabytes – GPU affinity – Enhanced interop with CUDA and multi-GPU OpenGL – Advanced multi-GPU rendering – Overlays – Genlock – Unified Back Buffer for less framebuffer memory usage – Cross-platform • Windows XP, Vista, Win7, Linux, Mac, FreeBSD, Solaris – SLI Mosaic – -

NVIDIA 3D Vision on Quadro Professional Graphics Boards

NVIDIA 3D Vision on Quadro Professional Graphics Boards NVIDIA® Quadro® professional graphics boards support many stereo display solutions and Quad Buffered OpenGL applications, including NVIDIA 3D Vision glasses and displays. Support for NVIDIA 3D Vision is available on Windows XP, Vista and 7 both 32 and 64 bit versions. Supported Boards Recommended: Professional, flicker free, stereo with a direct connection between the stereo connector on the board to the 3D Vision emitter Quadro FX 5800 Quadro FX 4800 Quadro FX 3800– with stereo connector Quadro FX 5600 Quadro FX 4700 X2 Quadro FX 4600 Quadro FX 3700 Glasses are controlled through USB, occasional flickering of the glasses may occur Quadro FX 1800 – VBIOS must be 62.94.71.00.00 or newer, contact your board provider if you need an update Quadro FX 580 - VBIOS must be 62.94.71.00.00 or newer, contact your board provider if you need an update Quadro FX 380 Quadro FX 1700 Quadro FX 570 Quadro FX 370 Supported displays are listed here. Installation Download and install the R186.16 (or newer) driver Download and install the 3D Vision USB Driver for 3D Vision glasses: Windows Vista 32/64 and Windows 7 32/64 XP 32/64 driver coming soon For Quadro boards with a stereo connector, you can use the 3-pin mini-din to 1/8” stereo cable that comes with your 3D Vision kit to connect the graphics board to the emitter. This cable provides a direct connection from the GPU to the glasses to make sure they are switching at the correct time. -

NVIDIA Trademark and Logo Usage Guidelines

NVIDIA Trademark and Logo Usage Guidelines The NVIDIA Trademark and Logo Usage Guidelines includes information regarding when it is appropriate to use each of the trademarks and logos, when to use various versions of the logo, the correct way to use the trademarks in text, minimum sizing and spacing requirements, and color details. General Requirements for Logo Use NVIDIA Logo Primarily used to show a relationship with NVIDIA, such as a joint sponsorship at an event or partnership. Product Logos These include NVIDIA, GeForce GTX, GeForce RTX, Quadro, Quadro RTX, SHIELD, Tesla, Tegra, and NVS. May be used to promote partner products that include the corresponding NVIDIA products. Technology Logos These include PureVideo, PhysX, 3D Vision, GPU Boost, TXAA, Maximus. Other technology logos require separate license agreements at this time. • May be used to promote hardware products that include the corresponding technology as well as software products that support the technology. (Note: PureVideo HD, 3D Vision, and Maximus require a separate agreement.) Program Logos This includes “Graphics by NVIDIA®”. Other program logos require separate license agreements at this time. “Graphics by NVIDIA” may be used to promote products that contain NVIDIA GPUs (GeForce or Quadro). Permission must be granted by NVIDIA prior to use. Related stickers are available for use on PC systems. In general, multiple NVIDIA logos may not be used by licensees unless express permission has been granted by NVIDIA in writing or through current guidelines. NVIDIA Trademark and Logo Usage Guidelines - 2019 Use of NVIDIA Trademarks in Partner Product Names As part of the licensing agreement, NVIDIA allows partners to use the GeForce GTX trademarks in partner product names when certain guidelines are followed. -

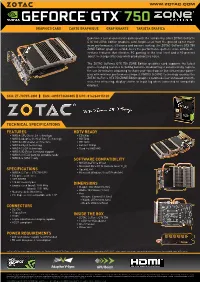

Technical Specifications Features Specifications

WWW.ZOTAC.COM GRAPHICS CARD CARTE GRAPHIQUE GRAFIKKARTE TARJETA GRAFICA Experience a next-generation gaming with the completely silent ZOTAC GeForce GTX 750 ZONE Edition graphics card. Engineered from the ground up for maxi- mum performance, efficiency and passive cooling, the ZOTAC GeForce GTX 750 ZONE Edition graphics card delivers the performance gamers crave with best- in-class features that elevates PC gaming to the next level and a 60-percent boost in energy-efficiency while producing zero noise. The ZOTAC GeForce GTX 750 ZONE Edition graphics card supports the latest game-changing features including GeForce ShadowPlay to automatically capture the last 20-minutes of gaming to share your epic frags or live stream your game- play with minimal performance impact. NVIDIA G-SYNC technology enables the ZOTAC GeForce GTX 750 ZONE Edition graphics card to deliver unrivaled smooth- ness free of tearing, display stutter or input lag when connected to compatible displays. SKU: ZT-70707-20M EAN: 4895173604805 UPC: 816264015120 TECHNICAL SPECIFICATIONS FEATURES HDTV READY • NVIDIA GPU Boost 2.0 technology • ED 480p • NVIDIA Adaptive Vertical Sync Technology • HD 720p • NVIDIA SMX shader architecture • HD 1080i • NVIDIA PhysX technology • Full HD 1080p • NVIDIA CUDA technology • Quad Full HD (4K) • NVIDIA 3D Vision Surround support • NVIDIA SHIELD gaming-portable ready • NVIDIA G-SYNC ready SOFTWARE COMPATIBILITY • NVIDIA GeForce driver • Microsoft DirectX 12 (feature level 11_0) SPECIFICATIONS • OpenGL 4.4 • NVIDIA GeForce GTX 750 GPU • Microsoft -

Nvidia Corporation from Super Phones to Supercomputers

NVIDIA CORPORATION FROM SUPER PHONES TO SUPERCOMPUTERS NVIDIA awakened the world to the power of interactive experiences. NVIDIA has shipped NVIDIA IN BRIEF computer graphics when it invented the GPU over one billion GPUs. And the rising Founded in 1993 in 1999. These multi-core processors enable importance of computer graphics is fueling Headquartered in Santa Clara, Calif. PC gamers to enjoy fully immersive worlds, growth. It’s estimated that by 2015, two and professionals to design everything from billion computers a year will ship with GPUs. 6,800 employees in 20 countries shampoo bottles to airplanes. Their parallel Fastest semiconductor company processing capabilities provide researchers A CULTURE OF REINVENTION to reach $1 billion in revenue and scientists with the ability to efficiently ounded in 1993, NVIDIA has continuously $3.5 billion in revenue in FY11 run high-performance applications, and reinvented itself to delight users and shape 1,900 patents they are deployed in supercomputing sites the industry. From our roots in PC graphics, around the world. More recently, NVIDIA we expanded into professional graphics and 1 billion GPUs shipped has moved into the fast-growing mobile high-performance computing. Our recent 10 million Tegras shipped computing market, where its processors move into mobile computing puts us at the power phones and tablets, as well as auto center of one of the industry’s fastest- infotainment systems. The company holds growing segments. more than 2,100 issued patents worldwide, including ones covering ideas essential to A PASSIONATE FOLLOWING modern computing. The passionate drive that fuels our company is most powerfully reflected back from our GPUS: THE ENGINES OF users. -

GUÍA RÁPIDA Todas Lasotras Marcas Registradas Sonpropiedad Desusrespectivos Dueños.Todos Los Derechos Reservados

PROCESADORES GRÁFICOS CANAL DE ESCRITORIO | ALINEACIÓN | ABR13 NVIDIA PARA PC DE ESCRITORIO Juegos 3D Vision Medios en HD Rendimiento GUÍA RÁPIDA ® ™ Unidad de Procesamiento Gráfico Reemplaza (GPU) Placa AMD DirectX PhysX GPU Boost TXAA NVIDIA SLI Experiencia en Juegos NVIDIA Surround Juegos en 3D Mirar HD Edición y Creación en HD Aplicaciones Recomendadas CUDA Núcleos Configuración de Memoria Estándar Rendimiento 3D Vision GeForce GTX TITAN 11 2.0 Triple 5760x1080 5760x1080 2688 6144 MB GDDR5 Surround 3D Vision GEFORCE ALINEACIÓN DE GPU GeForce GTX 690 11 1.0 Quad 3072 4096 MB GDDR5 Surround 1920x1080 y Más 3D Vision 1920x1080 GeForce GTX 680 HD 7970 11 1.0 Triple 1536 2048 MB GDDR5 @ Config Surround @ Config. Max Max 3D Vision GeForce GTX 670 HD 7950 11 1.0 Triple 1344 2048 MB GDDR5 Surround Artes Digitales: Adobe CS6 NVIDIA GEFORCE GTX GeForce GTX 660 Ti HD 7870 11 1.0 Triple Edición de Videos: 1344 2048 MB GDDR5 Surround MotionDSP 1920x1080 vReveal HD NVIDIA GeForce GTX 660 HD 7850 11 1.0 Doble @ Config 1920x1080 960 2048 MB GDDR5 Surround Alta NVIDIA GeForce GTX 650 Ti BOOST HD 7790 11 1.0 2-way 768 2048 MB GDDR5 Surround NVIDIA GeForce GTX 650 Ti HD 7770 11 768 1024 MB GDDR5 1920x1080 Surround @ Config 1680x1050 Media NVIDIA GeForce GTX 650 HD 7750 11 384 1024 MB GDDR5 Surround NVIDIA GeForce GT 640 HD 6670 11 1680x1050 384 2048 MB DDR3 Surround Muestra de Fotos: Muvee Reveal 1024 MB GDDR5 GeForce GT 630 HD 6570 11 96 Etiquetado de Fotos: 1024 MB DDR3 Cyberlink MediaShow No GEFORCE GT GeForce GT 620 HD 6450 11 1440x900 Conversión de Medios: 96 1024 MB DDR3 Recomendado Cyberlink MediaShow Espresso GeForce GT 610 HD 6450 11 Edición de Video: 48 1024 MB DDR3 Cyberlink PowerDirector GeForce 210 HD 5450 10.1 1280x720 16 512 MB / 1024 MB DDR2 1- La reproducción de Blu-ray 3D requiere un software reproductor de Blu-ray 3D compatible de compañías como CyberLink y Arcsoft. -

Nvidia Geforce Go 6100 Xp Driver Indir

Nvidia geforce go 6100 xp driver indir Click on the following links for the driver package readme info: /extract/ This package supports the following driver models:NVIDIA GeForce Go Download the latest drivers for your NVIDIA GeForce Go to keep your OS: Windows 10, Windows , Windows 7, Windows Vista, Windows XP. NVIDIA GeForce® Go® 6 graphics processing units (GPUs) empower a The NVIDIA GeForce Go / GPU and the NVIDIA nForce Go MCP are an. Install nVidia GeForce Go driver for Windows XP x86, or download DriverPack Solution software for automatic driver installation and update. Load and install nVidia GeForce Go video card drivers for Windows 7, XP, 10, 8, and , or download DriverPack Solution software for automatic driver. NVIDIA GeForce Go Free Driver Download for Windows XP - Averatec__NVIDIA_GeForce_Go_zip. World's most popular driver download site. Download the Vista 32bit drivers for both GeForce Go graphics and for all these drivers are miraculously available via Windows Update!! This page contains the driver installation download for NVIDIA GeForce Go Driver for HCL Infosystems Limited - T12M working on Microsoft Windows XP. Nvidia Geforce Go Driver for Windows 7 32 bit, Windows 7 64 bit, Windows 10, 8, XP. Uploaded on 4/20/, downloaded times, receiving a 79/ The video card is a nVIDIA GeForce Go (integrated laptop). I did find the NVIDIA GeForce Go drivers for XP @ the website, but for some reason ?det= Update your computer's drivers using DriverMax, the free driver update tool - Display Adapters - NVIDIA - NVIDIA GeForce Go Computer Driver Updates. NVIDIA GeForce Go + Win7 x86 Hi thelittlelim, Welcome to the forums, download the driver from here Gateway Support - Downloads. -

Technical Specifications Features

WWW.ZOTAC.COM GRAPHICS CARD CARTE GRAPHIQUE GRAFIKKARTE TARJETA GRAFICA Experience PC gaming like never before with surreal visuals and world-class performance with the ZOTAC GeForce GTX 780. The ZOTAC GeForce GTX 780 ushers in the next generation of PC gaming with unmatched visual realism and performance for the most demanding gamers and enthusiasts. NVIDIA 3D Vision Surround technology enables the ZOTAC GeForce GTX 780 to drive up to three displays for high-quality triple display gaming with immersive stereoscopic 3D effects. NVIDIA 3-Way SLI readiness lets the most demanding enthusiast gamers combine up to three ZOTAC GeForce GTX 780 graphics cards for up to a 280-percent performance boost. SKU: ZT-70201-10P EAN: 4895173602269 UPC: 816264013843 TECHNICAL SPECIFICATIONS FEATURES HDTV READY • NVIDIA GPU Boost 2.0 technology • ED 480p • NVIDIA Adaptive Vertical Sync Technology • HD 720p • NVIDIA SMX shader architecture • HD 1080i • NVIDIA PhysX technology • Full HD 1080p • NVIDIA CUDA technology • Quad Full HD (4K) • NVIDIA 3D Vision Surround support • NVIDIA SLI ready (3-way-SLI) • NVIDIA SHIELD gaming-portable ready SOFTWARE COMPATIBILITY • NVIDIA GeForce driver • Microsoft DirectX 11.1 (feature level 11_0) SPECIFICATIONS • OpenGL 4.3 • NVIDIA GeForce GTX 780 GPU • Microsoft Windows Vista/7/8 x86/x64 • 2,304 processor cores • 3GB GDDR5 • 384-bit memory bus DIMENSIONS • Engine clock (base): 863 MHz • Height: 111.15mm (4.376in) (boost): 900 MHz • Width: 266.7mm (10.5in) • Memory clock: 6008 MHz • Box • PCI Express 3.0 (compatible with -

How to Use NVIDIA 3D Vision with EDIUS 3D Preview Version

APPLICATION NOTE How To Use NVIDIA 3D Vision with EDIUS 3D Preview Version December 2011 Grass Valley EDIUS 6 3D preview version supports real-time 3D editing and 3D monitoring using NVIDIA 3D Vision glasses on Windows 7 and Windows Vista. www.grassvalley.com HOW TO USE NVIDIA 3D VISION WITH EDIUS 3D PREVIEW VERSION Introduction The EDIUS 3D preview version of Grass Valley™ EDIUS® 6 supports simultaneous real-time 3D editing and 3D moni- toring using NVIDIA 3D Vision glasses, on Windows 7 and Windows Vista systems. The 3D Vision glasses are not required for editing in 3D, only for viewing in stereoscopic 3D (you can change EDIUS to 3D editing mode, edit 3D clips, and preview in side-by-side or L-only/R-only modes and output to monitors), but if you have 3D Vision glasses and supported monitors, you will be able to monitor in frame sequential 3D mode while editing. Preparation Supported Hardware 1) NVIDIA 3D Vision Wireless Glasses Kit: http://www.nvidia.com/object/product_geforce_3D_VisionKit_us.html Note: NVIDIA had a wireless version of 3D Vision, but on their webpage they now only have a wired version avail- able (3D Vision Pro is wireless). Both versions (wired and wireless) will work, but you may want to get the wireless version while available. 2) Supported GPU cards and monitors: GeForce GPUs: http://www.nvidia.com/object/3d-vision-requirements.html Quadro GPUs: http://www.nvidia.com/object/3d-vision-pro-requirements.html Setting up the NVIDIA 3D Vision 1) Install latest NVIDIA GPU drivers: http://www.nvidia.com/drivers 2) Set up the USB emitter, 3D glasses, and monitors according to the NVIDIA driver wizard. -

Palit Geforce GTX 770 OC

Palit GeForce GTX 770 OC •OC Edition •Increase 7% DX11 performance* •7Gbps memory-the fastest ever on a GPU •GPU Boost 2.0 •NVIDIA SHIELD gaming portable ready •Palit Innovation Design •Copper Base •6-phase core PWM + 2-phase memory PWM •DrMOS : Better Power Efficiency •Better Overclocking Performance •2 slot cooler ready for 3 way SLI •Better thermal design, lower acoustic level •Overclocking Utility: ThunderMaster •*Unigine Heaven v2.5 (DX11) 1920x1200 None AA/4AF GeForce GTX 770 •NVIDIA® TXAA™ technology •NVIDIA GPU Boost 2.0 NE5X770S1042-1045F •NVIDIA PhysX® technology GK104 •NVIDIA FXAA™ technology •NVIDIA Adaptive Vertical Sync 28nm •NVIDIA Surround™ 1536 cores •Support for four concurrent displays including: •Two dual-link DVI 2048 MB •HDMI 256 bit •DisplayPort 1.2 •Microsoft DirectX 11.1 API (feature level 11_0) GDDR5 •NVIDIA SHIELD™-Ready 1085 MHz •NVIDIA 3D Vision®-Ready •NVIDIA SLI®-Ready 1137 MHz •NVIDIA CUDA® technology 3505MHz (DDR 7010MHz) •PCI Express 3.0 support •OpenGL 4.3 support 224.3 (GB/Sec) 229 W DisplayPort 1.2 HDMI 1.4a © 2013 NVIDIA Corporation. NVIDIA, the NVIDIA logo, and GeForce are registered trademarks of NVIDIA Corporation in the U.S. and other countries. All rights reserved. Graphics card require: PCI Express-compliant motherboard with one dual-width x16 Dual-Link DVI-I graphics slot One 8-pin and one 6-pin PCI Express supplementary power connectors Dual-Link DVI-D Minimum 600W or greater system power supply (with a minimum 12V current rating of 42A) Driver Disc Manual Power Cable DVI-CRT Adapter Supported Operating Systems: Windows 8, Windows 7, Windows Vista or Windows XP Operating System (32 or 64-bit) PCB: 254mm(L) x 112mm(W) Box: 400x270x72mm Board: 280mm (L) x 112mm(W) Retail Pack: 10 pcs per carton Cooler :2 slot Bracket: 2 slot GTX 770_K1045B_F206 .