Representing Unordered Data Using Complex-Weighted Multiset Automata

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Principle of Inclusion-Exclusion

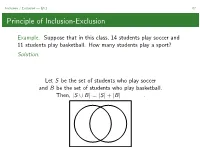

Inclusion / Exclusion, §3.1: Principle of Inclusion-Exclusion

Example. Suppose that in this class, 14 students play soccer and 11 students play basketball. How many students play a sport? Solution. Let $S$ be the set of students who play soccer and $B$ be the set of students who play basketball. Then $|S \cup B| = |S| + |B| - |S \cap B|$.

When $A = A_1 \cup \cdots \cup A_k \subset U$ ($U$ for universe) and the sets $A_i$ are pairwise disjoint, we have $|A| = |A_1| + \cdots + |A_k|$. When $A = A_1 \cup \cdots \cup A_k \subset U$ and the $A_i$ are not pairwise disjoint, we must apply the principle of inclusion-exclusion to determine $|A|$:

$$|A_1 \cup A_2| = |A_1| + |A_2| - |A_1 \cap A_2|$$

$$|A_1 \cup A_2 \cup A_3| = |A_1| + |A_2| + |A_3| - |A_1 \cap A_2| - |A_1 \cap A_3| - |A_2 \cap A_3| + |A_1 \cap A_2 \cap A_3|$$

$$|A_1 \cup \cdots \cup A_m| = \sum_{i} |A_i| - \sum_{i<j} |A_i \cap A_j| + \sum_{i<j<k} |A_i \cap A_j \cap A_k| - \cdots$$

It may be more convenient to apply inclusion/exclusion where the $A_i$ are forbidden subsets of $U$, in which case …

mmm...PIE. The key to using the principle of inclusion-exclusion is determining the right choice of $A_i$. The $A_i$ and their intersections should be easy to count and easy to characterize.

Notation: $\pi = p_1 p_2 \cdots p_n$ is the one-line notation for a permutation of $[n]$ whose first element is $p_1$, second element is $p_2$, etc.

Example. How many permutations $p = p_1 p_2 \cdots p_n$ are there in which at least one of $p_1$ and $p_2$ is even? Solution. Let $U$ be the set of $n$-permutations. Let $A_1$ be the set of permutations where $p_1$ is even. Let $A_2$ be the set of permutations where $p_2$ is even.
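The permutation example can be checked numerically. The sketch below is an illustration, not part of the original slides: it counts permutations of $[n]$ with $p_1$ or $p_2$ even, once by brute force and once via $|A_1 \cup A_2| = |A_1| + |A_2| - |A_1 \cap A_2|$. The function names are hypothetical, and the closed-form counts for $|A_1|$, $|A_2|$ and $|A_1 \cap A_2|$ are derived here under the assumption $n \ge 2$.

```python
from itertools import permutations
from math import factorial


def count_brute_force(n):
    """Count permutations of [n] in which p1 or p2 (or both) is even."""
    return sum(
        1
        for p in permutations(range(1, n + 1))
        if p[0] % 2 == 0 or p[1] % 2 == 0
    )


def count_inclusion_exclusion(n):
    """Same count via |A1| + |A2| - |A1 ∩ A2| (assumes n >= 2)."""
    evens = n // 2  # number of even values in {1, ..., n}
    a1 = evens * factorial(n - 1)  # p1 even, remaining entries arbitrary
    a2 = evens * factorial(n - 1)  # p2 even, remaining entries arbitrary
    both = evens * (evens - 1) * factorial(n - 2)  # p1, p2 distinct evens
    return a1 + a2 - both


for n in range(2, 7):
    print(n, count_brute_force(n), count_inclusion_exclusion(n))
```

For $n = 4$, for instance, both routes give 20: each of $A_1$ and $A_2$ has 12 elements, and 4 permutations lie in both.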

Simultaneous Diagonalization and SVD of Commuting Matrices

Simultaneous Diagonalization and SVD of Commuting Matrices. Ronald P. Nordgren, Brown School of Engineering, Rice University.

Abstract. We present a matrix version of a known method of constructing common eigenvectors of two diagonalizable commuting matrices, thus enabling their simultaneous diagonalization. The matrices may have simple eigenvalues of multiplicity greater than one. The singular value decomposition (SVD) of a class of commuting matrices also is treated. The effect of row/column permutation is examined. Examples are given.

1 Introduction. It is well known that if two diagonalizable matrices have the same eigenvectors, then they commute. The converse also is true and a construction for the common eigenvectors (enabling simultaneous diagonalization) is known. If one of the matrices has distinct eigenvalues (multiplicity one), it is easy to show that its eigenvectors pertain to both commuting matrices. The case of matrices with simple eigenvalues of multiplicity greater than one requires a more complicated construction of their common eigenvectors. Here, we present a matrix version of a construction procedure given by Horn and Johnson [1, Theorem 1.3.12] and in a video by Sadun [4]. The eigenvector construction procedure also is applied to the singular value decomposition of a class of commuting matrices that includes the case where at least one of the matrices is real and symmetric. In addition, we consider row/column permutation of the commuting matrices. Three examples illustrate the eigenvector construction procedure.

2 Eigenvector Construction. Let $A$ and $B$ be diagonalizable square matrices that commute, i.e.

$$AB = BA \tag{1}$$

and their Jordan canonical forms read $A = S_A D_A S_A^{-1}$, $B = S_B D_B S_B^{-1}$.

MTH 102A - Part 1 Linear Algebra 2019-20-II Semester

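MTH 102A - Part 1 Linear Algebra 2019-20-II Semester. Arbind Kumar Lal, Indian Institute of Technology Kanpur. February 3, 2020.

• Matrix $A = \begin{bmatrix} 1 & 2 \\ 2 & 1 \end{bmatrix}$ behaves like the scalar 3 when multiplied with $x = \begin{bmatrix} 1 \\ 1 \end{bmatrix}$. That is, $\begin{bmatrix} 1 & 2 \\ 2 & 1 \end{bmatrix} \begin{bmatrix} 1 \\ 1 \end{bmatrix} = 3 \begin{bmatrix} 1 \\ 1 \end{bmatrix}$.

• Physically: the linear function $f(x) = Ax$ magnifies the nonzero vector $\begin{bmatrix} 1 \\ 1 \end{bmatrix} \in \mathbb{C}^2$ three (3) times.

• Similarly, $A \begin{bmatrix} 1 \\ -1 \end{bmatrix} = -1 \begin{bmatrix} 1 \\ -1 \end{bmatrix}$. So, $A$ behaves by changing the direction of the vector $\begin{bmatrix} 1 \\ -1 \end{bmatrix}$.

• Take $A = \begin{bmatrix} 1 & 2 \\ 1 & 3 \end{bmatrix}$. Do I have a nonzero $x \in \mathbb{C}^2$ which gets magnified by $A$?

• So I am looking for $x \neq 0$ and $\alpha$ s.t. $Ax = \alpha x$. Using $x \neq 0$, we have $Ax = \alpha x$ if and only if $[\alpha I - A]x = 0$ if and only if $\det[\alpha I - A] = 0$.

• $\det[\alpha I - A] = \det \begin{bmatrix} \alpha - 1 & -2 \\ -1 & \alpha - 3 \end{bmatrix} = \alpha^2 - 4\alpha + 1$. So $\alpha = 2 \pm \sqrt{3}$.

• Take $\alpha = 2 + \sqrt{3}$. To find $x$, solve $\begin{bmatrix} 1 + \sqrt{3} & -2 \\ -1 & \sqrt{3} - 1 \end{bmatrix} x = 0$ using GJE (Gauss-Jordan elimination), for instance. We get $x = \begin{bmatrix} \sqrt{3} - 1 \\ 1 \end{bmatrix}$. Moreover $Ax = \begin{bmatrix} 1 & 2 \\ 1 & 3 \end{bmatrix} \begin{bmatrix} \sqrt{3} - 1 \\ 1 \end{bmatrix} = \begin{bmatrix} \sqrt{3} + 1 \\ 2 + \sqrt{3} \end{bmatrix} = (2 + \sqrt{3}) \begin{bmatrix} \sqrt{3} - 1 \\ 1 \end{bmatrix}$.

• We call $\lambda \in \mathbb{C}$ an eigenvalue of $A_{n \times n}$ if there exists $x \in \mathbb{C}^n$, $x \neq 0$ s.t. $Ax = \lambda x$. We call $x$ an eigenvector of $A$ for the eigenvalue $\lambda$. We call $(\lambda, x)$ an eigenpair.

• If $(\lambda, x)$ is an eigenpair of $A$, then so is $(\lambda, cx)$, for each $c \neq 0$, $c \in \mathbb{C}$.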
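The eigenpair computed in these notes can be verified numerically. The following is a minimal sketch in plain Python; the helper names `mat_vec` and `is_eigenpair` and the tolerance `tol` are assumptions made for this illustration, not part of the lecture notes.

```python
import math


def mat_vec(a, x):
    """Multiply a matrix (list of rows) by a vector (list of entries)."""
    return [sum(a_ij * x_j for a_ij, x_j in zip(row, x)) for row in a]


def is_eigenpair(a, lam, x, tol=1e-9):
    """Return True if x is nonzero and A x equals lam * x up to tol."""
    if all(abs(x_i) <= tol for x_i in x):
        return False  # the zero vector is never an eigenvector
    ax = mat_vec(a, x)
    return all(abs(ax_i - lam * x_i) <= tol for ax_i, x_i in zip(ax, x))


A = [[1.0, 2.0], [1.0, 3.0]]
alpha = 2 + math.sqrt(3)
x = [math.sqrt(3) - 1, 1.0]

print(is_eigenpair(A, alpha, x))                       # True
print(is_eigenpair(A, alpha, [5 * x_i for x_i in x]))  # True: (alpha, c x) is also an eigenpair
```

The second check illustrates the closing remark of the notes: scaling an eigenvector by a nonzero constant gives another eigenvector for the same eigenvalue.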

The Algebra Generated by Three Commuting Matrices

THE ALGEBRA GENERATED BY THREE COMMUTING MATRICES. B.A. SETHURAMAN.

Abstract. We present a survey of an open problem concerning the dimension of the algebra generated by three commuting matrices.

This article concerns a problem in algebra that is completely elementary to state, yet has proven tantalizingly difficult and is as yet unsolved. Consider $\mathbb{C}[A, B, C]$, the $\mathbb{C}$-subalgebra of the $n \times n$ matrices $M_n(\mathbb{C})$ generated by three commuting matrices $A$, $B$, and $C$. Thus, $\mathbb{C}[A, B, C]$ consists of all $\mathbb{C}$-linear combinations of "monomials" $A^i B^j C^k$, where $i$, $j$, and $k$ range from 0 to infinity. Note that $\mathbb{C}[A, B, C]$ and $M_n(\mathbb{C})$ are naturally vector spaces over $\mathbb{C}$; moreover, $\mathbb{C}[A, B, C]$ is a subspace of $M_n(\mathbb{C})$. The problem, quite simply, is this: Is the dimension of $\mathbb{C}[A, B, C]$ as a $\mathbb{C}$ vector space bounded above by $n$?

Note that the dimension of $\mathbb{C}[A, B, C]$ is at most $n^2$, because the dimension of $M_n(\mathbb{C})$ is $n^2$. Asking for the dimension of $\mathbb{C}[A, B, C]$ to be bounded above by $n$ when $A$, $B$, and $C$ commute is to put considerable restrictions on $\mathbb{C}[A, B, C]$: this is to require that $\mathbb{C}[A, B, C]$ occupy only a small portion of the ambient $M_n(\mathbb{C})$ space in which it sits. Actually, the dimension of $\mathbb{C}[A, B, C]$ is already bounded above by something slightly smaller than $n^2$, thanks to a classical theorem of Schur ([16]), who showed that the maximum possible dimension of a commutative $\mathbb{C}$-subalgebra of $M_n(\mathbb{C})$ is $1 + \lfloor n^2/4 \rfloor$.
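To get a feel for the three quantities being compared (the conjectured bound $n$, Schur's bound $1 + \lfloor n^2/4 \rfloor$, and the ambient dimension $n^2$), a small table helps. The sketch below only evaluates the formulas quoted above; the function name `schur_bound` is a label chosen here for illustration.

```python
def schur_bound(n):
    """Schur's maximum dimension of a commutative subalgebra of M_n(C)."""
    return 1 + (n * n) // 4


# Columns: n, conjectured bound n, Schur's bound, ambient dimension n^2.
for n in (2, 3, 4, 10, 100):
    print(n, n, schur_bound(n), n * n)
```

For $n \le 3$ Schur's bound already equals $n$, so the question only has content from $n = 4$ onward, where the bounds read 4, 5 and 16.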


Nearly Commuting Matrices (arXiv:1705.10957v2 [math.AC], 14 Dec 2017)

NEARLY COMMUTING MATRICES. ZHIBEK KADYRSIZOVA.

Abstract. We prove that the algebraic set of pairs of matrices with a diagonal commutator over a field of positive prime characteristic, its irreducible components, and their intersection are $F$-pure when the size of matrices is equal to 3. Furthermore, we show that this algebraic set is reduced and the intersection of its irreducible components is irreducible in any characteristic for pairs of matrices of any size. In addition, we discuss various conjectures on the singularities of these algebraic sets and the system of parameters on the corresponding coordinate rings.

Keywords: Frobenius, singularities, $F$-purity, commuting matrices.

1. Introduction and preliminaries. In this paper we study algebraic sets of pairs of matrices such that their commutator is either nonzero diagonal or zero. We also consider some other related algebraic sets. First let us define relevant notions. Let $X = (x_{ij})_{1 \le i,j \le n}$ and $Y = (y_{ij})_{1 \le i,j \le n}$ be $n \times n$ matrices of indeterminates over a field $K$. Let $R = K[X, Y]$ be the polynomial ring in $\{x_{ij}, y_{ij}\}_{1 \le i,j \le n}$ and let $I$ denote the ideal generated by the off-diagonal entries of the commutator matrix $XY - YX$ and $J$ denote the ideal generated by the entries of $XY - YX$. The ideal $I$ defines the algebraic set of pairs of matrices with a diagonal commutator and is called the algebraic set of nearly commuting matrices. The ideal $J$ defines the algebraic set of pairs of commuting matrices.

Inclusion‒Exclusion Principle

Inclusion–exclusion principle

In combinatorics, the inclusion–exclusion principle (also known as the sieve principle) is an equation relating the sizes of two sets and their union. It states that if $A$ and $B$ are two (finite) sets, then

$$|A \cup B| = |A| + |B| - |A \cap B|.$$

The meaning of the statement is that the number of elements in the union of the two sets is the sum of the numbers of elements in each set, minus the number of elements that are in both. Similarly, for three sets $A$, $B$ and $C$,

$$|A \cup B \cup C| = |A| + |B| + |C| - |A \cap B| - |A \cap C| - |B \cap C| + |A \cap B \cap C|.$$

This can be seen by counting how many times each region in the figure to the right is included in the right hand side. More generally, for finite sets $A_1, \ldots, A_n$, one has the identity

$$\left| \bigcup_{i=1}^{n} A_i \right| = \sum_{i} |A_i| - \sum_{i<j} |A_i \cap A_j| + \sum_{i<j<k} |A_i \cap A_j \cap A_k| - \cdots + (-1)^{n-1} |A_1 \cap \cdots \cap A_n|.$$

This can be compactly written as

$$\left| \bigcup_{i=1}^{n} A_i \right| = \sum_{k=1}^{n} (-1)^{k+1} \left( \sum_{1 \le i_1 < \cdots < i_k \le n} |A_{i_1} \cap \cdots \cap A_{i_k}| \right).$$

The name comes from the idea that the principle is based on over-generous inclusion, followed by compensating exclusion. When $n > 2$ the exclusion of the pairwise intersections is (possibly) too severe, and the correct formula is as shown with alternating signs. This formula is attributed to Abraham de Moivre; it is sometimes also named for Daniel da Silva, Joseph Sylvester or Henri Poincaré.

For the case of three sets $A$, $B$, $C$ the inclusion–exclusion principle is illustrated in the graphic on the right.

Figure: Inclusion–exclusion illustrated for three sets.

Figure: Each term of the inclusion-exclusion formula gradually corrects the count until finally each portion of the Venn diagram is counted exactly once.

Proof. Let $A$ denote the union of the sets $A_1, \ldots, A_n$. To prove the inclusion–exclusion principle in general, we first have to verify the identity …
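The general identity is an alternating sum over all non-empty subfamilies of the sets: subfamilies of odd size are added, those of even size subtracted. As a rough sketch (the function name is a hypothetical label, and the example sets are made up for illustration), it can be evaluated directly and compared with the size of the actual union:

```python
from itertools import combinations


def union_size_by_inclusion_exclusion(sets):
    """Size of the union of finite sets via the alternating sum."""
    total = 0
    for k in range(1, len(sets) + 1):
        sign = 1 if k % 2 == 1 else -1  # add odd-sized groups, subtract even
        for group in combinations(sets, k):
            total += sign * len(set.intersection(*group))
    return total


A = {1, 2, 3, 4}
B = {3, 4, 5}
C = {4, 5, 6, 7}

print(union_size_by_inclusion_exclusion([A, B, C]))  # 7
print(len(A | B | C))                                # 7
```

Here the single sets contribute 4 + 3 + 4 = 11, the pairwise intersections remove 2 + 1 + 2 = 5, and the triple intersection adds back 1. The loop visits every non-empty subfamily, so the cost grows as $2^n$; it is meant as a check of the formula, not as an efficient way to compute a union.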

Multipermutations 1.1. Generating Functions

Journal of Combinatorial Theory, Series A 67, 44-71 (1994). The r-Multipermutations. Seungkyung Park, Department of Mathematics, Yonsei University, Seoul 120-749, Korea. Communicated by Gian-Carlo Rota. Received October 1, 1992.

In this paper we study permutations of the multiset $\{1^r, 2^r, \ldots, n^r\}$, which generalizes Gessel and Stanley's work (J. Combin. Theory Ser. A 24 (1978), 24-33) on certain permutations on the multiset $\{1^2, 2^2, \ldots, n^2\}$. Various formulas counting the permutations by inversions, descents, left-right minima, and major index are derived. © 1994 Academic Press, Inc.

1. THE r-MULTIPERMUTATIONS

1.1. Generating Functions

A multiset is defined as an ordered pair $(S, f)$ where $S$ is a set and $f$ is a function from $S$ to the set of nonnegative integers. If $S = \{m_1, \ldots, m_r\}$, we write $\{m_1^{f(m_1)}, \ldots, m_r^{f(m_r)}\}$ for $(S, f)$. Intuitively, a multiset is a set with possibly repeated elements. Let us begin by considering permutations of multisets $(S, f)$, which are words in which each letter belongs to the set $S$ and for each $m \in S$ the total number of appearances of $m$ in the word is $f(m)$. Thus 1223231 is a permutation of the multiset $\{1^2, 2^3, 3^2\}$. In this paper we consider a special set of permutations of the multiset $[n]^{(r)} = \{1^r, \ldots, n^r\}$, which is defined as follows.

DEFINITION 1.1. An r-multipermutation of the set $\{m_1, \ldots, m_n\}$ is a permutation $a_1 \cdots a_{rn}$ of the multiset $\{m_1^r, \ldots$
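The pair $(S, f)$ maps naturally onto a dictionary from elements to multiplicities. The sketch below covers general multiset permutations only; it does not implement the extra condition that singles out r-multipermutations, since that condition is cut off in the excerpt. The function names are hypothetical, and the counting formula used is the standard multinomial coefficient.

```python
from collections import Counter
from math import factorial


def is_multiset_permutation(word, multiplicities):
    """True if each m appears in word exactly multiplicities[m] times."""
    return Counter(word) == Counter(multiplicities)


def count_multiset_permutations(multiplicities):
    """Number of distinct words: (sum f)! / product of f(m)!."""
    total = factorial(sum(multiplicities.values()))
    for f_m in multiplicities.values():
        total //= factorial(f_m)
    return total


multiset = {1: 2, 2: 3, 3: 2}  # the multiset {1^2, 2^3, 3^2}
word = [1, 2, 2, 3, 2, 3, 1]   # the word 1223231 from the text

print(is_multiset_permutation(word, multiset))  # True
print(count_multiset_permutations(multiset))    # 210
```

The count 210 is $7!/(2!\,3!\,2!)$, the number of distinct rearrangements of the seven letters.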

Lecture 2: Spectral Theorems

Lecture 2: Spectral Theorems

This lecture introduces normal matrices. The spectral theorem will inform us that normal matrices are exactly the unitarily diagonalizable matrices. As a consequence, we will deduce the classical spectral theorem for Hermitian matrices. The case of commuting families of matrices will also be studied. All of this corresponds to section 2.5 of the textbook.

1 Normal matrices

Definition 1. A matrix $A \in M_n$ is called a normal matrix if $AA^* = A^*A$.

Observation: The set of normal matrices includes all the Hermitian matrices ($A^* = A$), the skew-Hermitian matrices ($A^* = -A$), and the unitary matrices ($AA^* = A^*A = I$). It also contains other matrices, e.g. $\begin{bmatrix} 1 & -1 \\ 1 & 1 \end{bmatrix}$, but not all matrices, e.g. $\begin{bmatrix} 1 & 1 \\ 0 & 1 \end{bmatrix}$.

Here is an alternate characterization of normal matrices.

Theorem 2. A matrix $A \in M_n$ is normal iff $\|Ax\|_2 = \|A^*x\|_2$ for all $x \in \mathbb{C}^n$.

Proof. If $A$ is normal, then for any $x \in \mathbb{C}^n$,

$$\|Ax\|_2^2 = \langle Ax, Ax \rangle = \langle x, A^*Ax \rangle = \langle x, AA^*x \rangle = \langle A^*x, A^*x \rangle = \|A^*x\|_2^2.$$

Conversely, suppose that $\|Ax\|_2 = \|A^*x\|_2$ for all $x \in \mathbb{C}^n$. For any $x, y \in \mathbb{C}^n$ and for $\lambda \in \mathbb{C}$ with $|\lambda| = 1$ chosen so that $\operatorname{Re}(\lambda \langle x, (A^*A - AA^*)y \rangle) = |\langle x, (A^*A - AA^*)y \rangle|$, we expand both sides of $\|A(\lambda x + y)\|_2^2 = \|A^*(\lambda x + y)\|_2^2$ to obtain

$$\|Ax\|_2^2 + \|Ay\|_2^2 + 2\operatorname{Re}(\lambda \langle Ax, Ay \rangle) = \|A^*x\|_2^2 + \|A^*y\|_2^2 + 2\operatorname{Re}(\lambda \langle A^*x, A^*y \rangle).$$

Using the facts that $\|Ax\|_2^2 = \|A^*x\|_2^2$ and $\|Ay\|_2^2 = \|A^*y\|_2^2$, we derive

$$0 = \operatorname{Re}(\lambda \langle Ax, Ay \rangle - \lambda \langle A^*x, A^*y \rangle) = \operatorname{Re}(\lambda \langle x, A^*Ay \rangle - \lambda \langle x, AA^*y \rangle) = \operatorname{Re}(\lambda \langle x, (A^*A - AA^*)y \rangle) = |\langle x, (A^*A - AA^*)y \rangle|.$$

Since this is true for any $x \in \mathbb{C}^n$, we deduce $(A^*A - AA^*)y = 0$, which holds for any $y \in \mathbb{C}^n$, meaning that $A^*A - AA^* = 0$, as desired.
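The two example matrices in the Observation can be checked directly against Definition 1. This is a minimal sketch in plain Python with hypothetical helper names. It compares $AA^*$ and $A^*A$ exactly, which is only appropriate for integer (or Gaussian-integer) entries; floating-point data would need a tolerance instead.

```python
def conj_transpose(a):
    """Conjugate transpose A* of a square or rectangular matrix."""
    return [[a[i][j].conjugate() for i in range(len(a))]
            for j in range(len(a[0]))]


def mat_mul(a, b):
    """Plain matrix product of two matrices given as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))]
            for i in range(len(a))]


def is_normal(a):
    """Definition 1: A is normal if A A* equals A* A (exact comparison)."""
    a_star = conj_transpose(a)
    return mat_mul(a, a_star) == mat_mul(a_star, a)


print(is_normal([[1, -1], [1, 1]]))  # True
print(is_normal([[1, 1], [0, 1]]))   # False
```

For the first matrix both products equal $2I$; for the second, $AA^*$ has top-left entry 2 while $A^*A$ has top-left entry 1.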

Basic Operations for Fuzzy Multisets

Basic operations for fuzzy multisets. A. Riesgo (a), P. Alonso (a), I. Díaz (b), S. Montes (c). (a) Department of Mathematics, University of Oviedo, Spain; (b) Department of Informatics, University of Oviedo, Spain; (c) Department of Statistics and O.R., University of Oviedo, Spain.

Abstract. In their original and ordinary formulation, fuzzy sets associate each element in a reference set with one number, the membership value, in the real unit interval [0, 1]. Among the various existing generalisations of the concept, we find fuzzy multisets. In this case, membership values are multisets in [0, 1] rather than single values. Mathematically, they can also be seen as a generalisation of the hesitant fuzzy sets, but in this general environment, the information about repetition is not lost, so that the opinions given by the experts are better managed. Thus, we focus our study on fuzzy multisets and their basic operations: complement, union and intersection. Moreover, we show how the hesitant operations can be worked out from an extension of the fuzzy multiset operations and investigate the important role that the concepts of order and sorting sequences play in the basic difference between these two related approaches.

Keywords: Fuzzy multiset; Hesitant fuzzy set; Complement; Aggregate union; Aggregate intersection.

1. Introduction. The concept of a fuzzy set was originally introduced by Lotfi A. Zadeh as an extension of classical set theory [15]. Fuzzy sets aim to account for real-life situations where there is either limited knowledge or some sort of implicit ambiguity about whether an element should be considered a member of a set. This extension from the classical (or crisp) sets to the fuzzy ones is accomplished by replacing the Boolean characteristic function of a set, which maps each element of the universal set to either 0 (non-member) or 1 (member), with a more sophisticated membership function that maps elements into the real interval [0, 1].

Finding Direct Partition Bijections by Two-Directional Rewriting Techniques

Discrete Mathematics 285 (2004) 151–166, www.elsevier.com/locate/disc. Finding direct partition bijections by two-directional rewriting techniques. Max Kanovich, Department of Computer and Information Science, University of Pennsylvania, 3330 Walnut Street, Philadelphia, PA 19104, USA. Received 7 May 2003; received in revised form 27 October 2003; accepted 21 January 2004.

Abstract. One basic activity in combinatorics is to establish combinatorial identities by so-called 'bijective proofs,' which consist in constructing explicit bijections between two types of the combinatorial objects under consideration. We show how such bijective proofs can be established in a systematic way from the 'lattice properties' of partition ideals, and how the desired bijections are computed by means of multiset rewriting, for a variety of combinatorial problems involving partitions. In particular, we fully characterize all equinumerous partition ideals with 'disjointly supported' complements. This geometrical characterization is proved to automatically provide the desired bijection between partition ideals but in terms of the minimal elements of the order filters, their complements. As a corollary, a new transparent proof, the 'bijective' one, is given for all equinumerous classes of the partition ideals of order 1 from the classical book "The Theory of Partitions" by G. Andrews. Establishing the required bijections involves a two-directional reduction technique, novel in the sense that forward and backward applications of rewrite rules head, respectively, for two different normal forms (representing the two combinatorial types). It is well-known that non-overlapping multiset rules are confluent.

Symmetric Relations and Cardinality-Bounded Multisets in Database Systems

Symmetric Relations and Cardinality-Bounded Multisets in Database Systems. Kenneth A. Ross, Julia Stoyanovich, Columbia University.

Abstract. In a binary symmetric relationship, A is related to B if and only if B is related to A. Symmetric relationships between k participating entities can be represented as multisets of cardinality k. Cardinality-bounded multisets are natural in several real-world applications. Conventional representations in relational databases suffer from several consistency and performance problems. We argue that the database system itself should provide native support for cardinality-bounded multisets. We provide techniques to be implemented by the database engine that avoid the drawbacks, and allow a schema designer to simply declare a table to be symmetric in cer…

1 Introduction. A relation $R$ is symmetric in its first two attributes if $R(x_1, x_2, \ldots, x_n)$ holds if and only if $R(x_2, x_1, \ldots, x_n)$ holds. We call $R(x_2, x_1, \ldots, x_n)$ the symmetric complement of $R(x_1, x_2, \ldots, x_n)$. Symmetric relations come up naturally in several contexts when the real-world relationship being modeled is itself symmetric.

Example 1.1 In a law-enforcement database recording meetings between pairs of individuals under investigation, the "meets" relationship is symmetric.

Example 1.2 Consider a database of web pages. The relationship "X is linked to Y" (by either a forward or backward link) between pairs of web pages is symmetric. This relationship is neither reflexive nor antireflexive, i.e., "X is linked to X" is neither universally true nor universally false.
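The idea that a symmetric relationship among k entities is a multiset of cardinality k can be illustrated at the application level. The sketch below is not the engine-level technique the paper proposes; it is an assumed, simplified stand-in in which each multiset is stored once as a sorted tuple, so a fact and its symmetric complement share one row. The class and method names are hypothetical.

```python
class SymmetricRelation:
    """In-memory symmetric relation over k entities per row."""

    def __init__(self, k):
        self.k = k
        self._rows = set()

    @staticmethod
    def _canonical(entities):
        # A sorted tuple is one canonical form of a multiset: order is
        # discarded but repeated entities (e.g. "X linked to X") are kept.
        return tuple(sorted(entities))

    def add(self, *entities):
        if len(entities) != self.k:
            raise ValueError(f"expected {self.k} entities")
        self._rows.add(self._canonical(entities))

    def holds(self, *entities):
        return (len(entities) == self.k
                and self._canonical(entities) in self._rows)

    def __len__(self):
        return len(self._rows)


meets = SymmetricRelation(2)
meets.add("alice", "bob")
meets.add("bob", "alice")            # symmetric complement, same stored row
print(meets.holds("bob", "alice"))   # True
print(len(meets))                    # 1
```

Storing one canonical row avoids the consistency problem of keeping both orderings in sync, at the cost of normalising every insert and lookup.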

On Low Rank Perturbations of Complex Matrices and Some Discrete Metric Spaces∗

Electronic Journal of Linear Algebra, ISSN 1081-3810. A publication of the International Linear Algebra Society. Volume 18, pp. 302-316, June 2009. ON LOW RANK PERTURBATIONS OF COMPLEX MATRICES AND SOME DISCRETE METRIC SPACES. LEV GLEBSKY AND LUIS MANUEL RIVERA.

Abstract. In this article, several theorems on perturbations of a complex matrix by a matrix of a given rank are presented. These theorems may be divided into two groups. The first group is about spectral properties of a matrix under such perturbations; the second is about almost-near relations with respect to the rank distance.

Key words. Complex matrices, Rank, Perturbations. AMS subject classifications. 15A03, 15A18.

1. Introduction. In this article, we present several theorems on perturbations of a complex matrix by a matrix of a given rank. These theorems may be divided into two groups. The first group is about spectral properties of a matrix under such perturbations; the second is about almost-near relations with respect to the rank distance.

1.1. The first group. Theorem 2.1 gives necessary and sufficient conditions on the Weyr characteristics, [20], of matrices $A$ and $B$ if $\operatorname{rank}(A - B) \le k$. In one direction the theorem is known; see [12, 13, 14]. For $k = 1$ the theorem is a reformulation of a theorem of Thompson [16] (see Theorem 2.3 of the present article). We prove Theorem 2.1 by induction with respect to $k$. To this end, we introduce a discrete metric space (the space of Weyr characteristics) and prove that this metric space is geodesic. In fact, the induction (with respect to $k$) is hidden in this proof (see Proposition 3.6 and Proposition 3.1).
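The rank distance between two matrices is the rank of their difference, so "B is a rank-k perturbation of A" means that this distance is at most k. A minimal sketch follows, assuming rational entries so that Gaussian elimination with `Fraction` is exact; the paper works over the complex numbers, and the function names here are hypothetical.

```python
from fractions import Fraction


def rank(matrix):
    """Rank by exact Gaussian elimination (rational entries assumed)."""
    rows = [[Fraction(v) for v in row] for row in matrix]
    n_cols = len(rows[0]) if rows else 0
    r = 0  # number of pivots found so far
    for c in range(n_cols):
        pivot = next((i for i in range(r, len(rows)) if rows[i][c] != 0), None)
        if pivot is None:
            continue
        rows[r], rows[pivot] = rows[pivot], rows[r]
        for i in range(r + 1, len(rows)):
            factor = rows[i][c] / rows[r][c]
            rows[i] = [x - factor * y for x, y in zip(rows[i], rows[r])]
        r += 1
        if r == len(rows):
            break
    return r


def rank_distance(a, b):
    """d(A, B) = rank(A - B) for matrices of equal shape."""
    return rank([[x - y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)])


I3 = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
B = [[2, 1, 1], [1, 2, 1], [1, 1, 2]]  # I3 plus the all-ones matrix

print(rank_distance(I3, B))  # 1
```

Here $B - I_3$ is the all-ones matrix, which has rank one, so $B$ is a rank-one perturbation of the identity.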