Necessary to Conduct Most Analyses Commercial Software Typically Have (Very Bad) Installers Open Source Software Typically Have

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Introduction to Computer Systems 15-213/18-243, Spring 2009 1St

M4-L3: Executables CSE351, Winter 2021 Executables CSE 351 Winter 2021 Instructor: Teaching Assistants: Mark Wyse Kyrie Dowling Catherine Guevara Ian Hsiao Jim Limprasert Armin Magness Allie Pfleger Cosmo Wang Ronald Widjaja http://xkcd.com/1790/ M4-L3: Executables CSE351, Winter 2021 Administrivia ❖ Lab 2 due Monday (2/8) ❖ hw12 due Friday ❖ hw13 due next Wednesday (2/10) ▪ Based on the next two lectures, longer than normal ❖ Remember: HW and readings due before lecture, at 11am PST on due date 2 M4-L3: Executables CSE351, Winter 2021 Roadmap C: Java: Memory & data car *c = malloc(sizeof(car)); Car c = new Car(); Integers & floats c->miles = 100; c.setMiles(100); x86 assembly c->gals = 17; c.setGals(17); Procedures & stacks float mpg = get_mpg(c); float mpg = Executables free(c); c.getMPG(); Arrays & structs Memory & caches Assembly get_mpg: Processes pushq %rbp language: movq %rsp, %rbp Virtual memory ... Memory allocation popq %rbp Java vs. C ret OS: Machine 0111010000011000 100011010000010000000010 code: 1000100111000010 110000011111101000011111 Computer system: 3 M4-L3: Executables CSE351, Winter 2021 Reading Review ❖ Terminology: ▪ CALL: compiler, assembler, linker, loader ▪ Object file: symbol table, relocation table ▪ Disassembly ▪ Multidimensional arrays, row-major ordering ▪ Multilevel arrays ❖ Questions from the Reading? ▪ also post to Ed post! 4 M4-L3: Executables CSE351, Winter 2021 Building an Executable from a C File ❖ Code in files p1.c p2.c ❖ Compile with command: gcc -Og p1.c p2.c -o p ▪ Put resulting machine code in -

CERES Software Bulletin 95-12

CERES Software Bulletin 95-12 Fortran 90 Linking Experiences, September 5, 1995 1.0 Purpose: To disseminate experience gained in the process of linking Fortran 90 software with library routines compiled under Fortran 77 compiler. 2.0 Originator: Lyle Ziegelmiller ([email protected]) 3.0 Description: One of the summer students, Julia Barsie, was working with a plot program which was written in f77. This program called routines from a graphics package known as NCAR, which is also written in f77. Everything was fine. The plot program was converted to f90, and a new version of the NCAR graphical package was released, which was written in f77. A problem arose when trying to link the new f90 version of the plot program with the new f77 release of NCAR; many undefined references were reported by the linker. This bulletin is intended to convey what was learned in the effort to accomplish this linking. The first step I took was to issue the "-dryrun" directive to the f77 compiler when using it to compile the original f77 plot program and the original NCAR graphics library. "- dryrun" causes the linker to produce an output detailing all the various libraries that it links with. Note that these libaries are in addition to the libaries you would select on the command line. For example, you might compile a program with erbelib, but the linker would have to link with librarie(s) that contain the definitions of sine or cosine. Anyway, it was my hypothesis that if everything compiled and linked with f77, then all the libraries must be contained in the output from the f77's "-dryrun" command. -

Version 7.8-Systemd

Linux From Scratch Version 7.8-systemd Created by Gerard Beekmans Edited by Douglas R. Reno Linux From Scratch: Version 7.8-systemd by Created by Gerard Beekmans and Edited by Douglas R. Reno Copyright © 1999-2015 Gerard Beekmans Copyright © 1999-2015, Gerard Beekmans All rights reserved. This book is licensed under a Creative Commons License. Computer instructions may be extracted from the book under the MIT License. Linux® is a registered trademark of Linus Torvalds. Linux From Scratch - Version 7.8-systemd Table of Contents Preface .......................................................................................................................................................................... vii i. Foreword ............................................................................................................................................................. vii ii. Audience ............................................................................................................................................................ vii iii. LFS Target Architectures ................................................................................................................................ viii iv. LFS and Standards ............................................................................................................................................ ix v. Rationale for Packages in the Book .................................................................................................................... x vi. Prerequisites -

Linking + Libraries

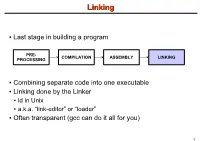

LinkingLinking ● Last stage in building a program PRE- COMPILATION ASSEMBLY LINKING PROCESSING ● Combining separate code into one executable ● Linking done by the Linker ● ld in Unix ● a.k.a. “link-editor” or “loader” ● Often transparent (gcc can do it all for you) 1 LinkingLinking involves...involves... ● Combining several object modules (the .o files corresponding to .c files) into one file ● Resolving external references to variables and functions ● Producing an executable file (if no errors) file1.c file1.o file2.c gcc file2.o Linker Executable fileN.c fileN.o Header files External references 2 LinkingLinking withwith ExternalExternal ReferencesReferences file1.c file2.c int count; #include <stdio.h> void display(void); Compiler extern int count; int main(void) void display(void) { file1.o file2.o { count = 10; with placeholders printf(“%d”,count); display(); } return 0; Linker } ● file1.o has placeholder for display() ● file2.o has placeholder for count ● object modules are relocatable ● addresses are relative offsets from top of file 3 LibrariesLibraries ● Definition: ● a file containing functions that can be referenced externally by a C program ● Purpose: ● easy access to functions used repeatedly ● promote code modularity and re-use ● reduce source and executable file size 4 LibrariesLibraries ● Static (Archive) ● libname.a on Unix; name.lib on DOS/Windows ● Only modules with referenced code linked when compiling ● unlike .o files ● Linker copies function from library into executable file ● Update to library requires recompiling program 5 LibrariesLibraries ● Dynamic (Shared Object or Dynamic Link Library) ● libname.so on Unix; name.dll on DOS/Windows ● Referenced code not copied into executable ● Loaded in memory at run time ● Smaller executable size ● Can update library without recompiling program ● Drawback: slightly slower program startup 6 LibrariesLibraries ● Linking a static library libpepsi.a /* crave source file */ … gcc .. -

Using the DLFM Package on the Cray XE6 System

Using the DLFM Package on the Cray XE6 System Mike Davis, Cray Inc. Version 1.4 - October 2013 1.0: Introduction The DLFM package is a set of libraries and tools that can be applied to a dynamically-linked application, or an application that uses Python, to provide improved performance during the loading of dynamic libraries and importing of Python modules when running the application at large scale. Included in the DLFM package are: a set of wrapper functions that interface with the dynamic linker (ld.so) to cache the application's dynamic library load operations; and a custom libpython.so, with a set of optimized components, that caches the application's Python import operations. 2.0: Dynamic Linking and Python Importing Without DLFM When a dynamically-linked application is executed, the set of dependent libraries is loaded in sequence by ld.so prior to the transfer of control to the application's main function. This set of dependent libraries ordinarily numbers a dozen or so, but can number many more, depending on the needs of the application; their names and paths are given by the ldd(1) command. As ld.so processes each dependent library, it executes a series of system calls to find the library and make the library's contents available to the application. These include: fd = open (/path/to/dependent/library, O_RDONLY); read (fd, elfhdr, 832); // to get the library ELF header fstat (fd, &stbuf); // to get library attributes, such as size mmap (buf, length, prot, MAP_PRIVATE, fd, offset); // read text segment mmap (buf, length, prot, MAP_PRIVATE, fd, offset); // read data segment close (fd); The number of open() calls can be many times larger than the number of dependent libraries, because ld.so must search for each dependent library in multiple locations, as described on the ld.so(1) man page. -

Environment Variable and Set-UID Program Lab 1

SEED Labs – Environment Variable and Set-UID Program Lab 1 Environment Variable and Set-UID Program Lab Copyright © 2006 - 2016 Wenliang Du, All rights reserved. Free to use for non-commercial educational purposes. Commercial uses of the materials are prohibited. The SEED project was funded by multiple grants from the US National Science Foundation. 1 Overview The learning objective of this lab is for students to understand how environment variables affect program and system behaviors. Environment variables are a set of dynamic named values that can affect the way running processes will behave on a computer. They are used by most operating systems, since they were introduced to Unix in 1979. Although environment variables affect program behaviors, how they achieve that is not well understood by many programmers. As a result, if a program uses environment variables, but the programmer does not know that they are used, the program may have vulnerabilities. In this lab, students will understand how environment variables work, how they are propagated from parent process to child, and how they affect system/program behaviors. We are particularly interested in how environment variables affect the behavior of Set-UID programs, which are usually privileged programs. This lab covers the following topics: • Environment variables • Set-UID programs • Securely invoke external programs • Capability leaking • Dynamic loader/linker Readings and videos. Detailed coverage of the Set-UID mechanism, environment variables, and their related security problems can be found in the following: • Chapters 1 and 2 of the SEED Book, Computer & Internet Security: A Hands-on Approach, 2nd Edition, by Wenliang Du. -

Other Useful Commands

Bioinformatics 101 – Lecture 2 Introduction to command line Alberto Riva ([email protected]), J. Lucas Boatwright ([email protected]) ICBR Bioinformatics Core Computing environments ▪ Standalone application – for local, interactive use; ▪ Command-line – local or remote, interactive use; ▪ Cluster oriented: remote, not interactive, highly parallelizable. Command-line basics ▪ Commands are typed at a prompt. The program that reads your commands and executes them is the shell. ▪ Interaction style originated in the 70s, with the first visual terminals (connections were slow…). ▪ A command consists of a program name followed by options and/or arguments. ▪ Syntax may be obscure and inconsistent (but efficient!). Command-line basics ▪ Example: to view the names of files in the current directory, use the “ls” command (short for “list”) ls plain list ls –l long format (size, permissions, etc) ls –l –t sort newest to oldest ls –l –t –r reverse sort (oldest to newest) ls –lrt options can be combined (in this case) ▪ Command names and options are case sensitive! File System ▪ Unix systems are centered on the file system. Huge tree of directories and subdirectories containing all files. ▪ Everything is a file. Unix provides a lot of commands to operate on files. ▪ File extensions are not necessary, and are not recognized by the system (but may still be useful). ▪ Please do not put spaces in filenames! Permissions ▪ Different privileges and permissions apply to different areas of the filesystem. ▪ Every file has an owner and a group. A user may belong to more than one group. ▪ Permissions specify read, write, and execute privileges for the owner, the group, everyone else. -

Chapter 6: the Linker

6. The Linker 6-1 Chapter 6: The Linker References: • Brian W. Kernighan / Dennis M. Ritchie: The C Programming Language, 2nd Ed. Prentice-Hall, 1988. • Samuel P. Harbison / Guy L. Steele Jr.: C — A Reference Manual, 4th Ed. Prentice-Hall, 1995. • Online Documentation of Microsoft Visual C++ 6.0 (Standard Edition): MSDN Library: Visual Studio 6.0 release. • Horst Wettstein: Assemblierer und Binder (in German). Carl Hanser Verlag, 1979. • Peter Rechenberg, Gustav Pomberger (Eds.): Informatik-Handbuch (in German). Carl Hanser Verlag, 1997. Kapitel 12: Systemsoftware (H. M¨ossenb¨ock). Stefan Brass: Computer Science III Universit¨atGiessen, 2001 6. The Linker 6-2 Overview ' $ 1. Introduction (Overview) & % 2. Object Files, Libraries, and the Linker 3. Make 4. Dynamic Linking Stefan Brass: Computer Science III Universit¨atGiessen, 2001 6. The Linker 6-3 Introduction (1) • Often, a program consists of several modules which are separately compiled. Reasons are: The program is large. Even with fast computers, editing and compiling a single file with a million lines leads to unnecessary delays. The program is developed by several people. Different programmers cannot easily edit the same file at the same time. (There is software for collaborative work that permits that, but it is still a research topic.) A large program is easier to understand if it is divided into natural units. E.g. each module defines one data type with its operations. Stefan Brass: Computer Science III Universit¨atGiessen, 2001 6. The Linker 6-4 Introduction (2) • Reasons for splitting a program into several source files (continued): The same module might be used in different pro- grams (e.g. -

GNU GCC Compiler

C-Refresher: Session 01 GNU GCC Compiler Arif Butt Summer 2017 I am Thankful to my student Muhammad Zubair [email protected] for preparation of these slides in accordance with my video lectures at http://www.arifbutt.me/category/c-behind-the-curtain/ Today’s Agenda • Brief Concept of GNU gcc Compiler • Compilation Cycle of C-Programs • Contents of Object File • Multi-File Programs • Linking Process • Libraries Muhammad Arif Butt (PUCIT) 2 Compiler Compiler is a program that transforms the source code of a high level language into underlying machine code. Underlying machine can be x86 sparse, Linux, Motorola. Types of Compilers: gcc, clang, turbo C-compiler, visual C++… Muhammad Arif Butt (PUCIT) 3 GNU GCC Compiler • GNU is an integrated distribution of compilers for C, C++, OBJC, OBJC++, JAVA, FORTRAN • gcc can be used for cross compile Cross Compile: • Cross compile means to generate machine code for the platform other than the one in which it is running • gcc uses tools like autoConf, automake and lib to generate codes for some other architecture Muhammad Arif Butt (PUCIT) 4 Compilation Process Four stages of compilation: •Preprocessor •Compiler •Assembler •Linker Muhammad Arif Butt (PUCIT) 5 Compilation Process(cont...) hello.c gcc –E hello.c 1>hello.i Preprocessor hello.i 1. Preprocessor • Interpret Preprocessor Compiler directives • Include header files • Remove comments • Expand headers Assembler Linker Muhammad Arif Butt (PUCIT) 6 Compilation Process(cont...) hello.c 2. Compiler • Check for syntax errors Preprocessor • If no syntax error, the expanded code is converted to hello.i assembly code which is understood by the underlying Compiler gcc –S hello.i processor, e.g. -

Hardware Pattern Matching for Network Traffic Analysis in Gigabit

Technische Universitat¨ Munchen¨ Fakultat¨ fur¨ Informatik Diplomarbeit in Informatik Hardware Pattern Matching for Network Traffic Analysis in Gigabit Environments Gregor M. Maier Aufgabenstellerin: Prof. Anja Feldmann, Ph. D. Betreuerin: Prof. Anja Feldmann, Ph. D. Abgabedatum: 15. Mai 2007 Ich versichere, dass ich diese Diplomarbeit selbst¨andig verfasst und nur die angegebe- nen Quellen und Hilfsmittel verwendet habe. Datum Gregor M. Maier Abstract Pattern Matching is an important task in various applica- tions, including network traffic analysis and intrusion detec- tion. In modern high speed gigabit networks it becomes un- feasible to search for patterns using pure software implemen- tations, due to the amount of data that must be searched. Furthermore applications employing pattern matching often need to search for several patterns at the same time. In this thesis we explore the possibilities of using FPGAs for hardware pattern matching. We analyze the applicability of various pattern matching algorithms for hardware imple- mentation and implement a Rabin-Karp and an approximate pattern matching algorithm in Endace’s network measure- ment cards using VHDL. The implementations are evalu- ated and compared to pure software matching solutions. To demonstrate the power of hardware pattern matching, an example application for traffic accounting using hardware pattern matching is presented as a proof-of-concept. Since some systems like network intrusion detection systems an- alyze reassembled TCP streams, possibilities for hardware TCP reassembly combined with hardware pattern matching are discussed as well. Contents vii Contents List of Figures ix 1 Introduction 1 1.1 Motivation . 1 1.2 Related Work . 2 1.3 About Endace DAG Network Monitoring Cards . -

Husky: Towards a More Efficient and Expressive Distributed Computing Framework

Husky: Towards a More Efficient and Expressive Distributed Computing Framework Fan Yang Jinfeng Li James Cheng Department of Computer Science and Engineering The Chinese University of Hong Kong ffyang,jfli,[email protected] ABSTRACT tends Spark, and Gelly [3] extends Flink, to expose to programmers Finding efficient, expressive and yet intuitive programming models fine-grained control over the access patterns on vertices and their for data-parallel computing system is an important and open prob- communication patterns. lem. Systems like Hadoop and Spark have been widely adopted Over-simplified functional or declarative programming interfaces for massive data processing, as coarse-grained primitives like map with only coarse-grained primitives also limit the flexibility in de- and reduce are succinct and easy to master. However, sometimes signing efficient distributed algorithms. For instance, there is re- over-simplified API hinders programmers from more fine-grained cent interest in machine learning algorithms that compute by fre- control and designing more efficient algorithms. Developers may quently and asynchronously accessing and mutating global states have to resort to sophisticated domain-specific languages (DSLs), (e.g., some entries in a large global key-value table) in a fine-grained or even low-level layers like MPI, but this raises development cost— manner [14, 19, 24, 30]. It is not clear how to program such algo- learning many mutually exclusive systems prolongs the develop- rithms using only synchronous and coarse-grained operators (e.g., ment schedule, and the use of low-level tools may result in bug- map, reduce, and join), and even with immutable data abstraction prone programming. -

GNU Coreutils Cheat Sheet (V1.00) Created by Peteris Krumins ([email protected], -- Good Coders Code, Great Coders Reuse)

GNU Coreutils Cheat Sheet (v1.00) Created by Peteris Krumins ([email protected], www.catonmat.net -- good coders code, great coders reuse) Utility Description Utility Description arch Print machine hardware name nproc Print the number of processors base64 Base64 encode/decode strings or files od Dump files in octal and other formats basename Strip directory and suffix from file names paste Merge lines of files cat Concatenate files and print on the standard output pathchk Check whether file names are valid or portable chcon Change SELinux context of file pinky Lightweight finger chgrp Change group ownership of files pr Convert text files for printing chmod Change permission modes of files printenv Print all or part of environment chown Change user and group ownership of files printf Format and print data chroot Run command or shell with special root directory ptx Permuted index for GNU, with keywords in their context cksum Print CRC checksum and byte counts pwd Print current directory comm Compare two sorted files line by line readlink Display value of a symbolic link cp Copy files realpath Print the resolved file name csplit Split a file into context-determined pieces rm Delete files cut Remove parts of lines of files rmdir Remove directories date Print or set the system date and time runcon Run command with specified security context dd Convert a file while copying it seq Print sequence of numbers to standard output df Summarize free disk space setuidgid Run a command with the UID and GID of a specified user dir Briefly list directory