Automated Knowledge Enrichment for Semantic Web Data

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Deepavali of the Gods

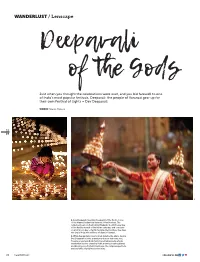

WANDERLUST / Lenscape Deepavali of the Gods Just when you thought the celebrations were over, and you bid farewell to one of India's most popular festivals, Deepavali; the people of Varanasi gear up for their own Festival of Lights – Dev Deepavali. WORDS Edwina D'souza 11 19 1 2 1. Dev Deepavali, meaning ‘Deepavali of the Gods’, is one of the biggest festivals in Varanasi, Uttar Pradesh. The festivities begin on Prabodhini Ekadashi, the 11th lunar day of the Kartika month of the Hindu calendar, and conclude on a full moon day – Kartik Purnima. During these five days, the city is lit up with millions of diyas (oil lamps). 2. While Ganga Aarti is performed daily by the ghats, during Dev Deepavali it is the grandest and most elaborate one. Twenty one priests hold multi-tiered lamps and perform rituals that involve chanting Vedic mantras, beating drums and blowing conch shells. Each year, the religious spectacle attracts lakhs of pilgrims and tourists. 74 FOLLOW US ON 11 19 Oil lamps are symbolic of Deepavali celebrations across India. During Dev Deepavali, courtyards, window panes, temples, streets and ghats in Varanasi are adorned with these oil lamps. Twinkling like stars under the night sky, these lit baskets create a captivating sight. 75 11 19 1 2 76 FOLLOW US ON 3 1. The city of Varanasi comes alive at the crack of dawn with devotees thronging the ghats and adjacent temples. An aerial view of the colourful rooftoops on the banks of the Ganges at sunrise; with Manikarnika Ghat in the foreground. -

Coca Cola Was the Purchase of Parley Brands

SWAMI VIVEKANAND UNIVERSITY A PROJECT REPORT ON MARKETING STRATGIES OF TOP BRANDS OF COLD DRINKS Submitted in partial fulfilment for the Award of degree of Master in Management Studies UNDER THE GUIDANCE OF SUBMITTED BY Prof.SHWETA RAJPUT HEMANT SONI CERTIFICATE Certified that the dissertation title MARKETING STRATEGIES OF TOP BRANDS OF COLD DRINKS IN SAGAR is a bonafide work done Mr. HEMANI SONI under my guidance in partial fulfilment of Master in Management Studies programme . The views expressed in this dissertation is only of that of the researcher and the need not be those of this institute. This project work has been corrected by me. PROJECT GUIDE SWETA RAJPUT DATE:: PLACE: STUDENT’S DECLARATION I hereby declare that the Project Report conducted on MARKETING STRATEGIES OF TOP BRANDS OF COLD DRINKS Under the guidance of Ms. SHWETA RAJPUT Submitted in Partial fulfillment of the requirements for the Degree of MASTER OF BUSINESS ADMINISTRATION TO SVN COLLAGE Is my original work and the same has not been submitted for the award of any other Degree/diploma/fellowship or other similar titles or prizes. Place: SAGAR HEMANT SONI Date: ACKNOWLEDGEMENT It is indeed a pleasure doing a project on “MARKETING STRATEGIES OF TOP BRANDS OF COLD DRINKS”. I am grateful to sir Parmesh goutam (hod) for providing me this opportunity. I owe my indebtedness to My Project Guide Ms. Shweta rajput, for her keen interest, encouragement and constructive support and under whose able guidance I have completed out my project. She not only helped me in my project but also gave me an overall exposure to other issues related to retailing and answered all my queries calmly and patiently. -

Universidad Peruana De Ciencias Aplicadas (UPC)

El perfil del consumidor peruano en el sector de bebidas no alchólicas (BNA) Item Type info:eu-repo/semantics/bachelorThesis Authors Buralli Valdez, Roberto; Sanchez Maltese, Alonso; Zevallos Dávila, Humberto Publisher Universidad Peruana de Ciencias Aplicadas (UPC) Rights info:eu-repo/semantics/openAccess Download date 28/09/2021 13:10:13 Item License http://creativecommons.org/licenses/by-nc-nd/4.0/ Link to Item http://hdl.handle.net/10757/621504 UNIVERSIDAD PERUANA DE CIENCIAS APLICADAS Facultad de Negocios Área Académica de Administración EL PERFIL DEL CONSUMIDOR PERUANO EN EL SECTOR DE BEBIDAS NO ALCHOLICAS (BNA) TRABAJO DE SUFICIENCIA PROFESIONAL Para optar al Título de Licenciado en Negocios Internacionales Presentado por los Bachilleres: Roberto Buralli Alonso Sanchez Humberto Zevallos Asesor (a): Prof. María Laura Cuya Lima, enero de 2017. 1 ÍNDICE DEDICATORIA .................................................................................................................... 5 AGRADECIMIENTOS ........................................................................................................ 6 RESUMEN ............................................................................................................................ 7 ABSTRACT ........................................................................................................................... 8 INTRODUCCIÓN ................................................................................................................ 9 1. CAPÍTULO I: MARCO TEÓRICO ......................................................................... -

Survival Strategies of Two Small Marine Ciliates and Their Role in Regulating Bacterial Community Structure Under Experimental Conditions

MARINE ECOLOGY - PROGRESS SERIES Vol. 33: 59-70, 1986 Published October l Mar. Ecol. Prog. Ser. - Survival strategies of two small marine ciliates and their role in regulating bacterial community structure under experimental conditions C. M. Turley, R. C. Newel1 & D. B. Robins Institute for Marine Environmental Research. Prospect Place, The Hoe, Plymouth PL13DH. United Kingdom ABSTRACT: Urcmema sp. of ca 12 X 5 pm and Euplotes sp. ca 20 X 10 pm were isolated from surface waters of the English Channel. The rapidly motile Uronerna sp. has a relative growth rate of 3.32 d-' and responds rapidly to the presence of bacterial food with a doubling time of only 5.01 h. Its mortality rate is 0.327 d-' and mortality time is therefore short at 50.9 h once the bacterial food resource has become hiting. Uronema sp. therefore appears to be adapted to exploit transitory patches when bacterial prey abundance exceeds a concentration of ca 6 X 106 cells ml-'. In contrast, Euplotes sp. had a slower relative growth rate of 1.31 d-' and a doubling time of ca. 12.7 h, implying a slower response to peaks in bacterial food supply. The mortality rate of 0.023 d-' is considerably lower than In Uronema and mortality time is as much as 723 h. This suggests that, relative to Uronerna, the slower moving Euplotes has a more persistent strategy whch under the conditions of our experiment favours a stable equhbnum wlth its food supply. Grazing activities of these 2 ciliates have an important influence on abundance and size-class structure of their bacterial prey. -

Coca-Cola La Historia Negra De Las Aguas Negras

Coca-Cola La historia negra de las aguas negras Gustavo Castro Soto CIEPAC COCA-COLA LA HISTORIA NEGRA DE LAS AGUAS NEGRAS (Primera Parte) La Compañía Coca-Cola y algunos de sus directivos, desde tiempo atrás, han sido acusados de estar involucrados en evasión de impuestos, fraudes, asesinatos, torturas, amenazas y chantajes a trabajadores, sindicalistas, gobiernos y empresas. Se les ha acusado también de aliarse incluso con ejércitos y grupos paramilitares en Sudamérica. Amnistía Internacional y otras organizaciones de Derechos Humanos a nivel mundial han seguido de cerca estos casos. Desde hace más de 100 años la Compañía Coca-Cola incide sobre la realidad de los campesinos e indígenas cañeros ya sea comprando o dejando de comprar azúcar de caña con el fin de sustituir el dulce por alta fructuosa proveniente del maíz transgénico de los Estados Unidos. Sí, los refrescos de la marca Coca-Cola son transgénicos así como cualquier industria que usa alta fructuosa. ¿Se ha fijado usted en los ingredientes que se especifican en los empaques de los productos industrializados? La Coca-Cola también ha incidido en la vida de los productores de coca; es responsable también de la falta de agua en algunos lugares o de los cambios en las políticas públicas para privatizar el vital líquido o quedarse con los mantos freáticos. Incide en la economía de muchos países; en la industria del vidrio y del plástico y en otros componentes de su fórmula. Además de la economía y la política, ha incidido directamente en trastocar las culturas, desde Chamula en Chiapas hasta Japón o China, pasando por Rusia. -

Coca-Cola FEMSA, S.A.B. De C.V

As filed with the Securities and Exchange Commission on June 25, 2007 UNITED STATES SECURITIES AND EXCHANGE COMMISSION Washington, D.C. 20549 FORM 20-F ANNUAL REPORT PURSUANT TO SECTION 13 OF THE SECURITIES EXCHANGE ACT OF 1934 For the fiscal year ended December 31, 2006 Commission file number 1-12260 Coca-Cola FEMSA, S.A.B. de C.V. (Exact name of registrant as specified in its charter) Not Applicable (Translation of registrant’s name into English) United Mexican States (Jurisdiction of incorporation or organization) Guillermo González Camarena No. 600 Centro de Ciudad Santa Fé 01210 México, D.F., México (Address of principal executive offices) Securities registered or to be registered pursuant to Section 12(b) of the Act: Title of Each Class Name of Each Exchange on Which Registered American Depositary Shares, each representing 10 Series L Shares, without par value ................................................. New York Stock Exchange, Inc. Series L Shares, without par value............................................................. New York Stock Exchange, Inc. (not for trading, for listing purposes only) Securities registered or to be registered pursuant to Section 12(g) of the Act: None Securities for which there is a reporting obligation pursuant to Section 15(d) of the Act: None The number of outstanding shares of each class of capital or common stock as of December 31, 2006 was: 992,078,519 Series A Shares, without par value 583,545,678 Series D Shares, without par value 270,906,004 Series L Shares, without par value Indicate by check mark if the registrant is a well-known seasoned issuer, as defined in Rule 405 of the Securities Act. -

Hindu Calendar 2019 with Festival and Fast Dates January 2019 Calendar

Hindu Calendar 2019 With Festival and Fast Dates January 2019 Calendar Sr. No. Date Day Festivals/Events 1. 1st January 2019 Tuesday New Year 2. 1st January 2019 Tuesday Saphala Ekadashi 3. 3rd January 2019 Thursday Pradosha Vrata (Krishna Paksha Pradosham) 4. 3rd January 2019 Thursday Masik Shivaratri 5. 5th January 2019 Saturday Paush Amavasya, Margashirsha Amavasya 6. 12th January 2019 Saturday Swami Vivekananda Jayanti/National Youth Day 7. 13th January 2019 Sunday Guru Gobind Singh Jayanti 8. 13th January 2019 Monday Lohri 9. 15th January 2019 Tuesday Pongal, Uttarayan, Makar Sankranti 10. 17th January 2019 Thursday Pausha Putrada Ekadashi Vrat 11. 18th January 2019 Friday Pradosha Vrata (Shukla Paksha Pradosham) 12. 23rd January 2019 Wednesday Subhash Chandra Bose Jayanti 13. 24th January 2019 Thursday Sankashti Chaturthi 14. 26th January 2019 Saturday Republic Day 15. 27th January 2019 Sunday Swami Vivekananda Jayanti *Samvat 16. 30th January 2019 Wednesday Mahatma Gandhi Death Anniversary 17. 31st January 2018 Thursday Shattila Ekadashi Vrat February 2019 Calendar Sr. No. Date Day Festivals/Events 1. February 1st 2018 Friday Pradosha Vrata (Krishna Paksha Pradosham) 2. February 2nd 2019 Saturday Masik Shivaratri 3. February 4th 2019 Monday Magha Amavasya 4. February 4th 2019 Monday World Cancer Day 5. February 5th 2019 Tuesday Chinese New Year of 2019 6. February 10th 2019 Saturday Vasant Panchami 7. February 13th 2019 Thursday Kumbha Sankranti 8. February 14th 2019 Thursday Valentine’s Day 9. February 16th 2019 Saturday Jaya Ekadashi Vrat 10. February 19th 2019 Tuesday Guru Ravidas Jayanti, Magha Purnima Vrat 11. February 22nd 2019 Friday Sankashti Chaturthi 12. -

A New Tetrahymena

The Journal of Published by the International Society of Eukaryotic Microbiology Protistologists Journal of Eukaryotic Microbiology ISSN 1066-5234 ORIGINAL ARTICLE A New Tetrahymena (Ciliophora, Oligohymenophorea) from Groundwater of Cape Town, South Africa Pablo Quintela-Alonsoa, Frank Nitschea, Claudia Wylezicha,1, Hartmut Arndta,b & Wilhelm Foissnerc a Department of General Ecology, Cologne Biocenter, Institute for Zoology, University of Cologne, Zulpicher€ Str. 47b, D-50674, Koln,€ Germany b Department of Zoology, University of Cape Town, Private Bag X3, Rondebosch, 7701, South Africa c Department of Organismic Biology, University of Salzburg, Hellbrunnerstrasse 34, A-5020, Salzburg, Austria Keywords ABSTRACT biodiversity; ciliates; cytochrome c oxidase subunit I (cox1); groundwater; interior- The identification of species within the genus Tetrahymena is known to be diffi- branch test; phylogeny; silverline pattern; cult due to their essentially identical morphology, the occurrence of cryptic and SSU rDNA. sibling species and the phenotypic plasticity associated with the polymorphic life cycle of some species. We have combined morphology and molecular biol- Correspondence ogy to describe Tetrahymena aquasubterranea n. sp. from groundwater of Cape P. Quintela-Alonso, Department of General Town, Republic of South Africa. The phylogenetic analysis compares the cox1 Ecology, Cologne Biocenter, University of gene sequence of T. aquasubterranea with the cox1 gene sequences of other Cologne, Zulpicher€ Strasse 47 b, D-50674 Tetrahymena species and uses the interior-branch test to improve the resolution Cologne, Germany of the evolutionary relationships. This showed a considerable genetic diver- Telephone number: +49-221-470-3100; gence of T. aquasubterranea to its next relative, T. farlyi, of 9.2% (the average FAX number: +49-221-470-5932; cox1 divergence among bona fide species of Tetrahymena is ~ 10%). -

Pola Konsumsi Daging Ayam Broiler Berdasarkan Tingkat Pengetahuan Dan Pendapatan Kelompok Mahasiswa Fakultas Peternakan Universitas Padjadjaran

Pola Konsumsi Daging Ayam Broiler................................................................................... Apriannda Winda POLA KONSUMSI DAGING AYAM BROILER BERDASARKAN TINGKAT PENGETAHUAN DAN PENDAPATAN KELOMPOK MAHASISWA FAKULTAS PETERNAKAN UNIVERSITAS PADJADJARAN CONSUMPTION PATTERNS OF BROILER MEAT BASED ON THE LEVEL OF KNOWLEDGE AND INCOME STUDENT GROUP OF FACULTY OF ANIMAL HUSBANDRY PADJADJARAN Aprianda Winda*, Rochadi Tawaf**, Marina Sulistyati** Universitas Padjadjaran *Alumni Fakultas Peternakan Universitas Padjadjaran **Staf Pengajar Fakultas Peternakan Universitas Padjadjaran E-mail : [email protected] ABSTRAK Penelitian telah dilaksanakan di Fakultas Peternakan Universitas Padjadjaran, Kabupaten Sumedang pada tanggal 9-22 September 2015. Tujuan penelitian ini untuk; (1) mengetahui preferensi konsumsi daging ayam broiler pada kelompok mahasiswa Fakultas Peternakan Universitas Padjadjaran berdasarkan tingkat pengetahuan gizi, (2) mengetahui preferensi konsumsi daging ayam broiler pada kelompok mahasiswa Fakultas Peternakan Universitas Padjadjaran berdasarkan tingkat pendapatan, (3) mengkaji pola konsumsi daging ayam broiler pada kelompok mahasiswa Fakultas Peternakan Universitas Padjadjaran berdasarkan tingkat pengetahuan gizi dan tingkat pendapatan. Metode yang digunakan adalah survei. Pengambilan sampel menggunakan metode cluster random sampling pada mahasiswa angkatan 2013 yang indekost sehingga didapat 30 mahasiswa sebagai responden. Data yang diperoleh ditabulasi dan dianalisis dengan menggunakan -

Le Cas Du Marché Des Colas Philippe Robert-Demontrond, Anne Joyeau and Christine Bougeard-Delfosse

Document generated on 09/28/2021 4:33 a.m. Management international Gestiòn Internacional International Management La sphère marchande comme outil de résistance à la mondialisation : le cas du marché des colas Philippe Robert-Demontrond, Anne Joyeau and Christine Bougeard-Delfosse Volume 14, Number 4, Summer 2010 Article abstract Day after day, the world is becoming more and more globalized. Financial URI: https://id.erudit.org/iderudit/044659ar movements, transports, means of communication facilitate the emergence of DOI: https://doi.org/10.7202/044659ar this phenomenon. Nevertheless, globalization is not without consequences on the local populations who perceive this evolution as a threat for their identity See table of contents and their culture. Through the creation or resurgence of an offer of foodstuffs, and more particularly through a world and plethoric offer of local colas, the consumer is showing resistance and refuses to standardize his consumption. Publisher(s) The commercial success of those altercolas cannot be denied and many dimensions are bound to their consumption. HEC Montréal et Université Paris Dauphine ISSN 1206-1697 (print) 1918-9222 (digital) Explore this journal Cite this article Robert-Demontrond, P., Joyeau, A. & Bougeard-Delfosse, C. (2010). La sphère marchande comme outil de résistance à la mondialisation : le cas du marché des colas. Management international / Gestiòn Internacional / International Management, 14(4), 55–68. https://doi.org/10.7202/044659ar Tous droits réservés © Management international / International This document is protected by copyright law. Use of the services of Érudit Management / Gestión Internacional, 2010 (including reproduction) is subject to its terms and conditions, which can be viewed online. -

Ex-Google Engineer Starts Religion That Worships Artificial Intelligence

Toggle navigation Search Patheos Beliefs Buddhist Christian Catholic Evangelical Mormon Progressive Hindu Jewish Muslim Nonreligious Pagan Spirituality Topics Politics Red Politics Blue Entertainment Book Club Family and Relationships More Topics Trending Now Spiritual but Not Religious Should Be... Guest Contributor Redefining Pro-Life Guest Contributor Columnists Religion Library Research Tools Preachers Teacher Resources Comparison Lens Anglican/Episcopalian Baha'i Baptist Buddhism Christianity Confucianism Eastern Orthodoxy Hinduism Holiness and Pentecostal ISKCON Islam Judaism Lutheran Methodist Mormonism New Age Paganism Presbyterian and Reformed Protestantism Roman Catholic Scientology Shi'a Islam Sikhism Sufism Sunni Islam Taoism Zen see all religions newsletters More Features Top 10 Things Never to Say to Your... Leah D. Schade We Must Root Out All Forms of Racism Henry Karlson Key Voices Is God Using Natural Disasters As A... Jack Wellman What Pianists Know pleithart Toggle navigation Home Ask Richard Podcasts Speaking Contributors Media Contact/Submissions Books Nonreligious Ex-Google Engineer Starts Religion that Worships Artificial Intelligence September 29, 2017 by David G. McAfee 111 Comments 1786 Anthony Levandowski, the multi-millionaire engineer who once led Google’s self-driving car program, has founded a religious organization to “develop and promote the realization of a Godhead based on Artificial Intelligence.” Levandowski created The Way of the Future in 2015, but it was unreported until now. He serves as the CEO -

Students Blaspheme Hosted a Discussion on the Importance of Taking Personal Moral Responsibility for the Planet Wednesday Night in the Memorial Union

Iowa State Daily, October 2010 Iowa State Daily, 2010 10-2010 Iowa State Daily (October 1, 2010) Iowa State Daily Follow this and additional works at: http://lib.dr.iastate.edu/iowastatedaily_2010-10 Part of the Higher Education Commons, and the Journalism Studies Commons Recommended Citation Iowa State Daily, "Iowa State Daily (October 1, 2010)" (2010). Iowa State Daily, October 2010. 6. http://lib.dr.iastate.edu/iowastatedaily_2010-10/6 This Book is brought to you for free and open access by the Iowa State Daily, 2010 at Iowa State University Digital Repository. It has been accepted for inclusion in Iowa State Daily, October 2010 by an authorized administrator of Iowa State University Digital Repository. For more information, please contact [email protected]. Sports Opinion Your weekend previews, Video games level-up, ready for consumption assist scientific endeavors p8 >> p6 >> FRIDAY October 1, 2010 | Volume 206 | Number 28 | 40 cents | iowastatedaily.com | An independent newspaper serving Iowa State since 1890. Campus First Amendment Weekend allows families to learn about student life By Molly.Collins and Sarah.Binder iowastatedaily.com The events planned for Cyclone Family Weekend, start- ing Friday, will provide ISU students the opportunity to bring their families to their new home and let them have a taste of life as a Cyclone. Families will get to explore campus, dis- cover what Iowa State has to offer and spend time with their Cyclone students. “One thing I’m looking forward to is having my parents see how everything goes up here, so that way they get a feel for what I’ve been doing,” said JP McKinney, freshman in soft- ware engineering.