Biomolecular Structure and Modeling: Historical Perspective

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

The Architecture of the Protein Domain Universe

The architecture of the protein domain universe Nikolay V. Dokholyan Department of Biochemistry and Biophysics, The University of North Carolina at Chapel Hill, School of Medicine, Chapel Hill, NC 27599 ABSTRACT Understanding the design of the universe of protein structures may provide insights into protein evolution. We study the architecture of the protein domain universe, which has been found to poses peculiar scale-free properties (Dokholyan et al., Proc. Natl. Acad. Sci. USA 99: 14132-14136 (2002)). We examine the origin of these scale-free properties of the graph of protein domain structures (PDUG) and determine that that the PDUG is not modular, i.e. it does not consist of modules with uniform properties. Instead, we find the PDUG to be self-similar at all scales. We further characterize the PDUG architecture by studying the properties of the hub nodes that are responsible for the scale-free connectivity of the PDUG. We introduce a measure of the betweenness centrality of protein domains in the PDUG and find a power-law distribution of the betweenness centrality values. The scale-free distribution of hubs in the protein universe suggests that a set of specific statistical mechanics models, such as the self-organized criticality model, can potentially identify the principal driving forces of molecular evolution. We also find a gatekeeper protein domain, removal of which partitions the largest cluster into two large sub- clusters. We suggest that the loss of such gatekeeper protein domains in the course of evolution is responsible for the creation of new fold families. INTRODUCTION The principles of molecular evolution remain elusive despite fundamental breakthroughs on the theoretical front 1-5 and a growing amount of genomic and proteomic data, over 23,000 solved protein structures 6 and protein functional annotations 7-9. -

Cmse 520 Biomolecular Structure, Function And

CMSE 520 BIOMOLECULAR STRUCTURE, FUNCTION AND DYNAMICS (Computational Structural Biology) OUTLINE Review: Molecular biology Proteins: structure, conformation and function(5 lectures) Generalized coordinates, Phi, psi angles, DNA/RNA: structure and function (3 lectures) Structural and functional databases (PDB, SCOP, CATH, Functional domain database, gene ontology) Use scripting languages (e.g. python) to cross refernce between these databases: starting from sequence to find the function Relationship between sequence, structure and function Molecular Modeling, homology modeling Conservation, CONSURF Relationship between function and dynamics Confromational changes in proteins (structural changes due to ligation, hinge motions, allosteric changes in proteins and consecutive function change) Molecular Dynamics Monte Carlo Protein-protein interaction: recognition, structural matching, docking PPI databases: DIP, BIND, MINT, etc... References: CURRENT PROTOCOLS IN BIOINFORMATICS (e-book) (http://www.mrw.interscience.wiley.com/cp/cpbi/articles/bi0101/frame.html) Andreas D. Baxevanis, Daniel B. Davison, Roderic D.M. Page, Gregory A. Petsko, Lincoln D. Stein, and Gary D. Stormo (eds.) 2003 John Wiley & Sons, Inc. INTRODUCTION TO PROTEIN STRUCTURE Branden C & Tooze, 2nd ed. 1999, Garland Publishing COMPUTER SIMULATION OF BIOMOLECULAR SYSTEMS Van Gusteren, Weiner, Wilkinson Internet sources Ref: Department of Energy Rapid growth in experimental technologies Human Genome Projects Two major goals 1. DNA mapping 2. DNA sequencing Rapid growth in experimental technologies z Microrarray technologies – serial gene expression patterns and mutations z Time-resolved optical, rapid mixing techniques - folding & function mechanisms (Æ ns) z Techniques for probing single molecule mechanics (AFM, STM) (Æ pN) Æ more accurate models/data for computer-aided studies Weiss, S. (1999). Fluorescence spectroscopy of single molecules. -

Action of Multiple Rice -Glucosidases on Abscisic Acid Glucose Ester

International Journal of Molecular Sciences Article Action of Multiple Rice β-Glucosidases on Abscisic Acid Glucose Ester Manatchanok Kongdin 1, Bancha Mahong 2, Sang-Kyu Lee 2, Su-Hyeon Shim 2, Jong-Seong Jeon 2,* and James R. Ketudat Cairns 1,* 1 School of Chemistry, Institute of Science, Center for Biomolecular Structure, Function and Application, Suranaree University of Technology, Nakhon Ratchasima 30000, Thailand; [email protected] 2 Graduate School of Biotechnology and Crop Biotech Institute, Kyung Hee University, Yongin 17104, Korea; [email protected] (B.M.); [email protected] (S.-K.L.); [email protected] (S.-H.S.) * Correspondence: [email protected] (J.-S.J.); [email protected] (J.R.K.C.); Tel.: +82-31-2012025 (J.-S.J.); +66-44-224304 (J.R.K.C.); Fax: +66-44-224185 (J.R.K.C.) Abstract: Conjugation of phytohormones with glucose is a means of modulating their activities, which can be rapidly reversed by the action of β-glucosidases. Evaluation of previously characterized recombinant rice β-glucosidases found that nearly all could hydrolyze abscisic acid glucose ester (ABA-GE). Os4BGlu12 and Os4BGlu13, which are known to act on other phytohormones, had the highest activity. We expressed Os4BGlu12, Os4BGlu13 and other members of a highly similar rice chromosome 4 gene cluster (Os4BGlu9, Os4BGlu10 and Os4BGlu11) in transgenic Arabidopsis. Extracts of transgenic lines expressing each of the five genes had higher β-glucosidase activities on ABA-GE and gibberellin A4 glucose ester (GA4-GE). The β-glucosidase expression lines exhibited longer root and shoot lengths than control plants in response to salt and drought stress. -

Evolution of Biomolecular Structure Class II Trna-Synthetases and Trna

University of Illinois at Urbana-Champaign Luthey-Schulten Group Theoretical and Computational Biophysics Group Evolution of Biomolecular Structure Class II tRNA-Synthetases and tRNA MultiSeq Developers: Prof. Zan Luthey-Schulten Elijah Roberts Patrick O’Donoghue John Eargle Anurag Sethi Dan Wright Brijeet Dhaliwal March 2006. A current version of this tutorial is available at http://www.ks.uiuc.edu/Training/Tutorials/ CONTENTS 2 Contents 1 Introduction 4 1.1 The MultiSeq Bioinformatic Analysis Environment . 4 1.2 Aminoacyl-tRNA Synthetases: Role in translation . 4 1.3 Getting Started . 7 1.3.1 Requirements . 7 1.3.2 Copying the tutorial files . 7 1.3.3 Configuring MultiSeq . 8 1.3.4 Configuring BLAST for MultiSeq . 10 1.4 The Aspartyl-tRNA Synthetase/tRNA Complex . 12 1.4.1 Loading the structure into MultiSeq . 12 1.4.2 Selecting and highlighting residues . 13 1.4.3 Domain organization of the synthetase . 14 1.4.4 Nearest neighbor contacts . 14 2 Evolutionary Analysis of AARS Structures 17 2.1 Loading Molecules . 17 2.2 Multiple Structure Alignments . 18 2.3 Structural Conservation Measure: Qres . 19 2.4 Structure Based Phylogenetic Analysis . 21 2.4.1 Limitations of sequence data . 21 2.4.2 Structural metrics look further back in time . 23 3 Complete Evolutionary Profile of AspRS 26 3.1 BLASTing Sequence Databases . 26 3.1.1 Importing the archaeal sequences . 26 3.1.2 Now the other domains of life . 27 3.2 Organizing Your Data . 28 3.3 Finding a Structural Domain in a Sequence . 29 3.4 Aligning to a Structural Profile using ClustalW . -

The Effect of Dicer Knockout on RNA Interference Using Various Dicer Substrate Interfering RNA Structures

bioRxiv preprint doi: https://doi.org/10.1101/2020.04.19.049817; this version posted April 20, 2020. The copyright holder for this preprint (which was not certified by peer review) is the author/funder, who has granted bioRxiv a license to display the preprint in perpetuity. It is made available under aCC-BY-NC-ND 4.0 International license. The effect of Dicer knockout on RNA interference using various Dicer substrate interfering RNA structures Min-Sun Song1 and John J Rossi1, 2 * 1Department of Molecular and Cellular Biology, Beckman Research Institute of City of Hope, City of Hope, Duarte, CA 91010, USA 2Irell and Manella Graduate School of Biological Sciences, Beckman Research Institute of City of Hope, City of Hope, Duarte, CA 91010, USA *Corresponding author: John J. Rossi, Email address: [email protected]. Running title: Dicer regulation of DsiRNAs Keywords: Dicer, tetra-loop, Dicer-substrate siRNA(DsiRNA), RNA interference(RNAi), RNA biogenesis, Dicer knockout 1 bioRxiv preprint doi: https://doi.org/10.1101/2020.04.19.049817; this version posted April 20, 2020. The copyright holder for this preprint (which was not certified by peer review) is the author/funder, who has granted bioRxiv a license to display the preprint in perpetuity. It is made available under aCC-BY-NC-ND 4.0 International license. Abstract Dicer-substrate siRNA (DsiRNA) was a useful tool for sequence-specific gene silencing. DsiRNA was proposed to have increased efficacy via RNAi gene silencing, but the molecular mechanism underlying the increased efficacy is not precise. We designed the tetra-looped DsiRNA as the tetra-looped RNAs have been reported more stable structure and increased binding efficiency with RNA and protein. -

Protein Structure Prediction and Design in a Biologically-Realistic Implicit Membrane

bioRxiv preprint doi: https://doi.org/10.1101/630715; this version posted May 8, 2019. The copyright holder for this preprint (which was not certified by peer review) is the author/funder. All rights reserved. No reuse allowed without permission. Protein structure prediction and design in a biologically-realistic implicit membrane Rebecca F. Alforda, Patrick J. Flemingb,c, Karen G. Flemingb,c, and Jeffrey J. Graya,c aDepartment of Chemical & Biomolecular Engineering, Johns Hopkins University, Baltimore, MD 21218; bT.C. Jenkins Department of Biophysics, Johns Hopkins University, Baltimore, MD 21218; cProgram in Molecular Biophysics, Johns Hopkins University, Baltimore, MD 21218 This manuscript was compiled on May 8, 2019 ABSTRACT. Protein design is a powerful tool for elucidating mecha- MOS (16), and the protein-lipid interactions are scored with nisms of function and engineering new therapeutics and nanotech- a molecular mechanics energy function. All-atom models are nologies. While soluble protein design has advanced, membrane attractive because they can feature hundreds of lipid types to- protein design remains challenging due to difficulties in modeling ward approximating the composition of biological membranes the lipid bilayer. In this work, we developed an implicit approach (17). With current technology, detailed all-atom models can be that captures the anisotropic structure, shape of water-filled pores, used to explore membrane dynamics for hundreds of nanosec- and nanoscale dimensions of membranes with different lipid compo- onds (18): the time scale required to achieve equilibrated sitions. The model improves performance in computational bench- properties on a bilayer with approximately 250 lipids (19). marks against experimental targets including prediction of protein Coarse-grained representations such as MARTINI (20), ELBA orientations in the bilayer, ∆∆G calculations, native structure dis- (21), and SIRAH (22) reduce computation time by mapping crimination, and native sequence recovery. -

Topic 5: the Folding of Biopolymers – RNA and Protein Overview

Topic 5: the folding of biopolymers – RNA and Protein Overview: The main functional biomolecules in cells are polymers – DNA, RNA and proteins For RNA and Proteins, the specific sequence of the polymer dictates its final structure Can we predict the final structure of RNA or protein given just the sequence information? Can we design any biomolecular structure that we want? The world of RNA RNA is a linear polymer built from 4 possible monomers: A, G, U, C These monomers can form complimentary interactions to form base-pairings: A with U and C with G Most often RNA is found as a single-stranded polymer that via base-pairing with complementary regions within its own sequence, is able to fold in on itself RNA has a wide variety of functions that we will now explore RNA function in cell mRNA = messenger RNA RNA’s main function in the cell is to act as a messenger molecule in the process of making a protein DNA (gene) mRNA Protein tRNA = transfer RNA these are used in translation to recognize the 64 codons. There is one tRNA for each codon, each representing one of the 20 amino acids RNA function in cell rRNA = ribosomal RNA these are RNAs that get incorporated into the ribosome to give it part of its function. microRNA or siRNA these are relatively recent discovered form of RNA that is used to regulate gene expression – so called RNA interference (won Nobel prize) destroys target mRNA many developmental genes are regulated by miRNAs in your genome RNA function in cell Riboswitches – many mRNA molecules can detect metabolites by binding them and changing the structure of the mRNA = regulation RNA function in cell Ribozymes – like enzymes (catalytic proteins) except made from RNA. -

Biomolecular Modeling and Simulation: a Prospering Multidisciplinary Field

Annual Review of Biophysics Biomolecular Modeling and Simulation: A Prospering Multidisciplinary Field Tamar Schlick,1,2,3 Stephanie Portillo-Ledesma,1 Christopher G. Myers,1 Lauren Beljak,4 Justin Chen,4 Sami Dakhel,4 Daniel Darling,4 Sayak Ghosh,4 Joseph Hall,4 Mikaeel Jan,4 Emily Liang,4 Sera Saju,4 Mackenzie Vohr,4 Chris Wu,4 Yifan Xu,4 and Eva Xue4 1Department of Chemistry, New York University, New York, New York 10003, USA; email: [email protected] 2Courant Institute of Mathematical Sciences, New York University, New York, New York 10012, USA 3New York University–East China Normal University Center for Computational Chemistry, New York University Shanghai, Shanghai 200122, China 4College of Arts and Science, New York University, New York, New York 10003, USA Annu. Rev. Biophys. 2021. 50:267–301 Keywords First published as a Review in Advance on biomolecular modeling, biomolecular simulation, biomolecular dynamics, February 19, 2021 structure prediction, protein folding, DNA folding, RNA folding, The Annual Review of Biophysics is online at multiscale modeling, citizen science projects, machine learning, artificial biophys.annualreviews.org intelligence https://doi.org/10.1146/annurev-biophys-091720- 102019 Abstract Copyright © 2021 by Annual Reviews. We reassess progress in the field of biomolecular modeling and simulation, Annu. Rev. Biophys. 2021.50:267-301. Downloaded from www.annualreviews.org All rights reserved following up on our perspective published in 2011. By reviewing metrics Access provided by New York University - Bobst Library on 05/12/21. For personal use only. for the field’s productivity and providing examples of success, we under- score the productive phase of the field, whose short-term expectations were overestimated and long-term effects underestimated. -

![A Glance Into the Evolution of Template-Free Protein Structure Prediction Methodologies Arxiv:2002.06616V2 [Q-Bio.QM] 24 Apr 2](https://docslib.b-cdn.net/cover/3618/a-glance-into-the-evolution-of-template-free-protein-structure-prediction-methodologies-arxiv-2002-06616v2-q-bio-qm-24-apr-2-2853618.webp)

A Glance Into the Evolution of Template-Free Protein Structure Prediction Methodologies Arxiv:2002.06616V2 [Q-Bio.QM] 24 Apr 2

A glance into the evolution of template-free protein structure prediction methodologies Surbhi Dhingra1, Ramanathan Sowdhamini2, Frédéric Cadet3,4,5, and Bernard Offmann∗1 1Université de Nantes, CNRS, UFIP, UMR6286, F-44000 Nantes, France 2Computational Approaches to Protein Science (CAPS), National Centre for Biological Sciences (NCBS), Tata Institute for Fundamental Research (TIFR), Bangalore 560-065, India 3University of Paris, BIGR—Biologie Intégrée du Globule Rouge, Inserm, UMR_S1134, Paris F-75015, France 4Laboratory of Excellence GR-Ex, Boulevard du Montparnasse, Paris F-75015, France 5DSIMB, UMR_S1134, BIGR, Inserm, Faculty of Sciences and Technology, University of La Reunion, Saint-Denis F-97715, France Abstract Prediction of protein structures using computational approaches has been explored for over two decades, paving a way for more focused research and development of algorithms in com- parative modelling, ab intio modelling and structure refinement protocols. A tremendous suc- cess has been witnessed in template-based modelling protocols, whereas strategies that involve template-free modelling still lag behind, specifically for larger proteins (> 150 a.a.). Various improvements have been observed in ab initio protein structure prediction methodologies over- time, with recent ones attributed to the usage of deep learning approaches to construct protein backbone structure from its amino acid sequence. This review highlights the major strategies undertaken for template-free modelling of protein structures while discussing few tools devel- arXiv:2002.06616v2 [q-bio.QM] 24 Apr 2020 oped under each strategy. It will also briefly comment on the progress observed in the field of ab initio modelling of proteins over the course of time as seen through the evolution of CASP platform. -

Biological Information, Molecular Structure, and the Origins Debate Jonathan K

Article Biological Information, Molecular Structure, and the Origins Debate Jonathan K. Watts Jonathan K. Watts Biomolecules contain tremendous amounts of information; this information is “written” and “read” through their chemical structures and functions. A change in the information of a biomolecule is a change in the physical properties of that molecule—a change in the molecule itself. It is impossible to separate the information contained in biomolecules from their structure and function. For molecules such as DNA and RNA, new information can be incorporated into the sequence of the molecules when that new sequence has favorable structural and functional properties. New biological information can arise by natural processes, mediated by the inter- actions between biomolecules and their environment, using the inherent relationship between structure and information. This fact has important implications for the generation of new biological information and thus the question of origins. traveler is checking in for a flight computer code or printed text, we expect A and her bags are slightly over that similar devices containing different the weight limit. Without hesi- information will have similar physical tating, she pulls out her iPod. It is very properties. By contrast, different devices heavy, she explains to the check-in agent, may contain the same information in since it contains thousands of songs. She spite of their dramatically different phys- deletes most of the music, repacks the ical properties (for example, the printed iPod, and reweighs the bags—which are and online versions of this article). now well within the weight limits. But biological information is quite Or consider a kindergarten student, different. -

Appendix8 Shape Grammars in Chapter 9 There Is a Reference to Possible Applications of Shape Grammars. One Such Application Conc

Appendix8 Shape Grammars In Chapter 9 there is a reference to possible applications of shape grammars. One such application concerns artificial biological materials constructed from a finite number of specially created (stable) Holliday type moleculesb. Shape grammars might be of practical value in a number of other applications as well. A shape grammar is defined as follows. SG = (VM, VT, %, I), where VT is a finite set of terminal symbols VM is a finite set of marker symbols (that act like non-terminals) I e Vj V^ VJ is the start symbol. The production rules of the shape grammar % are phrase structured, and the rules may be applied serially, or in parallel (the same as with Lindenmeyer systems). % is composed of rules of the form u => v, where u is a mix of Vf with V^ , and v is a mix of Vj with V^. Objects in VT and VM may have any location, orientation, or scaling (think in terms of location in a Cartesian grid or some other coordinate system, rotation, and size), in two or more dimensions. As an example, VM The marker disks may be at any one of the four branches, and marks an active area. In the example that follows, these marked active areas are where ligases may act. In the following rule for /£, the four cruciform symbols on the right of the production rule are of the same size, none are rotated, and all four have the locations indicated. J. Gips, Shape Grammars and Their Uses: Artificial Perception, Shape Generation and Computer Aesthetics, 1975, Birkhftuser. -

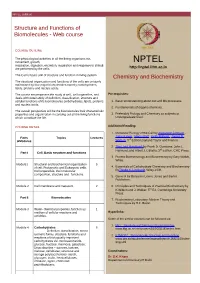

Structure and Functions of Biomolecules - Web Course

NPTEL Syllabus Structure and Functions of Biomolecules - Web course COURSE OUTLINE The physiological activities in all the living organisms viz. movement, growth, NPTEL respiration, digestion, excretion, respiration and response to stimuli are performed by the cells. http://nptel.iitm.ac.in The Cell is basic unit of structure and function in living system. Chemistry and Biochemistry The structural organization and functions of the cells are uniquely maintained by four major biomolecules namely carbohydrates, lipids, proteins and nucleic acids. The course encompasses the study of cell, cell organelles, and Pre-requisites: deals with detail study of definition, classification, structure and cellular functions of its biomolecules carbohydrates, lipids, proteins 1. Basic understanding about cell and life processes. and nucleic acids. 2. Fundamentals of organic chemistry. The overall perspective will be the biomolecules their characteristic properties and organization in carrying out all the living functions 3. Preferably Biology and Chemistry as subjects at which constitute the life. Undergraduate level. COURSE DETAIL Additional Reading: 1. Molecular Biology of the Cell by Alexander Johnson , Julian Lewis , Martin Raff , Keith Roberts , James D. Parts Topics Lectures rd &Modules Watson, 3 Edition,Garland Taylor and Francis. 2. The Lipid Handbook by Frank D. Gunstone, John L. Harwood, and Albert J. Dijkstra, 3rd Edition, CRC Press. Part I Cell: Basic structure and functions 3. Protein:Biotechnology and Biochemistry by Gary Walsh, Wiley. Module1 Structural and biochemical organization 5 of cell. Prokaryotic and Eukaryotic cells. 4. Essentials of Carbohydrate Chemistry and Biochemistry Cell organelles, their molecular ByThisbe K. Lindhorst, Wiley-VCH. composition, structure and functions. 5. Gene IX by Benjamin Lewin, Jones and Barlett Publishers.