
Total Pages: 16

File Type: pdf, Size: 1020Kb


Recommended publications


DECnet-Plus FTAM and Virtual Terminal Use and Management

VSI OpenVMS DECnet-Plus FTAM and Virtual Terminal Use and Management. Document Number: DO-FTAMMG-01A. Publication Date: June 2020. Revision Update Information: This is a new manual. Operating System and Version: VSI OpenVMS Integrity Version 8.4-2; VSI OpenVMS Alpha Version 8.4-2L1. VMS Software, Inc. (VSI), Bolton, Massachusetts, USA. Copyright © 2020 VMS Software, Inc. (VSI), Bolton, Massachusetts, USA. Legal Notice: Confidential computer software. Valid license from VSI required for possession, use or copying. Consistent with FAR 12.211 and 12.212, Commercial Computer Software, Computer Software Documentation, and Technical Data for Commercial Items are licensed to the U.S. Government under vendor's standard commercial license. The information contained herein is subject to change without notice. The only warranties for VSI products and services are set forth in the express warranty statements accompanying such products and services. Nothing herein should be construed as constituting an additional warranty. VSI shall not be liable for technical or editorial errors or omissions contained herein. HPE, HPE Integrity, HPE Alpha, and HPE Proliant are trademarks or registered trademarks of Hewlett Packard Enterprise. Intel, Itanium and IA-64 are trademarks or registered trademarks of Intel Corporation or its subsidiaries in the United States and other countries. UNIX is a registered trademark of The Open Group. The VSI OpenVMS documentation set is available on DVD.

Inter-Autonomous-System Routing: Border Gateway Protocol

Inter-Autonomous-System Routing: Border Gateway Protocol. Antonio Carzaniga, Faculty of Informatics, University of Lugano. May 18, 2010. © 2005–2007 Antonio Carzaniga
Outline: Hierarchical routing; BGP
Routing. Goal: each router u must be able to compute, for each other router v, the next-hop neighbor x that is on the least-cost path from u to v. [Figure: router u, its neighbors x1, x2, x3, x4, and destination v]
Network Model. So far we have studied routing over a “flat” network model. [Figure: weighted graph over nodes a, b, c, d, e, f, g, h, j] Also, our objective has been to find the least-cost paths between sources and destinations.
More Realistic Topologies. [Figure]
Even More Realistic. [Figure, ©2001 Stephen Coast]
An Internet Map. [Figure, ©1999 Lucent Technologies]
Higher-Level Objectives.
Scalability
◮ hundreds of millions of hosts in today’s Internet
◮ transmitting routing information (e.g., LSAs) would be too expensive
◮ forwarding would also be too expensive
Administrative autonomy
◮ one organization might want to run a distance-vector routing protocol, while another might want to run a link-state protocol
◮ an organization might not want to expose its internal network structure
Hierarchical Structure. Today’s Internet is organized in autonomous systems (ASs)
◮ independent administrative domains
Gateway routers connect an autonomous system with other autonomous systems.
An intra-autonomous system routing protocol runs within an autonomous system (e.g., OSPF)
◮ this protocol determines internal routes
◮ internal router ↔ internal router
◮ internal router ↔ gateway router
◮ gateway router ↔ gateway router
[Figure: four autonomous systems, AS1 to AS4, with numbered routers]
Inter-AS Routing. An inter-autonomous system routing protocol determines routing at the autonomous-system level. [Figure: AS1, AS2, AS3, AS4] At AS3: AS1 → AS1; AS2 → AS2; AS4 → AS1.

User Manual Workbench

User Manual Workbench: Create and Customize User Interfaces for Router Control. www.s-a-m.com. WorkBench User Manual, Issue 1 Revision 5. © 2017 SAM. Information and Notices. Copyright and Disclaimer: Copyright protection claimed includes all forms and matters of copyrightable material and information now allowed by statutory or judicial law or hereinafter granted, including without limitation, material generated from the software programs which are displayed on the screen such as icons, screen display looks etc. The information in this manual and the software are subject to change without notice and do not represent a commitment on the part of SAM Limited. The software described in this manual is furnished under a license agreement and cannot be reproduced or copied in any manner without prior agreement with SAM Limited, or their authorized agents. Reproduction or disassembly of embedded computer programs or algorithms is prohibited. No part of this publication can be transmitted or reproduced in any form or by any means, electronic or mechanical, including photocopy, recording or any information storage and retrieval system, without permission being granted, in writing, by the publishers or their authorized agents. SAM operates a policy of continuous improvement and development. SAM reserves the right to make changes and improvements to any of the products described in this document without prior notice. Contact Details: Customer Support. For details of our Regional Customer Support Offices and contact details please visit the SAM web site and navigate to Support/247-Support. www.s-a-m.com/support/247-support/ Customers with a support contract should call their personalized number, which can be found in their contract, and be ready to provide their contract number and details.

Reasons for Visiting Destinations Motives Are Not Motives for Visiting — Caveats and Questions for Destination Marketers Leonardo A.N

University of Massachusetts Amherst ScholarWorks@UMass Amherst Travel and Tourism Research Association: 2012 ttra International Conference Advancing Tourism Research Globally Reasons For Visiting Destinations Motives Are Not Motives For Visiting — Caveats and Questions For Destination Marketers Leonardo A.N. (Don) Dioko Tourism College, Institute for Tourism Studies, Macau Amy So Siu-Ian Faculty of Business Administration, University of Macau Rich Harrill University of South Carolina, College of Hospitality, Retail, & Sport Management Follow this and additional works at: https://scholarworks.umass.edu/ttra Dioko, Leonardo A.N. (Don); So Siu-Ian, Amy; and Harrill, Rich, "Reasons For Visiting Destinations Motives Are Not Motives For Visiting — Caveats and Questions For Destination Marketers" (2016). Travel and Tourism Research Association: Advancing Tourism Research Globally. 46. https://scholarworks.umass.edu/ttra/2012/Oral/46 This is brought to you for free and open access by ScholarWorks@UMass Amherst. It has been accepted for inclusion in Travel and Tourism Research Association: Advancing Tourism Research Globally by an authorized administrator of ScholarWorks@UMass Amherst. For more information, please contact [email protected]. Reasons For Visiting Destinations Motives Are Not Motives For Visiting — Caveats and Questions For Destination Marketers Leonardo A.N. (Don) Dioko Tourism College Institute for Tourism Studies, Macau So Siu-Ian, Amy Faculty of Business Administration University of Macau Rich Harrill University of South Carolina College of Hospitality, Retail, & Sport Management ABSTRACT Many tourism studies consider elicited reasons for undertaking a behavior (e.g., visiting a destination) as the basis from which tourist motives are inferred. Such an approach is problematic principally because it ignores a dual motivational system in which explicit as well as implicit types of motives drive behavior. -

601 Automated Pipetting System Operation

EzMate™ 401 / 601 Automated Pipetting System Operation Manual Ver. 2.2. Trademarks: Beckman®, Biomek® 3000 (Beckman Coulter Inc.); Roche®, LightCycler® 480 (Roche Group); DyNAmo™ (Finnzyme Oy); SYBR® (Molecular Probes Inc.); Invitrogen™, Platinum® (Invitrogen Corp.); MicroAmp® (Applied Biosystems); Microsoft®, Windows® 7 (Microsoft Corp.). All other trademarks are the sole property of their respective owners. Copyright Notice: No part of this manual may be reproduced or transmitted in any form or by any means, electronic or mechanical, for any purpose, without prior written permission of Arise Biotech Corp. Notice: This product only provides pipetting functionality. Arise Biotech Corp. does not guarantee the performance and results of the assay and reagent.
Table of Contents
1. Safety Precautions
2. Product Introduction
2.1 Features
2.2 Hardware Overview
2.2.1 Outlook
2.2.2 Control Notebook Computer
2.2.3 Automated Pipetting Module (APM)

The Experiential Value of Cultural Tourism Destinations Hung, Kuang-Peng; Peng, Norman; Chen, Annie

Incorporating on-site activity involvement and sense of belonging into the Mehrabian-Russell model – The experiential value of cultural tourism destinations. Hung, Kuang-peng; Peng, Norman; Chen, Annie. Published in: Tourism Management Perspectives. Publication date: 2019. Document Version: Peer reviewed version. Link to publication in ResearchOnline. Citation for published version (Harvard): Hung, K, Peng, N & Chen, A 2019, 'Incorporating on-site activity involvement and sense of belonging into the Mehrabian-Russell model – The experiential value of cultural tourism destinations', Tourism Management Perspectives, vol. 30, pp. 43-52. General rights: Copyright and moral rights for the publications made accessible in the public portal are retained by the authors and/or other copyright owners and it is a condition of accessing publications that users recognise and abide by the legal requirements associated with these rights. Take down policy: If you believe that this document breaches copyright please view our takedown policy at https://edshare.gcu.ac.uk/id/eprint/5179 for details of how to contact us. Download date: 29. Apr. 2020. Incorporating On-site Activity Involvement and Sense of Belonging into the Mehrabian-Russell Model - The Experiential Value of Cultural Tourism Destinations. 1. INTRODUCTION. Cultural products are important to post-modern society and the economy (Throsby, 2008). Among a range of different cultural products, cultural tourism destinations are significant because they provide opportunities to present a snapshot of a region’s image and history, symbolize a community’s identity, and increase the vibrancy of local economies (Chen, Peng, & Hung, 2015; Hou, Lin, & Morais, 2005; Wansborough & Mageean, 2000).

Constructive Resistance

Constructive Resistance. OPEN ACCESS. The author is grateful to the Swedish Research Council (VR), which has provided funds for this study. This book publishes results from three different VR projects: (1) Reconciliatory Heritage: Reconstructing Heritage in a Time of Violent Fragmentations (2016-03212); (2) The Futures of Genders and Sexualities. Cultural Products, Transnational Spaces and Emerging Communities (2014-47034); and (3) A Study of Civic Resistance and Its Impact on Democracy (2017-00881). Constructive Resistance: Repetitions, Emotions, and Time. Mona Lilja. ROWMAN & LITTLEFIELD, Lanham • Boulder • New York • London. Published by Rowman & Littlefield, an imprint of The Rowman & Littlefield Publishing Group, Inc., 4501 Forbes Boulevard, Suite 200, Lanham, Maryland 20706, www.rowman.com; 6 Tinworth Street, London SE11 5AL, United Kingdom. Copyright © 2021 by Mona Lilja. All photographs © Ola Kjelbye. All rights reserved. No part of this book may be reproduced in any form or by any electronic or mechanical means, including information storage and retrieval systems, without written permission from the publisher, except by a reviewer who may quote passages in a review. British Library Cataloguing in Publication Information Available. Library of Congress Control Number: 2020948897. ISBN 9781538146484 (cloth : alk. paper) | ISBN 9781538146491 (epub). ∞ ™ The paper used in this publication meets the minimum

Copyright by Di Wu 2016

Copyright by Di Wu 2016. The Dissertation Committee for Di Wu Certifies that this is the approved version of the following dissertation: Understanding Consumer Response to the Olympic Visual Identity Designs. Committee: Thomas M. Hunt, Supervisor; Matthew Bowers; Darla M. Castelli; Marlene A. Dixon; Tolga Ozyurtcu; Janice S. Todd. Understanding Consumer Response to the Olympic Visual Identity Designs by Di Wu, B.MAN.S.; M.A. Dissertation Presented to the Faculty of the Graduate School of The University of Texas at Austin in Partial Fulfillment of the Requirements for the Degree of Doctor of Philosophy. The University of Texas at Austin, December 2016. Dedication: To my beloved daughter Evelyn. Acknowledgements: I want to thank my parents, Weixing Wu and Jie Gao, for their unconditional love and support. I appreciate everything you have done for me. To my husband Andy Chan, thank you for your support through this journey! You are a great husband and father! To my baby girl Evelyn, you are the light and joy of my life. I love you all very much! Dr. Hunt, I can’t thank you enough for your help, encouragement, and advice that helped me to carry on and complete this long journey. Your advice and guidance of being a scholar and a parent mean so much to me. Thank you for believing in me. I am very fortunate to have you as my advisor. I also want to thank my dissertation committee members, Dr. Matt Bowers, Dr. Darla Castelli, Dr. Marlene Dixon, Dr. Tolga Ozyurtcu, and Dr. Jan Todd, for their encouragement and valuable advice.

Evolution 5000 E5780 and E5782 Encoder ST.RE.E10135.4

ST.RE.E10135.4 Issue 4. ENGLISH (UK). REFERENCE GUIDE. E5780 and E5782 Encoder, Build Version 3.11.0 (and later). E5780/E5782 Encoder Preliminary Pages.
ENGLISH (UK): READ THIS FIRST! If you do not understand the contents of this manual DO NOT OPERATE THIS EQUIPMENT. Also, translation into any EC official language of this manual can be made available, at your cost.
ITALIANO: LEGGERE QUESTO AVVISO PER PRIMO! Se non si capisce il contenuto del presente manuale NON UTILIZZARE L’APPARECCHIATURA. È anche disponibile la versione italiana di questo manuale, ma il costo è a carico dell’utente.
SVENSKA: LÄS DETTA FÖRST! Om Ni inte förstår informationen i denna handbok ARBETA DÅ INTE MED DENNA UTRUSTNING. En översättning till detta språk av denna handbok kan också anskaffas, på Er bekostnad.
NEDERLANDS: LEES DIT EERST! Als u de inhoud van deze handleiding niet begrijpt STEL DEZE APPARATUUR DAN NIET IN WERKING. U kunt tevens, op eigen kosten, een vertaling van deze handleiding krijgen.
PORTUGUÊS: LEIA O TEXTO ABAIXO ANTES DE MAIS NADA! Se não compreende o texto deste manual NÃO UTILIZE O EQUIPAMENTO. O utilizador poderá também obter uma tradução do manual para o português à própria custa.
SUOMI: LUE ENNEN KÄYTTÖÄ! Jos et ymmärrä käsikirjan sisältöä ÄLÄ KÄYTÄ LAITETTA. Käsikirja voidaan myös suomentaa asiakkaan kustannuksella.
FRANÇAIS: AVANT TOUT, LISEZ CE QUI SUIT! Si vous ne comprenez pas les instructions contenues dans ce manuel NE FAITES PAS FONCTIONNER CET APPAREIL. En outre, nous pouvons vous proposer, à vos frais, une version française de ce manuel.
DANSK: LÆS DETTE FØRST! Udstyret må ikke betjenes MEDMINDRE DE TIL FULDE FORSTÅR INDHOLDET AF DENNE HÅNDBOG. Vi kan også for Deres regning levere en dansk oversættelse af denne

Michigan's Wetland Wonders Sponsors and Donors

Thank You Michigan’s Wetland Wonders Sponsors & Donors Ducks Unlimited • One Wader Bag • One 12’ Jon Boat • Donation for Wetland Lynch Mob • Five Decoy Anchors Fowl Pursuit • One Browning Decoy Bag • One Dozen Full Body Gibraltar Duck Hunters • Four Camouflage Wonders Brochure Calls • Two XL Decoy Bags • Two Heavy Hauler Lanyards Goose Decoys Association • 100 Fowl Pursuit 9 DVD’s • Seven Ducks Unlimited Thermos Bottles • Two Standard Decoy Bags • One Decoy Line Remington 887 Shotguns • Three Floating Decoy Bags • One • Two Floating Decoy Bags • One Zink Power Duck Pack • Donation for Pointe Mouillee • 25 Camouflage V-muffs Stranglehold • Two Hypnotizer •Three Band Hunters “The Wetland Wonders Video Duck Call Goose Flags Perfect Storm” DVD’s • Six Hunter’s View • One Goose • Two Snow Storm 24-7 DVD’s Deluxe Lanyards Noose Goose • Three Destination X “Cross- • 12 Mallard Decoys Call roads” DVD’s Pointe Mouillee Waterfowl Festival Committee • One Blind • Donation for Pointe Mouillee • One Duck Call • Donation for Fish Point Saginaw Bay • Seven Hats • Three Complimentary Safari Club International • One Complimentary •Donation for Shiawassee River Wetland Wonders Video • One Goose Call Wetland Wonders Video Chapter • Hunter’s View Porting Services Livonia Chapter Duck Mount Wetland Wonders Video Deluxe Lanyards • Three 50%-off Porting • One Ground Hunting Blind • Donation for • 12 Mallard Decoys Services • One Handheld LED Spotlight Nayanquing • One Ranger Headlamp Point Wetland • One Wildview Game Camera Wonders Video • One Duck Call E 1 SINC 945 Frank’s GREAT OUTDOORS • One Waterfowl Mount Bluewater • One Waterfowl Mount •Seven Gift Cards Chapter • Seven Boxes of Steel Shot • Donation for Harsens Island Wetland Wonders Video Please Support our Sponsors!. -

REQUEST for QUOTATION This RFQ X Is Is Not a Small Business Set-Aside Page 1 of 62 (This Is NOT an Order) 1

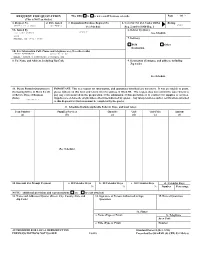

REQUEST FOR QUOTATION This RFQ X is is not a small business set-aside Page 1 Of 62 (This is NOT an Order) 1. Request No. 2. Date Issued 3. Requisition/Purchase Request No. 4. Cert For Nat Def. Under BDSA Rating DOA4 SPRDL1-21-Q-0065 2021AUG10 See Schedule Reg. 2 and/or DMS Reg. 1 5A. Issued By 6. Deliver by (Date) DLA LAND WARREN SPRDL1 See Schedule ZGAB WARREN, MI 48397-5000 7. Delivery X FOB Other Destination 5B. For Information Call: (Name and telephone no.) (No collect calls) DEREK RUTKOWSKI (586)282-3199 EMAIL: [email protected] 8. To: Name and Address, Including Zip Code 9. Destination (Consignee and address, including Zip Code) See Schedule 10. Please Furnish Quotations to IMPORTANT: This is a request for information, and quotations furnished are not offers. If you are unable to quote, the Issuing Office in Block 5A On please indicate on this form and return it to the address in Block 5B. This request does not commit the Government to or Before Close of Business pay any costs incurred in the preparation of the submission of this quotation or to contract for supplies or services. (Date) Supplies are of domestic origin unless otherwise indicated by quoter. Any interpretations and/or certifications attached 2021SEP13 to this Request for Quotation must be completed by the quoter. 11. Schedule (Include applicable Federal, State, and local taxes) Item Number Supplies/Services Quantity Unit Unit Price Amount (a) (b) (c) (d) (e) (f) (See Schedule) 12. Discount For Prompt Payment a. -

Thank You for Downloading This Free Sample of Pwtorch Newsletter #1409, Cover-Dated June 18, 2015. Please Feel Free to Link to I

Thank you for downloading this free sample of PWTorch Newsletter #1409, cover-dated June 18, 2015. Please feel free to link to it on Twitter or Facebook or message boards or any social media. Help spread the word! To become a subscriber and receive continued ongoing access to new editions of the weekly Pro Wrestling Torch Newsletter (in BOTH the PDF and All-Text formats) along with instant access to nearly 1,400 back issues dating back to the 1980s, visit this URL: www.PWTorch.com/govip You will find information on our online VIP membership which costs $99 for a year, $27.50 for three months, or $10 for a single month, recurring. It includes:
- New PWTorch Newsletters in PDF and All-Text format weekly
- Nearly 1,400 back issues of the PWTorch Newsletter dating back to the 1980s
- Ad-free access to PWTorch.com
- Ad-free access to the daily PWTorch Livecast
- Over 50 additional new VIP audio shows every month
- All new VIP audio shows part of RSS feeds that work on iTunes and various podcast apps on smart phones. Get a single feed for all shows or individual feeds for specific shows and themes.
- Retro radio shows added regularly (including 100 Pro Wrestling Focus radio shows hosted by Wade Keller from the early 1990s immediately, plus Pro Wrestling Spotlight from New York hosted by John Arezzi and Total Chaos Radio hosted by Jim Valley during the Attitude Era)
- Access to newly added VIP content via our free PWTorch App on iPhone and Android
www.PWTorch.com/govip ALSO AVAILABLE: Print Copy Home Delivery of the Pro Wrestling Torch Newsletter 12 page edition for just $99 for a full year or $10 a month.