A Simple Approach to the Automated Unit Testing of Clinical SAS® Macros

Matthew Nizol, United Biosource Corporation, Ann Arbor, MI

Total pages: 16

File type: PDF, size: 1020Kb

Recommended publications

Practical Unit Testing for Embedded Systems Part 1 PARASOFT WHITE PAPER

Table of Contents: Introduction; Basics of Unit Testing; Benefits; Critics; Practical Unit Testing in Embedded Development; The system under test; Importing uVision projects; Configure the C++test project for Unit Testing; Configure uVision project for Unit Testing; Configure results transmission; Deal with target limitations; Prepare test suite and first exemplary test case; Deploy it and collect results.

Introduction

The idea of unit testing has been around for many years. "Test early, test often" is a mantra that concerns unit testing as well. However, in practice, not many software projects have the luxury of a decent and up-to-date unit test suite. This may change, especially for embedded systems, as the demand for delivering quality software continues to grow. International standards, like IEC-61508-3, ISO/DIS-26262, or DO-178B, demand module testing for a given functional safety level. Unit testing at the module level helps to achieve this requirement. Yet, even if functional safety is not a concern, the cost of a recall, both in terms of direct expenses and in lost credibility, justifies spending a little more time and effort to ensure that our released software does not cause any unpleasant surprises.

In this article, we will show how to prepare, maintain, and benefit from setting up unit tests for a simplified simulated ASR module. We will use a Keil evaluation board MCBSTM32E with Cortex-M3 MCU, MDK-ARM with the new ULINK Pro debug and trace adapter, and Parasoft C++test Unit Testing Framework.

Parallel, Cross-Platform Unit Testing for Real-Time Embedded Systems

Tosapon Pankumhang, University of Denver. Digital Commons @ DU, Electronic Theses and Dissertations, Graduate Studies, 1-1-2017. Part of the Computer Sciences Commons.

Recommended citation: Pankumhang, Tosapon, "Parallel, Cross-Platform Unit Testing for Real-Time Embedded Systems" (2017). Electronic Theses and Dissertations. 1353. https://digitalcommons.du.edu/etd/1353

A dissertation presented to the Faculty of the Daniel Felix Ritchie School of Engineering and Computer Science, University of Denver, in partial fulfillment of the requirements for the degree Doctor of Philosophy. August 2017. Advisor: Matthew J. Rutherford. ©Copyright by Tosapon Pankumhang 2017. All Rights Reserved.

ABSTRACT

Embedded systems are used in a wide variety of applications (e.g., automotive, agricultural, home security, industrial, medical, military, and aerospace) due to their small size, low-energy consumption, and the ability to control real-time peripheral devices precisely. These systems, however, are different from each other in many aspects: processors, memory size, develop applications/OS, hardware interfaces, and software loading methods.

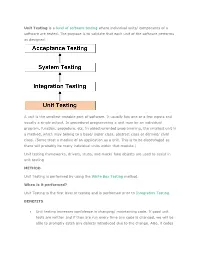

Unit Testing Is a Level of Software Testing Where Individual Units/ Components of a Software Are Tested. the Purpose Is to Valid

Unit testing is a level of software testing where individual units or components of a piece of software are tested. The purpose is to validate that each unit of the software performs as designed. A unit is the smallest testable part of software. It usually has one or a few inputs and usually a single output. In procedural programming a unit may be an individual program, function, procedure, etc. In object-oriented programming, the smallest unit is a method, which may belong to a base/super class, abstract class or derived/child class. (Some treat a module of an application as a unit. This is to be discouraged as there will probably be many individual units within that module.) Unit testing frameworks, drivers, stubs, and mock/fake objects are used to assist in unit testing.

METHOD

Unit testing is performed by using the White Box Testing method.

When is it performed? Unit testing is the first level of testing and is performed prior to Integration Testing.

BENEFITS

Unit testing increases confidence in changing and maintaining code. If good unit tests are written and if they are run every time any code is changed, we will be able to promptly catch any defects introduced by the change. Also, if code has already been made less interdependent to make unit testing possible, the unintended impact of changes to any code is smaller.

Code is more reusable. In order to make unit testing possible, code needs to be modular. This means that code is easier to reuse.

Development is faster. How? If you do not have unit testing in place, you write your code and perform that fuzzy ‘developer test’ (you set some breakpoints, fire up the GUI, provide a few inputs that hopefully hit your code, and hope that you are all set). If you have unit testing in place, you write the test, write the code and run the test.
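The stubs and mock objects mentioned in this excerpt can be illustrated with a minimal Python sketch. The function `shipping_cost` and its `weight_lookup` dependency are hypothetical names invented for this illustration and do not come from the excerpt; only `unittest.mock.Mock` is a real standard-library class.

```python
from unittest.mock import Mock


def shipping_cost(order_id, weight_lookup, rate_per_kg=2.0):
    """Hypothetical unit under test: price an order from a looked-up weight."""
    weight_kg = weight_lookup(order_id)
    if weight_kg <= 0:
        raise ValueError("weight must be positive")
    return weight_kg * rate_per_kg


def test_shipping_cost_uses_looked_up_weight():
    # The stub stands in for a real database or service call, so only the
    # unit's own logic is exercised.
    stub_lookup = Mock(return_value=70.0)
    assert shipping_cost("A001", stub_lookup) == 140.0
    # The mock also records how it was called, which the test can verify.
    stub_lookup.assert_called_once_with("A001")


test_shipping_cost_uses_looked_up_weight()
```

Passing the dependency in as a parameter is one common way to make a unit testable in isolation; it is a design choice of this sketch, not something the excerpt prescribes.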

Including Jquery Is Not an Answer! - Design, Techniques and Tools for Larger JS Apps

Karl Krukow, Trifork A/S (http://www.trifork.com), Headquarters Margrethepladsen 4, DK-8000 Århus C, Denmark. Trifork Geek Night, March 15th, 2011, Trifork, Aarhus.

What is the question, then? Does your JavaScript look like this? What about your server side code?

Non-functional requirements for the server side:

- Maintainability and extensibility
- Technical quality, e.g.
  - modularity, reuse, separation of concerns
  - automated testing
  - continuous integration/deployment
  - tool support (static analysis, compilers, IDEs)
- Productivity
- Performant
- Appropriate architecture and design
- …

Why so different?

- "Front-end" programming isn't 'real' programming?
- JavaScript isn't a 'real' language?
  - Browsers are impossible... That's just the way it is...

The problem is only going to get worse!

- JS apps will get larger and more complex.
- More logic and computation on the client.
- HTML5 and mobile web will require more programming.
- We have to test and maintain these apps.
- We will have harder requirements for performance (e.g. mobile).

NO. Including jQuery is NOT an answer to these problems. (Neither is any other js library.) You need to do more.

Improving quality on client side code

- The goal of this talk is to motivate and help you improve the technical quality of your JavaScript projects.
- Three main points. To improve non-functional quality:
  - you need to understand the language and host APIs.
  - you need design, structure and file-organization as much (or even more) for JavaScript as you do in other languages, e.g.

Testing and Debugging Tools for Reproducible Research

Karl Broman, Biostatistics & Medical Informatics, UW–Madison. kbroman.org, github.com/kbroman, @kwbroman. Course web: kbroman.org/Tools4RR

We spend a lot of time debugging. We’d spend a lot less time if we tested our code properly. We want to get the right answers. We can’t be sure that we’ve done so without testing our code. Set up a formal testing system, so that you can be confident in your code, and so that problems are identified and corrected early. Even with a careful testing system, you’ll still spend time debugging. Debugging can be frustrating, but the right tools and skills can speed the process.

"I tried it, and it worked."

This is about the limit of most programmers’ testing efforts. But: Does it still work? Can you reproduce what you did? With what variety of inputs did you try?

"It's not that we don't test our code, it's that we don't store our tests so they can be re-run automatically." – Hadley Wickham, R Journal 3(1):5–10, 2011

This is from Hadley’s paper about his testthat package.

Types of tests

- Check inputs: stop if the inputs aren't as expected.
- Unit tests: for each small function, does it give the right results in specific cases?
- Integration tests: check that larger multi-function tasks are working.
- Regression tests: compare output to saved results, to check that things that worked continue working.

Your first line of defense should be to include checks of the inputs to a function: If they don’t meet your specifications, you should issue an error or warning.
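Three of the test types listed in this excerpt (input checks, unit tests, and regression tests) can be sketched in a few lines. The course material itself refers to R and the testthat package; the sketch below uses Python instead, and the names `mean` and `check_regression`, as well as the choice of JSON for saved results, are assumptions made for illustration only.

```python
import json
import os


def mean(values):
    """Return the arithmetic mean, checking the inputs first."""
    # Input check: stop early if the inputs aren't as expected.
    if len(values) == 0:
        raise ValueError("values must not be empty")
    return sum(values) / len(values)


def test_mean_unit():
    # Unit test: a small function gives the right result in specific cases.
    assert mean([1, 2, 3]) == 2
    assert mean([5]) == 5


def test_mean_rejects_empty():
    # The input check itself deserves a test.
    try:
        mean([])
    except ValueError:
        return
    raise AssertionError("expected ValueError for empty input")


def check_regression(path, inputs):
    """Regression test: compare current output to results saved at `path`."""
    current = [mean(x) for x in inputs]
    if not os.path.exists(path):
        # First run: store the results so later runs can be compared.
        with open(path, "w") as fh:
            json.dump(current, fh)
        return True
    with open(path) as fh:
        return json.load(fh) == current


test_mean_unit()
test_mean_rejects_empty()
```

Because the tests are stored as functions rather than typed interactively, they can be re-run automatically after every change, which is the point of the Wickham quote in the excerpt.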

The Art, Science, and Engineering of Fuzzing: a Survey

Valentin J.M. Manès, HyungSeok Han, Choongwoo Han, Sang Kil Cha, Manuel Egele, Edward J. Schwartz, and Maverick Woo

Abstract: Among the many software vulnerability discovery techniques available today, fuzzing has remained highly popular due to its conceptual simplicity, its low barrier to deployment, and its vast amount of empirical evidence in discovering real-world software vulnerabilities. At a high level, fuzzing refers to a process of repeatedly running a program with generated inputs that may be syntactically or semantically malformed. While researchers and practitioners alike have invested a large and diverse effort towards improving fuzzing in recent years, this surge of work has also made it difficult to gain a comprehensive and coherent view of fuzzing. To help preserve and bring coherence to the vast literature of fuzzing, this paper presents a unified, general-purpose model of fuzzing together with a taxonomy of the current fuzzing literature. We methodically explore the design decisions at every stage of our model fuzzer by surveying the related literature and innovations in the art, science, and engineering that make modern-day fuzzers effective.

Index Terms: software security, automated software testing, fuzzing.

1 INTRODUCTION

Ever since its introduction in the early 1990s [152], fuzzing has remained one of the most widely-deployed techniques to discover software security vulnerabilities. At a high level, fuzzing refers to a process of repeatedly running a program with generated inputs that may be syntactically or seman-

Figure 1 on p. 5) and an increasing number of fuzzing studies appear at major security conferences (e.g. [225], [52], [37], [176], [83], [239]). In addition, the blogosphere is filled with many success stories of fuzzing, some of which also contain what we consider to be gems that warrant a permanent place in the literature.

Background Goal Unit Testing Regression Testing Automation

Streamlining a Workflow. Jeffrey Falgout, Daniel Wilkey. Science, Discovery, and the Universe; Bloomberg L.P.; Computer Science and Mathematics Major.

Background

Bloomberg L.P.'s main product is a desktop application which provides financial tools. Bloomberg L.P. employs many computer programmers to maintain, update, and add new features to this product.

Goal

Many of the tasks that a programmer has to perform as part of a team can be automated. The goal of this project was to work with a team to automate tasks where possible and make them easier where impossible.

Regression Testing

Another useful tool to have in sufficiently complex systems is a suite of regression tests. Regression testing allows you to test very high level behavior which can detect low level bugs. The product the code base was implementing has a record/playback feature. This was leveraged to create a regression testing framework. This framework leveraged the unit testing framework wherever possible. At various points during the playback, callback methods would be invoked. These methods could have testing logic to make sure the application was in the

Jenkins instance would begin running all unit and regression tests. This Jenkins job would then report the results on the Phabricator review and signal the author in an appropriate way. The previous workflow required manual intervention to run tests. This new workflow only requires the developer to create a feature branch in order for all tests to be run automatically.

Figure caption: An example of the new workflow, where a Jenkins instance will automatically run tests and update the peer review.

Introduction to Unit Testing

INTRODUCTION TO UNIT TESTING

In C#, you can think of a unit as a method. You thus write a unit test by writing something that tests a method. For example, let’s say that we had the aforementioned Calculator class and that it contained an Add(int, int) method. Let’s say that you want to write some code to test that method.

```csharp
using System;

// Calculator is the class referred to in the text; it is not shown in this excerpt.
public class CalculatorTester
{
    public void TestAdd()
    {
        var calculator = new Calculator();
        // Hand-rolled check: print the outcome instead of using a framework.
        if (calculator.Add(2, 2) == 4)
            Console.WriteLine("Success");
        else
            Console.WriteLine("Failure");
    }
}
```

Let’s take a look now at what some code written for an actual unit test framework (MS Test) looks like.

```csharp
using Microsoft.VisualStudio.TestTools.UnitTesting;

[TestClass]
public class CalculatorTests
{
    [TestMethod]
    public void TestMethod1()
    {
        var calculator = new Calculator();
        // The framework records a failure if the values differ.
        Assert.AreEqual(4, calculator.Add(2, 2));
    }
}
```

Notice the attributes, TestClass and TestMethod. Those exist simply to tell the unit test framework to pay attention to them when executing the unit test suite. When you want to get results, you invoke the unit test runner, and it executes all methods decorated like this, compiling the results into a visually pleasing report that you can view.

Let’s take a look at your top 3 unit test framework options for C#.

MSTest/Visual Studio

MSTest was actually the name of a command line tool for executing tests. MSTest ships with Visual Studio, so you have it right out of the box, in your IDE, without doing anything. With MSTest, getting set up is as easy as File->New Project. Then, when you write a test, you can right click on it and execute, having your result displayed in the IDE. One of the most frequent knocks on MSTest is that of performance.

Unit Testing Or What Can We Learn from Tech Startups

Unit Testing or what can we learn from tech startups. 2014-04-01, Saulius Lukauskas.

Outline

- Motivation
- Hierarchy of Testing
- Short tutorial of unit testing in Python
- Two rules of writing testable code
- Kickstarting

Software Development Workflow: Coding, Testing, Production

This is the standard software workflow which most people are familiar with. Unfortunately, when people talk about coding, only the coding and production parts are mentioned, while the testing part is often not spoken about.

"The better we get at Coding the less problems there will be with our software"

There is this misconception in software development, an arrogance one may say, that good programmers make good code that has few problems.

"The better we get at Testing the less problems there will be with our software"

In reality, people who are good at testing, not at programming per se, make good software. Good programmers just make code that is easier to test.

Software Development Workflow: Putting bugs in, Debugging, Production

This is due to the fact that the development cycle often looks like this.

Cost of a software bug

The cost of having a bug in your software increases with each step of the cycle.

"My program crashed when I clicked “run”"

The bugs at the coding stage are very easy to spot; the compiler often warns about them. They are also so easy to fix that most of us do not even consider them “bugs” any more.

"I tried this sample model and the probabilities did not sum to 1"

Bugs at the quality assurance (testing) stage are a bit harder to spot, often require running your software for a good few minutes, checking the output carefully.

Devops Advantages for Testing

INTEGRATION AND INTEROPERABILITY

DevOps Advantages for Testing: Increasing Quality through Continuous Delivery

Gene Gotimer, Coveros
Thomas Stiehm, Coveros

Abstract. DevOps and continuous delivery can improve software quality and reduce risk by offering opportunities for testing and some non-obvious benefits to the software development cycle. By taking advantage of cloud computing and automated deployment, throughput can be improved while increasing the amount of testing and ensuring high quality. This article points out some of these opportunities and offers suggestions for making the most of them.

Labs found that teams using DevOps experience “60 times fewer failures and recover from failures 168 times faster than their lower-performing peers. They also deploy 30 times more frequently with 200 times shorter lead times [2].”

The choices of tools and frameworks for all of this automation has grown dramatically in recent years, with options available for almost any operating system, any programming language, open source or commercial, hosted or as-a-service. Active communities surround many of these tools, making it easy to find help to start using them and to resolve issues.

Continuous Integration

Building a CD process starts with building a Continuous Integration (CI) process. In CI developers frequently integrate other developers’ code changes, often multiple times a day. The integrated code is committed to source control then automatically built and unit tested. Developers get into the rhythm of a rapid “edit-compile-test” feedback loop. Integration errors are discovered quickly, usually within minutes or hours of the integration being performed, while the changes are fresh on the developers’ minds. A CI engine, such as Jenkins [3], is often used to schedule

Guidelines on Minimum Standards for Developer Verification of Software

Paul E. Black, Barbara Guttman, Vadim Okun. Software and Systems Division, Information Technology Laboratory. July 2021.

Abstract

Executive Order (EO) 14028, Improving the Nation’s Cybersecurity, 12 May 2021, directs the National Institute of Standards and Technology (NIST) to recommend minimum standards for software testing within 60 days. This document describes eleven recommendations for software verification techniques as well as providing supplemental information about the techniques and references for further information. It recommends the following techniques:

- Threat modeling to look for design-level security issues
- Automated testing for consistency and to minimize human effort
- Static code scanning to look for top bugs
- Heuristic tools to look for possible hardcoded secrets
- Use of built-in checks and protections
- “Black box” test cases
- Code-based structural test cases
- Historical test cases
- Fuzzing
- Web app scanners, if applicable
- Address included code (libraries, packages, services)

The document does not address the totality of software verification, but instead recommends techniques that are broadly applicable and form the minimum standards. The document was developed by NIST in consultation with the National Security Agency. Additionally, we received input from numerous outside organizations through papers submitted to a NIST workshop on the Executive Order held in early June, 2021 and discussion at the workshop as well as follow up with several of the submitters.

Keywords: software assurance; verification; testing; static analysis; fuzzing; code review; software security.

Disclaimer

Any mention of commercial products or reference to commercial organizations is for information only; it does not imply recommendation or endorsement by NIST, nor is it intended to imply that the products mentioned are necessarily the best available for the purpose.

Unit Testing of Java EE Web Applications

CHRISTIAN CASTILLO and MUSTAFA HAMRA. KTH Information and Communication Technology. Bachelor of Science Thesis, Stockholm, Sweden 2014. TRITA-ICT-EX-2014:55.

Bachelor of Science Thesis ICT 2013:3 TIDAB 009. KTH Information and Communication Technology, Computer Engineering, SE-164 40 KISTA.

Degree project ICT 2013:3 TIDAB 009: Analysis of Testing Frameworks for Java EE Applications (original title: Analys av testramverk för Java EE Applikationer). Christian Castillo, Mustafa Hamra. Approved: 2014-May-09. Examiner: Leif Lindbäck. Supervisor: Leif Lindbäck. Commissioner: KTH/ICT/SCS. Contact person: Leif Lindbäck.

Summary (translated from the Swedish Sammanfattning)

The goal of this report is to evaluate the Mockito and Selenium testing frameworks to see whether they are well suited to beginners who are to unit test and integration test existing Java EE web applications. The report is also meant to support the learning process by providing students in the course IV1201 – Architecture and Design of Global Applications with user-friendly guides.

Bachelor thesis ICT 2014:6 TIDAB 009: Unit Testing of Java EE web applications. Christian Castillo, Mustafa Hamra. Approved: 2014-May-09. Examiner: Leif Lindbäck. Supervisor: Leif Lindbäck. Commissioner: KTH/ICT/SCS. Contact person: Leif Lindbäck.

Abstract

This report determines if the Mockito and Selenium testing frameworks are well suited for novice users when unit- and integration testing existing Java EE Web applications in the course IV1201 – Design of Global Applications. The report also provides user-friendly tutorials to help with the learning process.

PREFACE

The report is a Bachelor Thesis that has been written in collaboration with the Department of Software and Computer Systems (SCS), School of Information and Communication Technology (ICT), Royal Institute of Technology (KTH).