Accelerate Devops with Continuous Integration and Simulation a How-To Guide for Embedded Development

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Core Elements of Continuous Testing

WHITE PAPER CORE ELEMENTS OF CONTINUOUS TESTING Today’s modern development disciplines -- whether Agile, Continuous Integration (CI) or Continuous Delivery (CD) -- have completely transformed how teams develop and deliver applications. Companies that need to compete in today’s fast-paced digital economy must also transform how they test. Successful teams know the secret sauce to delivering high quality digital experiences fast is continuous testing. This paper will define continuous testing, explain what it is, why it’s important, and the core elements and tactical changes development and QA teams need to make in order to succeed at this emerging practice. TABLE OF CONTENTS 3 What is Continuous Testing? 6 Tactical Engineering Considerations 3 Why Continuous Testing? 7 Benefits of Continuous Testing 4 Core Elements of Continuous Testing WHAT IS CONTINUOUS TESTING? Continuous testing is the practice of executing automated tests throughout the software development cycle. It’s more than just automated testing; it’s applying the right level of automation at each stage in the development process. Unlike legacy testing methods that occur at the end of the development cycle, continuous testing occurs at multiple stages, including development, integration, pre-release, and in production. Continuous testing ensures that bugs are caught and fixed far earlier in the development process, improving overall quality while saving significant time and money. WHY CONTINUOUS TESTING? Continuous testing is a critical requirement for organizations that are shifting left towards CI or CD, both modern development practices that ensure faster time to market. When automated testing is coupled with a CI server, tests can instantly be kicked off with every build, and alerts with passing or failing test results can be delivered directly to the development team in real time. -

Rugby - a Process Model for Continuous Software Engineering

INSTITUT FUR¨ INFORMATIK DER TECHNISCHEN UNIVERSITAT¨ MUNCHEN¨ Forschungs- und Lehreinheit I Angewandte Softwaretechnik Rugby - A Process Model for Continuous Software Engineering Stephan Tobias Krusche Vollstandiger¨ Abdruck der von der Fakultat¨ fur¨ Informatik der Technischen Universitat¨ Munchen¨ zur Erlangung des akademischen Grades eines Doktors der Naturwissenschaften (Dr. rer. nat.) genehmigten Dissertation. Vorsitzender: Univ.-Prof. Dr. Helmut Seidl Prufer¨ der Dissertation: 1. Univ.-Prof. Bernd Brugge,¨ Ph.D. 2. Prof. Dr. Jurgen¨ Borstler,¨ Blekinge Institute of Technology, Karlskrona, Schweden Die Dissertation wurde am 28.01.2016 bei der Technischen Universitat¨ Munchen¨ eingereicht und durch die Fakultat¨ fur¨ Informatik am 29.02.2016 angenommen. Abstract Software is developed in increasingly dynamic environments. Organizations need the capability to deal with uncertainty and to react to unexpected changes in require- ments and technologies. Agile methods already improve the flexibility towards changes and with the emergence of continuous delivery, regular feedback loops have become possible. The abilities to maintain high code quality through reviews, to regularly re- lease software, and to collect and prioritize user feedback, are necessary for con- tinuous software engineering. However, there exists no uniform process model that handles the increasing number of reviews, releases and feedback reports. In this dissertation, we describe Rugby, a process model for continuous software en- gineering that is based on a meta model, which treats development activities as parallel workflows and which allows tailoring, customization and extension. Rugby includes a change model and treats changes as events that activate workflows. It integrates re- view management, release management, and feedback management as workflows. As a consequence, Rugby handles the increasing number of reviews, releases and feedback and at the same time decreases their size and effort. -

Best Practices for Systems Integration

Best Practices for Systems Integration November 2011 Presented by Pete Houser Manager for Engineering & Product Excellence Northrop Grumman Corporation Copyright © 2011 Northrop Grumman Systems Corporation. All rights reserved. Log #DSD-11-78 Systems Integration Definition • Systems Integration (SI) is one aspect of the Systems Engineering, Integration, and Test (SEIT) process. SI must be integrated within the overall SEIT structure. • Systems Integration is the process of: – Assembling the constituent parts of a system in a logical, cost-effective way, comprehensively checking system execution (all nominal & exceptional paths), and including a full functional check-out. • Systems Test is the process of: – Verifying that the system meets its requirements, and – Validating that the system performs in accordance with the customer/user expectations • Across Dod Programs, systems integration experiences have been erratic (at best). In many cases: – Programs do not define dedicated Integration Engineers. – Immature system is passed to the Test organization for concurrent Integration and Test. – Program budget is exceeded because Integration was not separately estimated. Copyright © 2011 Northrop Grumman Systems Corporation. All rights reserved. Log #DSD-11-78 Systems Integration Issues • When executed as a distinct process, Systems Integration has historically followed the “big bang” model shown below. – All of the components arrive more-or-less simultaneously (usually late) in the Integration Lab. – The Integration engineers arrive more-or-less -

Practical Unit Testing for Embedded Systems Part 1 PARASOFT WHITE PAPER

Practical Unit Testing for Embedded Systems Part 1 PARASOFT WHITE PAPER Table of Contents Introduction 2 Basics of Unit Testing 2 Benefits 2 Critics 3 Practical Unit Testing in Embedded Development 3 The system under test 3 Importing uVision projects 4 Configure the C++test project for Unit Testing 6 Configure uVision project for Unit Testing 8 Configure results transmission 9 Deal with target limitations 10 Prepare test suite and first exemplary test case 12 Deploy it and collect results 12 Page 1 www.parasoft.com Introduction The idea of unit testing has been around for many years. "Test early, test often" is a mantra that concerns unit testing as well. However, in practice, not many software projects have the luxury of a decent and up-to-date unit test suite. This may change, especially for embedded systems, as the demand for delivering quality software continues to grow. International standards, like IEC-61508-3, ISO/DIS-26262, or DO-178B, demand module testing for a given functional safety level. Unit testing at the module level helps to achieve this requirement. Yet, even if functional safety is not a concern, the cost of a recall—both in terms of direct expenses and in lost credibility—justifies spending a little more time and effort to ensure that our released software does not cause any unpleasant surprises. In this article, we will show how to prepare, maintain, and benefit from setting up unit tests for a simplified simulated ASR module. We will use a Keil evaluation board MCBSTM32E with Cortex-M3 MCU, MDK-ARM with the new ULINK Pro debug and trace adapter, and Parasoft C++test Unit Testing Framework. -

Parallel, Cross-Platform Unit Testing for Real-Time Embedded Systems

University of Denver Digital Commons @ DU Electronic Theses and Dissertations Graduate Studies 1-1-2017 Parallel, Cross-Platform Unit Testing for Real-Time Embedded Systems Tosapon Pankumhang University of Denver Follow this and additional works at: https://digitalcommons.du.edu/etd Part of the Computer Sciences Commons Recommended Citation Pankumhang, Tosapon, "Parallel, Cross-Platform Unit Testing for Real-Time Embedded Systems" (2017). Electronic Theses and Dissertations. 1353. https://digitalcommons.du.edu/etd/1353 This Dissertation is brought to you for free and open access by the Graduate Studies at Digital Commons @ DU. It has been accepted for inclusion in Electronic Theses and Dissertations by an authorized administrator of Digital Commons @ DU. For more information, please contact [email protected],[email protected]. PARALLEL, CROSS-PLATFORM UNIT TESTING FOR REAL-TIME EMBEDDED SYSTEMS __________ A Dissertation Presented to the Faculty of the Daniel Felix Ritchie School of Engineering and Computer Science University of Denver __________ In Partial Fulfillment of the Requirements for the Degree Doctor of Philosophy __________ by Tosapon Pankumhang August 2017 Advisor: Matthew J. Rutherford ©Copyright by Tosapon Pankumhang 2017 All Rights Reserved Author: Tosapon Pankumhang Title: PARALLEL, CROSS-PLATFORM UNIT TESTING FOR REAL-TIME EMBEDDED SYSTEMS Advisor: Matthew J. Rutherford Degree Date: August 2017 ABSTRACT Embedded systems are used in a wide variety of applications (e.g., automotive, agricultural, home security, industrial, medical, military, and aerospace) due to their small size, low-energy consumption, and the ability to control real-time peripheral devices precisely. These systems, however, are different from each other in many aspects: processors, memory size, develop applications/OS, hardware interfaces, and software loading methods. -

Towards Embedded System Agile Development Challenging Verification, Validation and Accreditation

Towards Embedded System Agile Development Challenging Verification, Validation and Accreditation : Application in a Healthcare Company Clément Duffau, Bartosz Grabiec, Mireille Blay-Fornarino To cite this version: Clément Duffau, Bartosz Grabiec, Mireille Blay-Fornarino. Towards Embedded System Agile Develop- ment Challenging Verification, Validation and Accreditation : Application in a Healthcare Company. ISSRE 2017- IEEE International Symposium on Software Reliability Engineering, Oct 2017, Toulouse, France. pp.1-4. hal-01678815 HAL Id: hal-01678815 https://hal.archives-ouvertes.fr/hal-01678815 Submitted on 9 Jan 2018 HAL is a multi-disciplinary open access L’archive ouverte pluridisciplinaire HAL, est archive for the deposit and dissemination of sci- destinée au dépôt et à la diffusion de documents entific research documents, whether they are pub- scientifiques de niveau recherche, publiés ou non, lished or not. The documents may come from émanant des établissements d’enseignement et de teaching and research institutions in France or recherche français ou étrangers, des laboratoires abroad, or from public or private research centers. publics ou privés. Towards Embedded System Agile Development Challenging Verification, Validation and Accreditation Application in a Healthcare Company Clement´ Duffau Bartosz Grabiec Mireille Blay-Fornarino AXONIC and I3S AXONIC I3S Universite´ Coteˆ d’Azur, CNRS Sophia Antipolis, France Universite´ Coteˆ d’Azur, CNRS Sophia Antipolis, France [email protected] Sophia Antipolis, France [email protected] [email protected] Abstract—When Agile development meets critical embedded to survive. Moreover, with limited human resources, quality systems, verification, validation and accreditation activities are improvement with Agility usage remains to be demonstrated. impacted. Challenges such as tests increase or accreditation The remainder of this paper is organized as follows. -

Leading Practice: Test Strategy and Approach in Agile Projects

CA SERVICES | LEADING PRACTICE Leading Practice: Test Strategy and Approach in Agile Projects Abstract This document provides best practices on how to strategize testing CA Project and Portfolio Management (CA PPM) in an agile project. The document does not include specific test cases; the list of test cases and steps for each test case are provided in a separate document. This document should be used by the agile project team that is planning the testing activities, and by end users who perform user acceptance testing (UAT). Concepts Concept Description Test Approach Defines testing strategy, roles and responsibilities of various team members, and test types. Testing Environments Outlines which testing is carried out in which environment. Testing Automation and Tools Addresses test management and automation tools required for test execution. Risk Analysis Defines the approach for risk identification and plans to mitigate risks as well as a contingency plan. Test Planning and Execution Defines the approach to plan the test cases, test scripts, and execution. Review and Approval Lists individuals who should review, approve and sign off on test results. Test Approach The test approach defines testing strategy, roles and responsibilities of various team members, and the test types. The first step is to define the testing strategy. It should describe how and when the testing will be conducted, who will do the testing, the type of testing being conducted, features being tested, environment(s) where the testing takes place, what testing tools are used, and how are defects tracked and managed. The testing strategy should be prepared by the agile core team. -

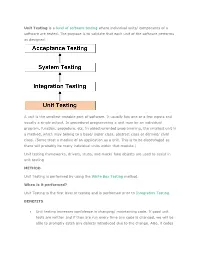

Unit Testing Is a Level of Software Testing Where Individual Units/ Components of a Software Are Tested. the Purpose Is to Valid

Unit Testing is a level of software testing where individual units/ components of a software are tested. The purpose is to validate that each unit of the software performs as designed. A unit is the smallest testable part of software. It usually has one or a few inputs and usually a single output. In procedural programming a unit may be an individual program, function, procedure, etc. In object-oriented programming, the smallest unit is a method, which may belong to a base/ super class, abstract class or derived/ child class. (Some treat a module of an application as a unit. This is to be discouraged as there will probably be many individual units within that module.) Unit testing frameworks, drivers, stubs, and mock/ fake objects are used to assist in unit testing. METHOD Unit Testing is performed by using the White Box Testing method. When is it performed? Unit Testing is the first level of testing and is performed prior to Integration Testing. BENEFITS Unit testing increases confidence in changing/ maintaining code. If good unit tests are written and if they are run every time any code is changed, we will be able to promptly catch any defects introduced due to the change. Also, if codes are already made less interdependent to make unit testing possible, the unintended impact of changes to any code is less. Codes are more reusable. In order to make unit testing possible, codes need to be modular. This means that codes are easier to reuse. Development is faster. How? If you do not have unit testing in place, you write your code and perform that fuzzy ‘developer test’ (You set some breakpoints, fire up the GUI, provide a few inputs that hopefully hit your code and hope that you are all set.) If you have unit testing in place, you write the test, write the code and run the test. -

Including Jquery Is Not an Answer! - Design, Techniques and Tools for Larger JS Apps

Including jQuery is not an answer! - Design, techniques and tools for larger JS apps Trifork A/S, Headquarters Margrethepladsen 4, DK-8000 Århus C, Denmark [email protected] Karl Krukow http://www.trifork.com Trifork Geek Night March 15st, 2011, Trifork, Aarhus What is the question, then? Does your JavaScript look like this? 2 3 What about your server side code? 4 5 Non-functional requirements for the Server-side . Maintainability and extensibility . Technical quality – e.g. modularity, reuse, separation of concerns – automated testing – continuous integration/deployment – Tool support (static analysis, compilers, IDEs) . Productivity . Performant . Appropriate architecture and design . … 6 Why so different? . “Front-end” programming isn't 'real' programming? . JavaScript isn't a 'real' language? – Browsers are impossible... That's just the way it is... The problem is only going to get worse! ● JS apps will get larger and more complex. ● More logic and computation on the client. ● HTML5 and mobile web will require more programming. ● We have to test and maintain these apps. ● We will have harder requirements for performance (e.g. mobile). 7 8 NO Including jQuery is NOT an answer to these problems. (Neither is any other js library) You need to do more. 9 Improving quality on client side code . The goal of this talk is to motivate and help you improve the technical quality of your JavaScript projects . Three main points. To improve non-functional quality: – you need to understand the language and host APIs. – you need design, structure and file-organization as much (or even more) for JavaScript as you do in other languages, e.g. -

Testing and Debugging Tools for Reproducible Research

Testing and debugging Tools for Reproducible Research Karl Broman Biostatistics & Medical Informatics, UW–Madison kbroman.org github.com/kbroman @kwbroman Course web: kbroman.org/Tools4RR We spend a lot of time debugging. We’d spend a lot less time if we tested our code properly. We want to get the right answers. We can’t be sure that we’ve done so without testing our code. Set up a formal testing system, so that you can be confident in your code, and so that problems are identified and corrected early. Even with a careful testing system, you’ll still spend time debugging. Debugging can be frustrating, but the right tools and skills can speed the process. "I tried it, and it worked." 2 This is about the limit of most programmers’ testing efforts. But: Does it still work? Can you reproduce what you did? With what variety of inputs did you try? "It's not that we don't test our code, it's that we don't store our tests so they can be re-run automatically." – Hadley Wickham R Journal 3(1):5–10, 2011 3 This is from Hadley’s paper about his testthat package. Types of tests ▶ Check inputs – Stop if the inputs aren't as expected. ▶ Unit tests – For each small function: does it give the right results in specific cases? ▶ Integration tests – Check that larger multi-function tasks are working. ▶ Regression tests – Compare output to saved results, to check that things that worked continue working. 4 Your first line of defense should be to include checks of the inputs to a function: If they don’t meet your specifications, you should issue an error or warning. -

Model and Tool Integration in High Level Design of Embedded Systems

Model and Tool Integration in High Level Design of Embedded Systems JIANLIN SHI Licentiate thesis TRITA – MMK 2007:10 Department of Machine Design ISSN 1400-1179 Royal Institute of Technology ISRN/KTH/MMK/R-07/10-SE SE-100 44 Stockholm TRITA – MMK 2007:10 ISSN 1400-1179 ISRN/KTH/MMK/R-07/10-SE Model and Tool Integration in High Level Design of Embedded Systems Jianlin Shi Licentiate thesis Academic thesis, which with the approval of Kungliga Tekniska Högskolan, will be presented for public review in fulfilment of the requirements for a Licentiate of Engineering in Machine Design. The public review is held at Kungliga Tekniska Högskolan, Brinellvägen 83, A425 at 2007-12-20. Mechatronics Lab TRITA - MMK 2007:10 Department of Machine Design ISSN 1400 -1179 Royal Institute of Technology ISRN/KTH/MMK/R-07/10-SE S-100 44 Stockholm Document type Date SWEDEN Licentiate Thesis 2007-12-20 Author(s) Supervisor(s) Jianlin Shi Martin Törngren, Dejiu Chen ([email protected]) Sponsor(s) Title SSF (through the SAVE and SAVE++ projects), VINNOVA (through the Model and Tool Integration in High Level Design of Modcomp project), and the European Embedded Systems Commission (through the ATESST project) Abstract The development of advanced embedded systems requires a systematic approach as well as advanced tool support in dealing with their increasing complexity. This complexity is due to the increasing functionality that is implemented in embedded systems and stringent (and conflicting) requirements placed upon such systems from various stakeholders. The corresponding system development involves several specialists employing different modeling languages and tools. -

Chap 3. Test Models and Strategies 3.5 Test Integration and Automation 1

Chap 3. Test Models and Strategies 3.5 Test Integration and Automation 1. Introduction 2. Integration Testing 3. System Testing 4. Test Automation Appendix: JUnit Overview 1 1. Introduction -A system is composed of components. System of software components can be defined at any physical scope. Component System Typical intercomponent interfaces (locus of (Focus of Integration) (Scope of integration faults) Integration) Method Class Instance variables Intraclass messages Class Cluster Intraclass messages Cluster Subsystem Interclass messages Interpackage messages Subsystem System Inteprocess communication Remote procedure call ORB services, OS services -Integration test design is concerned with several primary questions: 1. Which components and interfaces should be exercised? 2. In what sequence will component interfaces be exercised? 2 3. Which test design technique should be used to exercise each interface? -Integration testing is a search for component faults that cause intercomponent failures. -System scope testing is a search for faults that lead to a failure to meet a system scope responsibility. ÷System scope testing cannot be done unless components interoperate sufficiently well to exercise system scope responsibilities. -Effective testing at system scope requires a concrete and testable system-level specification. ÷System test cases must be derived from some kind of functional specification. Traditionally, user documentation, product literature, line-item narrative requirements, and system scope models have been used. 3 2. Integration Testing -Unit testing focuses on individual components. Once faults in each component have been removed and the test cases do not reveal any new fault, components are ready to be integrated into larger subsystems. -Integration testing detects faults that have not been detected during unit testing, by focusing on small groups of components.