Entropy Changes in the Clustering of Galaxies in an Expanding Universe 1Tabasum Masood, 1, 2 Naseer Iqbal, 1 Mohammad Shafi Khan



Recommended publications


On Entropy, Information, and Conservation of Information

Entropy, Article. On Entropy, Information, and Conservation of Information. Yunus A. Çengel, Department of Mechanical Engineering, University of Nevada, Reno, NV 89557, USA; [email protected]. Abstract: The term entropy is used with different meanings in different contexts, sometimes in contradictory ways, resulting in misunderstandings and confusion. The root cause of the problem is the close resemblance of the defining mathematical expressions of entropy in statistical thermodynamics and information in the communications field, also called entropy, differing only by a constant factor with the unit 'J/K' in thermodynamics and 'bits' in information theory. The thermodynamic property entropy is closely associated with the physical quantities of thermal energy and temperature, while the entropy used in the communications field is a mathematical abstraction based on probabilities of messages. The terms information and entropy are often used interchangeably in several branches of science. This practice gives rise to the phrase conservation of entropy in the sense of conservation of information, which is in contradiction to the fundamental increase of entropy principle in thermodynamics as an expression of the second law. The aim of this paper is to clarify matters and eliminate confusion by putting things into their rightful places within their domains. The notion of conservation of information is also put into a proper perspective. Keywords: entropy; information; conservation of information; creation of information; destruction of information; Boltzmann relation. Citation: Çengel, Y.A. On Entropy, Information, and Conservation of Information. Entropy 2021, 23, 779. 1. Introduction. One needs to be cautious when dealing with information since it is defined differently
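The "constant factor" mentioned in the abstract can be made concrete with a short sketch. It assumes the standard textbook forms H = -Σ p log2 p (bits) and S = -k_B Σ p ln p (J/K); the function names and the probability list are illustrative only and do not come from the paper.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant in J/K (exact SI value)

def shannon_entropy_bits(probs):
    """Information entropy H = -sum(p * log2 p), in bits."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

def statistical_entropy_j_per_k(probs):
    """Statistical entropy S = -k_B * sum(p * ln p), in J/K."""
    return -K_B * sum(p * math.log(p) for p in probs if p > 0)

# Hypothetical distribution, chosen only for illustration.
probs = [0.5, 0.25, 0.125, 0.125]

h_bits = shannon_entropy_bits(probs)
s_j_per_k = statistical_entropy_j_per_k(probs)

# The two expressions differ only by the constant factor k_B * ln 2.
ratio = s_j_per_k / h_bits
print(h_bits, s_j_per_k, ratio)
```

For any distribution the ratio is k_B ln 2, roughly 9.57 × 10^-24 J/K per bit, which is why the two quantities look so alike on paper despite their different physical content.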

Practice Problems from Chapter 1-3 Problem 1 One Mole of a Monatomic Ideal Gas Goes Through a Quasistatic Three-Stage Cycle (1-2, 2-3, 3-1) Shown in the Figure

Practice Problems from Chapter 1-3

Problem 1. One mole of a monatomic ideal gas goes through a quasistatic three-stage cycle (1-2, 2-3, 3-1) shown in the figure (a V-T diagram). $T_1$ and $T_2$ are given. (a) (10) Calculate the work done by the gas. Is it positive or negative? (b) (20) Using two methods (Sackur-Tetrode eq. and dQ/T), calculate the entropy change for each stage and for the whole cycle, $\Delta S_{total}$. Did you get the expected result for $\Delta S_{total}$? Explain. (c) (5) What is the heat capacity (in units of R) for each stage?

Problem 1 (cont.) (a)

1 - 2: $V \propto T \rightarrow P = \text{const}$ (isobaric process): $\delta W_{12} = P(V_2 - V_1) = R(T_2 - T_1) > 0$

2 - 3: $V = \text{const}$ (isochoric process): $\delta W_{23} = 0$

3 - 1: $T = \text{const}$ (isothermal process): $\delta W_{31} = \int_{V_2}^{V_1} P\,dV = R T_1 \int_{V_2}^{V_1} \frac{dV}{V} = R T_1 \ln\frac{V_1}{V_2} = R T_1 \ln\frac{T_1}{T_2} < 0$

$\delta W_{total} = \delta W_{12} + \delta W_{31} = R(T_2 - T_1) + R T_1 \ln\frac{T_1}{T_2} = R T_1\left[\frac{T_2}{T_1} - 1 - \ln\frac{T_2}{T_1}\right] > 0$

Problem 1 (cont.) (b) Sackur-Tetrode equation:

$S(U, V, N) = R \ln\frac{V}{N} + \frac{3}{2} R \ln\frac{U}{N} + k_B \ln f(N, m)$

$\Delta S = R \ln\frac{V_f}{V_i} + \frac{3}{2} R \ln\frac{T_f}{T_i} = R\left(\ln\frac{V_f}{V_i} + \frac{3}{2}\ln\frac{T_f}{T_i}\right)$

1 - 2: $V \propto T \rightarrow P = \text{const}$ (isobaric process): $\Delta S_{12} = \frac{5}{2} R \ln\frac{T_2}{T_1}$

2 - 3: $V = \text{const}$ (isochoric process): $\Delta S_{23} = \frac{3}{2} R \ln\frac{T_1}{T_2} = -\frac{3}{2} R \ln\frac{T_2}{T_1}$

3 - 1: $T = \text{const}$ (isothermal process): $\Delta S_{31} = R \ln\frac{V_1}{V_2} = -R \ln\frac{T_2}{T_1}$

$\Delta S_{cycle} = \frac{5}{2} R \ln\frac{T_2}{T_1} - R \ln\frac{T_2}{T_1} - \frac{3}{2} R \ln\frac{T_2}{T_1} = 0$, as it should be for a quasistatic (reversible) cyclic process, because S is a state function.
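The stage-by-stage results of Problem 1 can be checked numerically. The sketch below is only an illustration: the temperatures 300 K and 600 K, the arbitrary starting volume, and the helper names are assumptions, and the entropy change is taken from the ideal-gas form ΔS = R[ln(V_f/V_i) + (3/2) ln(T_f/T_i)] for one mole.

```python
import math

R = 8.314  # gas constant in J/(mol K)

def delta_s(state_i, state_f):
    """Entropy change of one mole of monatomic ideal gas between (T, V) states."""
    (t_i, v_i), (t_f, v_f) = state_i, state_f
    return R * (math.log(v_f / v_i) + 1.5 * math.log(t_f / t_i))

# Hypothetical temperatures; V1 is arbitrary because only ratios matter.
T1, T2, V1 = 300.0, 600.0, 1.0
V2 = V1 * T2 / T1          # stage 1-2 is isobaric, so V is proportional to T

state1 = (T1, V1)
state2 = (T2, V2)
state3 = (T1, V2)          # stage 2-3 is isochoric, stage 3-1 is isothermal

dS = {
    "1-2": delta_s(state1, state2),
    "2-3": delta_s(state2, state3),
    "3-1": delta_s(state3, state1),
}
work = {
    "1-2": R * (T2 - T1),                # isobaric: P (V2 - V1) = R (T2 - T1)
    "2-3": 0.0,                          # isochoric: no work
    "3-1": R * T1 * math.log(V1 / V2),   # isothermal compression: negative
}

total_dS = sum(dS.values())
total_work = sum(work.values())
print(dS, total_dS, total_work)
```

With these inputs the three entropy changes cancel to within rounding error while the net work stays positive, matching the signs stated in the solution.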

Lecture 4: 09.16.05 Temperature, Heat, and Entropy

3.012 Fundamentals of Materials Science, Fall 2005. Lecture 4: 09.16.05 Temperature, heat, and entropy. Today: LAST TIME: State functions; Path dependent variables: heat and work. DEFINING TEMPERATURE: The zeroth law of thermodynamics; The absolute temperature scale. CONSEQUENCES OF THE RELATION BETWEEN TEMPERATURE, HEAT, AND ENTROPY: HEAT CAPACITY: The difference between heat and temperature; Defining heat capacity.

Entropy: Ideal Gas Processes

Chapter 19: The Kinetic Theory of Gases. Thermodynamics = macroscopic picture; gases: micro -> macro picture. One mole is the number of atoms in a 12 g sample of carbon-12. Avogadro's number: $N_A = 6.02 \times 10^{23}\ \mathrm{mol^{-1}}$. C(12): 6 protons, 6 neutrons and 6 electrons; 12 atomic units of mass, assuming $m_p = m_n$. Another way to do this is to know the mass of one molecule. The number of moles n is then given by $n = N/N_A$, with $N = N_A\, M_{sample}/m_{mole\text{-}mass}$ (n = number of moles, N = number of particles). Ideal Gases, Ideal Gas Law. It was found experimentally that if 1 mole of any gas is placed in containers that have the same volume V and are kept at the same temperature T, approximately all have the same pressure p. The small differences in pressure disappear if lower gas densities are used. Further experiments showed that all low-density gases obey the equation $pV = nRT$. Here $R = 8.31\ \mathrm{J/(mol \cdot K)}$ and is known as the "gas constant." The equation itself is known as the "ideal gas law." The constant R can be expressed as $R = kN_A$. Here k is called the Boltzmann constant and is equal to $1.38 \times 10^{-23}\ \mathrm{J/K}$. If we substitute R as well as $n = N/N_A$ in the ideal gas law we get the equivalent form $pV = NkT$. Here N is the number of molecules in the gas. The behavior of all real gases approaches that of an ideal gas at low enough densities. Low densities mean that the gas molecules are far enough apart that they do not interact with one another, but only with the walls of the gas container.
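The equivalence of the two forms of the ideal gas law can be illustrated with the rounded constants quoted in the excerpt. This is a sketch under assumed inputs: the one-mole sample, the temperature 273.15 K, and the volume 0.0224 m^3 are illustrative choices, not values from the source.

```python
R = 8.31        # gas constant in J/(mol K), as quoted in the text
K = 1.38e-23    # Boltzmann constant in J/K, as quoted in the text
N_A = 6.02e23   # Avogadro's number in 1/mol, as quoted in the text

def pressure_from_moles(n, temperature, volume):
    """Ideal gas law in molar form: p = n R T / V."""
    return n * R * temperature / volume

def pressure_from_molecules(n_molecules, temperature, volume):
    """Ideal gas law in molecular form: p = N k T / V."""
    return n_molecules * K * temperature / volume

# Hypothetical sample: one mole at 273.15 K in 0.0224 m^3.
n, T, V = 1.0, 273.15, 0.0224

p_moles = pressure_from_moles(n, T, V)
p_molecules = pressure_from_molecules(n * N_A, T, V)
print(p_moles, p_molecules)
```

Both calls give a pressure close to one atmosphere; the small residual difference comes only from the three-digit rounding of R, k, and N_A.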

Lecture 6: Entropy

Matthew Schwartz, Statistical Mechanics, Spring 2019. Lecture 6: Entropy. 1 Introduction. In this lecture, we discuss many ways to think about entropy. The most important and most famous property of entropy is that it never decreases: $\Delta S_{tot} \geq 0$ (1). Here, $\Delta S_{tot}$ means the change in entropy of a system plus the change in entropy of the surroundings. This is the second law of thermodynamics that we met in the previous lecture. There's a great quote from Sir Arthur Eddington from 1927 summarizing the importance of the second law: If someone points out to you that your pet theory of the universe is in disagreement with Maxwell's equations, then so much the worse for Maxwell's equations. If it is found to be contradicted by observation, well, these experimentalists do bungle things sometimes. But if your theory is found to be against the second law of thermodynamics I can give you no hope; there is nothing for it but to collapse in deepest humiliation. Another possibly relevant quote, from the introduction to the statistical mechanics book by David Goodstein: Ludwig Boltzmann, who spent much of his life studying statistical mechanics, died in 1906, by his own hand. Paul Ehrenfest, carrying on the work, died similarly in 1933. Now it is our turn to study statistical mechanics. There are many ways to define entropy. All of them are equivalent, although it can be hard to see. In this lecture we will compare and contrast different definitions, building up intuition for how to think about entropy in different contexts. The original definition of entropy, due to Clausius, was thermodynamic.

Thermodynamic Processes: the Limits of Possible

Thermodynamic Processes: The Limits of Possible. Thermodynamics puts severe restrictions on what processes involving a change of the thermodynamic state of a system (U, V, N, …) are possible. In a quasi-static process the system moves from one equilibrium state to another via a series of other equilibrium states. All quasi-static processes fall into two main classes: reversible and irreversible processes. Processes with decreasing total entropy of a thermodynamic system and its environment are prohibited by Postulate II. Graphic representation of a single thermodynamic system (phase space of extensive coordinates): the fundamental relation $S^{(1)} = S(U^{(1)}, X^{(1)})$ of a thermodynamic system defines a hypersurface in the coordinate space, where $S^{(1)}$ is the entropy of system 1, $U^{(1)}$ is the energy of system 1, and $X^{(1)} = V^{(1)}, N_1^{(1)}, \ldots, N_m^{(1)}$ are the coordinates of system 1. Graphic representation of a composite thermodynamic system (phase space of extensive coordinates): the fundamental relation of a composite thermodynamic system (system 1 and system 2), $S = S^{(1)}(U^{(1)}, X^{(1)}) + S^{(2)}(U - U^{(1)}, X^{(2)})$, defines a hypersurface in the coordinate space of the composite system, where $X^{(1,2)} = V, N_1, \ldots, N_m$ are the coordinates of the subsystems (1 and 2), $S^{(1+2)}$ is the entropy of the composite system, and U is the energy of the composite system. Irreversible and reversible processes: If we change constraints on some of the composite system coordinates, (e.g.

Energy Minimum Principle

ESCI 341, Atmospheric Thermodynamics. Lesson 12: The Energy Minimum Principle. References: Thermodynamics and an Introduction to Thermostatistics, Callen; Physical Chemistry, Levine. THE ENTROPY MAXIMUM PRINCIPLE. We've seen that a reversible process achieves maximum thermodynamic efficiency. Imagine that I am adding heat to a system ($dQ_{in}$), converting some of it to work ($dW$), and discarding the remaining heat ($dQ_{out}$). We've already demonstrated that $dQ_{out}$ cannot be zero (this would violate the second law). We also have shown that $dQ_{out}$ will be a minimum for a reversible process, since a reversible process is the most efficient. This means that the net heat for the system ($dQ = dQ_{in} - dQ_{out}$) will be a maximum for a reversible process, $dQ_{rev} \geq dQ_{irrev}$. The change in entropy of the system, dS, is defined in terms of reversible heat as $dQ_{rev}/T$. We can thus write the following inequality: $dS = \frac{dQ_{rev}}{T} \geq \frac{dQ_{irrev}}{T}$ (1). Notice that for Eq. (1), if the system is reversible and adiabatic, we get $dS = 0 \geq \frac{dQ_{irrev}}{T}$, or $dQ_{irrev} \leq 0$, which illustrates the following: If two states can be connected by a reversible adiabatic process, then any irreversible process connecting the two states must involve the removal of heat from the system. Eq. (1) can be written as $dS \geq \frac{dQ}{T}$ (2). The equality will hold if all processes in the system are reversible. The inequality will hold if there are irreversible processes in the system. For an isolated system ($dQ = 0$) the inequality becomes $dS \geq 0$ (isolated system), which is just a restatement of the second law of thermodynamics.

The Carnot Cycle, Reversibility and Entropy

Entropy, Article. The Carnot Cycle, Reversibility and Entropy. David Sands, Department of Physics and Mathematics, University of Hull, Hull HU6 7RX, UK; [email protected]. Abstract: The Carnot cycle and the attendant notions of reversibility and entropy are examined. It is shown how the modern view of these concepts still corresponds to the ideas Clausius laid down in the nineteenth century. As such, they reflect the outmoded idea, current at the time, that heat is motion. It is shown how this view of heat led Clausius to develop the entropy of a body based on the work that could be performed in a reversible process rather than the work that is actually performed in an irreversible process. In consequence, Clausius built into entropy a conflict with energy conservation, which is concerned with actual changes in energy. In this paper, reversibility and irreversibility are investigated by means of a macroscopic formulation of internal mechanisms of damping based on rate equations for the distribution of energy within a gas. It is shown that work processes involving a step change in external pressure, however small, are intrinsically irreversible. However, under idealised conditions of zero damping the gas inside a piston expands and traces out a trajectory through the space of equilibrium states. Therefore, the entropy change due to heat flow from the reservoir matches the entropy change of the equilibrium states. This trajectory can be traced out in reverse as the piston reverses direction, but if the external conditions are adjusted appropriately, the gas can be made to trace out a Carnot cycle in P-V space.

Chapter 20: Entropy and the Second Law of Thermodynamics the Conservation of Energy Law Allows Energy to Flow Bidirectionally Between Its Various Forms

Chapter 20: Entropy and the Second Law of Thermodynamics. The conservation of energy law allows energy to flow bidirectionally between its various forms. For example, in a pendulum, energy continually goes to and from kinetic energy and potential energy. Entropy is different: there is no conservation law. The entropy change ΔS associated with an irreversible process in a closed system is always greater than or equal to zero. Ice in water: Consider putting some ice into a glass of water. Conservation of energy would allow energy to flow: 1. only from ice into water; 2. only from water into ice; 3. both ways. Consider putting some ice into a glass of water. Conservation of energy would allow: ice getting colder and water getting hotter; ice getting warmer and water getting cooler; both ice and water staying at their initial temperatures. Only one of these scenarios happens, so something must be controlling the direction of energy flow. That direction is set by a quantity called entropy. But what about the ice? The ice is a very ordered state with all of the molecules in specific locations in the crystal (W small). Liquid water has many states for the water molecules (W large). Nature will maximize the number of energetically allowed microstates. Thus, heat (energy) always flows from the water to the ice. Two equivalent ways to define the entropy in a system: (1) in terms of the system's temperature and the energy the system gains or loses as heat, or (2) by counting the ways in which the components of the system can be arranged.

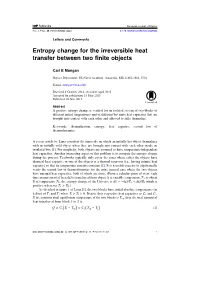

Entropy Change for the Irreversible Heat Transfer Between Two Finite

European Journal of Physics, Eur. J. Phys. 36 (2015) 048004 (3pp), doi:10.1088/0143-0807/36/4/048004. Letters and Comments. Entropy change for the irreversible heat transfer between two finite objects. Carl E Mungan, Physics Department, US Naval Academy, Annapolis, MD 21402-1363, USA. E-mail: [email protected]. Received 4 October 2014, revised 6 April 2015. Accepted for publication 15 May 2015. Published 10 June 2015. Abstract: A positive entropy change is verified for an isolated system of two blocks of different initial temperatures and of different but finite heat capacities that are brought into contact with each other and allowed to fully thermalize. Keywords: thermalization, entropy, heat capacity, second law of thermodynamics. A recent article by Lima considers the timescale on which an initially hot object thermalizes with an initially cold object when they are brought into contact with each other inside an insulated box [1]. For simplicity, both objects are assumed to have temperature-independent heat capacities. Another interesting aspect of this problem is to compute the entropy change during the process. Textbooks typically only cover the cases where either the objects have identical heat capacity, or one of the objects is a thermal reservoir (i.e., having infinite heat capacity) so that its temperature remains constant [2]. It is a useful exercise to algebraically verify the second law of thermodynamics for the more general case where the two objects have unequal heat capacities, both of which are finite. (From a calculus point of view, each time an increment of heat dQ is transferred from object A at variable temperature $T_A$ to object B at temperature $T_B$, the entropy change of the Universe is $dS = -dQ/T_A + dQ/T_B$, which is positive whenever $T_A > T_B$.) As sketched in figure 1 of Lima [1], the two blocks have initial absolute temperatures (in kelvin) of $T_1$ and $T_2$ where $T_1 > T_2 > 0$.
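The claim in the abstract can be illustrated numerically. The sketch below uses the standard textbook result for two blocks with temperature-independent heat capacities: energy conservation fixes the final temperature, and integrating dS = C dT/T for each block gives the total entropy change. The symbols C1 and C2, the function names, and the numerical values are assumptions for illustration and are not taken from the letter.

```python
import math

def final_temperature(c1, t1, c2, t2):
    """Common final temperature from energy conservation (constant heat capacities)."""
    return (c1 * t1 + c2 * t2) / (c1 + c2)

def entropy_change(c1, t1, c2, t2):
    """Total entropy change: integral of C dT / T for each block, in J/K."""
    t_f = final_temperature(c1, t1, c2, t2)
    return c1 * math.log(t_f / t1) + c2 * math.log(t_f / t2)

# Hypothetical blocks: heat capacities in J/K, temperatures in kelvin.
C1, T1 = 100.0, 400.0   # initially hot block
C2, T2 = 300.0, 300.0   # initially cold block

t_final = final_temperature(C1, T1, C2, T2)
ds_total = entropy_change(C1, T1, C2, T2)
print(t_final, ds_total)
```

The hot block loses entropy and the cold block gains more than that, so the total is positive; it vanishes only when the two initial temperatures are equal.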

Section 15-7: Entropy and the Second Law of Thermodynamics

Answer to Essential Question 15.6: We can immediately fill in the following values: $Q_{1 \to 2} = 0$, because there is no heat in an adiabatic process; the missing entry in the 2 → 3 row, so the 2 → 3 process satisfies the first law; and $\Delta E_{int} = 0$ for the entire cycle, because that is always true (the system returns to its initial state, with no change in temperature). With those three values in the table it is straightforward to fill in the remaining values by (a) making sure that each row satisfies the first law ($Q = \Delta E_{int} + W$) and (b) that in every column the sum of the values for the individual processes is equal to the value for the entire cycle. The completed table is shown in Table 15.4.

| Process | Q (J) | W (J) | ΔE_int (J) |
|---|---|---|---|
| 1 → 2 | 0 | +300 | -300 |
| 2 → 3 | +800 | 0 | +800 |
| 3 → 1 | -1000 | -500 | -500 |
| Entire Cycle | -200 | -200 | 0 |

Table 15.4: The completed table.

15-7 Entropy and the Second Law of Thermodynamics. A system of ideal gas in a particular state has an entropy, just as it has a pressure, a volume, and a temperature. Unlike pressure, volume, and temperature, which are easy to determine, the entropy of a system can be difficult to find. On the other hand, changes in entropy can be quite straightforward to calculate. Entropy: Entropy is in some sense a measure of disorder. The symbol for entropy is S, and the units are J/K. Change in entropy: In certain cases the change in entropy, $\Delta S$, is easy to determine. An example is in an isothermal process in which an amount of heat Q is transferred to a system: $\Delta S = Q/T$.
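The two consistency rules used to complete Table 15.4 can be checked mechanically. This sketch assumes the third column is the change in internal energy and that the first law is written as Q = ΔE_int + W, which is the sign convention the table values obey; the variable names are illustrative.

```python
# Each row holds (Q, W, delta_E_int) in joules, copied from Table 15.4.
processes = {
    "1->2": (0, 300, -300),
    "2->3": (800, 0, 800),
    "3->1": (-1000, -500, -500),
}
entire_cycle = (-200, -200, 0)

# Rule (a): every row must satisfy the first law, Q = delta_E_int + W.
rows_ok = all(q == d_e + w for q, w, d_e in processes.values())

# Rule (b): each column must sum to the value for the entire cycle.
column_sums = tuple(sum(row[i] for row in processes.values()) for i in range(3))
columns_ok = column_sums == entire_cycle

print(rows_ok, column_sums, columns_ok)
```

Both checks pass for the completed table, and the cycle row itself also satisfies the first law, since the internal energy change over a full cycle is zero.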

Properties of Entropy

Properties of Entropy. Due to its additivity, entropy is a homogeneous function of the extensive coordinates of the system: $S(\lambda U, \lambda V, \lambda N_1, \ldots, \lambda N_m) = \lambda S(U, V, N_1, \ldots, N_m)$. This means we can write the entropy as a function of the total number of particles and of intensive coordinates: mole fractions and molar volume. If $\lambda = 1/N$: $N S(u, v, n_1, \ldots, n_m) = S(U, V, N_1, \ldots, N_m)$, with $\sum_i n_i = 1$. Intensive Thermodynamic Coordinates. The fundamental relation in the energy representation gives the internal energy of the system as a function of all extensive coordinates (including the entropy): $U = U(S, V, N_1, \ldots, N_m)$. Since the internal energy is a function of the state of the system, we can write its first differential as: $dU = (\partial U/\partial S)_{V,N_1,\ldots,N_m}\, dS + (\partial U/\partial V)_{S,N_1,\ldots,N_m}\, dV + (\partial U/\partial N_1)_{S,V,N_2,\ldots,N_m}\, dN_1 + \ldots$ The partial derivatives in this differential are functions of the same extensive parameters as the internal energy. New Intensive Thermodynamic Variables. It is convenient to give names to these partial derivatives: $(\partial U/\partial S)_{V,N_1,\ldots,N_m} = T$, temperature; $-(\partial U/\partial V)_{S,N_1,\ldots,N_m} = P$, pressure; $(\partial U/\partial N_1)_{S,V,N_2,\ldots,N_m} = \mu_1$, electrochemical potential. We will show that the properties of the above defined variables coincide with our intuitive understanding of temperature, pressure and chemical potential: $dU = T\, dS - P\, dV + \mu_1\, dN_1 + \ldots$ and $dS = (1/T)\, dU + (P/T)\, dV - (\mu_1/T)\, dN_1 + \ldots$ Relation between Entropy and Heat. If the number of particles is constrained in a given process ($dN = 0$): $dU = T\, dS - P\, dV$. On the other hand, we have conservation of energy: $dU = \delta W + \delta Q$. Since work is $\delta W = -P\, dV$, we find the connection between heat and entropy: $\delta Q = T\, dS$. Flux of heat into the system increases its entropy.
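The homogeneity property and the derivative definitions above can be tried out on a concrete fundamental relation. The sketch below assumes a monatomic ideal-gas form S = N k_B [ln(V/N) + (3/2) ln(U/N) + c], where the additive constant c is arbitrary and set to zero; this choice of S, the helper names, and the numerical state are illustrative assumptions, not part of the excerpt. It reads 1/T and P/T off the entropy representation dS = (1/T) dU + (P/T) dV by central finite differences.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant in J/K

def entropy(u, v, n, c=0.0):
    """Assumed ideal-gas-like relation S = N k_B [ln(V/N) + 1.5 ln(U/N) + c]."""
    return n * K_B * (math.log(v / n) + 1.5 * math.log(u / n) + c)

def central_difference(f, x, rel_step=1e-3):
    """Numerical derivative of f at x using a symmetric step."""
    h = rel_step * x
    return (f(x + h) - f(x - h)) / (2.0 * h)

# Hypothetical state: energy in J, volume in m^3, number of particles.
U, V, N = 3740.0, 0.0248, 6.022e23

# Homogeneity: S(lam U, lam V, lam N) should equal lam * S(U, V, N).
lam = 2.5
homogeneity_gap = entropy(lam * U, lam * V, lam * N) - lam * entropy(U, V, N)

# Entropy representation: dS/dU = 1/T and dS/dV = P/T at fixed N.
inv_T = central_difference(lambda u: entropy(u, V, N), U)
T = 1.0 / inv_T
P = T * central_difference(lambda v: entropy(U, v, N), V)

print(homogeneity_gap, T, P)
```

For this assumed relation the derivatives reproduce U = (3/2) N k_B T and P V = N k_B T, which is the sense in which the formally defined T and P coincide with the familiar temperature and pressure.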