Ucalgary 2016 Lu Kokyee.Pdf

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Global Network Investment Competition Fudan University Supreme Pole ‐ Allwinner Technology

Global Network Investment Competition Fudan University Supreme Pole ‐ Allwinner Technology Date: 31.10.2017 Fan Jiang Jianbin Gu Qianrong Lu Shijie Dong Zheng Xu Chunhua Xu Allwinner Technology ‐‐ Sail Again We initiate coverage on Allwinner Technology with a strong BUY rating, target price is derived by DCF at CNY ¥ 35.91 , indicating Price CNY ¥ 27.60 30.1% upside potential. Price Target CNY ¥ 35.91 Upside Potential 30.1% Target Period 1 Year We recommend based on: 52 week Low CNY ¥ 23.4 Broad prospects of the AI. 52 week High CNY ¥ 54.02 Supporting of the industry policy. Average Volume CNY ¥ 190.28 M Allwinner has finished its transition. Market Cap CNY ¥ 96.08 B The rise of the various new P/E 64 products will put the margins back Price Performance in the black. 60 The current valuation, 64.x P/E, is 50 lower than its competitor such as 40 Ingenic which is trading at more 30 than 100 and Nationz which is 20 trading at 76.x P/E. 10 0 Overview for Allwinner Allwinner Technology, founded in 2007, is a leading fabless design company dedicated to smart application processor SoCs and smart analog ICs. Its product line includes multi‐core application processors for smart devices and smart power management ICs used worldwide. These two categories of products are applied to various types of intelligent terminals into 3 major business lines: Consumer Electronics: Robot, Smart Hardware Open Platform, Tablets, Video Theater Device, E‐Reader, Video Story Machine, Action Camera, VR Home Entertainment: OTT Box, Karaoke Machine, IPC monitoring Connected Automotive Applications: Dash Cams, Smart Rear‐view Mirror, In Car Entertainment THE PROSPECT OF AI AI(Artificial Intelligence) has a wider range of global concern and is entering its third golden period of development. -

A13 Datasheet V1.01

Allwinner Technology CO., Ltd. A13 A13 Datasheet V1.12 2012.3.29 A13 Datasheet v1.12 Copyright © 2011-2012 Allwinner Technology. All Rights Reserved. 2012-03-29 Allwinner Technology CO., Ltd. A13 Revision History Version Date Section/ Page Changes V1.00 2011.12.9 Initial version GPIOE[0]/[1]/[2] and GPIOG[0]/[1]/[2] V1.10 2011.12.30 Pin Description are changed for INPUT only. V1.11 2012.1.10 Pin Dimension Pin Dimension V1.12 2012.3.29 Audio Codec Revise some description A13 Datasheet v1.12 Copyright © 2011-2012 Allwinner Technology. All Rights Reserved. 1 2012-03-29 Allwinner Technology CO., Ltd. A13 Table of Contents Revision History ...................................................................................................................... 1 1. Introduction ..................................................................................................................... 5 2. Feature ............................................................................................................................. 5 3. Functional Block Diagram ................................................................................................ 9 4. Pin Assignment ............................................................................................................... 10 4.1. Pin Map ................................................................................................................... 10 4.2. Pin Dimension ........................................................................................................... 11 -

Taiwan Semiconductor Manufacturing

08 January 2015 Asia Pacific/Taiwan Equity Research Semiconductor Devices Taiwan Semiconductor Manufacturing (2330.TW / 2330 TT) Rating (from Outperform) NEUTRAL Price (08 Jan 15, NT$) 138.00 DOWNGRADE RATING Target price (NT$) 145.00¹ Upside/downside (%) 5.1 Mkt cap (NT$ bn) 3.58 (US$ 111,910 mn) Competition heats up in 2015 Enterprise value (NT$ mn) 3,463,889 ■ Competitive landscape a key focus for 2015. We downgrade TSMC to Number of shares (mn) 25,929.87 Free float (%) 87.3 NEUTRAL from Outperform with TP unchanged at NT$145, toning down our 52-week price range 141.5–100.5 positive view of the past five years. We see increasing customer ADTO - 6M (US$ mn) 148.6 concentration risks where further share gains are limited and may slip, *Stock ratings are relative to the coverage universe in each analyst's or each team's respective sector. moderating growth from mobile; rising competitors finally lifting their yields; ¹Target price is for 12 months. and valuation back in line with Taiwan tech and its historical average due to Research Analysts slowing YoY/sequential momentum after the likely strong 4Q14 results report. Randy Abrams, CFA ■ Customers more concentrated but now able to diversify. Qualcomm, 886 2 2715 6366 [email protected] Apple and Mediatek have supplied half of TSMC's growth in the past four Nickie Yue years and to boost TSMC’s growth 700 bp to 16%. Further share is capped 886 2 2715 6364 or might even slip as Apple brings Samsung/GF back in for iPhone, [email protected] Qualcomm relies more on Samsung/GF/UMC/SMIC, and further Mediatek gains are capped with TSMC's share up from 20% to 70% since 2007. -

Allwinner V3 Is a High Performance FHD Camera Application Solution That Features

Smart Dual-Channel FHD Camera Solution Allwinner V3 is a high performance FHD camera application solution that features: Robust Video Engine, H.264 1080p@60fps or 2-CH 1080p@30fps encoding Allwinner V3 comes with a high-performance and low bit-rate video engine that is capable of single-channel H.264 1080p@60fps encoding, or dual-channel 1080p@30fps encoding, or front & rear 1080p@30fps encoding. It is also the world's first camera solution that supports recompilation of FHD MJPEG into H.264 format. An advanced ISP is integrated to provide higher image quality. ARM Cortex-A7 CPU, Advanced ADAS Algorithm Allwinner V3 packs an ARM Cortex-A7 core that runs up to 1.2GHz to deliver higher computing capability, enabling support for advanced ADAS (Advance Driver Assistant System) algorithm to provide more secure driving experience, including FCWS (Forward Collision Warning System), LDWS (Lane Departure Warning System) ,etc. WiFi or 3G/4G Supported, Android/iOS APKs WiFi or 3G/4G function can be available in V3 to enable interaction with mobile phones after installing corresponding Android/iOS applications. Linux OS or Allwinner's Camdroid OS Allwinner V3 runs on the Linux operating system or Allwinner's Camdroid OS, an Android-lite operation system that capable of running on Nor Flash. Lower Power Consumption Allwinner V3 runs is highly power efficient due to its leading fabrication process and optimized processor architecture design. High System Integration Allwinner V3 integrates a wide range of connectivity and interfaces, including MIPI & parallel CSI controllers, RGB/ LVDS LCD controller, audio codec, EMAC + PHY, etc. -

A20 Brief 2013-03-06.Pdf

Overview Allwinner Technology, a leading mobile application processor solution provider, launches a dual-core mobile application processor A20 for tablet, smart TV as well as Mobile Internet Device (MID) markets. The A20 processor is based on dual-core ARM Cortex-A7 CPU architecture, the most energy efficient application processor from ARM. It also integrates the powerful Mali400MP2 GPU, and supports 2160p video decoding and H.264 HP 1080p video encoding. Dual-Core Mobile Application Processor More importantly, A20 processor is pin-to-pin compatible with its predecessor A10, which greatly simplifies the product design process and makes the upgrade of existing devices much easier. Lastly, A20 processor delivers an extensive range of interfaces and connectivity, including 4-CH CVBS in, 4-CH CVBS out, VGA, LVDS/RGB LCD, HDMI with HDCP, SATA, USB as well as GMAC, etc. Framework ©2013 Allwinner Technology www.allwinnertech.com A20 Brief Feature CPU • ARM Cortex-A7 Dual-Core • ARM Mali400MP2 GPU • Comply with OpenGL ES 2.0/1.1 • HD H.264 2160p video decoding • Multi-format FHD video decoding, including Mpeg1/2, Mpeg4 SP/ASP GMC, H.263, H.264, VP6/8, AVS jizun, Jpeg/Mjpeg, etc. Video • BD Directory, BD ISO and BD m2ts video decoding • H.264 High Profile 1080p@30fps or 720p@60fps encoding • 3840x1080@30fps 3D decoding, BD/SBS/TAB/FP supported • Comply with RTSP, HTTP,HLS,RTMP,MMS streaming media protocols • Support multi-channel HD display • Integrated HDMI 1.4 transmitter with HDCP support Display • CPU/RGB/LVDS LCD interface • Support CVBS/YPbPr/VGA -

China Smart Devices

China smart devices EQUITY: TECHNOLOGY The next mega trend for 2014 and beyond? Global Markets Research LTE, Smart Homes, 3D Vision, EV, IT nationalism, 21 May 2014 Smart TVs, 4G smartphones and more… Anchor themes Despite the macro slowdown and Industry view: hardware + service to stimulate industry upgrade saturation in major technology We believe “hardware + service” has become an important business model to product lines, we think innovation drive the development of China’s IT hardware sector. In the past two years, and policy supports are the popularity of WeChat and mobile gaming has accelerated adoption of 3G incubating a new round of smartphones in China. Since 2013, IPTV services such as LeTV have started growth, potentially benefiting to reshape China’s TV industry. Looking ahead, we believe: players well positioned in various Mobile video services will stimulate growth of 4G smartphones TMT sub-sectors. TV game deregulation will become a new driver for Smart TVs in China Nomura vs consensus Air pollution may boost demand for EV and green home appliances We analyse China's TMT industry E-commerce will create new opportunities in O2O logistics from the perspective of a broad scope throughout the vertical & Smart home hardware is ready to incubate service and reshape the industry horizontal axis, and across sub- Concerns on IT security will benefit players in nationally strategic areas like sectors. semiconductor and enterprise equipment Research analysts 3D vision may help machines to better understand the real world and innovate the way people use e-commerce and auto driving. China Technology Regulatory policies still matter Leping Huang, PhD - NIHK As well as the above, government policy will play an important role in guiding [email protected] the development of China technology and telecoms. -

Company Vendor ID (Decimal Format) (AVL) Ditest Fahrzeugdiagnose Gmbh 4621 @Pos.Com 3765 0XF8 Limited 10737 1MORE INC

Vendor ID Company (Decimal Format) (AVL) DiTEST Fahrzeugdiagnose GmbH 4621 @pos.com 3765 0XF8 Limited 10737 1MORE INC. 12048 360fly, Inc. 11161 3C TEK CORP. 9397 3D Imaging & Simulations Corp. (3DISC) 11190 3D Systems Corporation 10632 3DRUDDER 11770 3eYamaichi Electronics Co., Ltd. 8709 3M Cogent, Inc. 7717 3M Scott 8463 3T B.V. 11721 4iiii Innovations Inc. 10009 4Links Limited 10728 4MOD Technology 10244 64seconds, Inc. 12215 77 Elektronika Kft. 11175 89 North, Inc. 12070 Shenzhen 8Bitdo Tech Co., Ltd. 11720 90meter Solutions, Inc. 12086 A‐FOUR TECH CO., LTD. 2522 A‐One Co., Ltd. 10116 A‐Tec Subsystem, Inc. 2164 A‐VEKT K.K. 11459 A. Eberle GmbH & Co. KG 6910 a.tron3d GmbH 9965 A&T Corporation 11849 Aaronia AG 12146 abatec group AG 10371 ABB India Limited 11250 ABILITY ENTERPRISE CO., LTD. 5145 Abionic SA 12412 AbleNet Inc. 8262 Ableton AG 10626 ABOV Semiconductor Co., Ltd. 6697 Absolute USA 10972 AcBel Polytech Inc. 12335 Access Network Technology Limited 10568 ACCUCOMM, INC. 10219 Accumetrics Associates, Inc. 10392 Accusys, Inc. 5055 Ace Karaoke Corp. 8799 ACELLA 8758 Acer, Inc. 1282 Aces Electronics Co., Ltd. 7347 Aclima Inc. 10273 ACON, Advanced‐Connectek, Inc. 1314 Acoustic Arc Technology Holding Limited 12353 ACR Braendli & Voegeli AG 11152 Acromag Inc. 9855 Acroname Inc. 9471 Action Industries (M) SDN BHD 11715 Action Star Technology Co., Ltd. 2101 Actions Microelectronics Co., Ltd. 7649 Actions Semiconductor Co., Ltd. 4310 Active Mind Technology 10505 Qorvo, Inc 11744 Activision 5168 Acute Technology Inc. 10876 Adam Tech 5437 Adapt‐IP Company 10990 Adaptertek Technology Co., Ltd. 11329 ADATA Technology Co., Ltd. -

Bachelorarbeit

Fachbereich 3 - Informatik und Mathematik Bachelorarbeit Studiengang Informatik Eine Plattform für Bare-Metal-Programmierung und die Entwicklung eines Hard-Realtime-Operating-Systems Christian Manal [email protected] Matrikelnummer 3005022 Gutachter Prof. Dr. Jan Peleska [email protected] Prof. Dr. Rolf Drechsler [email protected] 7. Januar 2019 Eidesstattliche Erklärung Hiermit erkläre ich, dass ich die vorliegende Arbeit selbstständig angefertigt, nicht anderweitig zu Prüfungszwecken vorgelegt und keine anderen als die angegebenen Hilfsmittel verwendet habe. Sämtliche wissentlich verwendete Textausschnitte, Zitate oder Inhalte anderer Verfasser wurden ausdrücklich als solche gekennzeichnet. Bremen, 7. Januar 2019 Christian Manal i Zusammenfassung In dieser Arbeit geht es um die Auswahl einer Plattform, die sich für die Bare-Metal- Programmierung im Kontext einer Hochschullehrveranstaltung eignet. Insbesondere soll es möglich sein, zu Lehrzwecken ein minimales Betriebssystem zu entwickeln, das ggf. auch Hard-Realtime-Eigenschaften hat. Zu diesem Zweck wurden verschie- dene Single-Board-Computer (SBC) bzw. System-on-a-Chip (SoC) nach ausgewählten Kriterien bewertet und auf ihre Eignung untersucht. ii Inhaltsverzeichnis 1. Einleitung 1 2. Grundlagen 2 2.1. Bare-Metal-Programmierung ....................... 2 2.2. Hard-Realtime-Operating-System ..................... 4 3. Verwandte Arbeiten 6 3.1. Betriebssysteme in der Hochschullehre .................. 6 3.2. Hard-Realtime-Betriebssysteme in der Lehre ............... 7 4. Auswahlprozess 9 4.1. Kriterien ................................... 9 4.2. Evaluierte Plattformen ........................... 17 4.3. Ergebnis ................................... 33 5. Realtime-Eigenschaften 35 5.1. Versuchsaufbau ............................... 35 5.2. Auswertung der Ergebnisse ........................ 39 5.3. Versuchsfazit ................................ 44 6. Fazit und Ausblick 46 Anhänge 48 A. Abbildungsverzeichnis 49 B. Tabellenverzeichnis 50 C. Glossar 51 D. Abkürzungen und Akronyme 53 E. -

Allwinner A40i Breif.Pdf

Quad-Core Industrial Control Processor The A40i processor represents Allwinner’s latest achievement in the intelligent industrial control sector. The processor is ideal for applications that require 3D graphics, advanced video processing, rich user interfaces, high quality, low power consumption and a high level of system integration. The A40i is mainly applied to industrial control products based on visual interaction. Content can be displayed on either 4-lane MIPI DSI displays, an RGB panel or a Dual-channel LVDS panel. CVBS-out and HDMI V1.4 is also supported. Supports dual CMOS sensor parallel interfaces and 4-channel CVBS-in , which is capable of executing multi-channel video recording. A40i meets the standard of industrial grade, and the operating temperature reaches the standard of AEC-Q100 grade3. Integrated audio codec with 24bit/192KHz DAC playback, and supports I2S/PCM interface for connecting to an external audio codec.I2S/PCM interface includes eight channels of TDM with sampling precision up to 32bit/192KHz. To reduce the total system cost, the A40i has an extensive range of support for hardware peripherals allowing for an array of configurations, such as 3*USB2.0, GMAC, EMAC, SATA, 2*TSC, PS2, RTP, 4*SDHC etc. Supports Linux3.10, Android 7.1 and the above system. CPU Architecture GPU Architecture • Quad-core ARM Cortex-A7 MPCore Processor • 3D • ARMv7 ISA standard ARM instruction set ‒ Mali400 MP2 GPU • Thumb-2 Technology ‒ Support OpenGL ES 2.0 / OpenVG 1.1 standard • Jazeller RCT • 2D • NEON Advanced SIMD ‒ Support BLT -

Drivers Allwinner A31

Drivers Allwinner A31 1 / 5 Drivers Allwinner A31 2 / 5 3 / 5 [2]In 2012 and 2013, Allwinner was the number one supplier in terms of unit shipments of application processors for Android tablets worldwide.. They have also been adopted in free hardware projects like the Cubieboard development board.. Hi everyone, This patchset adds support for the USB controllers found in the Allwinner A31. 1. drivers allwinner 2. drivers allwinner a23 3. drivers allwinner h3 [3][4][5]According to DigiTimes, in Q4 2013 Allwinner lost its number one position in terms of unit shipments to the Chinese market to Rockchip.. [6][7] For Q2 2014, Allwinner was reported by DigiTimes to be the third largest supplier to the Chinese market after Rockchip and MediaTek.. The company is headquartered in Zhuhai, Guangdong, China It has a sales and technical support office in Shenzhen, Guangdong, and logistics operations in Hong Kong.. A2x and A3x family[edit]In December 2012, Allwinner announced the availability of two ARM Cortex-A7 MPCore powered products, the dual-core Allwinner A20 and quad-core Allwinner A31. drivers allwinner drivers allwinner, drivers allwinner a13, drivers allwinner a23, drivers allwinner a13 download, drivers allwinner h3, drivers allwinner a20, drivers allwinner a64, allwinner usb drivers windows 7, allwinner drivers download, allwinner a10 drivers windows 7, driver allwinner, driver allwinner a33, driver allwinner a13, driver allwinner a23, driver allwinner windows 10 Download lp conversion kits for generators All drivers available for download have been scanned by antivirus program I have an Allwinner A31-based Onda v975 tablet and am looking for assistance with installing USB drivers. -

Allwinner Technology CO., Ltd. A13 Manycore Lite Soc for Android 4.0

Allwinner Technology CO., Ltd. A13 A13 ManyCore Lite SoC for Android 4.0 PAD Overview Allwinner Tech has expanded its processor lineup to brains of Android 4.0.3, A13 makes multitasking include a new ARM Cortex-A8 chip A13 which is smoother, apps loading more quickly, and anything even more competitive for Android tablets with you touch responds instantly. What’s more important, higher performance (ManyCore Lite), lower power A13 is available in eLQFP176 package with Audio consumption, and lower total system cost. As the Codec, and 2 Points R-TP integrated. Feature CPU/GPU DPU ARM Cortex-A8 Core LCD Interfaces: CPU, RGB 32KB D-Cache/ 32KB I-Cache Memory 256KB L2 Cache DDR2/DDR3: Up to 533MHz Mali-400 3-D Engine 16 bits Data Bus VPU Memory capacity up to 512MB HD Video Decoding MLC/TLC/SLC/EF-NAND 1920*1080@30fps 2 flash chips, ECC 64-bit Support H.264、H.263、VC1、Mpeg1/2/4、 Support NAND of 5xnm, 4xnm, 3xnm, Divx 3/4/5/6、Xvid、VP6/8、AVS etc 2xnm… HD Video Encoding Support NADN of Samsung, Toshiba, Support encoding in H.264 format Hynix… up to 1920*1080@30fps Copyright © 2011 Allwinner Technology. All Rights Reserved. Allwinner Technology CO., Ltd. A13 Peripherals SD Card USB2.0 OTG, USB2.0 HOST USB (OHCI/EHCI) Powerful Acceleration SD Card V.3.0, eMMC V.4.2 Graphic (3D, Mali400 MP) SPI, TWI and UART VPU(1080P) integrated Audio Codec APU CSI E-Reader R-TP Controller Ultra-low System Power Consumption 4-wire resistive TP interface 15~20% lower than competitors 2 points and gesture detection Smart Backlight:auto adjust backlight Boot Devices acc. -

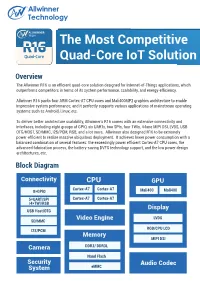

The Most Competitive Quad-Core Iot Solution

The Most Competitive Quad-Core IoT Solution Overview The Allwinner R16 is an efficient quad-core solution designed for Internet-of-Things applications, which outperforms competitors in terms of its system performance, scalability, and energy-efficiency. Allwinner R16 packs four ARM Cortex-A7 CPU cores and Mali400MP2 graphics architecture to enable impressive system performance, and it perfectly supports various applications of mainstream operating systems such as Android, Linux, etc. To deliver better architecture scalability, Allwinner’s R16 comes with an extensive connectivity and interfaces, including eight groups of GPIO, six UARTs, two SPIs, four TWIs, 4-lane MIPI DSI, LVDS, USB OTG/HOST, SD/MMC, I2S/PCM, RSB, and a lot more. Allwinner also designed R16 to be extremely power-efficient to realize massive ubiquitous deployment. It achieves lower power consumption with a balanced combination of several features: the exceedingly power efficient Cortex-A7 CPU cores, the advanced fabrication process, the battery-saving DVFS technology support, and the low power design architectures, etc. Block Diagram Connectivity CPU GPU Cortex-A7 Cortex-A7 8×GPIO Mali400 Mali400 5×UART/SPI Cortex-A7 Cortex-A7 /4×TWI/RSB Display USB Host/OTG Video Engine LVDS SD/MMC RGB/CPU LCD I2S/PCM Memory MIPI DSI Camera DDR3/ DDR3L Nand Flash Security Audio Codec System eMMC Specifications · Quad-core Cortex™-A7 · 256KB L1 Cache CPU · 512KB L2 Cache GPU · Mali400 · Supports OpenGL ES 2.0 / VG 1.1 standards · Supports 1080p@60fps video playback · Supports multi-format FHD video decoding, including Mpeg1/2, Mpeg4 SP/ASP GMC, H.263, H.264, WMV9/VC-1, VP8, etc.