CPUs, GPUs, Accelerators and Memory V1.0.pdf

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

Effective Virtual CPU Configuration with QEMU and Libvirt

Effective Virtual CPU Configuration with QEMU and libvirt. Kashyap Chamarthy <[email protected]>, Open Source Summit Edinburgh, 2018. Timeline of recent CPU flaws, 2018: Jan 03 • Spectre v1: Bounds Check Bypass; Jan 03 • Spectre v2: Branch Target Injection; Jan 03 • Meltdown: Rogue Data Cache Load; May 21 • Spectre-NG: Speculative Store Bypass; Jun 21 • TLBleed: Side-channel attack over shared TLBs; Jun 29 • NetSpectre: Side-channel attack over local network; Jul 10 • Spectre-NG: Bounds Check Bypass Store; Aug 14 • L1TF: "L1 Terminal Fault"; ... • ? What this talk is not about. Out of scope: internals of various side-channel attacks; how to exploit Meltdown & Spectre variants; details of performance implications. Related talks are in the ‘References’ section. KVM-based virtualization components (diagram labels): OpenStack, et al.; libguestfs (guestfish); Virt Driver; libvirtd; QMP, QMP; QEMU, QEMU; VM1, VM2; Disk1, Disk2; Custom Appliance; ioctl(); Linux with KVM.

Wind Rose Data Comes in the Form >200,000 Wind Rose Images

Making Wind Speed and Direction Maps. Rich Stromberg, Alaska Energy Authority, [email protected] / 907-771-3053. 6/30/2011, Wind Direction Maps. Wind rose data comes in the form of >200,000 wind rose images across Alaska. Wind rose data is quantified in very large Excel™ spreadsheets for each region of the state. • Fields: X Y X_1 Y_1 FILE FREQ1 FREQ2 FREQ3 FREQ4 FREQ5 FREQ6 FREQ7 FREQ8 FREQ9 FREQ10 FREQ11 FREQ12 FREQ13 FREQ14 FREQ15 FREQ16 SPEED1 SPEED2 SPEED3 SPEED4 SPEED5 SPEED6 SPEED7 SPEED8 SPEED9 SPEED10 SPEED11 SPEED12 SPEED13 SPEED14 SPEED15 SPEED16 POWER1 POWER2 POWER3 POWER4 POWER5 POWER6 POWER7 POWER8 POWER9 POWER10 POWER11 POWER12 POWER13 POWER14 POWER15 POWER16 WEIBC1 WEIBC2 WEIBC3 WEIBC4 WEIBC5 WEIBC6 WEIBC7 WEIBC8 WEIBC9 WEIBC10 WEIBC11 WEIBC12 WEIBC13 WEIBC14 WEIBC15 WEIBC16 WEIBK1 WEIBK2 WEIBK3 WEIBK4 WEIBK5 WEIBK6 WEIBK7 WEIBK8 WEIBK9 WEIBK10 WEIBK11 WEIBK12 WEIBK13 WEIBK14 WEIBK15 WEIBK16. Data set is thinned down to wind power density. • Fields: X Y POWER1 POWER2 POWER3 POWER4 POWER5 POWER6 POWER7 POWER8 POWER9 POWER10 POWER11 POWER12 POWER13 POWER14 POWER15 POWER16 • POWER1 is the wind power density coming from the north (0 degrees). POWER2 is wind power from 22.5 deg., ..., POWER9 is south (180 deg.), etc. Spreadsheet calculations: X Y POWER1 POWER2 POWER3 POWER4 POWER5 POWER6 POWER7 POWER8 POWER9 POWER10 POWER11 POWER12 POWER13 POWER14 POWER15 POWER16 Max Wind Dir Prim 2nd Wind Dir Sec -132.7365 54.4833 0.643 0.767 1.911 4.083

Portfolio Holdings Listing Select Semiconductors Portfolio As of June

Portfolio Holdings Listing Select Semiconductors Portfolio as of August 31, 2021 The portfolio holdings listing (listing) provides information on a fund’s investments as of the date indicated. Top 10 holdings information (top 10 holdings) is also provided for certain equity and high income funds. The listing and top 10 holdings are not part of a fund’s annual/semiannual report or Form N-Q and have not been audited. The information provided in this listing and top 10 holdings may differ from a fund’s holdings disclosed in its annual/semiannual report and Form N-Q as follows, where applicable: With certain exceptions, the listing and top 10 holdings provide information on the direct holdings of a fund as well as a fund’s pro rata share of any securities and other investments held indirectly through investment in underlying non-money market Fidelity Central Funds. A fund’s pro rata share of the underlying holdings of any investment in high income and floating rate central funds is provided at a fund’s fiscal quarter end. For certain funds, direct holdings in high income or convertible securities are presented at a fund’s fiscal quarter end and are presented collectively for other periods. For the annual/semiannual report, a fund’s investments include trades executed through the end of the last business day of the period. This listing and the top 10 holdings include trades executed through the end of the prior business day. The listing includes any investment in derivative instruments, and excludes the value of any cash collateral held for securities on loan and a fund’s net other assets.

LPC47N267 Product Brief

LPC47N267 100-Pin LPC Super I/O with X-Bus Interface Product Features • 3.3 Volt Operation (5V tolerant) • Programmable Wakeup Event Interface (IO_PME# Pin) • SMI Support (IO_SMI# Pin) • GPIOs (29) • Four IRQ Input Pins • X-Bus Interface - Supports up to 4 external components - Supports I/O cycles (No Memory Support) - 8-Bit Data Transfer - 16-Bit Address Qualification - Write Protection for each component • XNOR Chain • PC99 and ACPI 1.0b Compliant • 100-pin STQFP Package • Intelligent Auto Power Management • 2.88MB Super I/O Floppy Disk Controller - Licensed CMOS 765B Floppy Disk Controller - Software and Register Compatible with Microchip's Proprietary 82077AA Compatible Core - Supports One Floppy Drive Directly - Configurable Open Drain/Push-Pull Output ... • Enhanced Digital Data Separator - 2 Mbps, 1 Mbps, 500 Kbps, 300 Kbps, 250 Kbps Data Rates - Programmable Precompensation Modes • Serial Ports - Two Full Function Serial Ports - High Speed NS16C550 Compatible UARTs with Send/Receive 16-Byte FIFOs - Supports 230k and 460k Baud - Programmable Baud Rate Generator - Modem Control Circuitry • Infrared Communications Controller - IrDA v1.2 (4Mbps), HPSIR, ASKIR, Consumer IR Support - 2 IR Ports - 96 Base I/O Address, 15 IRQ Options and 3 DMA Options • Multi-Mode Parallel Port with ChiProtect - Standard Mode IBM PC/XT, PC/AT, and PS/2 Compatible Bidirectional Parallel Port - Enhanced Parallel Port (EPP) Compatible - EPP 1.7 and EPP 1.9 (IEEE 1284 Compliant) - IEEE 1284 Compliant Enhanced Capabilities Port (ECP) - ChiProtect Circuitry for

AMD EPYC™ 7371 Processors Accelerating HPC Innovation

AMD EPYC™ 7371 Processors Solution Brief: Accelerating HPC Innovation. March, 2019. AMD EPYC 7371 processors (16 core, 3.1GHz): The right choice for HPC. Designed from the ground up for a new generation of solutions, AMD EPYC™ 7371 processors (16 core, 3.1GHz) implement a philosophy of choice without compromise. The AMD EPYC 7371 processor delivers outstanding frequency for applications sensitive to per-core performance such as those licensed on a per-core basis. AMD EPYC 7371 processors are an excellent option when license costs dominate the overall solution cost. In these scenarios the performance-per-dollar of the overall solution is usually best with a CPU that can provide excellent per-core performance. AMD EPYC processors’ innovative architecture translates to tremendous performance. More importantly, the performance you’re paying for can be matched to the performance you need. Exceptional Memory Bandwidth: AMD EPYC server processors deliver 8 channels of memory with support for up to 2TB of memory per processor. Standards Based: AMD is committed to industry standards, offering you a choice in x86 processors with design innovations that target the evolving needs of modern datacenters. No Compromise Product Line: Compute requirements are increasing, datacenter space is not. AMD EPYC server processors offer up to 32 cores and a consistent feature set across all processor models. Power HPC Workloads: Tackle HPC workloads with leading performance and expandability. Accelerate your workloads with up to 33% more PCI Express® Gen 3 lanes. Optimize Productivity: Increase productivity with tools, resources, and communities to help you “code faster, faster code.” Boost application performance with Software Optimization Guides and Performance

Microchip Technology Announces Financial Results for Third Quarter of Fiscal Year 2020

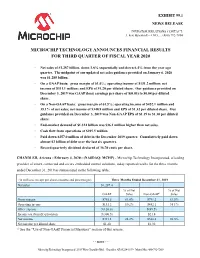

EXHIBIT 99.1 NEWS RELEASE. INVESTOR RELATIONS CONTACT: J. Eric Bjornholt, CFO, (480) 792-7804. MICROCHIP TECHNOLOGY ANNOUNCES FINANCIAL RESULTS FOR THIRD QUARTER OF FISCAL YEAR 2020 ◦ Net sales of $1.287 billion, down 3.8% sequentially and down 6.4% from the year ago quarter. The midpoint of our updated net sales guidance provided on January 6, 2020 was $1.285 billion. ◦ On a GAAP basis: gross margin of 61.0%; operating income of $131.2 million; net income of $311.1 million; and EPS of $1.20 per diluted share. Our guidance provided on December 3, 2019 was GAAP (loss) earnings per share of $(0.03) to $0.04 per diluted share. ◦ On a Non-GAAP basis: gross margin of 61.5%; operating income of $452.1 million and 35.1% of net sales; net income of $340.8 million and EPS of $1.32 per diluted share. Our guidance provided on December 3, 2019 was Non-GAAP EPS of $1.19 to $1.30 per diluted share. ◦ End-market demand of $1.324 billion was $36.1 million higher than net sales. ◦ Cash flow from operations of $395.5 million. ◦ Paid down $257.0 million of debt in the December 2019 quarter. Cumulatively paid down almost $2 billion of debt over the last six quarters. ◦ Record quarterly dividend declared of 36.70 cents per share. CHANDLER, Arizona - February 4, 2020 - (NASDAQ: MCHP) - Microchip Technology Incorporated, a leading provider of smart, connected and secure embedded control solutions, today reported results for the three months ended December 31, 2019 as summarized in the following table:

(in millions, except per share amounts and percentages)

Three Months Ended December 31, 2019. Net sales: $1,287.4

| | GAAP | % of Net Sales | Non-GAAP (1) | % of Net Sales |
|---|---|---|---|---|
| Gross margin | $785.5 | 61.0% | $791.2 | 61.5% |
| Operating income | $131.2 | 10.2% | $452.1 | 35.1% |
| Other expense | $(120.6) | | $(89.5) | |
| Income tax (benefit) provision | $(300.5) | | $21.8 | |
| Net income | $311.1 | 24.2% | $340.8 | 26.5% |
| Net income per diluted share | $1.20 | | $1.32 | |

(1) See the "Use of Non-GAAP Financial Measures" section of this release.

AN2534 PAC193X Integration Notes for Microsoft® Windows® 10 Driver Support

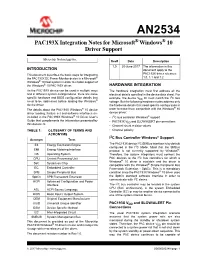

AN2534 PAC193X Integration Notes for Microsoft® Windows® 10 Driver Support. Author: Razvan Ungureanu, Microchip Technology Inc. INTRODUCTION: This document describes the basic steps for integrating the PAC193X DC Power Monitor device in a Microsoft® Windows® 10 host system in order to enable support of the Windows® 10 PAC193X driver. As the PAC193X device can be used in multiple ways and in different system configurations, there are some specific hardware and BIOS configuration details that need to be addressed before loading the Windows® device driver. The details about the PAC193X Windows® 10 device driver loading, feature set and software interfaces are included in the PAC193X Windows® 10 Driver User’s Guide that complements the information presented by this document. TABLE 1: GLOSSARY OF TERMS AND ACRONYMS (Acronym, Term): E3, Energy Estimation Engine; EMI, Energy Metering Interface. TABLE 2: REVISION HISTORY (Rev#, Date, Description): 1.0, 20-June-2017, The information in this document applies to the PAC193X driver releases 1.0, 1.1 and 1.2. HARDWARE INTEGRATION: The hardware integration must first address all the electrical details specified in the device data sheet. For example, the device VDD IO must match the I2C bus voltage. But the following hardware notes address only the hardware details that need specific configuration in order to make them compatible with the Windows® 10 device driver: • I2C bus controller Windows® support • PAC193X VDD and SLOW/ALERT pin connections • Channel shunt resistor values • Channel polarity. I2C Bus Controller Windows® Support: The PAC193X device I2C/SMBus interface is by default configured in the I2C Mode. Mind that the SMBus protocol is not currently supported by Windows®.

Stoxx® Global Automation & Robotics Index

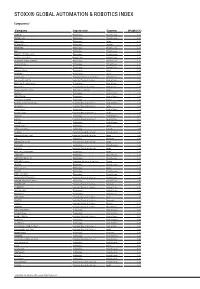

STOXX® GLOBAL AUTOMATION & ROBOTICS INDEX

Components

| Company | Supersector | Country | Weight (%) |
|---|---|---|---|
| SNAP 'A' | Technology | United States | 4.24 |
| NVIDIA Corp. | Technology | United States | 2.80 |
| Nidec Corp. | Technology | Japan | 2.42 |
| HEXAGON B | Technology | Sweden | 2.38 |
| TERADYNE | Technology | United States | 2.31 |
| KLA | Technology | United States | 2.23 |
| MARVELL TECHNOLOGY | Technology | United States | 2.16 |
| Intuitive Surgical Inc. | Health Care | United States | 2.12 |
| ADVANCED MICRO DEVICES | Technology | United States | 2.12 |
| Qualcomm Inc. | Technology | United States | 2.09 |
| Apple Inc. | Technology | United States | 2.06 |
| Advantest Corp. | Technology | Japan | 2.05 |
| LASERTEC | Technology | Japan | 2.04 |
| Garmin Ltd. | Consumer Products & Services | United States | 2.02 |
| Emerson Electric Co. | Industrial Goods & Services | United States | 2.02 |
| Microchip Technology Inc. | Technology | United States | 1.98 |
| Ametek Inc. | Industrial Goods & Services | United States | 1.96 |
| Toyota Industries Corp. | Automobiles & Parts | Japan | 1.96 |
| Xilinx Inc. | Technology | United States | 1.95 |
| SERVICENOW | Technology | United States | 1.88 |
| DASSAULT SYSTEMS | Technology | France | 1.82 |
| Rockwell Automation Corp. | Industrial Goods & Services | United States | 1.74 |
| Fanuc Ltd. | Industrial Goods & Services | Japan | 1.74 |
| Autodesk Inc. | Technology | United States | 1.68 |
| Keyence Corp. | Industrial Goods & Services | Japan | 1.63 |
| Ansys Inc. | Technology | United States | 1.61 |
| HALMA | Industrial Goods & Services | Great Britain | 1.56 |
| PTC INC | Technology | United States | 1.53 |
| Omron Corp. | Technology | Japan | 1.52 |
| OPEN TEXT (NAS) | Technology | Canada | 1.49 |
| COGNEX | Industrial Goods & Services | United States | 1.48 |
| Yaskawa Electric Corp. | Industrial Goods & Services | Japan | 1.40 |
| SAP | Technology | Germany | 1.40 |
| MINEBEA MITSUMI | Industrial Goods & Services | Japan | 1.24 |
| Intel Corp. | | | |

Openpower AI CERN V1.Pdf

Moore’s Law; Price/Performance, 2000 to 2020. [Stack diagram labels: Processor Technology; Firmware / OS; Linux; Accelerators; Software; OpenStack; Storage; Network; ...] POWER8: up to 12 cores, up to 96 threads; L1, L2, L3 + L4 caches; up to 1 TB per socket; up to 230 GB/s sustained memory bandwidth (DRAM Memory Chips, Buffer). https://www.ibm.com/blogs/systems/power-systems-openpower-enable-acceleration/ [System diagram labels: System Memory, 115 GB/s; POWER8 CPU; NVLink, 80 GB/s; P100 GPU; GPU Memory; GPU, PCIe, CPU, 16 GB/s; System bottleneck; Graphics Memory; System Memory.] IBM advantage: data communication and GPU performance. ImageNet / Alexnet, minibatch size = 128: POWER8 + Tesla P100 + NVLink, 78 ms; x86-based GPU system, 170 ms. ADD: Coherent Accelerator Processor Interface (CAPI): FPGA, CAPP, PCIe, POWER8 Processor ... FPGAs, networking, memory ... Typical I/O model flow: DD Call; Copy or Pin Source Data; MMIO Notify Accelerator; Acceleration; Poll / Int Completion; Copy or Unpin Result Data; Ret. From DD Completion. Flow with a coherent model: Shared Mem. Notify Accelerator; Acceleration; Shared Memory Completion. [Roadmap labels: Focus on Enterprise, Technology and Performance Driven; Focus on Scale-Out and Enterprise, Cost and Acceleration Driven; Scale-Up; Future; Partner Chip. POWER6 Architecture, POWER7 Architecture, POWER8 Architecture, POWER9 Architecture, POWER10, POWER8/9. 2007, 2008, 2010, 2012, 2014, 2016, 2017, TBD, 2018 - 20, 2020+. POWER6, POWER6+, POWER7, POWER7+, POWER8, POWER8 w/ NVLink, P9 SO, P9 SU, P9 SO. 2 cores, 2 cores, 8 cores, 8 cores, 12 cores.]

Evaluation of AMD EPYC

Evaluation of AMD EPYC. Chris Hollowell <[email protected]>, HEPiX Fall 2018, PIC Spain. What is EPYC? EPYC is a new line of x86_64 server CPUs from AMD based on their Zen microarchitecture, the same microarchitecture used in their Ryzen desktop processors. Released June 2017. It is the first new high performance series of server CPUs offered by AMD since 2012: the last were Piledriver-based Opterons; Steamroller Opteron products were cancelled; AMD had focused on low power server CPUs instead (x86_64 Jaguar APUs, ARM-based Opteron A CPUs). Many vendors are now offering EPYC-based servers, including Dell, HP and Supermicro. How Does EPYC Differ From Skylake-SP? Intel’s Skylake-SP Xeon x86_64 server CPU line was also released in 2017. Both Skylake-SP and EPYC CPU dies are manufactured using a 14 nm process. Skylake-SP introduced AVX512 vector instruction support in Xeon; AVX512 is not available in EPYC. HS06 official GCC compilation options exclude autovectorization. Stock SL6/7 GCC doesn’t support AVX512 (support added in GCC 4.9+). AVX512 is not heavily used (yet) in HEP/NP offline computing. Both have models supporting 2666 MHz DDR4 memory: Skylake-SP has 6 memory channels per processor and 3 TB (2-socket system, extended memory models); EPYC has 8 memory channels per processor and 4 TB (2-socket system). How Does EPYC Differ From Skylake (Cont)? Some Skylake-SP processors include built in Omnipath networking, or FPGA coprocessors; these are not available in EPYC. Both Skylake-SP and EPYC have SMT (HT) support: 2 logical cores per physical core (absent in some Xeon Bronze models). Maximum core count (per socket): Skylake-SP, 28 physical / 56 logical (Xeon Platinum 8180M); EPYC, 32 physical / 64 logical (EPYC 7601). Maximum socket count: Skylake-SP, 8 (Xeon Platinum); EPYC, 2. Processor interconnect: Skylake-SP, UltraPath Interconnect (UPI); EPYC, Infinity Fabric (IF). PCIe lanes (2-socket system): Skylake-SP, 96; EPYC, 128 (some used by SoC functionality), with the same number available in single socket configuration. EPYC: MCM/SoC Design. EPYC utilizes an SoC design: many functions normally found in the motherboard chipset are on the CPU (SATA controllers, USB controllers, etc.).

POWER10 Processor Chip

POWER10 Processor Chip. Technology and Packaging: - 602 mm² 7nm Samsung (18B devices) - 18 layer metal stack, enhanced device - Single-chip or Dual-chip sockets. Computational Capabilities: - Up to 15 SMT8 Cores (2 MB L2 Cache / core) (Up to 120 simultaneous hardware threads) - Up to 120 MB L3 cache (low latency NUCA mgmt) - 3x energy efficiency relative to POWER9 - Enterprise thread strength optimizations - AI and security focused ISA additions - 2x general, 4x matrix SIMD relative to POWER9 - EA-tagged L1 cache, 4x MMU relative to POWER9. Open Memory Interface: - 16 x8 at up to 32 GT/s (1 TB/s) - Technology agnostic support: near/main/storage tiers - Minimal (< 10ns latency) add vs DDR direct attach. PowerAXON Interface: - 16 x8 at up to 32 GT/s (1 TB/s) - SMP interconnect for up to 16 sockets - OpenCAPI attach for memory, accelerators, I/O - Integrated clustering (memory semantics). PCIe Gen 5 Interface: [Die diagram labels: PowerAXON x32+4; SMT8 Core, 2MB L2; Local 8MB L3 region; 64 MB L3 Hemisphere; Memory Signaling (8x8 OMI); SMP, Memory, Accel, Cluster, PCI Interconnect; PCIe Gen 5 Signaling (x16).]

Analyzing the Performance of Intel Optane DC Persistent Memory in Storage Over App Direct Mode

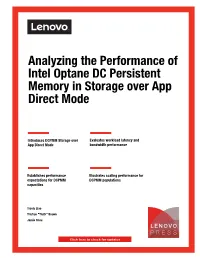

Front cover. Analyzing the Performance of Intel Optane DC Persistent Memory in Storage over App Direct Mode. Introduces DCPMM Storage over App Direct Mode. Evaluates workload latency and bandwidth performance. Establishes performance expectations for DCPMM capacities. Illustrates scaling performance for DCPMM populations. Travis Liao, Tristian "Truth" Brown, Jamie Chou. Abstract: Intel Optane DC Persistent Memory is the latest memory technology for Lenovo® ThinkSystem™ servers. This technology deviates from contemporary flash storage offerings and utilizes the ground-breaking 3D XPoint non-volatile memory technology to deliver a new level of versatile performance in a compact memory module form factor. As server storage technology continues to advance from devices behind RAID controllers to offerings closer to the processor, it is important to understand the technological differences and suitable use cases. This paper provides a look into the performance of Intel Optane DC Persistent Memory Modules configured in Storage over App Direct Mode operation. At Lenovo Press, we bring together experts to produce technical publications around topics of importance to you, providing information and best practices for using Lenovo products and solutions to solve IT challenges. See a list of our most recent publications at the Lenovo Press web site: http://lenovopress.com Do you have the latest version? We update our papers from time to time, so check whether you have the latest version of this document by clicking the Check for Updates button on the front page of the PDF. Pressing this button will take you to a web page that will tell you if you are reading the latest version of the document and give you a link to the latest if needed.