Spotify at TREC 2020: Genre-Aware Abstractive Podcast Summarization

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Looking for Podcast Suggestions? We’Ve Got You Covered

Looking for podcast suggestions? We’ve got you covered. We asked Loomis faculty members to share their podcast playlists with us, and they offered a variety of suggestions as wide-ranging as their areas of personal interest and professional expertise. Here’s a collection of 85 of these free, downloadable audio shows for you to try, listed alphabetically with their “recommenders” listed below each entry: 30 for 30 You may be familiar with ESPN’s 30 for 30 series of award-winning sports documentaries on television. The podcasts of the same name are audio documentaries on similarly compelling subjects. Recent podcasts have looked at the man behind the Bikram Yoga fitness craze, racial activism by professional athletes, the origins of the hugely profitable Ultimate Fighting Championship, and the lasting legacy of the John Madden Football video game. Recommended by Elliott: “I love how it involves the culture of sports. You get an inner look on a sports story or event that you never really knew about. Brings real life and sports together in a fantastic way.” 99% Invisible From the podcast website: “Ever wonder how inflatable men came to be regular fixtures at used car lots? Curious about the origin of the fortune cookie? Want to know why Sigmund Freud opted for a couch over an armchair? 99% Invisible is about all the thought that goes into the things we don’t think about — the unnoticed architecture and design that shape our world.” Recommended by Scott ABCA Calls from the Clubhouse Interviews with coaches in the American Baseball Coaches Association Recommended by Donnie, who is head coach of varsity baseball and says the podcast covers “all aspects of baseball, culture, techniques, practices, strategy, etc. -

WHITE SOX HEADLINES of NOVEMBER 20, 2017 “White Sox

WHITE SOX HEADLINES OF NOVEMBER 20, 2017 “White Sox face roster call on Clarkin, 6 more” … Scott Merkin, MLB.com “Up close, White Sox see same big potential Cubs forecasted for Dylan Cease” … Patrick Mooney, NBC Sports Chicago “Omar Vizquel will reportedly be a minor league manager for White Sox in 2018” … Vinnie Duber, NBC Sports Chicago “Frank Kaminsky on the White Sox rebuild: ‘The Process is paying off for the 76ers. I hope it pays off for us too’” … Jon Greenberg, The Athletic “Rumor Central: Athletics eyeing White Sox OF Avisail Garica as trade target?” … Nick Ostiller, ESPN.com White Sox face roster call on Clarkin, 6 more Chicago must decide which top prospects to protect from Rule 5 Draft By Scott Merkin / MLB.com | Nov. 17, 2017 CHICAGO -- By all accounts, Ian Clarkin threw the ball well during recently completed White Sox instructional league action at Camelback Ranch in Glendale, Ariz. Even more important, the left-hander felt healthy through six or seven side sessions, after dealing with myriad injuries during his Minor League career. That combination, along with his raw talent, puts Clarkin in play to be added to the White Sox 40-man roster by the 7 p.m. CT deadline on Monday, protecting him from exposure to selection by the rest of baseball in the Rule 5 Draft on Dec. 14 at the Winter Meetings in Lake Buena Vista, Fla. Clarkin, Chicago's No. 22 prospect according to MLBPipeline.com, was selected by the Yankees at No. 33 overall in the 2013 MLB Draft, one pick after Aaron Judge, the '17 American League Rookie of the Year Award winner and MVP Award runner-up. -

Teacher Notes

Media Series - TV Teacher Notes Television in the Global Age Teachers’ Notes The resources are intended to support teachers delivering on the new AS and A level specifications. They have been created based on the assumption that many teachers will already have some experience of teaching Media Studies and therefore have been pitched at a level which takes this into consideration. Other resources are readily available which outline e.g. technical and visual codes and how to apply these. There is overlap between the different areas of the theoretical framework and the various contexts, and a “text-out” teaching structure may offer opportunities for a more holistic approach. Slides are adaptable to use with your students. Explanatory notes for teachers/suggestions for teaching are in the Teachers’ Notes. The resources are intended to offer guidance only and are by no means exhaustive. It is expected that teachers will subsequently research and use their own materials and teaching strategies within their delivery. Television as an industry has changed dramatically since its inception. Digital technologies and other external factors have led to changes in production, distribution, the increasingly global nature of television and the ways in which audiences consume texts. It is expected that students will require teacher-led delivery which outlines these changes, but the focus of delivery will differ dependent on texts chosen. THE JINX: The Life and Deaths of Robert Durst Episode Suggestions Episode 1 ‘The Body in the Bay’ is the ‘set’ text but you may also want to look at others, particularly Episode 6 with its “shocking” conclusion. -

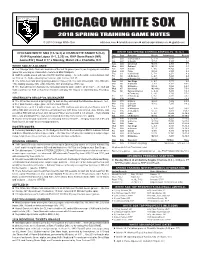

Chicago White Sox 2018 Spring Training Game Notes

CHICAGO WHITE SOX 2018 SPRING TRAINING GAME NOTES © 2018 Chicago White Sox whitesox.com l loswhitesox.com l whitesoxpressbox.com l @whitesox CHICAGO WHITE SOX (15-12-3) at CHARLOTTE KNIGHTS (0-0) WHITE SOX SPRING SCHEDULE/RESULTS: 16-12-3 RHP Reynaldo López (1-1, 3.29) vs. RHP Donn Roach (NR) Day Date Opponent Result Attendance Record Fri. 2/23 at LA Dodgers L, 5-13 6,813 0-1 Game #32 | Road # 17 l Monday, March 26 l Charlotte, N.C. Sat. 2/24 at Seattle W, 5-3 5,107 1-1 Sun. 2/25 Cincinnati W, 8-5 2,703 2-1 WHITE SOX AT A GLANCE Mon. 2/26 Oakland W, 7-6 2,826 3-1 l The Chicago White Sox have won nine of the last 14 games (one tie) as they play an exhibition Tue. 2/27 at Cubs L, 5-6 10,769 3-2 game this evening vs. Class AAA Charlotte at BB&T Ballpark. Wed. 2/28 Texas W, 5-4 2,360 4-2 Thu. 3/1 at Cincinnati L, 7-8 2,271 4-3 l RHP Reynaldo López will make his fifth start this spring … he suffered the loss in his last start Fri. 3/2 LA Dodgers L, 6-7 7,423 4-4 on 3/16 vs. the Cubs, allowing four runs on eight hits over 4.1 IP. Sat. 3/3 at Kansas City W, 9-5 5,793 5-4 l The White Sox rank among spring leaders in triples (1st, 15), runs scored (4th, 187), RBI (4th, Sun. -

2020 Topps Chrome Sapphire Edition .Xls

SERIES 1 1 Mike Trout Angels® 2 Gerrit Cole Houston Astros® 3 Nicky Lopez Kansas City Royals® 4 Robinson Cano New York Mets® 5 JaCoby Jones Detroit Tigers® 6 Juan Soto Washington Nationals® 7 Aaron Judge New York Yankees® 8 Jonathan Villar Baltimore Orioles® 9 Trent Grisham San Diego Padres™ Rookie 10 Austin Meadows Tampa Bay Rays™ 11 Anthony Rendon Washington Nationals® 12 Sam Hilliard Colorado Rockies™ Rookie 13 Miles Mikolas St. Louis Cardinals® 14 Anthony Rendon Angels® 15 San Diego Padres™ 16 Gleyber Torres New York Yankees® 17 Franmil Reyes Cleveland Indians® 18 Minnesota Twins® 19 Angels® Angels® 20 Aristides Aquino Cincinnati Reds® Rookie 21 Shane Greene Atlanta Braves™ 22 Emilio Pagan Tampa Bay Rays™ 23 Christin Stewart Detroit Tigers® 24 Kenley Jansen Los Angeles Dodgers® 25 Kirby Yates San Diego Padres™ 26 Kyle Hendricks Chicago Cubs® 27 Milwaukee Brewers™ Milwaukee Brewers™ 28 Tim Anderson Chicago White Sox® 29 Starlin Castro Washington Nationals® 30 Josh VanMeter Cincinnati Reds® 31 American League™ 32 Brandon Woodruff Milwaukee Brewers™ 33 Houston Astros® Houston Astros® 34 Ian Kinsler San Diego Padres™ 35 Adalberto Mondesi Kansas City Royals® 36 Sean Doolittle Washington Nationals® 37 Albert Almora Chicago Cubs® 38 Austin Nola Seattle Mariners™ Rookie 39 Tyler O'neill St. Louis Cardinals® 40 Bobby Bradley Cleveland Indians® Rookie 41 Brian Anderson Miami Marlins® 42 Lewis Brinson Miami Marlins® 43 Leury Garcia Chicago White Sox® 44 Tommy Edman St. Louis Cardinals® 45 Mitch Haniger Seattle Mariners™ 46 Gary Sanchez New York Yankees® 47 Dansby Swanson Atlanta Braves™ 48 Jeff McNeil New York Mets® 49 Eloy Jimenez Chicago White Sox® Rookie 50 Cody Bellinger Los Angeles Dodgers® 51 Anthony Rizzo Chicago Cubs® 52 Yasmani Grandal Chicago White Sox® 53 Pete Alonso New York Mets® 54 Hunter Dozier Kansas City Royals® 55 Jose Martinez St. -

09-29-2020 White Sox Wild Card Roster

2020 CHICAGO WHITE SOX ROSTER UPDATED: September 29, 2020 WHITE SOX COACHING STAFF, TRAINERS AND SUPPORT STAFF Manager: Rick Renteria (#17) | Coaches: Daryl Boston, First Base (#8); Nick Capra, Third Base (#12); Scott Coolbaugh, Asst. Hitting (#46) Don Cooper, Pitching (#21); Curt Hasler, Bullpen (#29); Joe McEwing, Bench (#82); Frank Menechino, Hitting (#22) Head Trainer: Brian Ball | Assistant Trainers: Brett Walker (Physical Therapist); James Kruk; Josh Fallin Strength/Conditioning: Allen Thomas (Director); Dale Torborg (Assistant) | Massage Therapist: Jessica Labunski Bullpen Catchers: Luis Sierra; Miguel González | Pre-Game Instructor and Replay: Mike Kashirsky Director of Team Travel: Ed Cassin | Video Coaching Coordinator: Bryan Johnson Clubhouse Managers: Rob Warren (Home); Jason Gilliam (Visiting); Joe McNamara Jr. (Umpires) WHITE SOX ACTIVE ROSTER (28) NUMERICAL ROSTER (28) No. Pitchers (13) B T HT WT Born Birthplace Residence No. Player Pos. 39 Aaron Bummer L L 6-3 215 9/21/93 Valencia, Calif. Omaha, Neb. 1 Nick Madrigal ...............................2B 84 Dylan Cease R R 6-2 200 12/28/95 Milton, Ga. Ball Ground, Ga. 5 Yolmer Sánchez .........................INF 48 Alex Colomé ("co-low-MAY") R R 6-1 225 12/31/88 Santo Domingo, D.R. San Pedro de Macorís, D.R. 7 Tim Anderson ..............................SS 50 Jimmy Cordero R R 6-4 235 10/19/91 San Cristóbal, D.R. Reading, Pa. 10 Yoán Moncada ............................INF 45 Garrett Crochet ("CROW-shay") L L 6-6 220 6/21/99 Ocean Springs, Miss. Ocean Springs, Miss. 15 Adam Engel .................................OF 51 Dane Dunning R R 6-4 225 12/20/94 Orange Park, Fla. -

Nancy Grace on Her Third Crimecon and Why She's Driven to Catch Criminals

DRIVEN BY JUSTICE Nancy Grace on Her Third CrimeCon and Why She’s Driven to Catch Criminals Perennial fan-favorite Nancy coming to a family reunion. Grace returns for her third The people who are here are CrimeCon, and she’s bringing all like me — dedicated to crime the fire, emotion, and endearing and crime sleuthing,” she told Southern colloquialisms we’ve the Indy Star during CrimeCon come to expect from the pint- 2017. “It’s very invigorating to be sized powerhouse. Grace has around people who share the become a staple of CrimeCon same interest and the natural weekend, often found taking curiosity that I have.” selfies with fans (“The angle is key,” she says) or laughing with Grace has dedicated most of podcasters on Podcast Row. her life to finding answers to the questions that drive our When she takes the stage at fascination with true crime. CrimeCon, though, she is all Her fans are familiar with the business. Grace is famous for story of Grace’s fiance, Keith, her impassioned rhetoric and who was gunned down in boundless energy, and she has his vehicle when Grace was no trouble rousing a crowd nineteen years old. His death -- and CrimeCon is exactly her sparked a change in Grace, kind of crowd. “It felt like I was who promptly switched gears, dropped her literature major, and began studying for law school. She earned a reputation as an outspoken and energetic prosecutor in Atlanta before becoming a broadcaster and crime commentator on HLN and other cable networks. “Wherever I can best do the work I’m called to do, that’s where I’ll go,” Grace told the CrimeCon Informant in 2017. -

Chicago White Sox 2018 Game Notes and Information

CHICAGO WHITE SOX 2018 GAME NOTES AND INFORMATION Chicago White Sox Media Relations Department 333 W. 35th Street Chicago, IL 60616 Phone: 312-674-5300 Senior Director: Bob Beghtol Assistant Director: Ray Garcia Manager: Billy Russo Coordinators: Joe Roti and Hannah Sundwall © 2018 Chicago White Sox whitesox.com loswhitesox.com whitesoxpressbox.com chisoxpressbox.com @whitesox WHITE SOX 2018 BREAKDOWN OAKLAND A'S (40-37) at CHICAGO WHITE SOX (25-51) Sox After 76 | 77 in 2017 ..............33-43 | 33-44 Streak ...................................................... Lost 1 RHP Paul Blackburn (1-1, 8.03) vs. LHP Carlos Rodón (0-2, 4.41) Current Homestand ......................................1-2 Game #77/Home #40 Sunday, June 24 1:10 p.m. Guaranteed Rate Field Last Trip ........................................................0-3 WGN-TV WGN Radio 720-AM WRTO 1200-AM MLB.TV Last 10 Games .............................................1-9 Series Record ..........................................5-16-3 Series First Game.......................................7-18 WHITE SOX AT A GLANCE WHITE SOX VS. OAKLAND First | Second Half ............................25-51 | 0-0 Home | Road ................................13-26 | 12-25 The Chicago White Sox have lost nine of their last 10 games The A's lead the season series, 5-1, and have clinched back- Day | Night ....................................10-30 | 15-21 as they continue a seven-game homestand this afternoon with to-back season series victories over the White Sox for the fi rst Opp. At-Above | Below .500 ......... 11-28 | 14-23 the fi nale vs. Oakland. time since 2009-10. vs. RHS | LHS ................................19-39 | 6-12 LHP Carlos Rodón is scheduled to start for the White Sox, The White Sox are batting .249/.304/.358 (57-229) with a vs. -

Judging the Suspect-Protagonist in True Crime Documentaries

Wesleyan University The Honors College Did He Do It?: Judging the Suspect-Protagonist in True Crime Documentaries by Paul Partridge Class of 2018 A thesis submitted to the faculty of Wesleyan University in partial fulfillment of the requirements for the Degree of Bachelor of Arts with Departmental Honors from the College of Film and the Moving Image Middletown, Connecticut April, 2018 Table of Contents Acknowledgments…………………………………...……………………….iv Introduction ...…………………………………………………………………1 Review of the Literature…………………………………………………………..3 Questioning Genre………………………………………………………………...9 The Argument of the Suspect-protagonist...…………………………………......11 1. Rise of a Genre……………………………………………………….17 New Ways of Investigating the Past……………………………………..19 Connections to Literary Antecedents…………………..………………...27 Shocks, Twists, and Observation……………………………..………….30 The Genre Takes Off: A Successful Marriage with the Binge-Watch Structure.........................................................................34 2. A Thin Blue Through-Line: Observing the Suspect-Protagonist Since Morris……………………………….40 Conflicts Crafted in Editing……………………………………………...41 Reveal of Delayed Information…………………………………………..54 Depictions of the Past…………………………………………………….61 3. Seriality in True Crime Documentary: Finding Success and Cultural Relevancy in the Binge- Watching Era…………………………………………………………69 Applying Television Structure…………………………………………...70 (De)construction of Innocence Through Long-Form Storytelling……….81 4. The Keepers: What Does it Keep, What Does ii It Change?..............................................................................................95 -

Review of Capote's in Cold Blood

Themis: Research Journal of Justice Studies and Forensic Science Volume 1 Themis: Research Journal of Justice Studies and Forensic Science, Volume I, Spring Article 1 2013 5-2013 Review of Capote’s In Cold Blood Yevgeniy Mayba San Jose State University Follow this and additional works at: https://scholarworks.sjsu.edu/themis Part of the American Literature Commons, Criminal Law Commons, and the Criminology and Criminal Justice Commons Recommended Citation Mayba, Yevgeniy (2013) "Review of Capote’s In Cold Blood," Themis: Research Journal of Justice Studies and Forensic Science: Vol. 1 , Article 1. https://doi.org/10.31979/THEMIS.2013.0101 https://scholarworks.sjsu.edu/themis/vol1/iss1/1 This Book Review is brought to you for free and open access by the Justice Studies at SJSU ScholarWorks. It has been accepted for inclusion in Themis: Research Journal of Justice Studies and Forensic Science by an authorized editor of SJSU ScholarWorks. For more information, please contact [email protected]. Review of Capote’s In Cold Blood Keywords Truman Capote, In Cold Blood, book review This book review is available in Themis: Research Journal of Justice Studies and Forensic Science: https://scholarworks.sjsu.edu/themis/vol1/iss1/1 Mayba: In Cold Blood Review 1 Review of Capote’s In Cold Blood Yevgeniy Mayba Masterfully combining fiction and journalism, Truman Capote delivers a riveting account of the senseless mass murder that occurred on November 15, 1959 in the quiet rural town of Holcomb, Kansas. Refusing to accept the inherent lack of suspense in his work, Capote builds the tension with the brilliant use of imagery and detailed exploration of the characters. -

White Sox Headlines of July 14, 2017

WHITE SOX HEADLINES OF JULY 14, 2017 “White Sox get haul in Quintana blockbuster” … Scott Merkin, MLB.com “Hahn has plenty of work to do before Deadline” … Scott Merkin, MLB.com “Which would you rather have: The Cubs rotation or White Sox farm system?” … Michael Clair, MLB.com “White Sox add to deep farm system” …Jonathan Mayo, MLB.com “Chicago neighbors have pulled off 26 trades in clubs' histories” … David Adler, MLB.com “Trades, debuts in store for Sox second half” … Scott Merkin, MLB.com “Cubs’ ‘best offer’ for Jose Quintana made it easy for Rick Hahn, White sox to pull trigger” … Dan Hayes, CSN Chicago “Rick Hahn: The idea ego would prevent a White Sox-Cubs deal is ‘laughable’” … Dan Hayes, CSN Chicago “One AL scout thinks new White Sox prospect Eloy Jiminez ‘might be a monster’, maybe better than Yoan Moncada” … Vinnie Duber, CSN Chicago “Like with Chris Sale and Adam Eaton, White Sox get another massive haul for Jose Quintana” … Dan Hayes, CSN Chicago “Short journey for new White Sox prospect Eloy Jimenez after crosstown trade” … Colleen Kane, Chicago Tribune “White Sox add 'one of most exciting prospects' to booming farm system” … Colleen Kane, Chicago Tribune “Phone call at All-Star fan convention helped Jose Quintana trade progress” Colleen Kane, Chicago Tribune “Hawk Harrelson on trade: 'If you can make your ballclub better, I don't give a (bleep) who it's with'” … Teddy Greenstein, Chicago Tribune “White Sox, Frazier begin second half with more trades looming” … Daryl Van Schouwen, Chicago Sun-Times “Who won the previous 5 -

Podcasting As Public Media: the Future of U.S

International Journal of Communication 14(2020), 1683–1704 1932–8036/20200005 Podcasting as Public Media: The Future of U.S. News, Public Affairs, and Educational Podcasts PATRICIA AUFDERHEIDE American University, USA DAVID LIEBERMAN The New School, USA ATIKA ALKHALLOUF American University, USA JIJI MAJIRI UGBOMA The New School, USA This article identifies a U.S.-based podcasting ecology as public media and then examines the threats to its future. It first identifies characteristics of a set of podcasts in the United States that allow them to be usefully described as public podcasting. Second, it looks at current business trends in podcasting as platformization proceeds. Third, it identifies threats to public podcasting’s current business practices. Finally, it analyzes responses within public podcasting to the potential threats. The article concludes that currently, the public podcast ecology in the United States maintains some immunity from the most immediate threats, but there are also underappreciated threats to it, both internally and externally. Keywords: podcasting, public media, platformization, business trends, public podcasting ecology As U.S. podcasting becomes a commercially viable part of the media landscape, are its public service functions at risk? This article explores that question, in the process postulating that the concept of public podcasting has utility in describing not only a range of podcasting practices, but also an ecology within the larger podcasting ecology—one that permits analysis of both business methods and social practices, and one that deserves attention and even protection. This analysis contributes to the burgeoning literature on Patricia Aufderheide: [email protected] David Lieberman: [email protected] Atika Alkhallouf: [email protected] Jiji Majiri Ugboma: [email protected] Date submitted: 2019‒09‒27 Copyright © 2020 (Patricia Aufderheide, David Lieberman, Atika Alkhallouf, and Jiji Majiri Ugboma).