Marshall Space Flight Center Faculty Fellowship Program

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

Modular Spacecraft with Integrated Structural Electrodynamic Propulsion

NAS5-03110-07605-003-050 Modular Spacecraft with Integrated Structural Electrodynamic Propulsion Nestor R. Voronka, Robert P. Hoyt, Jeff Slostad, Brian E. Gilchrist (UMich/EDA), Keith Fuhrhop (UMich) Tethers Unlimited, Inc. 11807 N. Creek Pkwy S., Suite B-102 Bothell, WA 98011 Period of Performance: 1 September 2005 through 30 April 2006 Report Date: 1 May 2006 Phase I Final Report Contract: NAS5-03110 Subaward: 07605-003-050 Prepared for: NASA Institute for Advanced Concepts Universities Space Research Association Atlanta, GA 30308 TABLE OF CONTENTS: TABLE OF FIGURES; I. PHASE I SUMMARY; I.A. INTRODUCTION; I.B. MOTIVATION; I.C. ELECTRODYNAMIC PROPULSION; I.D. INTEGRATED STRUCTURAL -

National Radio Science Meeting, 5-8 January 1993

NATIONAL ACADEMIES OF SCIENCE AND ENGINEERING NATIONAL RESEARCH COUNCIL of the UNITED STATES OF AMERICA UNITED STATES NATIONAL COMMITTEE International Union of Radio Science, National Radio Science Meeting 5-8 January 1993 Sponsored by USNC/URSI in cooperation with Institute of Electrical and Electronics Engineers University of Colorado Boulder, Colorado U.S.A. National Radio Science Meeting 5-8 January 1993 Condensed Technical Program Monday, 4 January 2000-2400 USNC-URSI Meeting Broker Inn Tuesday, 5 January 0835-1200 B-1 ANTENNAS CR2-28 0855-1200 A-1 MICROWAVE MEASUREMENTS CR1-46 D-1 MICROWAVE, QUASI-OPTICAL, AND ELECTROOPTICAL DEVICES CR1-9 G-1 COORDINATED CAMPAIGNS AND ACTIVE EXPERIMENTS CR0-30 J/H-1 RADIO AND RADAR ASTRONOMY OF THE SOLAR SYSTEM CR2-26 1335-1700 B-2 SCATTERING CR2-28 F-1 SENSING OF ATMOSPHERE AND OCEAN CR2-6 G-2 IONOSPHERIC PROPAGATION CHANNEL CR0-30 J/H-2 RADIO AND RADAR ASTRONOMY OF THE SOLAR SYSTEM CR2-26 1355-1700 A-2 EM FIELD MEASUREMENTS CR1-46 D-2 OPTOELECTRONICS DEVICES AND APPLICATION CR1-9 1700-1800 Commission A Business Meeting CR1-46 Commission C Business Meeting CR1-40 Commission D Business Meeting CR1-9 Commission G Business Meeting CR0-30 Wednesday, 6 January 0815-1200 PLENARY SESSION MATH 100 1335-1700 B-3 EM THEORY CR2-28 D-3 MICROWAVE AND MILLIMETER AND RELATED DEVICES CR1-9 F-2 PROPAGATION MODELING AND SCATTERING CR2-6 H-1 PLASMA WAVES IN THE IONOSPHERE AND THE MAGNETOSPHERE CR1-42 J-1 FIBER OPTICS IN RADIO ASTRONOMY CR2-26 1355-1700 E-1 HIGH POWER ELECTROMAGNETICS (HPE) AND INTERFERENCE PROBLEMS CR1-40 United States National Committee INTERNATIONAL UNION OF RADIO SCIENCE PROGRAM AND ABSTRACTS National Radio Science Meeting 5-8 January 1993 Sponsored by USNC/URSI in cooperation with IEEE groups and societies: Antennas and Propagation Circuits and Systems Communications Electromagnetic Compatibility Geoscience Electronics Information Theory -

UC Irvine UC Irvine Previously Published Works

UC Irvine UC Irvine Previously Published Works Title Astrophysics in 2006 Permalink https://escholarship.org/uc/item/5760h9v8 Journal Space Science Reviews, 132(1) ISSN 0038-6308 Authors Trimble, V Aschwanden, MJ Hansen, CJ Publication Date 2007-09-01 DOI 10.1007/s11214-007-9224-0 License https://creativecommons.org/licenses/by/4.0/ 4.0 Peer reviewed eScholarship.org Powered by the California Digital Library University of California Space Sci Rev (2007) 132: 1–182 DOI 10.1007/s11214-007-9224-0 Astrophysics in 2006 Virginia Trimble · Markus J. Aschwanden · Carl J. Hansen Received: 11 May 2007 / Accepted: 24 May 2007 / Published online: 23 October 2007 © Springer Science+Business Media B.V. 2007 Abstract The fastest pulsar and the slowest nova; the oldest galaxies and the youngest stars; the weirdest life forms and the commonest dwarfs; the highest energy particles and the lowest energy photons. These were some of the extremes of Astrophysics 2006. We attempt also to bring you updates on things of which there is currently only one (habitable planets, the Sun, and the Universe) and others of which there are always many, like meteors and molecules, black holes and binaries. Keywords Cosmology: general · Galaxies: general · ISM: general · Stars: general · Sun: general · Planets and satellites: general · Astrobiology · Star clusters · Binary stars · Clusters of galaxies · Gamma-ray bursts · Milky Way · Earth · Active galaxies · Supernovae 1 Introduction Astrophysics in 2006 modifies a long tradition by moving to a new journal, which you hold in your (real or virtual) hands. The fifteen previous articles in the series are referenced occasionally as Ap91 to Ap05 below and appeared in volumes 104–118 of Publications of V. -

March 21–25, 2016

FORTY-SEVENTH LUNAR AND PLANETARY SCIENCE CONFERENCE PROGRAM OF TECHNICAL SESSIONS MARCH 21–25, 2016 The Woodlands Waterway Marriott Hotel and Convention Center The Woodlands, Texas INSTITUTIONAL SUPPORT Universities Space Research Association Lunar and Planetary Institute National Aeronautics and Space Administration CONFERENCE CO-CHAIRS Stephen Mackwell, Lunar and Planetary Institute Eileen Stansbery, NASA Johnson Space Center PROGRAM COMMITTEE CHAIRS David Draper, NASA Johnson Space Center Walter Kiefer, Lunar and Planetary Institute PROGRAM COMMITTEE P. Doug Archer, NASA Johnson Space Center Nicolas LeCorvec, Lunar and Planetary Institute Katherine Bermingham, University of Maryland Yo Matsubara, Smithsonian Institute Janice Bishop, SETI and NASA Ames Research Center Francis McCubbin, NASA Johnson Space Center Jeremy Boyce, University of California, Los Angeles Andrew Needham, Carnegie Institution of Washington Lisa Danielson, NASA Johnson Space Center Lan-Anh Nguyen, NASA Johnson Space Center Deepak Dhingra, University of Idaho Paul Niles, NASA Johnson Space Center Stephen Elardo, Carnegie Institution of Washington Dorothy Oehler, NASA Johnson Space Center Marc Fries, NASA Johnson Space Center D. Alex Patthoff, Jet Propulsion Laboratory Cyrena Goodrich, Lunar and Planetary Institute Elizabeth Rampe, Aerodyne Industries, Jacobs JETS at John Gruener, NASA Johnson Space Center NASA Johnson Space Center Justin Hagerty, U.S. Geological Survey Carol Raymond, Jet Propulsion Laboratory Lindsay Hays, Jet Propulsion Laboratory Paul Schenk, -

Digitalcommons@UTEP

University of Texas at El Paso DigitalCommons@UTEP Open Access Theses & Dissertations 2017-01-01 Her Alessandra Narvaez-Varela University of Texas at El Paso, [email protected] Follow this and additional works at: https://digitalcommons.utep.edu/open_etd Part of the Creative Writing Commons, and the Women's Studies Commons Recommended Citation Narvaez-Varela, Alessandra, "Her" (2017). Open Access Theses & Dissertations. 509. https://digitalcommons.utep.edu/open_etd/509 This is brought to you for free and open access by DigitalCommons@UTEP. It has been accepted for inclusion in Open Access Theses & Dissertations by an authorized administrator of DigitalCommons@UTEP. For more information, please contact [email protected]. HER ALESSANDRA NARVÁEZ-VARELA Master's Program in Creative Writing APPROVED: Sasha Pimentel, Chair Andrea Cote-Botero, Ph.D. David Ruiter, Ph.D. Charles Ambler, Ph.D. Dean of the Graduate School . Copyright © by Alessandra Narváez-Varela 2017 For my mother—Amanda Socorro Varela Aragón— the original Her HER by ALESSANDRA NARVÁEZ-VARELA, B.S in Biology, B.A. in Creative Writing THESIS Presented to the Faculty of the Graduate School of The University of Texas at El Paso in Partial Fulfillment of the Requirements for the Degree of MASTER OF FINE ARTS Department of Creative Writing THE UNIVERSITY OF TEXAS AT EL PASO December 2017 Acknowledgements Thank you to the editors of Duende for publishing a previous version of “The Cosmic Millimeter,” and to the Department of Hispanic Studies at the University of Houston for the forthcoming publication of a chapbook featuring the following poems: “Curves,” “Savage Nerd Cunt,” “Beauty,” “Bride,” “An Education,” “Daddy, Look at Me,” and “Cut.” Thank you to Casa Cultural de las Américas for sponsoring the reading that made this chapbook possible. -

Action Figures

Action Figures Star Wars (Kenner) (1977-1983) Power of the Force Complete Figures Imperial Dignitary Masters of the Universe (1982-1988) Wave 1 Complete Figures Battle Ram (Vehicle) Castle Grayskull (Playset) Accessories Man-At-Arms - Mini-comic (The Vengeance of Skeletor), Mace, Leg Armor Zodac - Mini-comic (The Vengeance of Skeletor or Slave City!) Beast Man - Whip, Spiked Arm Band, Spiked Arm Band Mer-Man - Mini-comic Teela - Snake Staff Wave 2 Accessories Trap Jaw - Mini-comic (The Menace of Trap Jaw!), Hook Arm, Rifle Arm Wave 3 Complete Figures Battle Armor He-Man Mekaneck (figure and accessories detailed below) Accessories Battle Armor Skeletor - Ram Staff Webstor - Grapple Hook with string Fisto - Sword Orko - Mini-comic (Slave City!), Magic Trick Whiplash - Mini-comic (The Secret Liquid of Life) Weapons Pack - Yellow Beast Man Chest Armor, Yellow Beast Man Arm Band, Gray Grayskull Shield, Gray Grayskull Sword Mekaneck - Club, Armor Wave 4 Complete Figures Dragon Blaster Skeletor (figure and accessories detailed below) Moss Man (figure and accessories detailed below) Battle Bones (Creature) Land Shark (Vehicle) Accessories Dragon Blaster Skeletor - Sword, Chest Armor, Chain, Padlock Hordak - Mini-comic (The Ruthless Leader's Revenge) Modulok - 1 Tail, Left Claw Leg Moss Man - Brown Mace Roboto - Mini-comic (The Battle for Roboto) Spikor - Mini-comic (Spikor Strikes) Fright Zone - Tree, Puppet, Door Thunder Punch He-Man - Shield Wave 5 Complete Figures Flying Fists He-Man Horde Trooper (figure and accessories detailed below) Snout -

Apollo Over the Moon: A View from Orbit (NASA SP-362)

APOLLO OVER THE MOON: A VIEW FROM ORBIT (NASA SP-362) Chapter 1 - Introduction Harold Masursky, Farouk El-Baz, Frederick J. Doyle, and Leon J. Kosofsky Objectives Photography of the lunar surface was considered an important goal of the Apollo program by the National Aeronautics and Space Administration. The important objectives of Apollo photography were (1) to gather data pertaining to the topography and specific landmarks along the approach paths to the early Apollo landing sites; (2) to obtain high-resolution photographs of the landing sites and surrounding areas to plan lunar surface exploration, and to provide a basis for extrapolating the concentrated observations at the landing sites to nearby areas; and (3) to obtain photographs suitable for regional studies of the lunar geologic environment and the processes that act upon it. Through study of the photographs and all other arrays of information gathered by the Apollo and earlier lunar programs, we may develop an understanding of the evolution of the lunar crust. In this introductory chapter we describe how the Apollo photographic systems were selected and used; how the photographic mission plans were formulated and conducted; how part of the great mass of data is being analyzed and published; and, finally, we describe some of the scientific results. Historically most lunar atlases have used photointerpretive techniques to discuss the possible origins of the Moon's crust and its surface features. The ideas presented in this volume also rely on photointerpretation. However, many ideas are substantiated or expanded by information obtained from the huge arrays of supporting data gathered by Earth-based and orbital sensors, from experiments deployed on the lunar surface, and from studies made of the returned samples. -

Using Eletrodynamic Tethers to Perform Station

USING ELETRODYNAMIC TETHERS TO PERFORM STATION-KEEPING MANEUVERS IN LEO SATELLITES Thais Carneiro Oliveira(1), and Antonio Fernando Bertachini de Almeida Prado(2) (1)(2) National Institute for Space Research (INPE), Av. dos Astronautas 1758 São José dos Campos – SP – Brazil ZIP code 12227-010, +55 12 32086000, [email protected] and [email protected] Abstract: This paper analyses the concept of using an electrodynamic tether to provide propulsion to a space system with an electric power supply and no fuel consumption. The present work is focused on orbit maintenance and on re-boost maneuvers for tethered satellite systems. The analyses of the results will be performed with the help of a practical tool called “Perturbation Integrals” and an orbit integrator that can include many external perturbations, like atmospheric drag, solar radiation pressure and luni-solar perturbation. Keywords: Electromagnetic Tether, Station-Keeping Maneuver, Orbital Maneuver, Tether Systems, Disturbing Forces. 1. Introduction Space tethers are a promising and innovative field of study, and many articles, technical reports, books and even missions have used this concept in recent decades. An overview of the space tether flight-test missions includes the Gemini tether experiments, the OEDIPUS flights, the TSS-1 experiments, the SEDS flights, and the PMG, TiPS and ATEx missions, etc. [1-6]. This paper analyses the potential of using an electrodynamic tether to provide propulsion to a space system with an electric power supply and no fuel consumption. The present work is focused on orbit maintenance and re-boost maneuvers for tethered satellite systems (TSS). This type of system consists of two or more satellites orbiting around a planet linked by a cable or a tether [7]. -

arXiv:2003.07985v1

Tether Capture of spacecraft at Neptune. J. R. Sanmartín (a,b), J. Peláez (b,*). (a) Real Academia de Ingeniería of Spain; (b) Universidad Politécnica de Madrid, Pz. C.Cisneros 3, Madrid 28040, Spain. Abstract: Past planetary missions have been broad and detailed for Gas Giants, compared to flyby missions for Ice Giants. Presently, a mission to Neptune using electrodynamic tethers is under consideration due to the ability of tethers to provide free propulsion and power for orbital insertion as well as additional exploratory maneuvering, providing more mission capability than a standard orbiter mission. Tether operation depends on plasma density and magnetic field B, though tethers can deal with ill-defined density profiles, with the anodic segment self-adjusting to accommodate densities. Planetary magnetic fields are due to currents in some small volume inside the planet, magnetic-moment vector, and typically a dipole law approximation, which describes the field outside. When compared with Saturn and Jupiter, the Neptunian magnetic structure is significantly more complex: the dipole is located below the equatorial plane, is highly offset from the planet center, and at large tilt with its rotation axis. Lorentz-drag work decreases quickly with distance, thus requiring spacecraft periapsis at capture close to the planet and allowing the large offset to make capture efficiency (spacecraft-to-tether mass ratio) well above a no-offset case. The S/C might optimally reach periapsis when crossing the meridian plane of the dipole, with the S/C facing it; this convenient synchronism is eased by Neptune rotating little during capture. Calculations yield maximum efficiency of approximately 12, whereas a 10° meridian error would reduce efficiency by about 6%. -

A Message from the Director-General

A message from the Director-General [Contents: Message from the Director-General; Last year at CERN; Radiation safety at CERN; Johan Baarli; CERN theoretical conference] I am very glad to be able to contribute this introduction to the first CERN COURIER of 1962. We have been without our journal for quite a long time, but we can now look forward to seeing it regularly again once a month. CERN COURIER is an important publication of ours, because not only does it serve to link together all members of the Organization, and their families, but it also has a strong part to play in showing CERN to the member States and to other parts of the world. This institution is remarkable in two ways: It is a place where the most fantastic experiments are carried out. It is a place where international co-operation actually exists. These two statements call for some explanation: It is difficult to appreciate the full significance of an activity if all one's energy is devoted to it. The everyday work accomplished at CERN hides its real importance, the tremendous impact of what is going on here. What I am tempted to call the 'romantic glory' of our work is overlooked. But you must never forget that in the PS, at the moment when the beam hits a target, at the moment when matter undergoes a transfer of energy, you have created conditions which probably do not exist anywhere else in the universe, not even in the explosions of super-nova stars. What an opportunity to penetrate the innermost structure of matter -

Optimal Control of Electrodynamic Tethers

Air Force Institute of Technology AFIT Scholar Theses and Dissertations Student Graduate Works 6-1-2008 Optimal Control of Electrodynamic Tethers Robert E. Stevens Follow this and additional works at: https://scholar.afit.edu/etd Part of the Aerospace Engineering Commons Recommended Citation Stevens, Robert E., "Optimal Control of Electrodynamic Tethers" (2008). Theses and Dissertations. 2656. https://scholar.afit.edu/etd/2656 This Dissertation is brought to you for free and open access by the Student Graduate Works at AFIT Scholar. It has been accepted for inclusion in Theses and Dissertations by an authorized administrator of AFIT Scholar. For more information, please contact [email protected]. OPTIMAL CONTROL OF ELECTRODYNAMIC TETHER SATELLITES DISSERTATION Robert E. Stevens, Commander, USN AFIT/DS/ENY/08-13 DEPARTMENT OF THE AIR FORCE AIR UNIVERSITY AIR FORCE INSTITUTE OF TECHNOLOGY Wright-Patterson Air Force Base, Ohio APPROVED FOR PUBLIC RELEASE; DISTRIBUTION UNLIMITED The views expressed in this dissertation are those of the author and do not reflect the official policy or position of the United States Air Force, Department of Defense, or the United States Government. AFIT/DS/ENY/08-13 OPTIMAL CONTROL OF ELECTRODYNAMIC TETHER SATELLITES DISSERTATION Presented to the Faculty Graduate School of Engineering and Management Air Force Institute of Technology Air University Air Education and Training Command in Partial Fulfillment of the Requirements for the Degree of Doctor of Philosophy Robert E. Stevens, BS, MS Commander, USN June 2008 APPROVED FOR PUBLIC RELEASE; DISTRIBUTION UNLIMITED AFIT/DS/ENY/08-13 OPTIMAL CONTROL OF ELECTRODYNAMIC TETHER SATELLITES Robert E. Stevens, BS, MS Commander, USN Approved: Date ____________________________________ William E. -

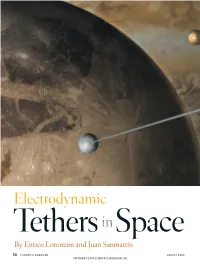

Electrodynamic Tethers in Space

Electrodynamic Tethers in Space By Enrico Lorenzini and Juan Sanmartín SCIENTIFIC AMERICAN, AUGUST 2004 COPYRIGHT 2004 SCIENTIFIC AMERICAN, INC. ARTIST’S CONCEPTION depicts how a tether system might operate on an exploratory mission to Jupiter and its moons. As the apparatus and its attached research instruments glide through space between Europa and Callisto, the tether would harvest power from its interaction with the vast magnetic field generated by Jupiter, which looms in the background. By manipulating current flow along the kilometers-long tether, mission controllers could change the tether system’s altitude and direction of flight. By exploiting fundamental physical laws, tethers may provide low-cost electrical power, drag, thrust, and artificial gravity for spaceflight. There are no filling stations in space. Every spacecraft on every mission has to carry all the energy sources required to get its job done, typically in the form of chemical propellants, photovoltaic arrays or nuclear reactors. The sole alternative, delivery service, can be formidably expensive. The International Space Station, for example, will need an estimated 77 metric tons of booster propellant over its anticipated 10-year life span just to keep itself from gradually falling out of orbit. Tethers are systems in which a flexible cable connects two masses. When the cable is electrically conductive, the ensemble becomes an electrodynamic tether, or EDT. Unlike conventional arrangements, in which chemical or electrical thrusters exchange momentum between the spacecraft and propellant, an EDT exchanges momentum with the rotating planet through the mediation of the magnetic field [see illustration on opposite