Performance Evaluation of Commodity Systems for Cluster Computing

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

HP Omnibook XE3 (GF)

HP Omnibook XE3 (GF) Corporate Evaluator’s Guide Notice Technology Code GF This guide contains information about only the Omnibook XE3, technology code GF. The technology code is shown on the serial-number label on the bottom of the computer, and it is also included in the BIOS version number. Information about models with other technology codes may be available in other guides. This manual and any examples contained herein are provided “as is” and are subject to change without notice. Hewlett-Packard Company makes no warranty of any kind with regard to this manual, including, but not limited to, the implied warranties of merchantability and fitness for a particular purpose. Hewlett-Packard Co. shall not be liable for any errors or for incidental or consequential damages in connection with the furnishing, performance, or use of this manual or the examples herein. Consumer transactions in Australia and the United Kingdom: The above disclaimers and limitations shall not apply to Consumer transactions in Australia and the United Kingdom and shall not affect the statutory rights of Consumers. © Copyright Hewlett-Packard Company 2000–2001. All rights reserved. Reproduction, adaptation, or translation of this manual is prohibited without prior written permission of Hewlett-Packard Company, except as allowed under the copyright laws. The programs that control this product are copyrighted and all rights are reserved. Reproduction, adaptation, or translation of those programs without prior written permission of Hewlett-Packard Co. is also prohibited. Portions of the programs that control this product may also be copyrighted by Microsoft Corporation, Phoenix Technologies, Ltd., ESS, S3 Incorporated, Accton, Trident Microsystems Incorporated, Acer Laboratories Incorporated, and Adobe Systems Incorporated. -

DVCS Or a New Way to Use Version Control Systems for Freebsd

DVCS or a new way to use Version Control Systems for FreeBSD. Ollivier ROBERT <[email protected]>, BSDCan 2006, Ottawa, Canada, May 12-13th, 2006. Agenda: 1 Brief history of VCS; 2 FreeBSD context & figures; 3 Is Arch/baz suited for FreeBSD?; 4 Mercurial to the rescue; 5 New processes & policies needed; 6 Conclusions. The ancestors: SCCS, RCS. File-oriented. Use a subdirectory to store deltas and metadata. Use lock-based architecture. Support shared developments through NFS (fragile). SCCS is proprietary (System V), RCS is Open Source; an SCCS clone exists: CSSC. You can have a central repository with symlinks (RCS). CVS, the de facto VCS for the free world. Initially written as shell wrappers over RCS, then rewritten in C. Centralised server. Easy UI. Use sandboxes to avoid locking. Simple 3-way merges. Can be replicated through CVSup or even rsync. Extensive documentation (papers, websites, books). Free software and used everywhere (SourceForge for example). CVS annoyances and flaws: BUT..

Zerohack Zer0pwn Youranonnews Yevgeniy Anikin Yes Men

Zerohack Zer0Pwn YourAnonNews Yevgeniy Anikin Yes Men YamaTough Xtreme x-Leader xenu xen0nymous www.oem.com.mx www.nytimes.com/pages/world/asia/index.html www.informador.com.mx www.futuregov.asia www.cronica.com.mx www.asiapacificsecuritymagazine.com Worm Wolfy Withdrawal* WillyFoReal Wikileaks IRC 88.80.16.13/9999 IRC Channel WikiLeaks WiiSpellWhy whitekidney Wells Fargo weed WallRoad w0rmware Vulnerability Vladislav Khorokhorin Visa Inc. Virus Virgin Islands "Viewpointe Archive Services, LLC" Versability Verizon Venezuela Vegas Vatican City USB US Trust US Bankcorp Uruguay Uran0n unusedcrayon United Kingdom UnicormCr3w unfittoprint unelected.org UndisclosedAnon Ukraine UGNazi ua_musti_1905 U.S. Bankcorp TYLER Turkey trosec113 Trojan Horse Trojan Trivette TriCk Tribalzer0 Transnistria transaction Traitor traffic court Tradecraft Trade Secrets "Total System Services, Inc." Topiary Top Secret Tom Stracener TibitXimer Thumb Drive Thomson Reuters TheWikiBoat thepeoplescause the_infecti0n The Unknowns The UnderTaker The Syrian electronic army The Jokerhack Thailand ThaCosmo th3j35t3r testeux1 TEST Telecomix TehWongZ Teddy Bigglesworth TeaMp0isoN TeamHav0k Team Ghost Shell Team Digi7al tdl4 taxes TARP tango down Tampa Tammy Shapiro Taiwan Tabu T0x1c t0wN T.A.R.P. Syrian Electronic Army syndiv Symantec Corporation Switzerland Swingers Club SWIFT Sweden Swan SwaggSec Swagg Security "SunGard Data Systems, Inc." Stuxnet Stringer Streamroller Stole* Sterlok SteelAnne st0rm SQLi Spyware Spying Spydevilz Spy Camera Sposed Spook Spoofing Splendide -

Everything You Need to Know About Openjdk's Move to Git and Github

Everything you need to know about OpenJDK’s move to Git and GitHub. The move from Mercurial to Git provided an opportunity to consolidate the source code repositories. by Ian Darwin, May 14, 2021. Contents: Blame or thank BitKeeper; Why not Mercurial?; Why Git?; Why GitHub?; Why the move, and why now?; Getting the source code and building the OpenJDK; Conclusion; Dig deeper. Have you ever built your own Java Development Kit from source? Most end users of the JDK will not need to build their own JDK from the Oracle source code. I’ve needed to do that only a few times when I was running on the OpenBSD UNIX-like system, which is not one of the three supported platforms. Sure, you might want to build your own JDK to try out a new feature that you think should be added to Java. You might choose to build from source to be sure you are running a more trustworthy binary. Having the complete source code readily available, and now in a more commonly used download format, means it is easier than ever to build your own JDK. Yes, it’s a better-documented, easily configured process than in the past. But it’s still a bit confusing. The source code for the OpenJDK recently moved from the Mercurial version control system (VCS) to the Git VCS and the GitHub repository system, and that’s probably a good thing.

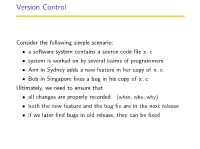

Version Control

Version Control Consider the following simple scenario: • a software system contains a source code file x.c • system is worked on by several teams of programmers • Ann in Sydney adds a new feature in her copy of x.c • Bob in Singapore fixes a bug in his copy of x.c Ultimately, we need to ensure that • all changes are properly recorded (when, who, why) • both the new feature and the bug fix are in the next release • if we later find bugs in old release, they can be fixed Version Control Systems A version control system allows software developers to: • work simultaneously and cooperatively on a system • document when, who, & why changes made • discuss and approve changes • recreate old versions of a system when needed • multiple versions of system can be distributed, tested, merged This allows change to be managed/controlled in a systematic way. VCSs also try to minimise resource use in maintaining multiple versions. Unix VCS - Generation 1 (Unix) 1970’s ... SCCS (source code control system) • first version control system • centralized VCS - single central repository • introduced idea of multiple versions via deltas • single user model: lock - modify - unlock • only one user working on a file at a time 1980’s ... RCS (revision control system) • similar functionality to SCCS (essentially a clean open-source re-write of SCCS) • centralized VCS - single central repository • single user model: lock - modify - unlock • only one user working on a file at a time • still available and in use Unix VCS - Generation 2 (Unix) 1990 ... CVS (concurrent version system) • centralized VCS - single central repository • locked check-out replaced by copy-modify-merge model • users can work simultaneously and later merge changes • allows remote development essential for open source projects • web-accessible interface promoted wide-dist projects • poor handling of file metadata, renames, links Early 2000’s ..

Was Mr. Hewlett Right? Mergers, Advertising and the PC Industry

Was Mr. Hewlett Right? Mergers, Advertising and the PC Industry Michelle Sovinsky Goeree 1 Preliminary, please do not cite. March, 2005 (First Version June 2002) Abstract In markets characterized by rapid change, such as the personal computer industry, consumers may not know every available product. Failing to incorporate limited information and the strategic role of informative advertising into merger analysis may yield misleading results regarding industry competitiveness. This is of particular importance when assessing the welfare impact of mergers. I use the parameters from a model of limited consumer information to (1) estimate the effect on profits and consumer welfare from mergers and (2) to examine the role of advertising as it relates to market power and the implications for antitrust policy. The methodology used to evaluate the impact of mergers follows Nevo (2000), but incorporates limited information and strategic choices of advertising. I simulate post-merger price and advertising equilibria for the Compaq-HP merger and for a hypothetical merger. I decompose the change in prices into changes due to increased concentration and changes due to the influence of advertising. The results indicate advertising can be used to increase market power when consumers have limited information, which suggests revisions to the current model used to assess the impact of mergers in antitrust cases. JEL Classification: L15, D12, M37, D83 Keywords: merger analysis, informative advertising, discrete-choice models, product differentiation, structural estimation 1 This paper is based on various chapters from my 2002 dissertation. Special thanks to my dissertation advisors, Steven Stern and Simon Anderson, for their guidance.

Chapter 1 - Describing Open Source & Free Software Communities

PROTECTING THE VIRTUAL COMMONS Self-organizing open source and free software communities and innovative intellectual property regimes Ruben van Wendel de Joode Hans de Bruijn Michel van Eeten Delft September 2002 Draft version (official version to be published by Asser Press in 2003) For questions, suggestions or comments please contact: Ruben van Wendel de Joode at [email protected] © 2002 Ruben van Wendel de Joode, Hans de Bruijn and Michel van Eeten Table of contents: ACKNOWLEDGEMENTS; INTRODUCTION; Questions guiding the research; Structure of the report; CHAPTER 1 - DESCRIBING OPEN SOURCE & FREE SOFTWARE COMMUNITIES; 1.1 - INTRODUCTION; 1.2 - POPULARITY OF OPEN SOURCE AND FREE SOFTWARE; 1.3 - HISTORICAL DEVELOPMENT OF OPENNESS & FREEDOM; The origin of the Internet; Richard

Linux Hardware Compatibility HOWTO

Linux Hardware Compatibility HOWTO Steven Pritchard Southern Illinois Linux Users Group / K&S Pritchard Enterprises, Inc. <[email protected]> 3.2.4 Copyright © 2001-2007 Steven Pritchard Copyright © 1997-1999 Patrick Reijnen 2007-05-22 This document attempts to list most of the hardware known to be either supported or unsupported under Linux. Copyright This HOWTO is free documentation; you can redistribute it and/or modify it under the terms of the GNU General Public License as published by the Free Software Foundation; either version 2 of the license, or (at your option) any later version. Linux Hardware Compatibility HOWTO Table of Contents: 1. Introduction; 1.1. Notes on binary-only drivers; 1.2. Notes on proprietary drivers; 1.3. System architectures; 1.4. Related sources of information; 1.5. Known problems with this document; 1.6. New versions of this document

Designing a Fast Index Format for Git

Free University of Bolzano - Bozen Bachelor Thesis Designing a Fast Index Format for Git Author: Thomas Gummerer Supervisor: Dr. Johann Gamper A thesis submitted in fulfillment of the requirements for the degree of Bachelor in Computer Science in July 2013 Contents: List of Figures; Abstract; 1 Introduction; 1.1 Motivation; 1.2 Problem Description and Objectives; 1.3 Organization of the Thesis; 2 Related Work; 2.1 Version Control Systems; 2.2 Types of Version Control Systems; 2.3 Git; 3 A New Index for Git; 3.1 The Git Index File; 3.2 Index v2; 3.3 The New Index File Format v5; 4 Implementation; 4.1 Convert Index; 4.2 Read Index; 4.3 Internal Implementation; 4.4 New In-memory Format; 4.5 Index Reading API; 4.6 Writing the New Index Format; 5 Discussion; 5.1 Alternative Solutions; 5.1.1 B-tree Format; 5.1.2 Append-only Data Structure; 5.1.3 Database Format; 5.1.4 Padded Structure; 5.2 Discussion; 6

SCO Openserver Handbook Changing the Default Editor

SCO OpenServer Handbook How to configure and start using an SCO OpenServer system 1983-2003 Caldera International, Inc. All rights reserved. This publication is protected under copyright laws and international treaties. 1976-2001 The Santa Cruz Operation, Inc.; 1989-1994 Acer Incorporated; 1989-1994 Acer America Corporation; 1990-1994 Adaptec, Inc.; 1993 Advanced Micro Devices, Inc.; 1990 Altos Computer Systems; 1992-1994 American Power Conversion, Inc.; 1988 Archive Corporation; 1990 ATI Technologies, Inc.; 1976-1992 AT&T; 1992-1994 AT&T Global Information Solutions Company; 1993 Berkeley Network Software Consortium; 1985-1986 Bigelow & Holmes; 1988-1991 Carnegie Mellon University; 1989-1990 Cipher Data Products, Inc.; 1985-1992 Compaq Computer Corporation; 1987-1994 Computer Associates, Inc.; 1986-1987 Convergent Technologies, Inc.; 1990-1993 Cornell University; 1985-1994 Corollary, Inc.; 1990-1994 Distributed Processing Technology; 1991 D.L.S. Associates; 1990 Free Software Foundation, Inc.; 1989-1991 Future Domain Corporation; 1994 Isogon Corporation; 1991 Hewlett-Packard Company; 1994 IBM Corporation; 1990-1993 Intel Corporation; 1989 Irwin Magnetic Systems, Inc.; 1988-1991 JSB Computer Systems Ltd.; 1989-1994 Dirk Koeppen EDV- Beratungs-GmbH; 1989-1991 Massachusetts Institute of Technology; 1985-1992 Metagraphics Software Corporation; 1980-1994 Microsoft Corporation; 1984-1989 Mouse Systems Corporation; 1989 Multi-Tech Systems, Inc.; 1991 National Semiconductor Corporation; 1990 NEC Technologies, -

HP Omnibook 6000

HP OmniBook 6000. © 2000. SystemSoft Corp., Phoenix Technologies, Ltd., ATI Technologies Inc., Adobe Systems Incorporated. Microsoft, MS-DOS, Windows, Pentium, Intel Inside, Celeron, SpeedStep, TrackPoint, Adobe, Acrobat. Hewlett-Packard Company Mobile Computing Division 19310 Pruneridge Ave. Cupertino, CA 95014. Recovery CD. Microsoft (EULA). Product Recovery CD-ROM. DFARS 252.227-7013. Hewlett-Packard Company, 3000 Hanover Street, Palo Alto, CA 94304 U.S.A.

Iron File Systems

IRON FILE SYSTEMS by Vijayan Prabhakaran B.E. Computer Sciences (Regional Engineering College, Trichy, India) 2000 M.S. Computer Sciences (University of Wisconsin-Madison) 2003 A dissertation submitted in partial fulfillment of the requirements for the degree of Doctor of Philosophy in Computer Sciences University of Wisconsin-Madison 2006 Committee in charge: Andrea C. Arpaci-Dusseau (Co-chair) Remzi H. Arpaci-Dusseau (Co-chair) David J. DeWitt Mary K. Vernon Mikko H. Lipasti Abstract IRON FILE SYSTEMS Vijayan Prabhakaran Disk drives are widely used as a primary medium for storing information. While commodity file systems trust disks to either work or fail completely, modern disks exhibit complex failure modes such as latent sector faults and block corruptions, where only portions of a disk fail. In this thesis, we focus on understanding the failure policies of file systems and improving their robustness to disk failures. We suggest a new fail-partial failure model for disks, which incorporates realistic localized faults such as latent sector faults and block corruption. We then develop and apply a novel semantic failure analysis technique, which uses file system block type knowledge and transactional semantics, to inject interesting faults and investigate how commodity file systems react to a range of more realistic disk failures. We apply our technique to five important journaling file systems: Linux ext3, ReiserFS, JFS, XFS, and Windows NTFS. We classify their failure policies in a new taxonomy that measures their Internal RObustNess (IRON), which includes both failure detection and recovery techniques. Our analysis results show that commodity file systems store little or no redundant information, and contain failure policies that are often inconsistent, sometimes buggy, and generally inadequate in their ability to recover from partial disk failures.