Orca.Cf.Ac.Uk/12297

Total pages: 16

File type: PDF, Size: 1020 KB


Recommended publications

-

Prospectus Cardiff.Ac.Uk

2022 Cardiff University Undergraduate Prospectus. cardiff.ac.uk. Welcome from a leading university. Croeso (Croy-so - Welcome). We are proud to be Wales’ only Russell Group University. “Cardiff has a good reputation. I remember being amazed by the facilities here and excited by the amount of choice you are given when it came to selecting modules.” Phoebe, Biomedical Sciences, 2020. An international university, with students from more than 120 countries. Driven by creativity and curiosity, we strive to fulfil our social, cultural and economic obligations to Cardiff, Wales and the world. Top 5 UK University for research quality. Source: Research Excellence Framework, see page 18. Welcome. Hello! I’m pleased to introduce you to Cardiff University. Choosing the right university is a major decision and it’s important that you choose the one that is right for you. Our prospectus describes what it is like to be an undergraduate at Cardiff University in the words of the people who know it best - our students, past and present, and staff. However, a prospectus can only go so far, and the best way to gain an insight into life at Cardiff University is to visit us and experience it for yourself. Whatever your choice, we wish you every success with your studies. Professor Colin Riordan, President and Vice-Chancellor. 97% of our graduates were in employment and/or further study, due to start a new job or course, or doing other activities such as travelling, 15 months after the end of their course.* Source: Higher Education Statistics Agency, latest Graduate Outcomes Survey 2017/18, published by HESA in June 2020. Contents: Reasons to love Cardiff; A capital city; A leading university; Building a successful university; Students from around the world; Location – campus maps; Degree programmes by Academic School. -

The City of Cardiff Council, County Borough Councils of Bridgend, Caerphilly, Merthyr Tydfil, Rhondda Cynon Taf and the Vale of Glamorgan

THE CITY OF CARDIFF COUNCIL, COUNTY BOROUGH COUNCILS OF BRIDGEND, CAERPHILLY, MERTHYR TYDFIL, RHONDDA CYNON TAF AND THE VALE OF GLAMORGAN. AGENDA ITEM NO: 7. THE GLAMORGAN ARCHIVES JOINT COMMITTEE, 27 June 2014. REPORT FOR THE PERIOD 1 March – 31 May 2014. REPORT OF: THE GLAMORGAN ARCHIVIST. 1. PURPOSE OF REPORT: This report describes the work of Glamorgan Archives for the period 1 March to 31 May 2014. 2. BACKGROUND: As part of the agreed reporting process the Glamorgan Archivist updates the Joint Committee quarterly on the work and achievements of the service. 3. Members are asked to note the content of this report. 4. ISSUES. A. MANAGEMENT OF RESOURCES. 1. Staff: establishment. Maintain appropriate levels of staff: There has been no staff movement during the quarter. From April the Deputy Glamorgan Archivist reduced her hours to 30 a week. Review establishment: The manager-led regrading process has been followed for four staff positions in which responsibilities have increased since the original evaluation was completed. The posts are Administrative Officer, Senior Records Officer, Records Assistant and Preservation Assistant. All were in detriment following the single status assessment and comprise 7 members of staff. Applications have been submitted and results are awaited. Develop skill sharing programme: During the quarter 44 volunteers and work experience placements have contributed 1917 hours to the work of the Office. Of these 19 came from Cardiff, nine each from the Vale of Glamorgan and Bridgend, four from Rhondda Cynon Taf and three from outside our area: from Newport, Haverfordwest and Catalonia. In addition nine tours have been provided to prospective volunteers and two references were supplied to former volunteers. -

Heath Students Hit by Welsh NHS 'Crisis'

In this week’s issue: Stories from the Synod, NHS fees for international students revisited, morality in sport, INSRV issues and the continuation of Cagegate. gair rhydd freeword. Monday October 27th 2014 | Issue 1035. Heath students hit by Welsh NHS ‘crisis’. Students and staff fear exposing failings at University Hospital. EXCLUSIVE: Michael O’Connell-Davidson. Pictured: The University Hospital of Wales (Photographer: Gair Rhydd). As the National Health Service in Wales comes under intense political scrutiny, a significant number of medical students have told Gair Rhydd that they are unable to share their concerns about the service or the standard of teaching at the University Hospital for fear of any ramifications. [...] concerns over “put[ting] their neck on the line” – adding that staff would not want word of any problems with the service to “get out.” Those who did speak on the record either retracted their statements or asked for their names to be redacted from the final version of this article. This collective wariness amongst those in the academic health community has led to some suggesting that students on clinical degrees lack the same basic right to free speech that is afforded to those on other courses as a result of strict fitness to practice rules. Cardiff University itself also adopted [...] with problems to speak to their tutors and academic representatives as a matter of protocol. The University also declined to comment on whether recent media controversies had affected their ability to recruit students, offering no remark on whether or not they held concern -

Buildings for the Performing Arts: A Design and Development Guide

Buildings for the Performing Arts: A design and development guide. Second Edition. Ian Appleton. AMSTERDAM • BOSTON • HEIDELBERG • LONDON • NEW YORK • OXFORD • PARIS • SAN DIEGO • SAN FRANCISCO • SINGAPORE • SYDNEY • TOKYO. Architectural Press is an imprint of Elsevier. Architectural Press is an imprint of Elsevier Ltd, Linacre House, Jordan Hill, Oxford OX2 8DP; 30 Corporate Road, Burlington, MA 01803. First published 1996. Reprinted 1997. Second edition 2008. Copyright © 2008 Ian Appleton. Published by Elsevier Limited. All rights reserved. The right of Ian Appleton to be identified as the author of this work has been asserted in accordance with the Copyright, Designs and Patents Act 1988. No part of this publication may be reproduced, stored in a retrieval system or transmitted in any form or by any means electronic, mechanical, photocopying, recording or otherwise without the prior written permission of the publisher. Permission may be sought directly from Elsevier’s Science & Technology Rights Department in Oxford, UK: phone (+44) (0) 1865 843830; fax (+44) (0) 1865 853333; email: [email protected]. Alternatively you can submit your request online by visiting the Elsevier web site at http://elsevier.com/locate/permissions, and selecting Obtaining permission to use Elsevier material. Notice: No responsibility is assumed by the publisher for any injury and/or damage to persons or property as a matter of products liability, negligence or otherwise, or from any use or operation of any methods, products, instructions or ideas contained in the material herein. Because of rapid advances in the medical sciences, in particular, independent verification of diagnoses and drug dosages should be made. -

Listing Particulars Dated 15 February 2021

LISTING PARTICULARS DATED 15 FEBRUARY 2021 CARDIFF UNIVERSITY (incorporated by Royal Charter) £100,000,000 3.000 per cent. Bonds due 2055 (to be consolidated and form a single series with the £300,000,000 3.000 per cent. Bonds due 2055 issued on 8 February 2016) Issue price: 128.960 per cent. plus 72 days' accrued interest in respect of the period from (and including) 7 December 2020 to (but excluding) 17 February 2021 The issue price of the £100,000,000 3.000 per cent. Bonds due 2055 (the "Further Bonds") of Cardiff University (the "Issuer") is 128.960 per cent. of their principal amount (plus 72 days' accrued interest in respect of the period from (and including) 7 December 2020 (the "Interest Commencement Date") to (but excluding) 17 February 2021 (the "Issue Date")). The Further Bonds will be consolidated and form a single series with the £300,000,000 3.000 per cent. Bonds due 2055 issued on 8 February 2016 and described in a Prospectus dated 4 February 2016 (the "Original Bonds" and, together with the Further Bonds, the "Bonds") on or about the date which is 40 days after the Issue Date. Upon consolidation, the aggregate principal amount of the Bonds will total £400,000,000. Unless previously redeemed or purchased or cancelled, the Bonds will be redeemed at their principal amount on 7 December 2055. The Bonds are subject to redemption, in whole but not in part, at their principal amount at the option of the Issuer at any time in the event of certain changes affecting taxation in the United Kingdom. -

Location Guide Accessible from All Parts of Britain

Cardiff University Location Guide 2013. “One of the top teaching and research institutions in the UK. It has excellent facilities, often magnificent buildings, great social and sporting facilities, wrapped up in a vibrant, cultural city centre.” Sunday Times Good University Guide. Cardiff University is about the freedom to explore, to learn and to discover. As a major higher education provider for [...] experience we believe should be open to all, encouraging students from the most deprived areas to apply and succeed on Cardiff courses. When they arrive in Cardiff, our students benefit from a stimulating study environment, research-led teaching and interaction with academics working at the frontiers of knowledge in their field. Routes to Cardiff. By Road: Cardiff is served by the M4 and is easily accessible from all parts of Britain. From the south west, take the M5 and from the south of England, follow major A-roads to the M4. From Scotland, the north of England and the Midlands, travel via the M50 to the M4. Travelling east on the M4: Leave the motorway at Junction 32, follow the A470, sign-posted City Centre. [...] (£3.00 per person). See Map 2 for location. Car parking in the University car parks is extremely limited and a permit is required. However, there are several public car parks located close to the University, all of which are marked on Map 4. There is also pay and display car parking available on Park Place and within the civic centre (along College Road, City Hall Road, King Edward VII Avenue and Museum Avenue). This typically costs around £3.50 for two -

Reader/Chair in Architectural History and Theory Appointment Details Contents

Reader/Chair in Architectural History and Theory: Appointment details. Contents: Introduction; Cardiff University; About Cardiff; The Welsh School of Architecture; The Appointment; Essential/Desirable Criteria. Introduction: Cardiff is an ambitious university with a bold and strategic vision located in a beautiful and thriving capital city. Founded in 1883 by Royal Charter, Cardiff is among the collective of 24 research-intensive universities in the UK that make up the Russell Group and is among the top 200 universities worldwide according to the QS rankings. In the 2014 Research Excellence Framework (REF), the University was ranked 5th for quality of research across the UK. The Welsh School of Architecture is located within the Bute Building which forms part of the University’s historical core of faculty buildings in Cardiff’s renowned civic centre. The School currently has some 625 students and has strong relationships across related departments from Engineering to Geography, Planning and the Social Sciences. Our existing strengths include low carbon environments, sustainable design, people and the built environment, building technologies, urban regeneration, and architectural and urban history. Architecture is ranked 46th in the 2018 QS World Rankings and is consistently placed in the Top 5 schools of architecture in UK league tables compiled by the Times, Guardian and Complete University Guide. Cardiff University: Teaching excellence, informed by research strength, is also high on the University agenda, which aims to develop a vibrant community recruiting the brightest students from all strata of society with a particular focus on underrepresented groups. The University in numbers: 1. With over 28,000 students and 6,000 staff, -

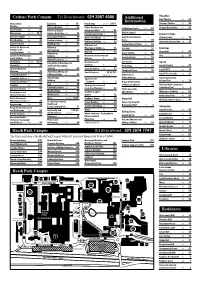

PROGRAMME Map 4 University and City Centre Cathays Park Campus (Map 4) Tel: 029 2087 4000

The Palaeontological Association 59th Annual Meeting, 14th–17th December 2015, Cardiff University and Amgueddfa Cymru – National Museum Wales. PROGRAMME.

Map 4: University and City Centre. Cathays Park Campus (Map 4). Tel: 029 2087 4000.

Building index (grid reference, map number): Aberconway Building C4 11; ARCCA C3 33; Architecture D3 45; Biosciences D4, D3 35 39; Business School C4, C4* 9 11*; Bute Building D3 45; Careers & Employability Service D4 31; Centre for Professional Legal Studies D4 28; Chaplaincy D4 26; Chemistry D3*, E3 39* 25; Computer Science & Informatics E3 58; CUBRIC D4 30; Day Care Services D3 41; Deri House E3 60; Development and Alumni Relations E3 60; Earth and Ocean Sciences D3 39; Eastgate House F3 59; Engineering E3 58; English, Communication and Philosophy D4 16; Estates F3 66; Eye Clinic D4 15; Finance F3 66; Glamorgan Building D3 49; Global Opportunity Centre D3 41; Graduate Centre D3 38; Hadyn Ellis Building D4 10; Health Centre D4 37; Healthcare Sciences F3 59; History, Archaeology and Religion D4 16; Human Resources F3 66; IT Services D3 41; International Office E3 60; John Percival Building D4 16; Journalism, Media and Cultural Studies D3 45; Julian Hodge Building C4 14; Law D4, D4* 24 28*; Lifelong Learning E3 42; Main Building D3 39; Mathematics E3 -

Map 5 labels: access route (2.5 miles) to M4 West J32 (Pontypridd, Merthyr Tydfil, Bridgend and Swansea); access route (3.5 miles) to M4 East J29 (Newport, Bristol and London); access routes to Heath Park Campus, Cathays Park Campus and Talybont; Currys; Tesco. Map key: University Buildings; Halls of Residence; Shopping Area.

Report of the RIBA Visiting Board to Cardiff University the Welsh School of Architecture

Royal Institute of British Architects Report of the RIBA visiting board to Cardiff University The Welsh School of Architecture Date of visiting board: 7 & 8 July 2016 Confirmed by RIBA Education Committee: 7 December 2016 1 Details of institution hosting course/s (report part A) Cardiff University Bute Building King Edward VII Avenue Cardiff CF10 3NB 2 Head of School Professor Chris Tweed 3 Courses offered for validation Part 1 BSc (Hons) in Architectural Studies Part 2 MArch Part 3 Diploma/MA in Architecture: Professional Studies 4 Course leaders Dr Andrew Roberts, Director of Learning and Teaching Ms Juliet Odgers, BSc (Hons) Architecture Scheme Convenor Dr Juliet Davis, MArch Convenor Professor Sarah Lupton Part 3 5 Awarding body Cardiff University 6 The visiting board David Howarth – Chair Pam Cole – Vice Chair Nick Hayhurst Alison Coutinho Jonathan Jones, regional representative Stephanie Beasley-Suffolk, Validation Manager – in attendance. 7 Procedures and criteria for the visit The visiting board was carried out under the RIBA procedures for validation and validation criteria for UK and international courses and examinations in architecture (published July 2011, and effective from September 2011); this document is available at www.architecture.com. 8 Proposals of the visiting board At its meeting on 7 December 2016 the RIBA Education Committee confirmed unconditional revalidation of: Part 1 BSc (Hons) Architectural Studies Part 2 MArch Part 3 Diploma/MA in Architecture: Professional Studies The next full visiting board will take -

This Is an Open Access Document Downloaded from ORCA, Cardiff University's Institutional Repository

This is an Open Access document downloaded from ORCA, Cardiff University's institutional repository: http://orca.cf.ac.uk/65921/ This is the author’s version of a work that was submitted to / accepted for publication. Citation for final published version: Davey, Elaine and Thomas, Alun Huw 2014. 'Chief creator of Modern Wales'. The neglected legacy of Percy Thomas. North American Journal of Welsh Studies 9, pp. 54-70. Please note: Changes made as a result of publishing processes such as copy-editing, formatting and page numbers may not be reflected in this version. For the definitive version of this publication, please refer to the published source. You are advised to consult the publisher’s version if you wish to cite this paper. This version is being made available in accordance with publisher policies. See http://orca.cf.ac.uk/policies.html for usage policies. Copyright and moral rights for publications made available in ORCA are retained by the copyright holders. ‘CHIEF CREATOR OF MODERN WALES’: THE NEGLECTED LEGACY OF PERCY THOMAS. Elaine Davey and Huw Thomas, School of Planning and Geography, Cardiff University. Abstract: The architecture of Percy Thomas, and the practice he founded (Percy Thomas Partnership), are neglected aspects of the development of twentieth century Wales. Twice president of the Royal Institute of British Architects, and awarded its Gold Medal, the architect’s work remains largely undocumented and unanalysed. This paper begins to address that deficiency. It argues that by virtue of its breadth and nature the practice’s work both responded to and helped shape perceptions of Wales as a modernising nation-state. -

The Neglected Legacy of Percy Thomas

‘CHIEF CREATOR OF MODERN WALES’: THE NEGLECTED LEGACY OF PERCY THOMAS. Elaine Davey and Huw Thomas, School of Planning and Geography, Cardiff University. Abstract: The architecture of Percy Thomas, and the practice he founded (Percy Thomas Partnership), are neglected aspects of the development of twentieth century Wales. Twice president of the Royal Institute of British Architects, and awarded its Gold Medal, the architect’s work remains largely undocumented and unanalysed. This paper begins to address that deficiency. It argues that by virtue of its breadth and nature the practice’s work both responded to and helped shape perceptions of Wales as a modernising nation-state. In so doing, it identified itself with, and benefited from, a political project which assumed hegemonic status in Wales in the inter-war period and beyond. Yet the practice was not simply responding to clients’ requirements, as is illustrated by the process of designing the administrative block for the National Folk Museum at St Fagan’s in the 1960s. This reveals the contribution the architects made to reinterpreting modernity, amidst competing conceptions of Welsh identity and futures for the nation. Introduction: It reads like a tricky question in a Welsh-themed edition of Trivial Pursuits: ‘which Welsh architect was awarded the gold medal of the Royal Institute of British Architects (RIBA), knighted, described on his retirement as “the chief creator of modern Wales” and yet has not been the subject of any monographs or extended studies of his work?’ Percy Thomas (1883-1969) is that man, and this paper begins the process of redressing this neglect. -

Residences Libraries Additional Information Cathays Park

Cathays Park Campus. Tel Switchboard: 029 2087 4000.

University buildings (grid reference, map number): Aberconway Building C5 11; Architecture D3 45; Biosciences D4, D3 35 39; Business School C5 11; Bute Building D3 45; Careers Service C4 25; Centre for Advanced Studies in the Social Sciences D3 41; Centre for Professional Legal Studies D4 28; Chaplaincy C4, D4 10 26; Chemistry D3 39; City and Regional Planning D3 49; Computing Centre D3 41; Eye Clinic C3* 33*; Finance Division F3 66; Fitness & Squash Centre – Park Place D4 34; Glamorgan Building D3 49; Graduate Centre D3 38; Health Centre D4 37; History & Archaeology D4 16; Humanities Building D4 16; Human Resources F3 66; International Development and English Language Services D3 41; Journalism, Media and Cultural Studies D3 45; Psychology D4, D4* 24 30*; Public Relations and Communications D3 41; Purchasing Division F3 66; Queen’s Buildings E3 58; Redwood Building C3 33; Religious and Theological Studies D4 16; Registry F3 66; Research and Commercial Division F3 66; Residences and Catering B6 3; Security Centre D4 35; Social Sciences D4, E3, D3* 31 42 49*; University of Wales Registry

Additional Information: All Nations Centre B7 89; British Council E3 53; Cardiff International Arena E1 84; Central Police Station D3 54; City Hall D3 55; Coach Station D1 86; County Library E2 80; Law Courts D3 56; Post Office D2 71; Temple of Peace D3 36.

Theatres: New Theatre E3 61; Sherman Theatre E3 40; Chapter Arts Centre A2 62. Concert Halls: St David’s Hall D2 76; University Concert Hall C4 19. Cinemas: Cineworld E2 83; Vue D1 99. Sport: Cardiff City FC A1 87; Cardiff RFC D2 78; Cardiff Tennis Club D3 57.