AGE VERIFICATION 1 Do You Know the Wooly Bully?

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


The Historic Recordings of the Song Desafinado: Bossa Nova Development and Change in the International Scene1

The historic recordings of the song Desafinado: Bossa Nova development and change in the international scene1 Liliana Harb Bollos Universidade Federal de Goiás, Brasil [email protected] Fernando A. de A. Corrêa Faculdade Santa Marcelina, Brasil [email protected] Carlos Henrique Costa Universidade Federal de Goiás, Brasil [email protected] 1. Introduction Considered the “turning point” (Medaglia, 1960, p. 79) in modern popular Brazilian music due to the representativeness and importance it reached in the Brazilian music scene in the subsequent years, João Gilberto’s LP, Chega de saudade (1959, Odeon, 3073), was released in 1959 and after only a short time received critical and public acclaim. The musicologist Brasil Rocha Brito published an important study on Bossa Nova in 1960 affirming that “never before had a happening in the scope of our popular music scene brought about such an incitement of controversy and polemic” (Brito, 1993, p. 17). Before the Chega de Saudade recording, however, in February of 1958, João Gilberto participated on the LP Canção do Amor Demais (Festa, FT 1801), featuring the singer Elizete Cardoso. The recording was considered a sort of presentation recording for Bossa Nova (Bollos, 2010), featuring pieces by Vinicius de Moraes and Antônio Carlos Jobim, including arrangements by Jobim. On the recording, João Gilberto interpreted two tracks on guitar: “Chega de Saudade” (Jobim/Moraes) and “Outra vez” (Jobim). The groove that would symbolize Bossa Nova was recorded for the first time on this LP with ¹ The first version of this article was published in the Anais do V Simpósio Internacional de Musicologia (Bollos, 2015), in which two versions of “Desafinado” were discussed.

Into the Mainstream Guide to the Moving Image Recordings from the Production of Into the Mainstream by Ned Lander, 1988

Descriptive Level Finding aid LANDER_N001 Collection title Into the Mainstream Guide to the moving image recordings from the production of Into the Mainstream by Ned Lander, 1988 Prepared 2015 by LW and IE, from audition sheets by JW Last updated November 19, 2015 ACCESS Availability of copies Digital viewing copies are available. Further information is available on the 'Ordering Collection Items' web page. Alternatively, contact the Access Unit by email to arrange an appointment to view the recordings or to order copies. Restrictions on viewing The collection is open for viewing on the AIATSIS premises. AIATSIS holds viewing copies and production materials. Contact AFI Distribution for copies and usage. Contact Ned Lander and Yothu Yindi for usage of production materials. Ned Lander has donated production materials from this film to AIATSIS as a Cultural Gift under the Taxation Incentives for the Arts Scheme. Restrictions on use The collection may only be copied or published with permission from AIATSIS. SCOPE AND CONTENT NOTE Date: 1988 Extent: 102 videocassettes (Betacam SP) (approximately 35 hrs.) : sd., col. (Moving Image 10 U-Matic tapes (Kodak EB950) (approximately 10 hrs.) : sd, col. components) 6 Betamax tapes (approximately 6 hrs.) : sd, col. 9 VHS tapes (approximately 9 hrs.) : sd, col. Production history Made as a one hour television documentary, 'Into the Mainstream' follows the Aboriginal band Yothu Yindi on its journey across America in 1988 with rock groups Midnight Oil and Graffiti Man (featuring John Trudell). Yothu Yindi is famed for drawing on the song-cycles of its Arnhem Land roots to create a mix of traditional Aboriginal music and rock and roll. -

Sustainable Aviation Fuels Road Map: Data Assumptions and Modelling

Sustainable Aviation Fuels Road Map: Data assumptions and modelling CSIRO Paul Graham, Luke Reedman, Luis Rodriguez, John Raison, Andrew Braid, Victoria Haritos, Thomas Brinsmead, Jenny Hayward, Joely Taylor and Deb O’Connell Centre of Policy Studies Phillip Adams May 2011 Enquiries should be addressed to: Paul Graham Energy Modelling Stream Leader CSIRO Energy Transformed Flagship PO Box 330 Newcastle NSW 2300 Ph: (02) 4960 6061 Fax: (02) 4960 6054 Email: [email protected] Copyright and Disclaimer © 2011 CSIRO To the extent permitted by law, all rights are reserved and no part of this publication covered by copyright may be reproduced or copied in any form or by any means except with the written permission of CSIRO. Important Disclaimer CSIRO advises that the information contained in this publication comprises general statements based on scientific research. The reader is advised and needs to be aware that such information may be incomplete or unable to be used in any specific situation. No reliance or actions must therefore be made on that information without seeking prior expert professional, scientific and technical advice. To the extent permitted by law, CSIRO (including its employees and consultants) excludes all liability to any person for any consequences, including but not limited to all losses, damages, costs, expenses and any other compensation, arising directly or indirectly from using this publication (in part or in whole) and any information or material contained in it.

San Fernando Valley Burbank, Burbank Sunrise, Calabasas

Owens Valley Bishop, Bishop Sunrise, Mammoth Lakes, Antelope Valley and Mammoth Lakes Sunrise Antelope Valley Sunrise, Lancaster, Lancaster Sunrise, Lancaster West, Palmdale, Santa Clarita Valley and Rosamond Santa Clarita Sunrise and Santa Clarita Valley San Fernando Valley Burbank, Burbank Sunrise, Calabasas, Crescenta Canada, Glendale, Glendale Sunrise, Granada Hills, Mid San Fernando Valley, North East Los Angeles, North San Fernando Valley, North Hollywood, Northridge/Chatsworth, Sherman Oaks Sunset, Studio City/Sherman Oaks, Sun Valley, Sunland Tujunga, Tarzana/Encino, Universal City Sunrise, Van Nuys, West San Fernando Valley and Woodland Hills History of District 5260 Most of us know the early story of Rotary, founded by Paul P. Harris in Chicago, Illinois on Feb. 23, 1905. The first meeting was held in Room 711 of the Unity Building. Four prospective members attended that first meeting. From there Rotary spread immediately to San Francisco, California, and on November 12, 1908 Club # 2 was chartered. From San Francisco, Homer Woods, the founding President, went on to start clubs in Oakland and in 1909 traveled to southern California and founded the Rotary Club of Los Angeles (LA 5). In 1914, at a fellowship meeting of 6 western Rotary Clubs, H. J. Brunnier, President of the Rotary Club of San Francisco, awoke in the middle of the night with the concept of Rotary Districts. He summoned a porter to bring him a railroad schedule of the United States, which also included a map of the USA, and proceeded to map the location of the 100 Rotary clubs that existed at that time and organized them into 13 districts.

The Money Band Songlist

THE MONEY BAND SONGLIST 50s Bobby Darin Mack the Knife Somewhere Beyond the Sea Ben E King Stand By Me Carl Perkins Blue Suede Shoes Elvis Presley Heartbreak Hotel Hound Dog Jailhouse Rock Frank Sinatra Fly Me To The Moon Johnny Cash Folsom Prison Blues Louis Armstrong What A Wonderful World Ray Charles I Got A Woman What’d I Say 60s Beach Boys Surfing USA Beatles Birthday Michelle Norwegian Wood I Feel Fine I Saw Her Standing There Bobby Darin Dream Lover Chantays Pipeline Chuck Berry Johnny B. Goode Dick Dale Misirlou Wipe Out Elvis Presley All Shook Up Can’t Help Falling In Love Heartbreak Hotel It’s Now or Never Little Less Conversation Little Sister Suspicious Minds Henry Mancini Pink Panther Jerry Lee Lewis Great Balls of Fire Whole Lotta Shakin Jimi Hendrix Fire Hey Joe Wind Cries Mary Johnny Rivers Secret Agent Man Johnny Cash Ring of Fire Kingsmen Louie Louie Otis Redding Dock of the Bay Ricky Nelson Hello Mary Lou Traveling Man Rolling Stones Paint It Black Jumping Jack Flash Satisfaction Can’t Always Get What You Want Beast of Burden Sam the Sham & the Pharaohs Wooly Bully Tommy James Mony Mony Troggs Wild Thing Van Morrison Baby Please Don’t Go Brown Eyed Girl Into The Mystic The Who My Generation 70s Beatles Get Back Imagine Something Bee Gees You Should Be Dancing Staying Alive Carl Douglas Kung Fu Fighting Cat Stevens Wild World The Commodores Brick House David Bowie Rebel, Rebel Diana Ross Upside Down Donna Summer Hot Stuff Doors LA Woman Love Me Two Times Roadhouse Blues The Eagles Hotel California Elvis Presley Steamroller

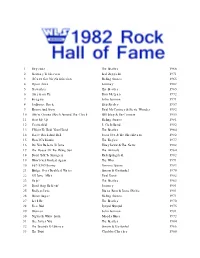

1 Hey Jude the Beatles 1968 2 Stairway to Heaven Led Zeppelin 1971 3 Stayin' Alive Bee Gees 1978 4 YMCA Village People 1979 5

1 Hey Jude The Beatles 1968 2 Stairway To Heaven Led Zeppelin 1971 3 Stayin' Alive Bee Gees 1978 4 YMCA Village People 1979 5 (We're Gonna) Rock Around The Clock Bill Haley & His Comets 1955 6 Da Ya Think I'm Sexy? Rod Stewart 1979 7 Jailhouse Rock Elvis Presley 1957 8 (I Can't Get No) Satisfaction Rolling Stones 1965 9 Tragedy Bee Gees 1979 10 Le Freak Chic 1978 11 Macho Man Village People 1978 12 I Will Survive Gloria Gaynor 1979 13 Yesterday The Beatles 1965 14 Night Fever Bee Gees 1978 15 Fire Pointer Sisters 1979 16 I Want To Hold Your Hand The Beatles 1964 17 Shake Your Groove Thing Peaches & Herb 1979 18 Hound Dog Elvis Presley 1956 19 Heartbreak Hotel Elvis Presley 1956 20 The Twist Chubby Checker 1960 21 Johnny B. Goode Chuck Berry 1958 22 Too Much Heaven Bee Gees 1979 23 Last Dance Donna Summer 1978 24 American Pie Don McLean 1972 25 Heaven Knows Donna Summer & Brooklyn Dreams 1979 26 Mack The Knife Bobby Darin 1959 27 Peggy Sue Buddy Holly 1957 28 Grease Frankie Valli 1978 29 Love Me Tender Elvis Presley 1956 30 Soul Man Blues Brothers 1979 31 You Really Got Me The Kinks 1964 32 Hot Blooded Foreigner 1978 33 She Loves You The Beatles 1964 34 Layla Derek & The Dominos 1972 35 September Earth, Wind & Fire 1979 36 Don't Be Cruel Elvis Presley 1956 37 Blueberry Hill Fats Domino 1956 38 Jumpin' Jack Flash Rolling Stones 1968 39 Copacabana (At The Copa) Barry Manilow 1978 40 Shadow Dancing Andy Gibb 1978 41 Evergreen (Love Theme From "A Star Is Born") Barbra Streisand 1977 42 Miss You Rolling Stones 1978 43 Mandy Barry Manilow 1975 -

Mill Valley Oral History Program a Collaboration Between the Mill Valley Historical Society and the Mill Valley Public Library

Mill Valley Oral History Program A collaboration between the Mill Valley Historical Society and the Mill Valley Public Library David Getz An Oral History Interview Conducted by Debra Schwartz in 2020 © 2020 by the Mill Valley Public Library TITLE: Oral History of David Getz INTERVIEWER: Debra Schwartz DESCRIPTION: Transcript, 60 pages INTERVIEW DATE: January 9, 2020 In this oral history, musician and artist David Getz discusses his life and musical career. Born in New York City in 1940, David grew up in a Jewish family in Brooklyn. David recounts how an interest in Native American cultures originally brought him to the drums and tells the story of how he acquired his first drum kit at the age of 15. David explains that as an adolescent he aspired to be an artist and consequently attended Cooper Union after graduating from high school. David recounts his decision to leave New York in 1960 and drive out to California, where he immediately enrolled at the San Francisco Art Institute and soon after started playing music with fellow artists. David explains how he became the drummer for Big Brother and the Holding Company in 1966 and reminisces about the legendary Monterey Pop Festival they performed at the following year. He shares numerous stories about Janis Joplin and speaks movingly about his grief upon hearing the news of her death. David discusses the various bands he played in after the dissolution of Big Brother and the Holding Company, as well as the many places he performed over the years in Marin County. He concludes his oral history with a discussion of his family: his daughters Alarza and Liz, both of whom are singer-songwriters, and his wife Joan Payne, an actress and singer.

Mood Music Programs

MOOD MUSIC PROGRAMS MOOD: 2 Pop Adult Contemporary Hot FM ‡ Current Adult Contemporary Hits Hot Adult Contemporary Hits Sample Artists: Andy Grammer, Taylor Swift, Echosmith, Ed Sample Artists: Selena Gomez, Maroon 5, Leona Lewis, Sheeran, Hozier, Colbie Caillat, Sam Hunt, Kelly Clarkson, X George Ezra, Vance Joy, Jason Derulo, Train, Phillip Phillips, Ambassadors, KT Tunstall Daniel Powter, Andrew McMahon in the Wilderness Metro ‡ Be-Tween Chic Metropolitan Blend Kid-friendly, Modern Pop Hits Sample Artists: Roxy Music, Goldfrapp, Charlotte Gainsbourg, Sample Artists: Zendaya, Justin Bieber, Bella Thorne, Cody Hercules & Love Affair, Grace Jones, Carla Bruni, Flight Simpson, Shane Harper, Austin Mahone, One Direction, Facilities, Chromatics, Saint Etienne, Roisin Murphy Bridgit Mendler, Carrie Underwood, China Anne McClain Pop Style Cashmere ‡ Youthful Pop Hits Warm cosmopolitan vocals Sample Artists: Taylor Swift, Justin Bieber, Kelly Clarkson, Sample Artists: The Bird and The Bee, Priscilla Ahn, Jamie Matt Wertz, Katy Perry, Carrie Underwood, Selena Gomez, Woon, Coldplay, Kaskade Phillip Phillips, Andy Grammer, Carly Rae Jepsen Divas Reflections ‡ Dynamic female vocals Mature Pop and classic Jazz vocals Sample Artists: Beyonce, Chaka Khan, Jennifer Hudson, Tina Sample Artists: Ella Fitzgerald, Connie Evingson, Elvis Turner, Paloma Faith, Mary J. Blige, Donna Summer, En Vogue, Costello, Norah Jones, Kurt Elling, Aretha Franklin, Michael Emeli Sande, Etta James, Christina Aguilera Bublé, Mary J. Blige, Sting, Sachal Vasandani FM1 ‡ Shine

BEU Alm.Del - Bilag 28 Offentligt

Beskæftigelsesudvalget 2018-19 (2. samling) BEU Alm.del - Bilag 28 Offentligt Health effects of exposure to diesel exhaust in diesel-powered trains Maria Helena Guerra Andersen1,2*, Marie Frederiksen2, Anne Thoustrup Saber2, Regitze Sølling Wils1,2, Ana Sofia Fonseca2, Ismo K. Koponen2, Sandra Johannesson3, Martin Roursgaard1, Steffen Loft1, Peter Møller1, Ulla Vogel2,4 1Department of Public Health, Section of Environmental Health, University of Copenhagen, Øster Farimagsgade 5A, DK-1014 Copenhagen K, Denmark 2The National Research Centre for the Working Environment, Lersø Parkalle 105, DK-2100 Copenhagen Ø, Denmark. 3 Department of Occupational and Environmental Medicine, Sahlgrenska Academy at University of Gothenburg, Gothenburg, Sweden. 4 DTU Health Tech., Technical University of Denmark, DK-2800 Kgs. Lyngby, Denmark *Corresponding author: [email protected]; [email protected] Maria Helena Guerra Andersen, [email protected]; [email protected] Marie Frederiksen, [email protected] Anne Thoustrup Saber, [email protected] Regitze Sølling Wils, [email protected] Ana Sofia Fonseca, [email protected] Ismo K. Koponen, [email protected] Sandra Johannesson, [email protected] Martin Roursgaard, [email protected] Steffen Loft, [email protected] Peter Møller, [email protected] Ulla Vogel, [email protected] Abstract Background: Short-term controlled exposure to diesel exhaust (DE) in chamber studies have shown mixed results on lung and systemic effects. There is a paucity of studies on well-characterized real-life DE exposure in humans. In the present study, 29 healthy volunteers were exposed to DE while sitting as passengers in diesel-powered trains. Exposure in electric trains was used as control scenario.

(I Can't Get No) Satisfaction Rolling Stones 1965 4 Open Ar

1 Hey Jude The Beatles 1968 2 Stairway To Heaven Led Zeppelin 1971 3 (I Can't Get No) Satisfaction Rolling Stones 1965 4 Open Arms Journey 1982 5 Yesterday The Beatles 1965 6 American Pie Don McLean 1972 7 Imagine John Lennon 1971 8 Jailhouse Rock Elvis Presley 1957 9 Ebony And Ivory Paul McCartney & Stevie Wonder 1982 10 (We're Gonna) Rock Around The Clock Bill Haley & His Comets 1955 11 Start Me Up Rolling Stones 1981 12 Centerfold J. Geils Band 1982 13 I Want To Hold Your Hand The Beatles 1964 14 I Love Rock And Roll Joan Jett & The Blackhearts 1982 15 Hotel California The Eagles 1977 16 Do You Believe In Love Huey Lewis & The News 1982 17 The House Of The Rising Sun The Animals 1964 18 Don't Talk To Strangers Rick Springfield 1982 19 Won't Get Fooled Again The Who 1971 20 867-5309/Jenny Tommy Tutone 1982 21 Bridge Over Troubled Water Simon & Garfunkel 1970 22 '65 Love Affair Paul Davis 1982 23 Help! The Beatles 1965 24 Don't Stop Believin' Journey 1981 25 Endless Love Diana Ross & Lionel Richie 1981 26 Brown Sugar Rolling Stones 1971 27 Let It Be The Beatles 1970 28 Free Bird Lynyrd Skynyrd 1975 29 Woman John Lennon 1981 30 Nights In White Satin Moody Blues 1972 31 She Loves You The Beatles 1964 32 The Sounds Of Silence Simon & Garfunkel 1966 33 The Twist Chubby Checker 1960 34 Jumpin' Jack Flash Rolling Stones 1968 35 Jessie's Girl Rick Springfield 1981 36 Born To Run Bruce Springsteen 1975 37 A Hard Day's Night The Beatles 1964 38 California Dreamin' The Mamas & The Papas 1966 39 Lola The Kinks 1970 40 Lights Journey 1978 41 Proud -

Music Impressions Comedy

Music Impressions Comedy Legends & Laughter Legends & Laughter is a musical journey through Jimmy’s favorite songs by a wide variety of performers from classic artists to today's contemporary performers hot off the Las Vegas Strip. Jimmy’s high energy and enthusiasm is tempered only by his ultra smooth vocals as he creates his impressions of Rod Stewart, Joe Cocker, The Beatles, Tom Jones, Engelbert Humperdinck, The Temptations, Tony Orlando, Prince, Bobby Darin, Michael Jackson, Barry White, The Bee Gees and David Bowie. Jimmy adds the crooner voices of Frank Sinatra, Dean Martin and Nat King Cole, then quickly moves on to glide thru the voices of Tony Bennett, Frankie Valli, Lou Rawls, and Neil Diamond and many more. A quick patriotic tune salutes our veterans, then it's on to “The King”, Elvis Presley with a very amusing parody. Jimmy keeps on moving as he belts out the hits and covers many more greats including Billy Joel, Michael Bublé, Elton John, Johnny Cash, Willie Nelson, Garth Brooks, Ray Charles, Louis Armstrong, Jimmy Durante, Blake Shelton, Stevie Wonder, and "The Boss,” Bruce Springsteen. Laughter abounds as Jimmy captures the voices of Ed Sullivan, Jackie Mason, Sylvester Stallone, John Wayne and Arnold Schwarzenegger, just to name a few. In typical Jimmy Mazz style, there's always a few surprises thrown in for good measure. Never the same show twice! Experience Jimmy Mazz With 40 plus years of entertainment experience and as a Headliner at The Superstar Theater at the Resorts Hotel & Casino in Atlantic City, Jimmy Mazz truly understands how to entertain an audience.

2DAY-FM Chart, 1988-12-12

2DAY-FM TOP THIRTY SINGLES 2DAY-FM TOP THIRTY ALBUMS 2DAY-FM TOP THIRTY COMPACT DISCS No Title Artist Dist No Title Artist Dist No Title Artist Dist 1 Don't Worry Be Happy Bobby McFerrin EMI 1 * Barnestorming Jimmy Barnes Fest 1 Barnestorming Jimmy Barnes Fest 2 A Groovy Kind Of Love Phil Collins WEA 2 "Cocktail" Soundtrack WEA 2 Rattle and Hum U2 Fest 3 * If I Could 1927 WEA 3 Rattle and Hum U2 Fest 3 * "Cocktail" Soundtrack WEA 4 The Only Way Is Up Yazz & The Plastic CBS 4 Age Of Reason John Farnham BMG 4 Bryan Ferry Ultimate Collection Bryan Ferry/Roxy Music EMI Population 5 * Greatest Hits Fleetwood Mac WEA 5 * Greatest Hits Fleetwood Mac WEA 5 * Kokomo Beach Boys WEA 6 ...ISH 1927 WEA 6 Money For Nothing Dire Straits Poly 6 When A Man Loves A Woman Jimmy Barnes Fest 7 * Bryan Ferry Ultimate Collection Bryan Ferry/Roxy Music EMI 7 * The Best Of Chris Rea New Light Through Old WEA 7 I Want Your Love Transvision Vamp WEA 8 The Best Of Chris Rea New Light Through Old WEA Windows 8 Bring Me Some Water Melissa Etheridge Fest Windows 8 Age Of Reason John Farnham BMG 9 Wild, Wild West Escape Club WEA 9 Volume One Traveling Wilburys WEA 9 Kick INXS WEA 10 Nothing Can Divide Us Jason Donovan Fest 10 Kick INXS WEA 10 Union Toni Childs Fest 11 Teardrops Womack & Womack Fest 11 * Delicate Sound Of Thunder Pink Floyd CBS 11 * Melissa Etheridge Melissa Etheridge Fest 12 I Still Love You (Je Ne Sais Pas Pourquoi) Kylie Minogue Fest 12 Money For Nothing Dire Straits Poly 12 ...ISH 1927 WEA 13 Don't Need Love Johnny Diesel & The Fest 13 Union Toni