VirtualDub Help

Total Pages: 16

File Type: PDF, Size: 1020 KB


Recommended publications


Through the Looking Glass: Webcam Interception and Protection in Kernel

VIRUS BULLETIN www.virusbulletin.com. Covering the global threat landscape. THROUGH THE LOOKING GLASS: WEBCAM INTERCEPTION AND PROTECTION IN KERNEL MODE. Ronen Slavin & Michael Maltsev, Reason Software, USA.

INTRODUCTION. When we talk about digital privacy, the computer’s webcam is one of the most relevant components. We all have a tiny fear that someone might be looking through our computer’s camera, spying on us and watching our every move [1]. And while some of us think this scenario is restricted to the realm of movies, the reality is that malware authors and threat actors don’t shy away from incorporating such capabilities into their malware arsenals [2]. Camera manufacturers protect their customers by incorporating into their devices an indicator LED that illuminates when the camera is in use.

... and WIA (Windows Image Acquisition), which provides a still image acquisition API.

ATTACK VECTORS. Let’s pretend for a moment that we’re the bad guys. We have gained control of a victim’s computer and we can run any code on it. We would like to use his camera to get a photo or a video to use for our nefarious purposes. What are our options? The simplest option is just to use one of the user-mode APIs mentioned previously. By default, Windows allows every app to access the computer’s camera, with the exception of Store apps on Windows 10. The downside for the attackers is that camera access will turn on the indicator LED, giving the victim an indication that somebody is watching him. A sneakier method is to spy on the victim when he turns on the camera himself. Patrick Wardle described a technique like this for Mac [8], but there’s no reason the principle can’t be applied to Windows, albeit with a slightly different ...

Real-Time Finite Element Method (FEM) and TressFX

REAL-TIME FEM AND TRESSFX 4. ERIC LARSEN, KARL HILLESLAND. FEBRUARY 2016 | CONFIDENTIAL

FINITE ELEMENT METHOD (FEM) SIMULATION
- Simulates soft to nearly-rigid objects, with fracture
- Models object as mesh of tetrahedral elements
- Each element has material parameters:
  - Young’s modulus: how stiff the material is
  - Poisson’s ratio: effect of deformation on volume
  - Yield strength: deformation limit before permanent shape change
  - Fracture strength: stress limit before the material breaks

MOTIVATIONS FOR THIS METHOD
- Parameters give a lot of design control
- Can model many real-world materials: rubber, metal, glass, wood, animal tissue
- Commonly used now for film effects: high-quality destruction
- Successful real-time use in Star Wars: The Force Unleashed 1 & 2: DMM middleware [Parker and O’Brien]

OUR PROJECT
- New implementation of real-time FEM for games
- Planned CPU library release: heavy use of multithreading; open-source with GPUOpen license
- Some highlights: practical method for continuous collision detection (CCD); mix of CCD and intersection contact constraints; efficient integrals for intersection constraint

STATUS
- Proof-of-concept prototype
- First pass at optimization
- Offering an early look for feedback
- Several generic components

CCD
- Find time of impact between moving objects: impulses can prevent intersections [Otaduy et al.]; catches collisions with fast-moving objects
- Our approach: conservative-advancement based; geometric ...
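To make the first two material parameters more concrete, the sketch below applies the standard textbook relations for an isotropic linear-elastic material, converting Young's modulus and Poisson's ratio into the Lamé parameters and the bulk modulus. It is not taken from the presentation: the function name `elastic_moduli` and the sample values are assumptions chosen purely for illustration.

```python
def elastic_moduli(youngs_modulus, poissons_ratio):
    """Return (lam, mu, bulk) for an isotropic linear-elastic material.

    Hypothetical helper, not part of any FEM library.
    lam and mu are the Lame parameters; bulk is the bulk modulus.
    """
    e, nu = youngs_modulus, poissons_ratio
    if e <= 0.0:
        raise ValueError("Young's modulus must be positive")
    if not -1.0 < nu < 0.5:
        raise ValueError("Poisson's ratio must lie in the open interval (-1, 0.5)")
    mu = e / (2.0 * (1.0 + nu))                      # shear modulus
    lam = e * nu / ((1.0 + nu) * (1.0 - 2.0 * nu))   # first Lame parameter
    bulk = e / (3.0 * (1.0 - 2.0 * nu))              # resistance to volume change
    return lam, mu, bulk


if __name__ == "__main__":
    # Same stiffness, increasing Poisson's ratio: the bulk modulus grows,
    # so the material resists volume change more and more strongly.
    for nu in (0.0, 0.3, 0.49):
        lam, mu, bulk = elastic_moduli(1.0e6, nu)
        print(f"nu={nu:.2f}  lam={lam:.3e}  mu={mu:.3e}  bulk={bulk:.3e}")
```

As Poisson's ratio approaches 0.5 the bulk modulus grows without bound, which is one way to read "effect of deformation on volume": the material becomes nearly incompressible.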

Download Windows Media App How to Download Windows Media Center

How to Download Windows Media Center. wikiHow is a “wiki,” similar to Wikipedia, which means that many of our articles are co-written by multiple authors. To create this article, 16 people, some anonymous, worked to edit and improve it over time. This article has been viewed 208,757 times. Windows Media Center was Microsoft's media PC interface, and allowed you to record live TV, manage and play back your media, and more. Media Center has been discontinued, but you can still get it for Windows 7 or 8.1. If you are using Windows 10, you'll need to use an enthusiast-made hacked version, as Windows Media Center has been completely disabled.

Download this free app to get Windows Media Center back in Windows 10. With the release of Windows 10, Microsoft waved farewell to Windows Media Center. There are some excellent free alternatives around, but if you miss the classic video recorder and media player there's a free download that brings its suite of streaming and playback tools to the new operating system.

Open Source Subtitle Editor Software Study for Section 508 Close Caption Applications

https://ntrs.nasa.gov/search.jsp?R=20140002618 2019-08-29T14:44:57+00:00Z

NASA USRP - Internship Final Report. Open Source Subtitle Editor Software Study for Section 508 Close Caption Applications. Brandon F. Murphy, North Carolina Agricultural and Technical State University, Greensboro, North Carolina 27411. NASA John F. Kennedy Space Center. Major: Computer Science. USRP Summer 2013 Session. Date: July 30, 2013.

Nomenclature
CC = Closed Captioning
ITAW = IT Accessibility and Workforce
GSA = General Services Administration
EIT = Electronic and Information Technology
OSS = Open Source Software
.srt = the file extension for SubRip subtitle files
.ssa = SubStation Alpha
.ass = Advanced SubStation Alpha Subtitle
.sub = Subtitling file
.txt = Text file
.idx = Index file

I. Introduction. Government agencies are required by law under the Section 508 policy to provide any tools or services for their employees who may need them. For those who need special assistance these tools and services help them do their job to the best of their ability. With these tools and services a person with disabilities will have equal opportunities for advancing in their company, as others do. This research and implementation was used to find cost effective solutions to make information available to all Government employees as required by law, Section 508.

II. Abstract. This paper will focus on a specific item within the NASA Electronic Information Accessibility Policy - Multimedia Presentation shall have synchronized caption; thus making information accessible to a person with hearing impairment. This synchronized caption will assist a person with hearing or cognitive disability to access the same information as everyone else.

VirtualDub Tutorial

Using VirtualDub to edit and recompress videos made by cameras like MD-80 or 808 KeyChain

Live long and prosper. I do not pretend to teach anything to anyone, but I hope to give some suggestions to help those unfamiliar with the program. My aim is to help people who want to change their videos (e.g. trim the duration or remove the timestamp) and then recompress them, for example with DivX. The videos produced by the cameras are usually compressed in MPEG-4 (standard DVD) and are very large. Here are the steps to follow. I have marked which are required and which are optional.

INSTALLING VirtualDub (required)
Go to this webpage and download VirtualDub: http://virtualdub.sourceforge.net/ Look for the first link: List download mirrors for Vx.xx (32-bit) release build. The program does not need to be installed. Simply unzip the downloaded archive into the folder you want. I put it here: C:\Program Files\VirtualDub and made a shortcut to VirtualDub.exe on the desktop for convenience.

DOWNLOAD PLUG-IN TO REMOVE TIMESTAMP (optional)
Open this webpage: http://www.aircommandrockets.com/md80clone.htm and download the file timestamp.vdf (thanks to George). Once downloaded, copy it here: C:\Program Files\VirtualDub\plugins (where C:\Program Files\VirtualDub\ is the path where you extracted the archive above). An alternative filter to remove the timestamp is called Logoaway and can be found here: http://voidon.republika.pl/virtualdub/ (thanks to Krzysztof Wojdon).

DOWNLOAD DivX CODEC (optional if already installed)
Open this page: http://www.divx.com/en/software/divx-plus and press the green Free Download button.
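The plug-in step above amounts to copying one file into the plugins folder under the directory where VirtualDub was unzipped. A minimal sketch of that copy, assuming a hypothetical helper named `install_plugin` (it is not part of VirtualDub, and the paths in the usage note are only the ones the tutorial mentions):

```python
import shutil
from pathlib import Path


def install_plugin(plugin_file, virtualdub_dir):
    """Copy a downloaded .vdf filter into <virtualdub_dir>/plugins.

    Hypothetical helper for illustration; returns the path of the copy.
    """
    plugin_file = Path(plugin_file)
    if not plugin_file.is_file():
        raise FileNotFoundError(plugin_file)
    plugins_dir = Path(virtualdub_dir) / "plugins"
    # Create the plugins folder if the archive did not contain one.
    plugins_dir.mkdir(parents=True, exist_ok=True)
    target = plugins_dir / plugin_file.name
    shutil.copy2(plugin_file, target)
    return target
```

A call such as `install_plugin("timestamp.vdf", r"C:\Program Files\VirtualDub")` would mirror the manual step. Writing under C:\Program Files may require administrator rights, so unzipping VirtualDub into a folder you own can be simpler.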

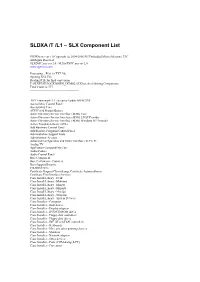

SLDXA /T /L1 – SLX Component List

SLDXA.exe ver 1.0
Copyright (c) 2004-2006 SJJ Embedded Micro Solutions, LLC. All Rights Reserved.
SLXDiffC.exe ver 2.0 / SLXtoTXTC.exe ver 2.0
www.sjjmicro.com

Processing...
File1 to TXT file.
Opening XSL File
Reading RTF for final conversion

F:\SLXTEST\LOCKDOWN_DEMO2.SLX has the following Components
Total Count is: 577
--------------------------------------------------
.NET Framework 1.1 - Security Update KB887998
Accessibility Control Panel
Accessibility Core
ACPI Fixed Feature Button
Active Directory Service Interface (ADSI) Core
Active Directory Service Interface (ADSI) LDAP Provider
Active Directory Service Interface (ADSI) Windows NT Provider
Active Template Library (ATL)
Add Hardware Control Panel
Add/Remove Programs Control Panel
Administration Support Tools
Administrator Account
Advanced Configuration and Power Interface (ACPI) PC
Analog TV
Application Compatibility Core
Audio Codecs
Audio Control Panel
Base Component
Base Performance Counters
Base Support Binaries
CD-ROM Drive
Certificate Request Client & Certificate Autoenrollment
Certificate User Interface Services
Class Install Library - Desk
Class Install Library - Mdminst
Class Install Library - Mmsys
Class Install Library - Msports
Class Install Library - Netcfgx
Class Install Library - Storprop
Class Install Library - System Devices
Class Installer - Computer
Class Installer - Disk drives
Class Installer - Display adapters
Class Installer - DVD/CD-ROM drives
Class Installer - Floppy disk controllers
Class Installer - Floppy disk drives

IBM ThinkPad Notebooks 1992 to 2001 - Withdrawn January 2001 - Version 214 IBM ThinkPad 240 - Withdrawn

IBM PC Institute. IBM Personal Systems Reference. IBM ThinkPad 240 - withdrawn.

Processor: Intel Mobile Celeron 300, 366, or 400MHz / 66MHz system bus
Processor features: No upgrade / processor on Ball Grid Array (H-PBGA)
L2 cache: 128KB / onboard (full speed) / synchronous pipelined burst / ECC / write-back
Diskette drive: External 3.5" 1.44MB / connects to left side with FDD port / includes case and cable
CD-ROM: Option: External CD-ROM / via Portable Drive Bay and 24X-10X CD-ROM UltraslimBay Drive
DVD-ROM: Option: External DVD-ROM / via Portable Drive Bay and DVD-ROM UltraslimBay Drive
Type-model: 2609-21U; 2609-31U; 2609-41U
Processor: Celeron 300MHz; Celeron 366MHz; Celeron 400MHz
Disk - size / ms: 6.4GB / 13ms read / Ultra DMA/33 or PIO Mode 4; 12.0GB / 12ms read / ATA-66 or PIO4
Preload (see side): Windows 98; Windows 98 SE; Windows 98 SE
Avail / withdrawn date: June 1999 / February 2000; November 1999 / February 2000; February 2000 / February 2001
Display - size and type: 10.4" TFT color (264.16mm) / Active Matrix
Display - technology: SVGA / 800x600 / 15ms refresh (typical) / 50 to 110 nits / 16.7 million simultaneous colors / 250 to 1 contrast (typical)
Graphics - controller: NeoMagic MagicMedia128XD (NM2160C) / 128-bit accelerator / DDC2B / 2MB / SGRAM (embedded) / color space conversion
Graphics - features: Simultaneous LCD and CRT / 180 degree tilt / no multiple-monitor support / ext SVGA to 1024x768 with 65,536 colors
Memory - std / max: 64MB / 192MB

This Electronic Thesis Or Dissertation Has Been Downloaded from Explore Bristol Research

This electronic thesis or dissertation has been downloaded from Explore Bristol Research, http://research-information.bristol.ac.uk

Author: Gilmour, Lia R V
Title: Evaluating methods to deter bats

General rights. Access to the thesis is subject to the Creative Commons Attribution - NonCommercial-No Derivatives 4.0 International Public License. A copy of this may be found at https://creativecommons.org/licenses/by-nc-nd/4.0/legalcode This license sets out your rights and the restrictions that apply to your access to the thesis so it is important you read this before proceeding.

Take down policy. Some pages of this thesis may have been removed for copyright restrictions prior to having it been deposited in Explore Bristol Research. However, if you have discovered material within the thesis that you consider to be unlawful e.g. breaches of copyright (either yours or that of a third party) or any other law, including but not limited to those relating to patent, trademark, confidentiality, data protection, obscenity, defamation, libel, then please contact [email protected] and include the following information in your message:
• Your contact details
• Bibliographic details for the item, including a URL
• An outline nature of the complaint
Your claim will be investigated and, where appropriate, the item in question will be removed from public view as soon as possible.

Evaluating methods to deter bats. Lia Rose Vincent Gilmour. A dissertation submitted to the University of Bristol in accordance with the requirements for award of the degree of PhD in the Faculty of Life Sciences. School of Biological Sciences, University of Bristol, October 2019. 34,189 words.

Abstract. Bat deterrents, such as radar and acoustic methods, have been suggested as potential mitigation for when bats are at risk of harm from human activities and/or structures.

Mpc Downloaded Video Cannot Render the File

Kodi is a free and open source media player application developed by the XBMC Foundation, a non-profit technology consortium. Kodi is available for multiple operating systems and hardware platforms, featuring a 10-foot user interface for use with televisions and remote controls. It allows users to play and view most videos, music, podcasts, and other digital media files from local and network storage media and the internet.

MPC Video Renderer 0.5.5.1732. The renderer can potentially work with any DirectShow player, but full support is available only in MPC-BE (the recommended version of MPC-BE is 1.5.5 or newer). It is also recommended to have an SSE2-capable CPU, Windows 7 (or newer) installed, and at least a DirectX 9 video card. Support for XySubFilter was added starting with version 0.3.0 of MPC Video Renderer.

Changes to MPC Video Renderer 0.5.4:
- Fixed dithering when outputting 10-bit for DX11 mode.
- Improved compatibility with CoreAVC Video Decoder.
- Fixed frame rotation when using DXVA2 or D3D11 VP.
- Optimization of processing when using only DXVA2 or D3D11 VP.

Solved: AVI/MP4/MKV Cannot Render the File. Stuck with the "Cannot Render the File" error in Media Player Classic? This post explains why you are getting this error and how to fix it.

AviSynth for Mac

AviSynth is built upon filters, which are much like DirectShow filters, but with a different binary interface. It is free software under the GNU GPL license, written in C++ for Windows.

Scripting video editor. AviSynth acts as a non-linear video editor controlled entirely by scripting (without a GUI). Filter capabilities include cropping, deinterlacing, inverse telecine, working with still images, doing basic color grading, reducing video noise, and many other things. Technically, AviSynth constructs a filter graph (like Microsoft GraphEdit but with added capabilities),[5] controlled by scripts written in the AviSynth scripting language. Its functionality can be extended through the use of third-party filters known as plugins. The example program plays the video file 'myAvi.avi' cropped down to its top-left 320 pixels by 240 pixels and blurred by a small amount.

Universal Media Server is a DLNA-compliant UPnP media server. Universal Media Server supports all ma...

More about AviSynth. About the download: AviSynth is a program that requires less storage space than the average program in the Video software section.

It’s also a great tutorial on how to make gifs in general, so if you’re not familiar with how to make gifs at all this is a great walkthrough before you continue on.

OpenGL ES, XNA, DirectX for WP8) Plan

Mobile Graphics Accelerators. Kamil Trzciński, 2013

About me
Kamil Trzciński, http://ayufan.eu / [email protected]
● 14 years of programming (C/C++, Java, C#, Python, PHP, etc.)
● 10 years of graphics programming (OpenGL, DirectX 9/10/11, ray tracing)
● 6 years in the mobile industry (SymbianOS, Android, iOS)
● 2 years of mobile graphics (OpenGL ES, XNA, DirectX for WP8)

Plan
● A very brief history
● Mobile graphics chips
● APIs
● Programmable shading units
● Optimization
● Graphics engines and game engines

Introduction
Computer graphics is the field of computer science concerned with using computer techniques for artistic visualization and for visualizing reality. Computer graphics is very often associated with the device that supports its generation, the graphics card, which is responsible for rendering graphics and converting it into a signal the display can understand.

1. History
The history of graphics cards goes back to the early 1980s. The first graphics cards could only display characters of the Latin alphabet from a character generator defined in memory, the so-called text mode. Later, chips appeared that performed so-called BitBLT operations, which allow two different raster bitmaps to be overlaid on each other.

History #2
● With the arrival of the Microsoft Windows 3.0 operating system, demand grew for processing high-resolution raster graphics.
● The GDI interface was created, responsible for programming graphics-related operations.
● Shortly afterwards, the first 2D accelerators appeared, produced by S3. This event started the era of graphics accelerators.

History #3
● 1996: 3dfx introduces the Voodoo Graphics chipset, an add-in expansion card acting as a 3D graphics accelerator.
● Libraries for creating three-dimensional visualizations appear: Direct3D and OpenGL.

History #4
● 1999/2000: DirectX 7.0 adds support for: T&L (Eng. ...

The AMD Linux Graphics Stack – 2018 Edition, Nicolai Hähnle, FOSDEM 2018

THE AMD LINUX GRAPHICS STACK – 2018 EDITION. NICOLAI HÄHNLE, FOSDEM 2018. FEBRUARY 2018 | CONFIDENTIAL

GRAPHICS STACK: KERNEL / USER-SPACE / X SERVER
[Stack diagram, repeated on the following three slides. Labels: Mesa, OpenGL & Multimedia, Vulkan, radv, AMDVLK, OpenGL Pro/Workstation, X Server, radeonsi, r600, radeon, amdgpu, LLVM, SCPC, libdrm]

GRAPHICS STACK: OPEN-SOURCE / CLOSED-SOURCE

GRAPHICS STACK: SUPPORT FOR GCN / PRE-GCN HARDWARE
ROUGHLY: GCN = NEW GPUS OF THE LAST 5 YEARS
(*) LLVM has pre-GCN support only for compute

GRAPHICS STACK: PHASING OUT “LEGACY” COMPONENTS

MAJOR MILESTONES OF 2017
- Upstreaming the DC display driver
- Open-sourcing the AMDVLK Vulkan driver
- Unified driver delivery
- OpenGL 4.5 conformance in the open-source Mesa driver
- Zero-day open-source support for new hardware

KERNEL: AMDGPU AND RADEON HARDWARE SUPPORT
[Diagram mapping the radeon and amdgpu kernel drivers to hardware generations. Generations listed:]
- Pre-GCN
- GCN 1st gen (Southern Islands, SI, gfx6)
- GCN 2nd gen (Sea Islands, CI(K), gfx7)
- GCN 3rd gen (Volcanic Islands, VI, gfx8)
- GCN 4th gen (Polaris, RX 4xx, RX 5xx)
- GCN 5th gen (RX Vega, Ryzen Mobile, gfx9)

KERNEL: AMDGPU VS.