Can You Trust Your Robot?

Total Pages: 16

File Type: PDF, Size: 1020Kb


Recommended publications


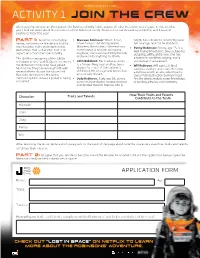

ACTIVITY 1 Join the Crew

REPRODUCIBLE ACTIVITY

After crash-landing on an alien planet, the Robinson family fights against all odds to survive and escape. In this activity, you’ll find out more about the members of the Robinson family. Season 1 is now streaming on Netflix, and Season 2 premieres later this year.

Part 1: Read the information below, and then use the details to fill in the character traits and talents table. Remember that a character trait is an aspect of a character’s personality.

The Netflix reimagining of the 1960s television series “Lost in Space” features the Robinson family, who have joined Mission 24. They are leaving Earth with several others aboard the spacecraft Resolute, destined for the Alpha Centauri system, where a planet is being […]

• Maureen Robinson: Smart, brave, adventurous, and strong-willed, Maureen, the mother, is the mission commander. A brilliant aerospace engineer, she loves her family fiercely and would do anything for them.

• John Robinson: Her husband, John, is a former Navy SEAL and has been absent for much of the children’s childhood. He is tough and smart, but emotionally distant.

• Judy Robinson: Judy, age 18, serves […] bright, has a desire to constantly prove her courage, and can be stubborn.

• Penny Robinson: Penny, age 15, is a well-trained mechanic. She is cheerful, outgoing, witty, and brave. She has a talent for problem-solving, and is somewhat of a daredevil.

• Will Robinson: Will, age 11, is kind, cautious, creative, and smart. He forms a tight bond with an alien robot that he saves from destruction during a forest fire.

Hymie the ROBOT By: Xavier Susana Artificial Intelligence G-Period

Robot PowerPoints, Artificial Intelligence, F & G Periods, Mr. Sciame, March 10, 2013

Hymie the Robot, by Xavier Susana (Artificial Intelligence, G Period)

Who is Hymie the Robot?
• Hymie the Robot was a fictional robot first seen on the 1960s spy-spoof television show “Get Smart.”
• Hymie is a humanized robot who was built by Dr. Ratton.
• Hymie had one job: to serve KAOS.
• He had many super powers, such as being strong, being able to swallow poisons and identify them by name, being extremely fast, and being capable of jumping to great heights.
• He was portrayed by Patrick Warburton in the 2008 movie “Get Smart.”

Is the robot meant for good or evil?
• Hymie the Robot is a good robot. He is one of a kind: very smart, strong, and obedient, although there are times when you tell Hymie to do something and he does the direct opposite. The episodes that I’ve watched on YouTube show that Hymie can do just about anything that looks impossible. He does good for society because he is under complete control.

Hymie the Robot, by Ryna Runko (F Period)
• Hymie is a humanoid robot from the hit television series “Get Smart.”
• Hymie was created by Dr. Ratton, who named the robot after his father.
• Hymie was built by KAOS and poses as a CONTROL agent recruit.
• Hymie has super strength and is able to disarm a bomb.
• Hymie is famous for being sensitive; he feels that Max does not care for him, even though Max is the only one who treats Hymie like a real person. -

![WHY DO PEOPLE IMAGINE ROBOTS: This Project Analyzes Why People Are Intrigued by the Thought of Robots, and Why They Choose to Create Them in Both Reality and Fiction](https://docslib.b-cdn.net/cover/7812/why-do-people-imagine-robots-this-project-analyzes-why-people-are-intrigued-by-the-thought-of-robots-and-why-they-choose-to-create-them-in-both-reality-and-fiction-6717812.webp)

[WHY DO PEOPLE IMAGINE ROBOTS] This Project Analyzes Why People Are Intrigued by the Thought of Robots, and Why They Choose to Create Them in Both Reality and Fiction

Project Number: LES RBE3 2009

Worcester Polytechnic Institute

Project Advisor: Lance E. Schachterle
Project Co-Advisor: Michael J. Ciaraldi

Ryan Cassidy, Brannon Cote-Dumphy, Jae Seok Lee, Wade Mitchell-Evans

An Interactive Qualifying Project Report submitted to the Faculty of WORCESTER POLYTECHNIC INSTITUTE in partial fulfillment of the requirements for the Degree of Bachelor of Science

[WHY DO PEOPLE IMAGINE ROBOTS] This project analyzes why people are intrigued by the thought of robots, and why they choose to create them in both reality and fiction. Numerous movies, literature, news articles, online journals, surveys, and interviews have been used in determining the answer.

Table of Contents
• Table of Figures
• Introduction
• Literature Review
• Definition of a Robot
• Sources of Robots in Literature
• Online Lists -

Rittenhouse Netflix Lost in Space Season 1 Trading Cards

Distributor Information Sheet: LOST IN SPACE (Netflix) Season One Trading Cards

Release Date: December 4, 2019
Order Due Date: October 30, 2019
12 Boxes Per Case, 24 Packs Per Box, 5 Cards Per Pack
Limited Edition of 250 Cases
2 “Big Hits” Per Box!

Featuring DUAL SIGNATURE CARDS, SINGLE SIGNATURE CARDS, RELIC CARDS, and SKETCH CARDS

Partial List of Dual Autograph Combinations (Minimum of 2 Dual Autographs in Every Case):
• Maxwell Jenkins (Will Robinson)/Toby Stephens (John Robinson)
• Bill Mumy (Dr. Smith)/Parker Posey (Dr. Smith)
• Parker Posey (Dr. Smith)/Maxwell Jenkins (Will Robinson)
• Mina Sundwall (Penny Robinson)/Ajay Friese (Vijay Dhar)
• Ignacio Serricchio (Don West)/Anna Maria Demara (Tam Soderquist)

Partial List of Single Autograph Signers:
• Maxwell Jenkins (Will Robinson)
• Bill Mumy (Dr. Zachary Smith)
• Toby Stephens (John Robinson)
• Raza Jaffrey (Victor Dhar)
• Molly Parker (Maureen Robinson)
• Ajay Friese (Vijay Dhar)
• Mina Sundwall (Penny Robinson)
• Cary Tagawa (Hiroki Watanabe)
• Taylor Russell (Judy Robinson)
• Kiki Sukezane (Aiko Watanabe)
• Parker Posey (Dr. Smith)
• Sibo Mlambo (Angela)
• Ignacio Serricchio (Don West)
• and more!

Set Composition: 72 Base cards, including 6 plot synopsis cards for each episode!

Bonus sets include:
• 8 Lost In Space Character Cards, including THE ROBOT (1:48 Packs)
• 8 Metal Character Parallel Cards (1:96 Packs)
• 6 Lost In Space/Jupiter Cards (1:48 Packs)
• 6 Lost In Space/Chariot Cards (1:48 Packs)
• 10 Quotable Lost In Space Cards (1:24 Packs)
• 8 Juan Ortiz/Lost In Space Character Art Cards (1:48 Packs)
• 10 Juan Ortiz/Lost In Space Episode Cards (1:24 Packs)
• 8 Lost In Space Relic Cards
• One-of-a-kind Lost In Space Sketch Cards, including Artists Carlos Cabaleiro, Warren Martineck, Charles Hall and Louise Draper

9-Case Incentive: Toby Stephens (John Robinson)/Molly Parker (Maureen Robinson) Dual Autograph Card

18-Case Incentive: LOST IN SPACE (Netflix) Season One Trading Cards ARCHIVE BOX, Featuring Several EXCLUSIVE BONUS CARDS:
• 2 Sets of 4 Color Printing Plates, each set used to make the front of one card
• Bill Mumy (Dr. -

Lost in Space

Lost in Space

US TV series: 1965-68; CBS / Irwin Allen; 83 x 50 min; prod: Irwin Allen

Cast: Billy Mumy; Jonathan Harris; Guy Williams; June Lockhart; Marta Kristen; Angela Cartwright; Mark Goddard; Bob May (as the robot)

Ref: Pages Sources Stills Words Ω 8 M Copy on VHS Last Viewed
2852a 10 7 41 3,196 - - - - No Pre 1984

Still caption: Beset on all sides by Barbie dolls and beach hunks, Dr Smith finds solace with the only other fallible human for light years around. Yep! That’s you, Will! Source for all stills except where indicated – Bill Mumy’s home website

Halliwell’s Television Companion review: “A family of the future, on its way to colonise Alpha Centauri, is shipwrecked on an unknown planet. Cheerfully studio-bound adventure, mainly for kids: too much talk for grown-ups.”

The Golden Age of Children’s Television comment: “The king of American science fiction was Irwin Allen, who brought us the futuristic adventures of super submarine Seaview, commanded by Admiral Harriman Nelson (Richard Basehart), in "Voyage to the Bottom of the Sea"… Allen’s next project was "Lost in Space", made in 1965 but set in 1997. It followed the trials and tribulations of the Space Family Robinson as they set out to colonise a distant planet, only for their plans to be scuppered by fiendish enemy agent Colonel […] through history in "The Time Tunnel", Allen came up with last of his sixties classics, "Land of the Giants", where the spaceship Spindrift, on a routine 1983 shuttle service, passed through a dense white cloud and emerged in a world in which everything was twelve times bigger. -

Film Essay for "Forbidden Planet"

Forbidden Planet

By Ian Olney

Visually stunning and thematically rich, Fred M. Wilcox’s “Forbidden Planet” is a landmark film in science-fiction cinema. Set in the twenty-third century, it tells the story of a United Planets space cruiser sent to the distant world of Altair IV to investigate the fate of a group of colonists with whom Earth has lost contact. Upon landing, the ship’s commander, J.J. Adams (Leslie Nielsen), and his crew learn that most of the colonists are dead, the victims of a mysterious planetary force. The sole survivors are a scientist, Dr. Edward Morbius (Walter Pidgeon), and his teenage daughter Altaira (Anne Francis), who live comfortably in a fortified home, their needs tended to by a mechanical servant, Robby the Robot. Morbius insists that he and Altaira are perfectly safe and demands that their would-be rescuers leave them in peace. That night, however, the space cruiser is sabotaged, temporarily stranding the commander and his crew on Altair IV. Adams eventually discovers that Morbius is responsible. Shortly after the colonists’ arrival, the scientist discovered ancient technology left behind by the Krell, an advanced alien race that once ruled the planet but destroyed themselves with a machine that gave form to their thoughts, including their subconscious fears and desires. Activating the machine, he unwittingly unleashed his own “monsters from the Id,” killing the other colonists, who, unlike him, wanted to return to Earth.

Photo caption: Anne Francis might have captivated adult males, but younger audiences thought Robby the Robot was the true star of the picture. Courtesy Library of Congress Collection.

[…]lars, it catapulted the genre to mainstream respecta[…] -

“The Reluctant Stowaway”

10 Episode 1: “The Reluctant Stowaway”

Written by Shimon Wincelberg (as S. Bar-David) (Script polishing by Anthony Wilson)
Directed by Anton M. Leader (as Tony Leader)
Produced by Buck Houghton (uncredited), with Jerry Briskin; Executive Producer: Irwin Allen

Plot outline from Lost in Space show files (Irwin Allen Papers Collection): In 1997, from the now desperately overcrowded Earth, the Robinson family and their pilot set off in the Jupiter 2 spaceship, as pioneers to colonize a distant planet circling Alpha Centauri. At blast-off, Smith, agent of an enemy power, who has programmed the Jupiter 2’s Robot to destroy the ship, is trapped aboard. The Robot is de-activated before completely carrying out his orders, but the ship is damaged and now far off-course, lost in another galaxy. Robinson is outside trying to mend the damaged scanner when his tether breaks, leaving him floating helplessly in space.

(Episode numbering and the order in which they are presented in this book are by air date. For Seasons One and Two it is also the order in which the episodes were produced. Lost in Space was unique in that it is one of the few filmed primetime series which – until the third season – aired its episodes in the order they were produced. This was done because each episode during the first two seasons was linked to the one which followed by means of the cliffhanger, and each cliffhanger had to be factored in to the overall running time of the episode.)

From the Script: (Rev. Shooting Final draft teleplay, July 22, 1965 draft) – Smith: “Aeolis 14 Umbra, come in please. -

Download Bob's Bio in PDF Form

BOB MAY

Zany is the word for this very versatile comedian who began his career in 1941 at the tender, impressionable age of two, working with his grandfather, Chic Johnson of the "HELLZAPOPPIN" comedy team of Olsen and Johnson. May worked with his family playing theaters, nightclubs, and hotel supper clubs all over the world, along with their many television shows. May left the show on two separate occasions. The first time was to go to New York City where, for a year, he performed in the Broadway hit "LIFE WITH FATHER" in the role of Harlin. The second time was when he was cast in an even bigger role for Uncle Sam. However, this did not curtail performing with his family when he could wrangle a furlough. Bob May's career was not impaired by the service, since the USO needed in-house performers when the outside shows could not reach the camps. Bob gave much to the entertainment of the soldiers, as he produced and performed in many productions during his stint. Bob's Hollywood debut came with the encouragement and confidence of the ever-popular Jerry Lewis. This was the first of nine pictures with Jerry that would set Bob firmly in Hollywood as an established and revered actor in many films, as seen in his resume. It was without question that Bob would find his way into the living rooms of the American home. On most any night he could be found performing in such shows as RED SKELTON, McHALE'S NAVY, and as The Robot in LOST IN SPACE. -

{Download PDF} Science Quest: Lost in Space

SCIENCE QUEST: LOST IN SPACE PDF, EPUB, EBOOK Dan Green | 48 pages | 01 Oct 2013 | QED PUBLISHING | 9781781711811 | English | London, United Kingdom Science Quest: Lost in Space PDF Book Industrial Revolution in the U. Much Ado About Nothing. Changing Seasons WebQuest. WWII Holocaust. Frog And Toad Are Friends 2. On the lower level were the atomic motors, which use a fictional substance called "deutronium" for fuel. Social Studies Fair. Maureen and John Robinson, barely surviving Hasting's murder attempt, secretly make it back aboard. Smith were unaware that their contemporaries back on Earth had already deemed their mission a failure. In "The Raft", Will improvised several miniature rockoons in an attempt to send an interstellar " message in a bottle " distress signal. An Ocean Pet. Maureen devises a launch plan by piloting the Jupiters like the Apollo program , with John and Don as pilots. Removing life support to trim extra weight, the Resolute signals they must leave orbit within 24 hours due to the approaching Hawking radiation. Sierra Leon. By playing a shell game , the Robinson children deceive the authorities and Will is able to get Scarecrow to Jupiter 2 , only to be stopped by Adler. Creepy, Crawly Critters. Let's Count in Spanish! The Robinsons leave their planet to escape a collision with a comet and find a ship full of frozen convicts. Maureen and Adler escort Will to the planet to find the Robot. Roller Coaster Designer. Many movies and series featuring droids and other AI entities try to make these non-human characters as emotional and as relatable as possible. -

Physical and Earth Sciences Newsletter Number 156 Friday April 20, 2012 Robots Are Grading Your Papers! the Chronicle of Higher

Physical and Earth Sciences Newsletter, Number 156, Friday April 20, 2012

Robots Are Grading Your Papers!

The Chronicle of Higher Education has an article about robots being used to grade student essays. Many of us find that a bit scary and wonder if a robot can really tease out the message in our student compositions. I know that I was not impressed with the very cut-and-dried attitude of computer grading for higher level thinking at a recent workshop. However, the writer of the article in the Chronicle (Marc Bousquet) makes an excellent point. He first establishes that computers do grade essays as well as humans. He then points out: The fact is: Machines can reproduce human essay-grading so well because human essay-grading practices are already mechanical. [Ouch!]

The article then ventures into the idea of robots teaching. We know that robots are already teaching; just look at the on-line homework help systems offered by various book publishers. The question I have is, how mechanical are we making our teaching? Is mechanical teaching a good or a bad thing? Is this really the future for teaching?

Please note the Department meeting at 12:30 on Monday. If you have some comments about robots teaching, or anything else, please bring them up at the meeting.

-Lou

Department News

Department Meeting: We will have a Department meeting at 12:30 on Monday in 202 Martin Hall. Yes, we will have pizza.

Final Grades for Spring 2012 Semester: Spring 2012 Final Grades are due Tuesday, May 1 at 1:15 pm.