"A Mixed-Method Study of Mobile Devices and Student Self-Directed Learning and Achievement During a Middle School STEM Activity" (2016)

Recommended publications

-

Title I Grants to Local Educational Agencies - UTAH Allocations Under the American Recovery and Reinvestment Act

Title I Grants to Local Educational Agencies - UTAH Allocations under the American Recovery and Reinvestment Act Title I Allocations LEA ID District Under the Recovery Act* 4900030 ALPINE SCHOOL DISTRICT 4,309,345 4900060 BEAVER SCHOOL DISTRICT 74,257 4900090 BOX ELDER SCHOOL DISTRICT 644,807 4900120 CACHE SCHOOL DISTRICT 744,973 4900150 CARBON SCHOOL DISTRICT 330,733 4900180 DAGGETT SCHOOL DISTRICT 0 4900210 DAVIS SCHOOL DISTRICT 4,402,548 4900240 DUCHESNE SCHOOL DISTRICT 272,453 4900270 EMERY SCHOOL DISTRICT 150,117 4900300 GARFIELD SCHOOL DISTRICT 62,504 4900330 GRAND SCHOOL DISTRICT 182,231 4900360 GRANITE SCHOOL DISTRICT 10,016,077 4900390 IRON SCHOOL DISTRICT 1,265,039 4900420 JORDAN SCHOOL DISTRICT 5,320,942 4900450 JUAB SCHOOL DISTRICT 112,187 4900480 KANE SCHOOL DISTRICT 81,202 4900510 LOGAN SCHOOL DISTRICT 688,880 4900540 MILLARD SCHOOL DISTRICT 212,086 4900570 MORGAN SCHOOL DISTRICT 0 4900600 MURRAY SCHOOL DISTRICT 331,218 4900630 NEBO SCHOOL DISTRICT 1,682,601 4900660 NORTH SANPETE SCHOOL DISTRICT 193,923 4900690 NORTH SUMMIT SCHOOL DISTRICT 56,093 4900720 OGDEN SCHOOL DISTRICT 2,760,123 4900750 PARK CITY SCHOOL DISTRICT 119,132 4900780 PIUTE SCHOOL DISTRICT 61,750 4900810 PROVO SCHOOL DISTRICT 2,032,682 4900840 RICH SCHOOL DISTRICT 22,972 4900870 SALT LAKE CITY SCHOOL DISTRICT 6,131,357 4900900 SAN JUAN SCHOOL DISTRICT 1,016,975 4900930 SEVIER SCHOOL DISTRICT 333,355 4900960 SOUTH SANPETE SCHOOL DISTRICT 214,223 4900990 SOUTH SUMMIT SCHOOL DISTRICT 41,135 4901020 TINTIC SCHOOL DISTRICT 24,587 4901050 TOOELE SCHOOL DISTRICT 606,343 4901080 UINTAH SCHOOL DISTRICT 401,201 4901110 WASATCH SCHOOL DISTRICT 167,746 4901140 WASHINGTON SCHOOL DISTRICT 2,624,864 4901170 WAYNE SCHOOL DISTRICT 49,631 4901200 WEBER SCHOOL DISTRICT 1,793,991 4999999 PART D SUBPART 2 0 * Actual amounts received by LEAs will be smaller than shown here due to State-level adjustments to Federal Title I allocations. -

27. Planning & Student Services Manual 2016-17

Jordan School District Patrice A. Johnson, Ed. D., Superintendent of Schools West Jordan, UT 84084 Department Of Planning & Student Services MANUAL 2016-17 P&SS Manual 2016-17 – August 3, 2016 i Jordan School District PLANNING AND STUDENT SERVICES 2016-17 Table of Contents (Yellow highlight indicates item is new or has been changed/updated this year.) Contents TABLE OF CONTENTS ........................................................................................................................................... II PLANNING AND STUDENT SERVICES ............................................................................................................... 1 DIRECTORY ............................................................................................................................................................... 1 ATTENDANCE ACCOUNTING-ELEMENTARY .................................................................................................. 2 PUPIL PROGRESS REPORT FOR STUDENT ATTENDANCE ........................................................................ 3 DATE OF WITHDRAWAL FOR STUDENTS – TEN-DAY RULE ..................................................................... 3 DROPOUT BY ETHNICITY, GRADE AND GENDER INSTRUCTIONS .......................................................... 5 DROPOUT ................................................................................................................................................................................ 5 REPORTING DROPOUTS .......................................................................................................................................................... -

Pre-K-6 Elementary Social Studies Standards Revision Process Update January 7, 2020

Pre-K-6 Elementary Social Studies Standards Revision Process Update January 7, 2020 The elementary social studies revision is currently in step six of the standards revision process. The social studies standards writing team, comprised of experienced elementary teachers and LEA social studies specialist, has met as a full committee four times, in addition to their own smaller grade-band meetings. This work has been carried out even as these teachers have adjusted to the realities of the pandemic and is a testament to their dedication to this revision process. Committee members report that they are very excited about changes they are proposing to the standards. These changes are driven by: •Attentiveness to all of the standards review committee recommendations •Commitment to deeper levels of cognitive rigor whenever appropriate •Consistent attention to natural connections to other disciplines, e.g. science, fine arts, or ELA standards •Clarity regarding what they consider to be essential standards •Dedication to ensuring that all standards are assessable Rigor is a constant theme in the standards conversation. In our most recent full team meeting, the kindergarten and first grade writers were sharing how excited they are about the depth of thinking that will be required of their students in their revision, and how it is shifting towards a more cognitively rich document that will allow for standards-based assessment of authentic knowledge and skills. They are also paying close attention to the reading, writing, speaking, and listening demands called upon in English Language Arts (ELA), and want to reinforce the ELA standards whenever possible. They were updated on the recent Fordham Foundation report linking high-quality social studies instruction to increases in literacy gains. -

OCTOBER 8, 2019 Alpine School District's Board Of

MINUTES OF THE STUDY SESSION – OCTOBER 8, 2019 Alpine School District’s Board of Education met in a study session on Tuesday, October 8, 2019 at 4:00 P.M. The study session took place in the board room at the Alpine School District Office. Board members present: Board President S. Scott Carlson, Vice President Mark J. Clement, Sarah L. Beeson, Amber L. Bonner, Sara M. Hacken, Julie E. King, and Ada S. Wilson. Also present: Superintendent Samuel Y. Jarman, Business Administrator Robert W. Smith, and members of the administrative staff. There were approximately ten others in attendance. Review of 2016 Bond Accountability Presentation Assistant to the Superintendent, Kimberly Bird, reviewed a draft of the accountability presentation for the 2016 bond. When completed, this presentation will be taken out to the PTA and SCC members in all of our schools. Review Procedures for Advisors and Coaches of Extracurricular (PACE) Assistant Superintendent, Rhonda Bromley, reviewed the procedures for advisors and coaches of extracurricular (PACE) with the board members. PACE is a positive, simple resource for administrators to use. The meeting adjourned at 5:30 P.M. MINUTES OF THE BOARD MEETING – OCTOBER 8, 2019 Alpine School District’s Board of Education met in a regularly scheduled board meeting on Tuesday, October 8, 2019 at 6:00 P.M. The meeting took place in the board room at the Alpine School District Office. Board members present: Board President S. Scott Carlson, Vice President Mark J. Clement, Sarah L. Beeson, Amber L. Bonner, Sara M. Hacken, Julie E. King, and Ada S. Wilson. -

Career and Technical Education Regions and Local Education Agencies

CAREER AND TECHNICAL EDUCATION BEAR RIVER REGION SOUTHEAST REGION WASATCH FRONT SOUTH REGION Fast Forward Charter High School CARBON SCHOOL DISTRICT AMES InTech Collegiate High School Carbon High School American Academy of Innovation BOX ELDER SCHOOL DISTRICT Castle Valley Center American Leadership Academy – West Valley Bear River High School Lighthouse High School Beehive Science and Technology Academy Box Elder High School EMERY SCHOOL DISTRICT East Hollywood High School Dale Young Community High Emery High School Itineris Early College High School CACHE SCHOOL DISTRICT Green River High School Providence Hall Charter School Real Salt Lake Academy Cache High School GRAND SCHOOL DISTRICT Roots Charter High School Green Canyon High School Grand County High School Mountain Crest High School Salt Lake School for the Performing Arts SAN JUAN SCHOOL DISTRICT Summit Academy High School Ridgeline High School Monticello High School Sky View High School Utah Virtual Academy Monument Valley High School Vanguard Academy LOGAN SCHOOL DISTRICT Navajo Mountain High School CANYONS SCHOOL DISTRICT Logan High School San Juan High School Alta High School RICH SCHOOL DISTRICT Whitehorse High School Brighton High School Rich High School SOUTHWEST REGION Canyons Technical Education Center (CTEC) CENTRAL REGION Success Academy Corner Canyon High School Diamond Ridge High School JUAB SCHOOL DISTRICT BEAVER SCHOOL DISTRICT Hillcrest High School Beaver High School Juab High School Jordan High School Milford High School MILLARD SCHOOL DISTRICT GRANITE SCHOOL -

Utah Educator Livescan Fingerprint Sites

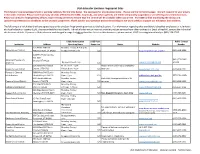

Utah Educator LiveScan Fingerprint Sites The Educator Licensing Department is working remotely for the time being. We apologize for any inconvenience. Please see the Contact Us page. We will respond to your emails in the order received. Please note that many services offered by the USBE, local LEAs, and other agencies are either temporarily suspended or are offering very limited services. Please call ahead to fingerprinting offices and/or testing centers to ensure that the service will be available when you arrive. The USBE will be monitoring the delays and considering extensions to deadlines as the situation progresses. Thank you for your patience and understanding as we work safely to support our educators and students. The following is a list of sites that have agreed to provide LiveScan fingerprinting services to Utah educators. For information regarding sites available to classified employees or volunteers at a local education agency (LEA), please contact the sites directly. Individual sites may or may not provide LiveScan services from other entities (i.e. Dept. of Health), contact the individual site for more details. If you are a Utah educator and charged a usage fee higher than that listed on this document, please contact USBE Licensing immediately at (801) 538-7740. USBE Authorization USBE Auth. Public Contact Institution Address Operating Hours Usage Fee Notes Website Number 575 North 100 East Monday - Friday; 9-4:30 p.m. Alpine School District American Fork, UT 84003 by appointment only $20 http://alpineschools.org/hr/ (801) -

2006 Annual Report

annual report 2006 1 Fit for Partnership ANNUAL REPORT IN SHAPE 2006 FITTING INOUTFIT IT’S A FIT DAVID O. MCKAY SCHOOL OF EDUCATION ~ BRIGHAM YOUNG UNIVERSITY CENTER FOR THE IMPROVEMENT OF TEACHER EDUCATION AND SCHOOLING BRIGHAM YOUNG UNIVERSITY -PUBLIC SCHOOL PARTNERSHIP 2 fit for partnership Fit for Partnership GLOSSARY CONTENTS FITTING IN 3 Message From The Director Sense or feeling 4 Fitting In of belonging 6 In Shape 8 Outfit IN SHAPE 10 It’s A Fit Prepared; to render competent or qualified 11 Alpine School District 12 Jordan School District 13 Nebo School District 14 Provo School District OUTFIT 15 Wasatch School District Equip or supply with necessities 16 BYU Arts & Sciences & David O. McKay School of Education 17 Grant Initiatives in the BYU-Public School Partnership 19 CITES & Partnership Leadership IT’S A FIT Appropriate or 19 Contact Information suitable to a particular need annual report 2006 3 MESSagE FROM THE DIRECTOR Center for the Improvement of Teacher Education and Schooling WHAT COMES TO YOUR MIND when someone asks you if you are fit? Do you think of your physical condition, your bank account balance, or maybe your mental preparation for a particular challenge? The idea of being fit or in shape generally refers to our current ability to meet the requirements of a particular need. Our confidence in succeeding in any given challenge is often related to our degree of fitness. Our work in the university-public school partnership is no different. The Partnership is composed of five school districts and multiple colleges across the BYU campus. -

2019-2020 Title I Schools

Utah Title I Schools 2019-2020 LEA School Grade Number District Name Number Building Name Span TA SW NEW Address City Zip 1 Alpine School District 107 Bonneville Elementary PK-6 SW 1245 North 800 West Orem 84057 1 Alpine School District 116 Central Elementary PK-6 SW 95 North 400 East Pleasant Grove 84062 1 Alpine School District 118 Cherry Hill Elementary PK-6 SW 250 East 1650 South Orem 84097 1 Alpine School District 128 Geneva Elementary PK-6 SW 665 West 400 North Orem 84057 1 Alpine School District 132 Greenwood Elementary PK-6 SW 50 East 200 South American Fork 84003 1 Alpine School District 161 Mount Mahogany Elementary PK-6 TA 618 N 1300 W Pleasant Grove 84062 1 Alpine School District 414 Orem Junior High School 7-9 TA 765 North 600 West Orem 84057 1 Alpine School District 168 Sharon Elementary PK-6 SW 525 North 400 East Orem 84097 1 Alpine School District 174 Suncrest Elementary PK-6 SW 668 West 150 North Orem 84057 1 Alpine School District 178 Westmore Elementary PK-6 SW 1150 South Main Orem 84058 1 Alpine School District 182 Windsor Elementary PK-6 SW 1315 North Main Orem 84057 2 Beaver School District 104 Belknap Elementary K-6 SW 30 West 300 North, P.O. Box 686 Beaver 84713 2 Beaver School District 108 Milford Elementary K-6 SW 450 South 700 West, P.O. Box 309 Milford 84751 2 Beaver School District 112 Minersville Elementary K-6 SW 450 South 200 West, P.O. Box 189 Minersville 84752 3 Box Elder School District 125 Discovery Elementary K-4 SW 810 North 500 West Brigham City 84302 3 Box Elder School District 150 Lake View Elementary -

FY 2017 Budget Will Continue the Police Vehicle Purchase Program

FY 2017 TENTATIVE ANNUAL BUDGET This page intentionally left blank American Fork City State of Utah Fiscal Year 2016-17 Budget The Government Finance Officers Association of the United States and Canada (GFOA) presented a Distinguished Budget Presentation Award to American Fork City, Utah for its annual budget for the fiscal year beginning July 1, 2014. In order to receive this award, a governmental unit must publish a budget document that meets program criteria as a policy document, as an operations guide, as a financial plan, and as a communications device. This award is valid for a period of one year only. We believe our current budget continues to conform to program requirements, and we are submitting it to GFOA to determine its eligibility for another award. American Fork City Elected Officials Councilman Councilman Mayor Mayor Pro-tem Councilman Councilman Kevin Barnes Rob Shelton James H. Hadfield Brad Frost Jeffrey Shorter Carlton Bowen Appointed Officials Historic City Hall City Administrator D. Craig Whitehead City Treasurer Laurel Allman City Recorder Chief of Police Lance Call Fire Chief Kriss Garcia City Attorney (Civil) Kasey Wright Civil Attorney (Criminal) Tucker Hansen AMERICAN FORK CITY Organizational Chart Citizens of American Fork City City Council Mayor City Administrator Legal Admin. Analyst Exec. Secretary Fire/EMS Public Works Broadband/ Parks and Rec Administratration Technology Police Library Planning Citizen Committees Chief Streets City Engineer Recreation Recorder Human Resources Finance/Budget Econ Develop./ Literacy Communications Chief Senior Citizens Water Fire marshall Chaplain Engineering Fitness Asst Recorder Payroll/Acc. Receivable Treasurer (2) Lieutenants Celebration Platoon Office Facilitator Sew er/Storm Inspections Cemetery Captains & Accounts Payable (7) Sergeants Drain Historical Firefighters/ Volunteer Committee Parks Utility Billing/ (8) Detectives EMTs Coordinator Buildings/Grounds Bus. -

ENROLLMENT History & Projection

ENROLLMENT History & Projection Published: November 26, 2019 575 North 100 East, American Fork, UT 84003 ENROLLMENT HISTORY & PROJECTION NOVEMBER 26, 2019 ALPINE SCHOOL DISTRICT 575 North 100 East American Fork, Utah 84003 www.alpineschools.org BOARD OF EDUCATION: S. Scott Carlson, President; Mark J. Clement, Vice-President Sarah Beeson; Amber Bonner; Sara M. Hacken; Julie King; Ada Wilson SUPERINTENDENT OF SCHOOLS: Samuel Y. Jarman BUSINESS ADMINISTRATOR: Robert W. Smith Prepared By: Alpine School District Business Services Team TABLE OF CONTENTS Enrollment & Projection Overview 1 Projection Summary with Charter History and High Schools 5 Projection Aggregate 13 Projection by Cluster 33 Enrollment History 59 October 1st Enrollment Statewide and Additional Charter School Information 71 Three Grade Total by High School 91 Birth and Census Data 105 Construction Growth Report 125 Enrollment & Projection Overview 1 2 Enrollment & Projection Overview • October 1, 2019 Actual Enrollment: • 81,532 Students • +1,676 from October 1, 2018 • +361 Elementary School • -5 Middle School (9th graders to Cedar Valley HS) • +1,341 High School (9th graders to Cedar Valley HS) • New Schools This Year: • Cedar Valley High School • Lake Mountain Middle School • Liberty Hills Elementary • Centennial Elementary • October 1, 2020 Projection: • 82,782 Students • +1,250 from October 1, 2019 • +97 Elementary School • +654 Middle School • +499 High School • Changes for Next Year: • New Elementary in Eagle Mountain (Silver Lake) (Future Projections NOT Included, Boundaries -

Ed Scott Mayor 1994–1997 by Petrea G

city Information Highland City Administration Offices 5378 West 10400 North, Highland, Utah 84003 www.highland.org 756-5751 | 756-6190 | 756-6903 Fax | 420-4860 pager City Emergency Services City Meeting Schedule Police (801) 756-9800 City Council meets every 1st and 3rd Tuesday Fire Department (801) 763-5365 of each month. The meeting starts at 7:00 p.m. County Dispatch (801) 375-3601 at City Hall (5378 West 10400 North). Animal Control (801) 756-9800 Emergency and Ambulance Services (801) 763-5365 Planning Commission meets every 2nd and 4th Public Safety Director (801) 763-5365 Tuesday of each month. The meeting starts at Administrative Assistant (801) 756-5751 7:00 p.m. at City Hall. Culinary Water (801) 492-6362 Pressurized Irrigation (801) 342-1471 Sewer (Timpanogos Service Sewer District) (801) 342-1471 Businesses in Highland The following services are provided by businesses in Highland: • Post Office • Banks • Grocery • Copy Center • LDS Clothing and Video Store • Dry Cleaning • Dining • Music store • Tire and service • Professional services including vision, • Oil and Lube dental, and health center • Convenience store with gasoline School Locations and Information School District School Address Phone / Fax Freedom Elementary Alpine 10326 N 6800 W, Highland, UT 84003 801-766-5270 / 801-766-5272 Highland Elementary Alpine 10865 N 6000 W, Highland, UT 84003 801-756-8537 / 801-763-7001 Ridgeline Elementary Alpine 6250 W 11800 N, Highland, UT 84003 801-492-0401 / 801-492-0263 Mountain Ridge Jr High Alpine 5525 W 10400 N, Highland, UT 84003 801-763-7010 / 801-763-7018 Lone Peak HS Alpine 10189 N 4800 W, Highland, UT 84003 801-763-7050 / 801-763-7064 Park Reservation Timpanogos Cave American Fork Canyon Fees National Monument To reserve a city park, get a Reserva- Entrance into American Fork Canyon is tion Request form at the City Hall. -

Summary of Participating Employers

Utah Retirement Systems Summary of Participating Employers N / Public Employees Retirement System — Noncontributory • C / Public Employees Retirement System — Contributory PS / Public Safety Retirement System • F / Firefighters Retirement System • T / Tier 2 Retirement Systems D / 457 Plan • K / 401(k) Plan Employer N C PS F T D K Employer N C PS F T D K School Districts and Education Employers Park City School District .................................... N C T D K Piute School District............................................ N C T K Academy for Math, Engineering Provo School District .......................................... N C T D K and Science Charter School ........................... N T K Recreation and Habilitation Services ............... N T K Active Re-Entry Incorporated ........................... N T K Rich School District ............................................. N C T K Alpine School District ......................................... N C T D K Salt Lake Arts Academy ..................................... N T Alpine Uniserv .................................................... N T D K Salt Lake Community College .......................... N C T D K American Leadership Academy ....................... N T K Salt Lake School District ..................................... N C T D K Beaver School District ......................................... N T K Salt Lake/Tooele Applied Technical Center .... N T K Bonneville Uniserv .............................................. N T D K San Juan School District ....................................