Exploring Ontario Universities' Strategic Mandate Agreements

Total pages: 16

File type: PDF, Size: 1020 KB

Recommended publications

Assessing the Influence of First Nation Education Counsellors on First Nation Post-Secondary Students and Their Program Choices

Assessing the Influence of First Nation Education Counsellors on First Nation Post-Secondary Students and their Program Choices by Pamela Williamson A dissertation submitted in conformity with the requirements for the degree of Doctor of Higher Education Graduate Department of Theory and Policy Studies in Education Ontario Institute for Studies in Education of the University of Toronto © Copyright by Pamela Williamson (2011) Assessing the Influence of First Nation Education Counsellors on First Nation Post-Secondary Students and their Post-Secondary Program Choices Doctor of Higher Education 2011 Pamela Williamson Department of Theory and Policy Studies in Education University of Toronto Abstract The exploratory study focused on First Nation students and First Nation education counsellors within Ontario. Using an interpretative approach, the research sought to determine the relevance of the counsellors as a potentially influencing factor in the students' post-secondary program choices. The ability of First Nation education counsellors to be influential is a consequence of their role since they administer Post-Secondary Student Support Program (PSSSP) funding. A report evaluating the program completed by Indian and Northern Affairs Canada in 2005 found that many First Nation students would not have been able to achieve post-secondary educational levels without PSSSP support. Eight self-selected First Nation education counsellors and twenty-nine First Nation post-secondary students participated in paper surveys, and five students and one counsellor agreed to complete a follow-up interview. The quantitative and qualitative results revealed differences in the perceptions of the two survey groups as to whether First Nation education counsellors influenced students' post-secondary program choices. -

Research Board Minutes

Research Board Minutes Date: January 27, 2021 Time: 10:00 am – 12:00 pm Place: Google Meets Attendees: K. Atkinson, B. Chang, C. Davidson, A. Eamer, G. Edwards, S. Forrester, J. Freeman, L. Jacobs (Chair), M. Lemonde, S. Rahnamayan, L. Roy, V. Sharpe (secretary), A. Slane Guest(s): Regrets: 1. Approval of the agenda Approved by consensus. 2. Approval of previous meeting’s minutes Approved by consensus. 3. Report of the Vice President Research & Innovation Canada Research Chairs - L. Jacobs shared that both our CRC applications have been approved. He thanked Laura Rendl, Jenn Freeman and the Dean’s offices in FBIT and FEAS as well as individual faculty members for the immense work that went into the applications. There is currently an embargo on releasing the names of the CRCs but we are able to share internally that the FEAS Tier 1 is starting May 1 and the FBIT Tier 2 is starting on June 1. Many people have made significant moves to get them involved in the university research enterprise already. The Tier 1 is already integrated into a number of diverse initiatives including our partnership with the University of Miami. We have plans to get the Tier 2 involved in a number of projects such as project arrow and cybersecurity initiatives. It would be ideal to have them ready to hit the ground running when they start at the university. ACTION: If you have ideas about where they might fit in with your research or your faculty’s research reach out to L. Jacobs and he can connect you. -

Digital Fluency Expression of Interest

January 6, 2021 Digital Fluency Expression of Interest Please review the attached document and submit your application electronically according to the guidelines provided by 11:59 pm EST on February 3, 2021. Applications will not be accepted unless: • Submitted electronically according to the instructions. Submission by any other form such as email, facsimile or paper copy mail will not be accepted. • Received by the date and time specified.
Key Dates:
• January 6, 2021: Expression of Interest released.
• February 3, 2021, 11:59 pm EST: Closing date and time for submissions. Submissions received after the closing date and time will not be considered for evaluation.
• By February 28, 2021: Successful applicants notified.
Please note: due to the volume of submissions received, unsuccessful applicants will not be notified. Feedback will not be provided. eCampusOntario will not be held responsible for documents that are not submitted in accordance with the above instructions. NOTE: Awards for this EOI are contingent upon funding from MCU.
TABLE OF CONTENTS 1. BACKGROUND 2. DESCRIPTION: WHAT IS DIGITAL FLUENCY? 3. PROJECT TYPE -

Eurasia Version

Our Total Care Education System® • Fosters Student Success • Delivers Peace of Mind to Parents Class of 2020 Eurasia Version Proud Success at Top Universities
“You made a good choice coming to CIC. This is one of University of Waterloo's largest sources of students anywhere in the world, not largest sources of international students, the largest sources of students.” Andrea Jardin, Associate Registrar, Admissions, University of Waterloo
“In 2009 an additional partnership was formed to guarantee admission to all Columbia students who meet McMaster's admission requirements. As a result, every year McMaster accepts many Columbia graduates into challenging programs to help achieve their education and career goals.” Melissa Pool, University Registrar, McMaster University
“For the University of Toronto, CIC is our largest feeder school in the entire world, period. Not one of them, it is the largest school, domestic or international. So, CIC has a huge impact on our university and we're very proud of that. We've been associated with CIC for many years. We found the graduates to be excellent.” Ken Withers, Director, Office of Student Recruitment, University of Toronto
Founded 1979 TOTAL CARE EDUCATION SYSTEM® SUCCESS STORIES 2020 Grads $ 9,528,850 CAD Our Class of 2020 Top Graduates Enter the World’s Best Universities with Competitive Scholarships Tobi Ayodele Madi Burabayev Ngozi Egbunike Anh Phu Tran Yang Yijun Sizova Veronika Imperial College London Ivey Business School Purdue University University of Toronto University of Waterloo University -

MRS Prerequisite: Physics

MRS Prerequisite: Physics Undergraduate courses which are equivalent to U of T’s PHY131H1 (Introduction to Physics I) or courses that include topics such as classical kinematics and dynamics, energy, force, angular momentum, oscillations and/or waves will usually be sufficient to satisfy the Physics prerequisite requirement. To determine course equivalencies, please refer to U of T’s Transfer Explorer tool: http://transferex.utoronto.ca. Please note that courses intended for non-scientists with a focus on general concepts of Physics (or astronomy) will not typically provide an adequate foundation for the MRS Program and therefore are not considered appropriate for admission purposes. Prerequisite courses must be part of (or transferable to) an undergraduate degree program; we are not able to consider diploma or certificate program courses for admission purposes. Listed below are popular Physics courses that are appropriate for admission purposes. If you have completed, or plan to complete, a course not listed below (or not equivalent to a course listed below), please forward the course code and course syllabus (if available) to [email protected] for review.

| Institution | Course | Institution | Course |
| --- | --- | --- | --- |
| Athabasca University | PHYS200 | University of Guelph | PHYS1000 |
| Brock University | KINE3P10 | University of Guelph | PHYS1010 |
| Brock University | PHYS1P21/1P91 | University of Guelph | PHYS1070 |
| Brock University | PHYS1P23 | University of Guelph | PHYS1080 |
| Brock University | PHYS1P93 | University of Guelph | SCMA2080 |
| Carleton University | PHYS1003 | University of Ottawa | - |

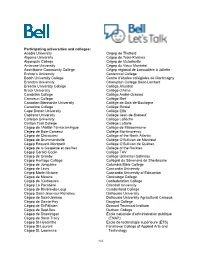

Participating Universities and Colleges: Acadia University Algoma University Algonquin College Ambrose University Assiniboine C

Participating universities and colleges: Acadia University Cégep de Thetford Algoma University Cégep de Trois-Rivières Algonquin College Cégep de Victoriaville Ambrose University Cégep du Vieux Montréal Assiniboine Community College Cégep régional de Lanaudière à Joliette Bishop’s University Centennial College Booth University College Centre d'études collégiales de Montmagny Brandon University Champlain College Saint-Lambert Brescia University College Collège Ahuntsic Brock University Collège d’Alma Cambrian College Collège André-Grasset Camosun College Collège Bart Canadian Mennonite University Collège de Bois-de-Boulogne Canadore College Collège Boréal Cape Breton University Collège Ellis Capilano University Collège Jean-de-Brébeuf Carleton University Collège Laflèche Carlton Trail College Collège LaSalle Cégep de l’Abitibi-Témiscamingue Collège de Maisonneuve Cégep de Baie-Comeau Collège Montmorency Cégep de Chicoutimi College of the North Atlantic Cégep de Drummondville Collège O’Sullivan de Montréal Cégep Édouard-Montpetit Collège O’Sullivan de Québec Cégep de la Gaspésie et des Îles College of the Rockies Cégep Gérald-Godin Collège TAV Cégep de Granby Collège Universel Gatineau Cégep Heritage College Collégial du Séminaire de Sherbrooke Cégep de Jonquière Columbia Bible College Cégep de Lévis Concordia University Cégep Marie-Victorin Concordia University of Edmonton Cégep de Matane Conestoga College Cégep de l’Outaouais Confederation College Cégep La Pocatière Crandall University Cégep de Rivière-du-Loup Cumberland College Cégep Saint-Jean-sur-Richelieu Dalhousie University Cégep de Saint-Jérôme Dalhousie University Agricultural Campus Cégep de Sainte-Foy Douglas College Cégep de St-Félicien Dumont Technical Institute Cégep de Sept-Îles Durham College Cégep de Shawinigan École nationale d’administration publique Cégep de Sorel-Tracy (ENAP) Cégep St-Hyacinthe École de technologie supérieure (ÉTS) Cégep St-Laurent Fanshawe College of Applied Arts and Cégep St. -

Faculty of Business & Information Technology

ONTARIO TECH UNIVERSITY FACULTY OF BUSINESS & INFORMATION TECHNOLOGY CLASS OF 2020 CONVOCATION VIRTUAL CONVOCATION CEREMONY WEDNESDAY, JUNE 23, 2021 CONGRATULATIONS GRADUATES! CONVOCATION CELEBRATES THE SUCCESS OF OUR STUDENTS—THEIR SUCCESS AT ONTARIO TECH AS WELL AS THE ACCOMPLISHMENTS THEY WILL ACHIEVE IN THE FUTURE. We can all take great pride in this moment. After all, each of us—parents and friends, professors, academic advisors, members of the board—has helped to ensure the academic success of our students. Although we’re celebrating in a different format than past Convocations, our sentiment remains the same. We’re proud of our students and we know they’re well equipped to meet the challenges of today and in the future.
CHANCELLOR The Chancellor serves as the titular head of the university, presiding over Convocation and conferring all degrees, honorary degrees, certificates and diplomas on behalf of the university. The Chancellor advocates for the university’s vision as endorsed by the Board of Governors, and is an essential ambassador who advances the university’s external interests.
After graduating from York University with a Bachelor of Arts degree, Mr. Frazer received his Master of Arts degree from Brock University. He also holds a Bachelor of Law degree from Western University and a Master of Business Administration degree from Wilfrid Laurier University. Mr. Frazer’s awards and recognitions include Wilfrid Laurier University’s MBA Outstanding Executive Leadership Award; Western University Law School’s Ivan Rand Alumni Award; the Queen Elizabeth II Diamond Jubilee Medal; the Ted Rogers School of Management Honorary Alumni Award; and the -

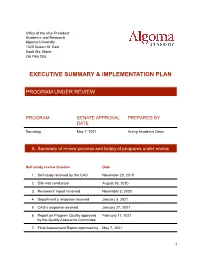

EXECUTIVE SUMMARY SOCI Program May

Office of the Vice President Academic and Research, Algoma University, 1520 Queen St. East, Sault Ste. Marie ON P6A 2G4 EXECUTIVE SUMMARY & IMPLEMENTATION PLAN Program under review: Sociology. Program Senate approval date: May 7, 2021. Prepared by: Acting Academic Dean.
A. Summary of review process and listing of programs under review
Self-study review timeline:
1. Self-study received by the CAO: November 23, 2019
2. Site visit conducted: August 26, 2020
3. Reviewers’ report received: November 2, 2020
4. Department’s response received: January 5, 2021
5. CAO’s response received: January 27, 2021
6. Report on Program Quality approved by the Quality Assurance Committee: February 11, 2021
7. Final Assessment Report approved by Senate: May 7, 2021
The members of the Review Committee were: ● Dr. Chris Sanders (Lakehead University) ● Dr. Alan Law (Trent University) The academic programs offered by the Department which were examined as part of the review included: ● Bachelor of Arts (Honours) Single Major Sociology ● Bachelor of Arts (Honours) Combined Major Sociology ● Bachelor of Arts (General) Single Major Sociology ● Bachelor of Arts (General) Combined Major Sociology ● Honours Diploma in Sociology ● Minor in Sociology and ● Human Development Minor. This review was launched under the terms and conditions of the IQAP approved by Senate on November 1, 2013 and ratified by the Quality Council on December 13, 2013. Steps following the submission of the departmental response followed the terms and conditions of the IQAP approved by Senate on September 8, 2017 and re-ratified by Quality Council on April 20, 2018.
B. Implementation Plan Below are the recommendations from the Review that require further actions, together with the specific unit or position responsible for executing it, action timelines and required resources. -

Student Transitions Project Web-Based Resources

Ontario Native Education Counselling Association Student Transitions Project Web-Based Resources
Index: 1. Schools and Education Institutions for First Nations, Inuit and Métis (Alternative Schools; First Nations Schools; Post-Secondary Institutions in Ontario) 2. Community Education Services 3. Aboriginal Student Centres, Colleges 4. Aboriginal Services, Universities 5. Organizations Supporting First Nations, Inuit and Métis 6. Language and Culture 7. Academic Support 8. For Counsellors and Educators 9. Career Support 10. Health and Wellness 11. Financial Assistance 12. Employment Assistance for Students and Graduates 13. Applying for Post-Secondary 14. Child Care 15. Safety 16. Youth Voices 17. Youth Employment 18. Advocacy in Education 19. Social Media 20. Other Resources
This document has been prepared by the Ontario Native Education Counselling Association, March 2011.
Section 1 – Schools and Education Institutions for First Nations, Métis and Inuit 1.1 Alternative schools, Ontario Contact the local Friendship Centre for an alternative high school near you Amos Key Jr. E-Learning Institute – high school course on line http://www.amoskeyjr.com/ Kawenni:io/Gaweni:yo Elementary/High School Six Nations Keewaytinook Internet High School (KiHS) for Aboriginal youth in small communities – on line high school courses, university prep courses, student awards http://kihs.knet.ca/drupal/ Matawa Learning Centre Odawa -

Media Release

MEDIA RELEASE FOR IMMEDIATE RELEASE August 25, 2021 ONTARIO TECH STUDENT UNION ESPORTS JOINS PREMIER ONTARIO COLLEGIATE LEAGUE Provides students with greater scholarship opportunities OSHAWA, ON — The Ontario Tech Student Union (OTSU), the organization representing over 10,000 students that attend Ontario Tech University, today announced that OTSU Esports has joined Ontario Post-Secondary Esports (OPSE), the preeminent league for collegiate-level esports in Ontario. Beginning in September 2021, OTSU Esports will be a member of the 2021/2022 OPSE league, competing in all five of the league’s games: Hearthstone, League of Legends, Overwatch, Rocket League, and Valorant. OTSU Esports joins other top universities in Ontario who compete in the OPSE, including Carleton University, Trent University, and the University of Windsor, all vying for five titles and $30,000 in scholarships. “We’re so excited to see OTSU Esports enter this league just one year after being formed — it’s truly a testament to just how fast our community is growing and evolving,” said OTSU President, Josh Sankaralal. “Esports teams have quickly become a mainstay for many university campuses across Canada in the last couple years, and the OTSU is absolutely thrilled to be competing among the best of them and contributing to the industry’s growth.” The OTSU’s esports community started as a campus club that grew into a fully-fledged service after the pandemic forced classes to be offered virtually in 2020 and has since grown to include over 60 varsity players, a Discord following of nearly 1000, and is actively competitive in eight games. -

Bachelor of Health Sciences (Honours) Kinesiology Major Student Handbook

Bachelor of Health Sciences (Honours) Kinesiology Major Student Handbook Last updated 6/11/2020
Table of Contents
SECTION 1: PROGRAM BACKGROUND
THE STUDY OF KINESIOLOGY
PROGRAM MISSION, VISION & OBJECTIVES
SECTION 2: FACULTY & STAFF
FULL-TIME KINESIOLOGY FACULTY
PROGRAM ADMINISTRATION
SECTION 3: CURRICULUM
1ST YEAR AT ONTARIO TECH UNIVERSITY
FITNESS AND HEALTH PROMOTION PATHWAY
OCCUPATIONAL THERAPY ASSISTANT (OTA) / PHYSICAL THERAPY ASSISTANT (PTA)
TRENT UNIVERSITY -

Ministry of Training, Colleges and Universities

Ministry of Training, Colleges and Universities, Strategic Policy and Programs Division GUIDELINES FOR WORKPLACE INSURANCE FOR POSTSECONDARY STUDENTS ON UNPAID WORK PLACEMENTS (Revised: June 2014)
Appendix A: Training Agencies for the Purposes of these Guidelines
Algoma University, Brock University, Carleton University, College of the Dominican or Friar Preachers of Ottawa, University of Guelph, Le Collège de Hearst, Lakehead University, Laurentian University, McMaster University, Northern Ontario Medical School, Nipissing University, Ontario College of Art & Design University, University of Ontario Institute of Technology, University of Ottawa, Queen's University, Ryerson University, University of Toronto, Trent University, University of Waterloo, University of Western Ontario, Wilfrid Laurier University, University of Windsor, York University, Algonquin College of Applied Arts and Technology, Cambrian College of Applied Arts and Technology, Canadore College of Applied Arts and Technology, Centennial College of Applied Arts and Technology, Collège Boréal d’arts appliqués et de technologie, Collège d’arts appliqués et de technologie La Cité collégiale, Conestoga College Institute of Technology and Advanced Learning, Confederation College of Applied Arts and Technology, Durham College of Applied Arts and Technology, Fanshawe College of Applied Arts and Technology, George Brown College of Applied Arts and Technology, Georgian College of Applied Arts and Technology, Humber College Institute of Technology and Advanced Learning, Lambton College of Applied Arts and Technology, Loyalist College of Applied Arts and Technology, Mohawk College of Applied Arts and Technology, Niagara College of Applied Arts and Technology, Northern College of Applied Arts and Technology, St. Clair College of Applied Arts and Technology, St.