A Low Power and Fast CMOS Arithmetic Logic Unit Nur

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Subchapter 2.4–HP Server rp5400 Series

Chapter 2: hp server rp5400 series. Subchapter 2.4: hp server rp5400 series. hp server rp5470

Table 2.4.1 HP Server rp5470 Specifications

| Specification | Value |
| --- | --- |
| Server model number | rp5470 |
| Server product number | A6144B |
| Number of processors | 1-4 |
| **Supported processors** | |
| PA-RISC PA-8700 processor | 650 and 750 MHz |
| Cache, instr/data per CPU (KB) | 750/1500 |
| Floating point coprocessor included | Yes |
| PA-RISC PA-8600 processor | 550 MHz |
| Cache, instr/data per CPU (KB) | 512/1024 |
| Floating point coprocessor included | Yes |
| TPM estimate (4 CPUs) | 34,500 |
| SPECweb99 (4 CPUs) | 3,750 |
| Min. memory | 256 MB |
| Max. memory capacity | 16 GB |
| **Internal disks** | |
| Max. disk mechanisms | 4 |
| Max. disk capacity | 292 GB |
| **Standard integrated I/O** | |
| Ultra2 SCSI–LVD | Yes |
| 10/100Base-T (RJ-45 connector) | Yes |
| RS-232 serial ports (multiplexed from DB-25 port) | 3 |
| Web Console (including 10Base-T port) | Yes |
| **I/O buses and slots** | |
| Total PCI slots (supports 66/33 MHz × 64/32 bits) | 10 |
| Slot types | 2 Hot-Plug Twin-Turbo (500 MB/s) and 6 Hot-Plug Turbo slots (250 MB/s) |
| **Max. network interface cards (cont.), see supported I/O table** | |
| ATM 155 Mb/s–MMF | 10 |
| ATM 155 Mb/s–UTP5 | 10 |
| ATM 622 Mb/s–MMF | 10 |
| 802.5 Token Ring 4/16/100 Mb/s | 10 |
| Dual port X.25/SDLC/FR | 10 |
| Quad port X.25/FR | 7 |
| FDDI | 10 |
| **Max. additional interface cards, see supported I/O table** | |
| 8 port Terminal Multiplexer | 4 |
| 64 port Terminal Multiplexer | 10 |
| Graphics/USB kit | 1 kit (2 cards) |
| Public Key Cryptography | 10 |
| HyperFabric | 7 |
| **Electrical characteristics** | |
| AC input power | 100-240V 50/60 Hz |
| Hotswap power supplies | 2 included, 3rd for N+1 |
| Redundant AC power inputs | 2 required, 3rd for N+1 |
| Current requirements at 200V | 6.5 A (shared across inputs) |
| Typical power dissipation (watts) | 1008 W |
| Maximum power dissipation (watts)¹ | 1360 W |
| Power factor at full load | .98 |
| kW rating for UPS loading¹ | 1.3 |
| Maximum heat dissipation (BTUs/hour)¹ | 4380 (3000 typical) |
| **Site preparation** | |
| Site planning and installation included | No |
| Depth (mm/inches) | 774 mm/30.5 |
| Width (mm/inches) | 482 mm/19 |
| Rack height (EIA/mm/inches) | 7 EIA/311/12.25 |
| Deskside height (mm/inches) | 368 mm/14.5 |

Weight (kg/lbs) Max. -

Analysis of GPGPU Programs for Data-Race and Barrier Divergence

Analysis of GPGPU Programs for Data-race and Barrier Divergence. Santonu Sarkar¹, Prateek Kandelwal², Soumyadip Bandyopadhyay³ and Holger Giese³. ¹ABB Corporate Research, India; ²MathWorks, India; ³Hasso Plattner Institute für Digital Engineering gGmbH, Germany. Keywords: Verification, SMT Solver, CUDA, GPGPU, Data Races, Barrier Divergence. Abstract: Today's business and scientific applications have a high computing demand due to increasing data size and the demand for responsiveness. Many such applications have a high degree of parallelism, and GPGPUs emerge as a fit candidate for the demand. GPGPUs can offer an extremely high degree of data parallelism owing to their architecture, which has many computing cores. However, unless the programs written to exploit the architecture are correct, the potential gain in performance cannot be achieved. In this paper, we focus on two important properties of programs written for GPGPUs, namely i) data-race conditions and ii) barrier divergence. We present a technique to identify the existence of these properties in a CUDA program using a static property verification method. The proposed approach can be used in tandem with the normal application development process to help the programmer remove bugs that can affect performance, and to improve the safety of a CUDA program. 1 INTRODUCTION With the order of magnitude increase in computing demand in the business and scientific applications, de… …ans that the program will never end up in an erroneous state, or will never stop functioning in an arbitrary manner, is a well-known and critical property that an operational system should exhibit (Lamport, 1977). -

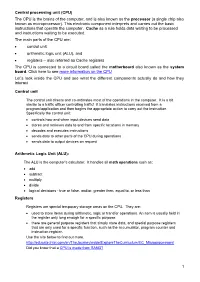

Soft Machines Targets IPC Bottleneck

SOFT MACHINES TARGETS IPC BOTTLENECK. New CPU Approach Boosts Performance Using Virtual Cores. By Linley Gwennap (October 27, 2014)

Coming out of stealth mode at last week's Linley Processor Conference, Soft Machines disclosed a new CPU technology that greatly improves performance on single-threaded applications. The new VISC technology can convert a single software thread into multiple virtual threads, which it can then divide across multiple physical cores. This conversion happens inside the processor hardware and is thus invisible to the application and the software developer. Although this capability may seem impossible, Soft Machines has demonstrated its performance advantage using a test chip that implements a VISC design.

Without VISC, the only practical way to improve single-thread performance is to increase the parallelism (instructions per cycle, or IPC) of the CPU microarchitecture. Taken to the extreme, this approach results in massive designs such as Intel's Haswell and IBM's Power8 that deliver industry-leading performance but waste power and die area.

…president/CTO Mohammad Abdallah. Investors include AMD, GlobalFoundries, and Samsung as well as government investment funds from Abu Dhabi (Mubadala), Russia (Rusnano and RVC), and Saudi Arabia (KACST and Taqnia). Its board of directors is chaired by GlobalFoundries CEO Sanjay Jha and includes legendary entrepreneur Gordon Campbell.

Soft Machines hopes to license the VISC technology to other CPU-design companies, which could add it to their existing CPU cores. Because its fundamental benefit is better IPC, VISC could aid a range of applications from…

[Figure labels: Application (sequential code); Single Thread; OS and Hypervisor; Standard ISA] -

Arithmetic and Logical Unit Design for Area Optimization for Microcontroller Amrut Anilrao Purohit 1,2 , Mohammed Riyaz Ahmed 2 and R

International Journal on Emerging Technologies 11(2): 668-673 (2020). ISSN No. (Print): 0975-8364. ISSN No. (Online): 2249-3255. Arithmetic and Logical Unit Design for Area Optimization for Microcontroller. Amrut Anilrao Purohit¹,², Mohammed Riyaz Ahmed² and R. Venkata Siva Reddy². ¹Research Scholar, VTU Belagavi (Karnataka), India. ²School of Electronics and Communication Engineering, REVA University Bengaluru (Karnataka), India. (Corresponding author: Amrut Anilrao Purohit) (Received 04 January 2020, Revised 02 March 2020, Accepted 03 March 2020) (Published by Research Trend, Website: www.researchtrend.net) ABSTRACT: The Arithmetic and Logic Unit (ALU) can be understood with basic knowledge of digital electronics, and any engineer will go through the details only once. The advantage of knowing the ALU in detail is two-fold: first, programming of the processing device can be efficient, and second, one can design a new ALU architecture according to the constraints of the use cases. The miniaturization of digital circuits can be achieved either by reducing the size of the transistor (Moore's law) or by optimizing the gate count of the circuit. The former has been explored extensively, while the latter, which deals with the application of Boolean rules and requires sound knowledge of logic design, has been ignored. The ultimate outcome is an area-optimized architecture/approach that optimizes the circuit at gate level. The design of an ALU for various processing devices varies with the device/system requirements. Area optimization plays a significant role in chip design. In this work, we have attempted to design an ALU which is area efficient while being loaded with additional functionality necessary for microcontrollers. -
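
To illustrate what gate-count optimization by Boolean rules can look like, here is a minimal Python sketch. It is not taken from the paper: the two expressions and the helper name `equivalent` are hypothetical, chosen only to show how a simplified expression that needs fewer gates can be checked against the original by exhaustive truth-table comparison.

```python
from itertools import product


def equivalent(f, g, n_inputs):
    """Return True if f and g agree on every combination of n_inputs Boolean inputs."""
    return all(
        f(*bits) == g(*bits)
        for bits in product([False, True], repeat=n_inputs)
    )


# Hypothetical original circuit: two AND gates, one NOT gate, one OR gate.
def original(a, b):
    return (a and b) or (a and not b)


# Simplified with the Boolean rule A.B + A.B' = A: no gates, just a wire.
def simplified(a, b):
    return a


if __name__ == "__main__":
    print(equivalent(original, simplified, 2))
```

Exhaustive comparison is only practical for a handful of inputs, since the table has 2^n rows; it is used here because it makes the equivalence easy to see, not because it is how a synthesis tool would do it.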

Unit 8 : Microprocessor Architecture

Unit 8: Microprocessor Architecture. Lesson 1: Microcomputer Structure

1.1. Learning Objectives. On completion of this lesson you will be able to: ♦ draw the block diagram of a simple computer ♦ understand the function of different units of a microcomputer ♦ learn the basic operation of the microcomputer bus system.

1.2. Digital Computer. A digital computer is a multipurpose, programmable machine that reads binary instructions from its memory, accepts binary data as input, processes data according to those instructions, and provides results as output.

1.3. Basic Computer System Organization. Every computer contains five essential parts or units. They are i. the arithmetic logic unit (ALU) ii. the control unit iii. the memory unit iv. the input unit v. the output unit.

1.3.1. The Arithmetic and Logic Unit (ALU). The arithmetic and logic unit (ALU) is that part of the computer that actually performs arithmetic and logical operations on data. All other elements of the computer system (control unit, registers, memory, I/O) are there mainly to bring data into the ALU to process and then to take the results back out. An arithmetic and logic unit and, indeed, all electronic components in the computer are based on the use of simple digital logic devices that can store binary digits and perform simple Boolean logic operations. Data are presented to the ALU in registers. These registers are temporary storage locations within the CPU that are connected by signal paths to the ALU. -

Computer Architecture Out-Of-Order Execution

Computer Architecture: Out-of-order Execution. By Yoav Etsion. With acknowledgement to Dan Tsafrir, Avi Mendelson, Lihu Rappoport, and Adi Yoaz. Computer Architecture 2013 – Out-of-Order Execution

The need for speed: Superscalar
• Remember our goal: minimize CPU Time. CPU Time = duration of clock cycle × CPI × IC
• So far we have learned that in order to: Minimize clock cycle ⇒ add more pipe stages; Minimize CPI ⇒ utilize pipeline; Minimize IC ⇒ change/improve the architecture
• Why not make the pipeline deeper and deeper? Beyond some point, adding more pipe stages doesn't help, because control/data hazards increase and become costlier
• (Recall that in a pipelined CPU, CPI = 1 only without hazards)
• So what can we do next? Reduce the CPI by utilizing ILP (instruction-level parallelism). We will need to duplicate HW for this purpose…

A simple superscalar CPU
• Duplicates the pipeline to accommodate ILP (IPC > 1)
• Note that duplicating HW in just one pipe stage doesn't help; e.g., when having 2 ALUs, the bottleneck moves to other stages [Figure: pipeline stages IF, ID, EXE, MEM, WB]
• Conclusion: getting IPC > 1 requires fetching, decoding, executing, and retiring more than one instruction per clock [Figure: pipeline stages IF, ID, EXE, MEM, WB]

Example: Pentium Processor
• Pentium fetches and decodes 2 instructions per cycle
• Before register file read, decide on pairing: can the two instructions be executed in parallel? (yes/no) [Figure: u-pipe and v-pipe after IF and ID]
• Pairing decision is based… On data -
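
The CPU time relation quoted in this excerpt (CPU Time = clock cycle duration × CPI × IC) can be worked through numerically. The sketch below is only an illustration: the function name `cpu_time` and all the numbers (a 1 ns cycle, one million instructions, CPI of 1.0 versus 0.5) are assumptions made up for the example, not figures from the slides.

```python
def cpu_time(clock_cycle_s, cpi, instruction_count):
    """CPU time in seconds = clock cycle duration x cycles per instruction x instruction count."""
    return clock_cycle_s * cpi * instruction_count


# Assumed workload: 1,000,000 instructions on a machine with a 1 ns clock cycle.
baseline = cpu_time(1e-9, 1.0, 1_000_000)     # ideal scalar pipeline, CPI = 1
superscalar = cpu_time(1e-9, 0.5, 1_000_000)  # ideal 2-wide superscalar, IPC = 2, so CPI = 0.5

speedup = baseline / superscalar

if __name__ == "__main__":
    print(f"baseline: {baseline:.6f} s, superscalar: {superscalar:.6f} s, speedup: {speedup:.1f}x")
```

With the cycle time and instruction count held fixed, halving the CPI halves the CPU time, which is the motivation the slides give for pursuing IPC > 1.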

Performance of a Computer (Chapter 4) Vishwani D

ELEC 5200-001/6200-001 Computer Architecture and Design, Fall 2013. Performance of a Computer (Chapter 4). Vishwani D. Agrawal & Victor P. Nelson, Department of Electrical and Computer Engineering, Auburn University, Auburn, AL 36849

What is Performance? Response time: the time between the start and completion of a task. Throughput: the total amount of work done in a given time. Some performance measures: MIPS (million instructions per second). MFLOPS (million floating point operations per second), also GFLOPS, TFLOPS (10^12), etc. SPEC (Standard Performance Evaluation Corporation) benchmarks. LINPACK benchmarks, floating point computing, used for supercomputers. Synthetic benchmarks.

Small and Large Numbers

| Small | Prefix | Symbol | Large | Prefix | Symbol |
| --- | --- | --- | --- | --- | --- |
| 10^-3 | milli | m | 10^3 | kilo | k |
| 10^-6 | micro | μ | 10^6 | mega | M |
| 10^-9 | nano | n | 10^9 | giga | G |
| 10^-12 | pico | p | 10^12 | tera | T |
| 10^-15 | femto | f | 10^15 | peta | P |
| 10^-18 | atto | | 10^18 | exa | |
| 10^-21 | zepto | | 10^21 | zetta | |
| 10^-24 | yocto | | 10^24 | yotta | |

Computer Memory Size

| Number | Value | Prefix | bits | bytes |
| --- | --- | --- | --- | --- |
| 2^10 | 1,024 | K | Kb | KB |
| 2^20 | 1,048,576 | M | Mb | MB |
| 2^30 | 1,073,741,824 | G | Gb | GB |
| 2^40 | 1,099,511,627,776 | T | Tb | TB |

Units for Measuring Performance. Time in seconds (s), microseconds (μs), nanoseconds (ns), or picoseconds (ps). Clock cycle: period of the hardware clock. Example: one clock cycle means 1 nanosecond for a 1 GHz clock frequency (or 1 GHz clock rate). CPU time = (CPU clock cycles)/(clock rate). Cycles per instruction (CPI): average number of clock cycles used to execute a computer instruction. -
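
The unit definitions at the end of this excerpt lend themselves to a short worked example. This is a sketch under stated assumptions: the function names and the sample workload (3 billion cycles, 2 billion instructions) are invented for illustration; only the 1 GHz / 1 ns pairing and the relation CPU time = clock cycles / clock rate come from the excerpt.

```python
def clock_period_s(clock_rate_hz):
    """Duration of one clock cycle in seconds."""
    return 1.0 / clock_rate_hz


def cpu_time_s(clock_cycles, clock_rate_hz):
    """CPU time = (CPU clock cycles) / (clock rate)."""
    return clock_cycles / clock_rate_hz


def average_cpi(clock_cycles, instruction_count):
    """Average number of clock cycles used per executed instruction."""
    return clock_cycles / instruction_count


if __name__ == "__main__":
    rate = 1e9  # 1 GHz, as in the excerpt's example
    print(clock_period_s(rate))       # one cycle lasts about 1 ns
    # Hypothetical program: 3e9 cycles spent executing 2e9 instructions.
    print(cpu_time_s(3e9, rate))      # seconds of CPU time
    print(average_cpi(3e9, 2e9))      # cycles per instruction
```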

Chap01: Computer Abstractions and Technology

CHAPTER 1 Computer Abstractions and Technology

1.1 Introduction; 1.2 Eight Great Ideas in Computer Architecture; 1.3 Below Your Program; 1.4 Under the Covers; 1.5 Technologies for Building Processors and Memory; 1.6 Performance; 1.7 The Power Wall; 1.8 The Sea Change: The Switch from Uniprocessors to Multiprocessors; 1.9 Real Stuff: Benchmarking the Intel Core i7; 1.10 Fallacies and Pitfalls; 1.11 Concluding Remarks; 1.12 Historical Perspective and Further Reading; 1.13 Exercises

CMPS290 Class Notes (Chap01) by Kuo-pao Yang

1.1 Introduction

Modern computer technology requires professionals of every computing specialty to understand both hardware and software.

Classes of Computing Applications and Their Characteristics

Personal computers
o A computer designed for use by an individual, usually incorporating a graphics display, a keyboard, and a mouse.
o Personal computers emphasize delivery of good performance to single users at low cost and usually execute third-party software.
o This class of computing drove the evolution of many computing technologies, which is only about 35 years old!

Server computers
o A computer used for running larger programs for multiple users, often simultaneously, and typically accessed only via a network.
o Servers are built from the same basic technology as desktop computers, but provide for greater computing, storage, and input/output capacity.

Supercomputers
o A class of computers with the highest performance and cost.
o Supercomputers consist of tens of thousands of processors and many terabytes of memory, and cost tens to hundreds of millions of dollars. -

Evolving GPU Machine Code

Journal of Machine Learning Research 16 (2015) 673-712. Submitted 11/12; Revised 7/14; Published 4/15

Evolving GPU Machine Code

Cleomar Pereira da Silva, Department of Electrical Engineering, Pontifical Catholic University of Rio de Janeiro (PUC-Rio), Rio de Janeiro, RJ 22451-900, Brazil; Department of Education Development, Federal Institute of Education, Science and Technology - Catarinense (IFC), Videira, SC 89560-000, Brazil
Douglas Mota Dias, Department of Electrical Engineering, Pontifical Catholic University of Rio de Janeiro (PUC-Rio), Rio de Janeiro, RJ 22451-900, Brazil
Cristiana Bentes, Department of Systems Engineering, State University of Rio de Janeiro (UERJ), Rio de Janeiro, RJ 20550-013, Brazil
Marco Aurélio Cavalcanti Pacheco, Department of Electrical Engineering, Pontifical Catholic University of Rio de Janeiro (PUC-Rio), Rio de Janeiro, RJ 22451-900, Brazil
Leandro Fontoura Cupertino, Toulouse Institute of Computer Science Research (IRIT), University of Toulouse, 118 Route de Narbonne, F-31062 Toulouse Cedex 9, France

Editor: Una-May O'Reilly

Abstract: Parallel Graphics Processing Unit (GPU) implementations of GP have appeared in the literature using three main methodologies: (i) compilation, which generates the individuals in GPU code and requires compilation; (ii) pseudo-assembly, which generates the individuals in an intermediary assembly code and also requires compilation; and (iii) interpretation, which interprets the codes. This paper proposes a new methodology that uses the concepts of quantum computing and directly handles the GPU machine code instructions. Our methodology utilizes a probabilistic representation of an individual to improve the global search capability. -

Central Processing Unit (CPU): The CPU Is the Brains of the Computer, and Is Also Known as the Processor (a Single Chip Also Known as a Microprocessor)

Central processing unit (CPU)

The CPU is the brains of the computer, and is also known as the processor (a single chip is also known as a microprocessor). This electronic component interprets and carries out the basic instructions that operate the computer. Cache, as a rule, holds data waiting to be processed and instructions waiting to be executed. The main parts of the CPU are the control unit, the arithmetic logic unit (ALU), and registers (also referred to as cache registers). The CPU is connected to a circuit board called the motherboard, also known as the system board. Let's look inside the CPU and see what the different components actually do and how they interact.

Control unit: The control unit directs and co-ordinates most of the operations in the computer. It is a bit like a traffic officer controlling traffic! It translates instructions received from a program/application and then begins the appropriate action to carry out the instruction. Specifically, the control unit: controls how and when input devices send data; stores and retrieves data to and from specific locations in memory; decodes and executes instructions; sends data to other parts of the CPU during operations; sends data to output devices on request.

Arithmetic Logic Unit (ALU): The ALU is the computer's calculator. It handles all math operations such as: add; subtract; multiply; divide; logical decisions (true or false, and/or, greater than, equal to, or less than).

Registers: Registers are special temporary storage areas on the CPU. They are: used to store items during arithmetic, logic or transfer operations. -
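
The operations this excerpt attributes to the ALU can be modelled as a small dispatch table. This is a toy sketch, not a description of any real processor: the operation names, the `alu` function, and the choice of integer (floor) division and bitwise and/or are all assumptions made for illustration.

```python
import operator

# Hypothetical operation table covering the operations listed in the excerpt.
OPERATIONS = {
    "add": operator.add,
    "subtract": operator.sub,
    "multiply": operator.mul,
    "divide": operator.floordiv,   # assumption: integer division
    "and": operator.and_,          # assumption: bitwise AND on integers
    "or": operator.or_,            # assumption: bitwise OR on integers
    "greater_than": operator.gt,
    "equal_to": operator.eq,
    "less_than": operator.lt,
}


def alu(op, a, b):
    """Apply the named operation to two integer operands and return the result."""
    if op not in OPERATIONS:
        raise ValueError(f"unsupported ALU operation: {op}")
    return OPERATIONS[op](a, b)


if __name__ == "__main__":
    print(alu("add", 6, 3))
    print(alu("and", 0b1100, 0b1010))
    print(alu("greater_than", 6, 3))
```

In this model the control unit's role would be to choose `op` and supply the operands from registers; the table only stands in for the arithmetic and comparison logic itself.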

Reading Sample

Embedded Robotics: Mobile Robot Design and Applications with Embedded Systems. By Thomas Bräunl. New edition 2008. Paperback, xiv, 546 pp. ISBN 978 3 540 70533 8. Format (W x L): 17 x 24.4 cm. Weight: 1940 g.

CENTRAL PROCESSING UNIT. The CPU (central processing unit) is the heart of every embedded system and every personal computer. It comprises the ALU (arithmetic logic unit), responsible for the number crunching, and the CU (control unit), responsible for instruction sequencing and branching. Modern microprocessors and microcontrollers provide on a single chip the CPU and a varying degree of additional components, such as counters, timing coprocessors, watchdogs, SRAM (static RAM), and Flash-ROM (electrically erasable ROM). Hardware can be described on several different levels, from low-level transistor-level to high-level hardware description languages (HDLs). The so-called register-transfer level is somewhat in-between, describing CPU components and their interaction on a relatively high level. We will use this level in this chapter to introduce gradually more complex components, which we will then use to construct a complete CPU. With the simulation system Retro [Chansavat Bräunl 1999], [Bräunl 2000], we will be able to actually program, run, and test our CPUs. -

Reverse Engineering X86 Processor Microcode

Reverse Engineering x86 Processor Microcode. Philipp Koppe, Benjamin Kollenda, Marc Fyrbiak, Christian Kison, Robert Gawlik, Christof Paar, and Thorsten Holz, Ruhr-Universität Bochum. https://www.usenix.org/conference/usenixsecurity17/technical-sessions/presentation/koppe This paper is included in the Proceedings of the 26th USENIX Security Symposium, August 16–18, 2017, Vancouver, BC, Canada. ISBN 978-1-931971-40-9. Open access to the Proceedings of the 26th USENIX Security Symposium is sponsored by USENIX.

Abstract: Microcode is an abstraction layer on top of the physical components of a CPU and present in most general-purpose CPUs today. In addition to facilitating complex and vast instruction sets, it also provides an update mechanism that allows CPUs to be patched in-place without requiring any special hardware. While it is well-known that CPUs are regularly updated with this mechanism, very little is known about its inner workings given that microcode and the update mechanism are proprietary and have not been thoroughly analyzed yet.

…hardware modifications [48]. Dedicated hardware units to counter bugs are imperfect [36, 49] and involve non-negligible hardware costs [8]. The infamous Pentium fdiv bug [62] illustrated a clear economic need for field updates after deployment in order to turn off defective parts and patch erroneous behavior. Note that the implementation of a modern processor involves millions of lines of HDL code [55] and verification of functional correctness for such processors is still an unsolved problem [4, 29]. Since the 1970s, x86 processor manufacturers have