Computer Analysis of Human Belligerency

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Does Cyclical Explanation Provide Insight to Protracted Conflicts in Africa?

Arabian Journal of Business and Management Review (Nigerian Chapter) Vol. 3, No. 11, 2015 DOES CYCLICAL EXPLANATION PROVIDE INSIGHT TO PROTRACTED CONFLICTS IN AFRICA? David Oladimeji Alao, Ph.D Department of Political Science and Public Administration Veronica Adeleke School of Social Sciences, Babcock University, Ilishan-Remo, Ogun State +2348035572279. [email protected] Ngozi Nwogwugwu, PhD Department of Political Science and Public Administration Veronica Adeleke School of Social Sciences, Babcock University, Ilishan-Remo, Ogun State. [email protected] ABSTRACT Africa accounted for greater percentage of violent conflict globally since the end of the cold war. There had been resurgence of violent conflict in many nations after what had been presumed to be peaceful resolution of such conflicts. Among the countries that have had recurring violent conflicts are Mali, Central African Republic, Egypt among others. This had resulted in formulation of many theories, largely revolving around causative and redemptive measures. The resurgence of deep rooted and protracted conflicts informed the paper which examined the cyclical model of conflicts in Africa. The cyclical model points government and practitioners to the defects of the haphazard conflict resolution measures which show lack of political will to combine causative and redemptive measures in ensuring peaceful resolution of conflicts. INTRODUCTION The joy and expectations of nations in Africa becoming independent was short-lived as conflicts and crises of multidimensional nature dotted the whole map turning citizens to refugees within and outside their nations. According to DFID (2001) report, 10 of the twenty four nations of the World engulfed in direct violence or outright war between 1980 and 1994 were located in Africa. -

The Peace Vs. Justice Debate and the Syrian Crisis

American University Washington College of Law Digital Commons @ American University Washington College of Law Articles in Law Reviews & Other Academic Journals Scholarship & Research 2018 The Peace vs. Justice Debate and the Syrian Crisis Paul Williams Lisa Dicker C. Danae Paterson Follow this and additional works at: https://digitalcommons.wcl.american.edu/facsch_lawrev Part of the Human Rights Law Commons, International Humanitarian Law Commons, International Law Commons, Law and Gender Commons, and the Military, War, and Peace Commons THE PEACE VS. JUSTICE PUZZLE AND THE SYRIAN CRISIS Paul R Williams, Lisa K Dicker, C. Danae Paterson
I. INTRODUCTION
II. THE "PEACE-FIRST" APPROACH
A. Prioritizing Ending the Conflict
B. Benefits of the Peace-First Approach
1. Saving Lives
2. Ending Harm to the Environment and Infrastructure
3. Promoting Reconciliation
C. Achieving Peace-First in Practice
1. Singular Objective of Ending the Conflict
2. Negotiating with the Guys with Guns
3. Accommodation and Appeasement
4. Minimizing Justice
5. Amnesty
D. Case Studies
* Rebecca -

Boko Haram Beyond the Headlines: Analyses of Africa’s Enduring Insurgency

Boko Haram Beyond the Headlines: Analyses of Africa’s Enduring Insurgency Editor: Jacob Zenn Boko Haram Beyond the Headlines: Analyses of Africa’s Enduring Insurgency Jacob Zenn (Editor) Abdulbasit Kassim Elizabeth Pearson Atta Barkindo Idayat Hassan Zacharias Pieri Omar Mahmoud Combating Terrorism Center at West Point United States Military Academy www.ctc.usma.edu The views expressed in this report are the authors’ and do not necessarily reflect those of the Combating Terrorism Center, United States Military Academy, Department of Defense, or U.S. Government. May 2018 Cover Photo: A group of Boko Haram fighters line up in this still taken from a propaganda video dated March 31, 2016. COMBATING TERRORISM CENTER Director LTC Bryan Price, Ph.D. Deputy Director Brian Dodwell Research Director Dr. Daniel Milton Distinguished Chair LTG (Ret) Dell Dailey Class of 1987 Senior Fellow ACKNOWLEDGMENTS The editor thanks colleagues at the Combating Terrorism Center at West Point (CTC), all of whom supported this endeavor by proposing the idea to carry out a report on Boko Haram and working with the editor and contributors to see the project to its rightful end. In this regard, I thank especially Brian Dodwell, Daniel Milton, Jason Warner, Kristina Hummel, and Larisa Baste, who all directly collaborated on the report. I also thank the two peer reviewers, Brandon Kendhammer and Matthew Page, for their input and valuable feedback without which we could not have completed this project up to such a high standard. There were numerous other leaders and experts at the CTC who assisted with this project behind-the-scenes, and I thank them, too. Most importantly, we would like to dedicate this volume to all those whose lives have been affected by conflict and to those who have devoted their lives to seeking peace and justice. -

The Herodotos Project (OSU-Ugent): Studies in Ancient Ethnography

Faculty of Literature and Philosophy Julie Boeten The Herodotos Project (OSU-UGent): Studies in Ancient Ethnography Barbarians in Strabo’s ‘Geography’ (Abii-Ionians) With a case-study: the Cappadocians Master thesis submitted in fulfilment of the requirements for the degree of Master in Linguistics and Literature, Greek and Latin. 2015 Promotor: Prof. Dr. Mark Janse UGent Department of Greek Linguistics Co-Promotores: Prof. Brian Joseph Ohio State University Dr. Christopher Brown Ohio State University ACKNOWLEDGMENT In this acknowledgment I would like to thank everybody who has in some way been a part of this master thesis. First and foremost I want to thank my promotor Prof. Janse for giving me the opportunity to write my thesis in the context of the Herodotos Project, and for giving me suggestions and answering my questions. I am also grateful to Prof. Joseph and Dr. Brown, who have given Anke and me the chance to be a part of the Herodotos Project and who have consented to being our co-promotores. On a whole other level I wish to express my thanks to my parents, without whom I would not have been able to study at all. They have also supported me throughout the writing process and have read parts of the draft. Finally, I would also like to thank Kenneth, for being there for me and for correcting some passages of the thesis. Julie Boeten DUTCH SUMMARY This thesis was written within the framework of the Herodotos Project, an undertaking of Ohio State University in collaboration with UGent. The aim of the project is to build a database of all peoples known in antiquity. -

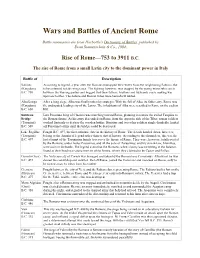

Wars and Battles of Ancient Rome

Wars and Battles of Ancient Rome Battle summaries are from Harbottle's Dictionary of Battles, published by Swan Sonnenschein & Co., 1904. Rise of Rome: 753 to 3911 B.C. The rise of Rome from a small Latin city to the dominant power in Italy. Battle of / Description
Sabines (Kingdom), B.C. 750: According to legend, a year after the Romans kidnapped their wives from the neighboring Sabines, the tribes returned to take vengeance. The fighting, however, was stopped by the young wives who ran in between the warring parties and begged that their fathers, brothers and husbands cease making war upon each other. The Sabine and Roman tribes were henceforth united.
Alba Longa (Kingdom), B.C. 650: After a long siege, Alba was finally taken by stratagem. With the fall of Alba, its father-city, Rome was the undisputed leading city of the Latins. The inhabitants of Alba were resettled in Rome on the Caelian Hill.
Sublician Bridge (Tarquinii), B.C. 509: Lars Porsenna, king of Clusium, was marching toward Rome, planning to restore the exiled Tarquins to the Roman throne. As his army descended on Rome from the opposite side of the Tiber, Roman soldiers worked furiously to destroy the wooden bridge. Horatius and two other soldiers single-handedly fended off Porsenna's army until the bridge could be destroyed.
Lake Regillus (Tarquinii): Fought B.C. 497, the first authentic date in the history of Rome. The details handed down, however, belong to the domain of legend rather than to that of history. According to the chroniclers, this was the B.C. -

Representing the Algerian Civil War: Literature, History, and the State

Representing the Algerian Civil War: Literature, History, and the State By Neil Grant Landers A dissertation submitted in partial satisfaction of the requirements for the degree of Doctor of Philosophy in French in the GRADUATE DIVISION of the UNIVERSITY OF CALIFORNIA, BERKELEY Committee in charge: Professor Debarati Sanyal, Co-Chair Professor Soraya Tlatli, Co-Chair Professor Karl Britto Professor Stefania Pandolfo Fall 2013 1 Abstract of the Dissertation Representing the Algerian Civil War: Literature, History, and the State by Neil Grant Landers Doctor of Philosophy in French Literature University of California, Berkeley Professor Debarati Sanyal, Co-Chair Professor Soraya Tlatli, Co-Chair Representing the Algerian Civil War: Literature, History, and the State addresses the way the Algerian civil war has been portrayed in 1990s novelistic literature. In the words of one literary critic, "The Algerian war has been, in a sense, one big murder mystery."1 This may be true, but literary accounts portray the "mystery" of the civil war—and propose to solve it—in sharply divergent ways. The primary aim of this study is to examine how three of the most celebrated 1990s novels depict—organize, analyze, interpret, and "solve"—the civil war. I analyze and interpret these novels—by Assia Djebar, Yasmina Khadra, and Boualem Sansal—through a deep contextualization, both in terms of Algerian history and in the novels' contemporary setting. This is particularly important in this case, since the civil war is so contested, and is poorly understood. Using the novels' thematic content as a cue for deeper understanding, I engage through them and with them a number of elements crucial to understanding the civil war: Algeria's troubled nationalist legacy; its stagnant one-party regime; a fear, distrust, and poor understanding of the Islamist movement and the insurgency that erupted in 1992; and the unending, horrifically bloody violence that piled on throughout the 1990s. -

The Institutional Legacy of African Independence Movements∗

The Institutional Legacy of African Independence Movements∗ Leonard Wantchekon† Omar García-Ponce‡ This draft: September 2011 Abstract We show that current cross-country differences in levels of democracy in Africa originate in most part from the nature of independence movements. We find that countries that experienced anti-colonial "rural insurgencies" (e.g., Cameroon and Kenya) tend to have autocratic regimes, while those that experienced "urban insurgencies" (e.g., Senegal and Ghana) tend to have democratic institutions. We provide evidence for causality of this relationship by using terrain ruggedness as an instrument for rural insurgency and by performing a number of falsification tests. Finally, we find that urban social movements against colonial rule facilitated post-Cold War democratization by generating more inclusive governments and stronger civil societies during the Cold War. More generally, our results indicate that democratization in Africa may result from the legacy of historical events, specifically from the forms of political dissent under colonial rule. ∗We are grateful to Karen Ferree, Elisabeth Fink, Romain Houssa, David Lake, Nathan Nunn, Kaare Strom, David Stasavage, Devesh Tiwari, and seminar participants at Georgetown, UCSD, Université Paris 1 Panthéon-Sorbonne, University of Namur, Université de Toulouse, and APSA Annual Meeting 2011 for helpful comments and suggestions. Excellent research assistance was provided by Nami Patel, Laura Roberts, Rachel Shapiro, Jennifer Velasquez, and Camilla White. The usual caveat applies. †Department of Politics, Princeton University. [email protected] ‡Department of Politics, New York University. [email protected] 1 Introduction Modernization theory remains one of the most intense and open research questions in the social sciences. -

Jihadism in Africa Local Causes, Regional Expansion, International Alliances

SWP Research Paper Stiftung Wissenschaft und Politik German Institute for International and Security Affairs Guido Steinberg and Annette Weber (Eds.) Jihadism in Africa Local Causes, Regional Expansion, International Alliances RP 5 June 2015 Berlin All rights reserved. © Stiftung Wissenschaft und Politik, 2015 SWP Research Papers are peer reviewed by senior researchers and the executive board of the Institute. They express exclusively the personal views of the authors. SWP Stiftung Wissenschaft und Politik German Institute for International and Security Affairs Ludwigkirchplatz 34 10719 Berlin Germany Phone +49 30 880 07-0 Fax +49 30 880 07-100 www.swp-berlin.org [email protected] ISSN 1863-1053 Translation by Meredith Dale (Updated English version of SWP-Studie 7/2015) Table of Contents 5 Problems and Recommendations 7 Jihadism in Africa: An Introduction Guido Steinberg and Annette Weber 13 Al-Shabaab: Youth without God Annette Weber 31 Libya: A Jihadist Growth Market Wolfram Lacher 51 Going “Glocal”: Jihadism in Algeria and Tunisia Isabelle Werenfels 69 Spreading Local Roots: AQIM and Its Offshoots in the Sahara Wolfram Lacher and Guido Steinberg 85 Boko Haram: Threat to Nigeria and Its Northern Neighbours Moritz Hütte, Guido Steinberg and Annette Weber 99 Conclusions and Recommendations Guido Steinberg and Annette Weber 103 Appendix 103 Abbreviations 104 The Authors Problems and Recommendations Jihadism in Africa: Local Causes, Regional Expansion, International Alliances The transnational terrorism of the twenty-first century feeds on local and regional conflicts, without which most terrorist groups would never have appeared in the first place. That is the case in Afghanistan and Pakistan, Syria and Iraq, as well as in North and West Africa and the Horn of Africa. -

Democratic Republic of Congo

DEMOCRATIC REPUBLIC OF CONGO 350 Fifth Ave 34 th Floor New York, N.Y. 10118-3299 http://www.hrw.org (212) 290-4700 Vol. 15, No. 11 (A) - July 2003 I hid in the mountains and went back down to Songolo at about 3:00 p.m. I saw many people killed and even saw traces of blood where people had been dragged. I counted 82 bodies most of whom had been killed by bullets. We did a survey and found that 787 people were missing – we presumed they were all dead though we don’t know. Some of the bodies were in the road, others in the forest. Three people were even killed by mines. Those who attacked knew the town and posted themselves on the footpaths to kill people as they were fleeing. -- Testimony to Human Rights Watch ITURI: “COVERED IN BLOOD” Ethnically Targeted Violence In Northeastern DR Congo 1630 Connecticut Ave, N.W., Suite 500 2nd Floor, 2-12 Pentonville Road 15 Rue Van Campenhout Washington, DC 20009 London N1 9HF, UK 1000 Brussels, Belgium TEL (202) 612-4321 TEL: (44 20) 7713 1995 TEL (32 2) 732-2009 FAX (202) 612-4333 FAX: (44 20) 7713 1800 FAX (32 2) 732-0471 E-mail: [email protected] E-mail: [email protected] E-mail: [email protected] “You cannot escape from the horror” This story of fifteen-year-old Elise is one of many in Ituri. She fled one attack after another and witnessed appalling atrocities. Walking for more than 300 miles in her search for safety, Elise survived to tell her tale; many others have not. -

Stabilizing Mali: Neighbouring States' Political and Military Engagement

This report aims to contribute to a deeper understanding of the political and security context in which the United Nations’ stabilisation mission in Mali, MINUSMA, operates, with a particular focus on the neighbouring states. The study seeks to identify and explain the different drivers that have led to five of Mali’s neighbouring states – Burkina Faso, Côte d’Ivoire, Guinea, Niger and Senegal – contributing troops to MINUSMA, while two of them – Algeria and Mauretania – have decided not to. Through an analysis of the main interests and incentives that explain these states’ political and military engagement in Mali, the study also highlights how the neighbouring states could influence conflict resolution in Mali. Stabilising Mali Neighbouring states’ political and military engagement Gabriella Ingerstad and Magdalena Tham Lindell FOI-R--4026--SE ISSN 1650-1942 www.foi.se January 2015 Gabriella Ingerstad and Magdalena Tham Lindell Stabilising Mali Neighbouring states’ political and military engagement Bild/Cover: (UN Photo/Blagoje Grujic) FOI-R--4026--SE Titel Stabilisering av Mali. Grannstaters politiska och militära engagemang Title Stabilising Mali. Neighbouring states’ political and military engagement Rapportnr/Report no FOI-R--4026--SE Månad/Month Januari/ January Utgivningsår/Year 2015 Antal sidor/Pages 90 ISSN 1650-1942 Kund/Customer Utrikesdepartementet/Swedish Ministry for Foreign Affairs Forskningsområde 8. Säkerhetspolitik Projektnr/Project no B12511 Godkänd av/Approved by Maria Lignell Jakobsson Ansvarig avdelning Försvarsanalys Detta verk är skyddat enligt lagen (1960:729) om upphovsrätt till litterära och konstnärliga verk. All form av kopiering, översättning eller bearbetning utan medgivande är förbjuden. This work is protected under the Act on Copyright in Literary and Artistic Works (SFS 1960:729). -

The Clove Tree of Madagascar: a Success Story with an Unpredictable

BOIS ET FORÊTS DES TROPIQUES, 2014, N° 320 (2) SYZYGIUM AROMATICUM / FORUM 83 Pascal Danthu1, 2, 9 Eric Penot3, 2 The clove tree of Madagascar : Karen Mahafaka Ranoarisoa4 Jean Chrysostôme Rakotondravelo4 a success story with Isabelle Michel5 Marine Tiollier5 Thierry Michels6 an unpredictable future Fréderic Normand6 Gaylor Razafimamonjison2, 4, 7 Fanja Fawbush4 Michel Jahiel2, 7, 8 1 Cirad UR 105 Bsef 34398 Montpellier Cedex 5 France 2 Cirad DP Forêts et Biodiversité BP 853, Antananarivo Madagascar 3 Cirad UMR Innovations BP 853, Antananarivo Madagascar 4 École Supérieure des Sciences Agronomique Université d’Antananarivo BP 175, Antananarivo Madagascar 5 Montpellier Supagro Institut des Régions Chaudes UMR Innovation 1101 avenue d’Agropolis BP 5098 34093 Montpellier Cedex 05 France 6 Cirad UR HortSys Station de Bassin Plat, BP 180 97455 Saint-Pierre Cedex France 7 Centre Technique Horticole de Tamatave BP 11, Tamatave Madagascar 8 Cirad UR HortSys BP 11, Tamatave Madagascar 9 Cirad Direction régionale à Madagascar BP 853, Antananarivo Madagascar Photo 1. Young clove trees near Tamatave. Photograph P. Danthu. BOIS ET FORÊTS DES TROPIQUES, 2014, N° 320 (2) P. Danthu, E. Penot, K. M. Ranoarisoa, 84 FORUM / SYZYGIUM AROMATICUM J. C. Rakotondravelo, I. Michel, M. Tiollier, T. Michels, F. Normand, G. Razafimamonjison, F. Fawbush, M. Jahiel RÉSUMÉ ABSTRACT RESUMEN LE GIROFLIER DE MADAGASCAR : THE CLOVE TREE OF MADAGASCAR: A SUCCESS EL CLAVERO DE MADAGASCAR: UNE INTRODUCTION RÉUSSIE, STORY WITH AN UNPREDICTABLE FUTURE UNA INTRODUCCIÓN EXITOSA, UN AVENIR À CONSTRUIRE UN FUTURO POR CONSTRUIR Introduit à Madagascar au début du 19e siècle, The clove tree was introduced to Madagascar El clavero, introducido en Madagascar a princi- le giroflier est originaire des îles Moluques en from the Maluku Islands in Indonesia at the pios del s. -

"If Our Men Won't Fight, We Will"

“If our men won’t fight, we will” This study is a gender based conflict analysis of the armed conflict in northern Mali. It consists of interviews with people in Mali, at both the national and local level. The overwhelming result is that its respondents are in unanimous agreement that the root causes of the violent conflict in Mali are marginalization, discrimination and an absent government. A fact that has been exploited by the violent Islamists, through their provision of services such as health care and employment. Islamist groups have also gained support from local populations in situations of pervasive violence, including sexual and gender-based violence, and they have offered to restore security in exchange for local support. Marginality serves as a place of resistance for many groups, also northern women since many of them have grievances that are linked to their limited access to public services and human rights. For these women, marginality is a site of resistance that motivates them to mobilise men to take up arms against an unwilling government. “If our men won’t fight, we will” A Gendered Analysis of the Armed Conflict in Northern Mali Helené Lackenbauer, Magdalena Tham Lindell and Gabriella Ingerstad FOI-R--4121--SE ISSN 1650-1942 November 2015 www.foi.se Helené Lackenbauer, Magdalena Tham Lindell and Gabriella Ingerstad "If our men won't fight, we will" A Gendered Analysis of the Armed Conflict in Northern Mali Bild/Cover: (Helené Lackenbauer) Titel ”If our men won’t fight, we will” Title “Om våra män inte vill strida gör vi det” Rapportnr/Report no FOI-R--4121--SE Månad/Month November Utgivningsår/Year 2015 Antal sidor/Pages 77 ISSN 1650-1942 Kund/Customer Utrikes- & Försvarsdepartementen Forskningsområde 8.