Arm System-On-Chip Architecture.Pdf

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications

-

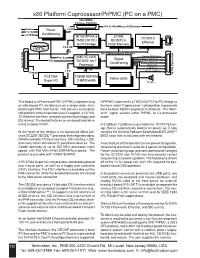

x86 Platform Coprocessor/PrPMC (PC on a PMC)

x86 Platform Coprocessor/PrPMC (PC on a PMC). [Block diagram; labels include: 32b/33MHz PCI bus (PN1/PN2), TMS2250 PCI-to-PCI bridge, internal 32b/33MHz PCI bus, AMD SC2200 "GEODE (tm)" processor, 1K100 FPGA and 512KB BIOS/PLA flash memory, 10/100TX Ethernet (RJ45), Compact Flash site, IDE, analog SVGA video, COM 1 and COM 2 (RXD/TXD only), USB ports 1 and 2, LPC, PC87364 Super I/O, keyboard/mouse, floppy, 128MB SDRAM (16MWx64b), status LEDs, PC speaker, rear I/O on PN4.] This board is a Processor PMC (PrPMC) implementing an x86-based PC architecture on a single-wide, standard height PMC form factor. This delivers a complete x86 platform with comprehensive I/O support, a 10/100-TX Ethernet interface, and disk access (both floppy and IDE drives). The board features an on-board hard drive using Compact Flash. A PrPMC implements a TMS2250 PCI-to-PCI bridge to the host, and a “Coprocessor” configuration implements back-to-back 16550 compatible COM ports. The “Monarch” signal selects either PrPMC or Co-processor mode. A 512Kbyte FLASH memory holds the 1K100 PLA image that is automatically loaded on power up. It also contains the General Software Embedded BIOS 2000™ BIOS code that is included with each board. At the heart of the design is an Advanced Micro Devices SC2200 GEODE™ processor that integrates video,

Convey Overview

THE WORLD’S FIRST HYBRID-CORE COMPUTER. CONVEY HYBRID-CORE COMPUTING (slides, 1/22/2010). Hybrid-core Computing: the Convey HC-1 combines the high performance of application-specific hardware with the programmability and deployment ease of an x86 server. Heterogeneous solutions • can be much more efficient • still hard to program. Multicore solutions • don’t always scale well • parallel programming is hard. [Chart: application performance/power efficiency (low to high) against ease of deployment (difficult to easy).] Hybrid-Core Computing: Application-Specific Personalities • Extend the x86 instruction set • Implement key operations in hardware. [Diagram: applications (Life Sciences, CAE, Custom, Financial, Oil & Gas) pass through the Convey compilers to the x86-64 ISA and a custom ISA over shared virtual memory.] Cache-coherent, shared memory • Both ISAs address common memory. (ISA: Instruction Set Architecture.) HC-1 Hardware: [Diagram; labels include: PCI I/O, Intel chipset, four FPGAs (personalities), memory, 8 GB/s, 80 GB/s, cache coherent, shared virtual memory.] Using Personalities • Personalities are reloadable instruction sets • Compiler generates x86 and coprocessor instructions from ANSI standard C/C++ & Fortran • Executable can run on x86 nodes or Convey Hybrid-Core nodes. [Diagram: the user specifies the personality at compile time; the Convey Software Development Suite takes C/C++ and Fortran source plus instruction descriptions and produces a hybrid-core executable containing x86-64 and coprocessor instructions; the FPGA bitfiles of the personality are loaded at runtime by the OS.] SYSTEM ARCHITECTURE. HC-1 Architecture: “Commodity” Intel Server, Convey FPGA-based coprocessor, Direct

Configurable RISC-V Softcore Processor for FPGA Implementation

Configurable RISC-V softcore processor for FPGA implementation. João Filipe Monteiro Rodrigues, Instituto Superior Técnico, Universidade de Lisboa. Abstract: Over the past years, the processor market has been dominated by proprietary architectures that implement instruction sets that require licensing and the payment of fees to receive permission so they can be used. ARM is an example of one of those companies that sell its microarchitectures to the manufacturers so they can implement them into their own products, and it does not allow the use of its instruction set (ISA) in other implementations without licensing. The RISC-V instruction set appeared proposing the hardware and software development without costs, through the creation of an open-source ISA. This way, it is possible that any project that implements the RISC-V ISA can be made available open-source or even implemented in commercial products. However, the RISC-V solutions that have been developed do not present the needed requirements so they can be included in projects, especially the research projects, because they offer poor documentation, and their performances are not suitable. [...] and development of several programming tools. The RISC-V Foundation controls the RISC-V evolution, and its members are responsible for promoting the adoption of RISC-V and participating in the development of the new ISA. In the list of members are big companies like Google, NVIDIA, Western Digital, Samsung, or Qualcomm. The main goal of this work is the development of a RISC-V softcore processor to be implemented in an FPGA, using a non-RISC-V core as the base of this architecture. The proposed solution is focused on solving the problems and limitations identified in the other RISC-V cores that were analyzed in this thesis, especially in terms of the adaptability and flexibility, allowing future modifications according to the

IBM Z Systems Introduction May 2017

IBM z Systems Introduction, May 2017. IBM z13s and IBM z13 Frequently Asked Questions. Worldwide. ZSQ03076-USEN-15. Table of Contents: z13s Hardware; z13 Hardware; Performance; z13 Warranty; Hardware Management Console (HMC); Power requirements (including High Voltage DC Power option); Overhead Cabling and Power; z13 Water cooling option; Secure Service Container;

Microprocessor Architecture

EECE416 Microcomputer Fundamentals. Microprocessor Architecture. Dr. Charles Kim, Howard University. Computer Architecture: Computer System = CPU (with PC, Register, SR) + Memory. Computer Architecture: • ALU (Arithmetic Logic Unit) • Binary Full Adder. Microprocessor Bus. Architecture by CPU+MEM organization: Princeton (or von Neumann) Architecture: MEM contains both Instruction and Data. Harvard Architecture: Data MEM and Instruction MEM; Higher Performance; Better for DSP; Higher MEM Bandwidth. Princeton Architecture: 1. Step (A): The address for the instruction to be next executed is applied. 2. Step (B): The controller "decodes" the instruction. 3. Step (C): Following completion of the instruction, the controller provides the address, to the memory unit, at which the data result generated by the operation will be stored. Harvard Architecture. Internal Memory (“register”): External memory access is very slow; internal registers are for quicker retrieval and storage. Architecture by Instructions and their Executions: CISC (Complex Instruction Set Computer): Variety of instructions for complex tasks; Instructions of varying length. RISC (Reduced Instruction Set Computer): Fewer and simpler instructions; High performance microprocessors; Pipelined instruction execution (several instructions are executed in parallel). CISC: Architecture of prior to mid-1980s (IBM390, Motorola 680x0, Intel 80x86); Basic Fetch-Execute sequence to support a large number of complex instructions; Complex decoding procedures; Complex control unit; One instruction achieves a complex task.

ARM Software Development Toolkit Version 2.11 User Guide

211ug.book : SDT_UG_Front_cover.fm Page i Sunday, June 1, 1997 12:21 PM. ARM Software Development Toolkit Version 2.11 User Guide. Document number: ARM DUI 0040C. Issued: May 1997. Copyright Advanced RISC Machines Ltd (ARM) 1996. ENGLAND: Advanced RISC Machines Limited, Fulbourn Road, Cherry Hinton, Cambridge CB1 4JN, UK. Telephone: +44 1223 400400. Facsimile: +44 1223 400410. Email: [email protected]. GERMANY: Advanced RISC Machines Limited, Otto-Hahn Str. 13b, 85521 Ottobrunn-Riemerling, Munich, Germany. Telephone: +49 89 608 75545. Facsimile: +49 89 608 75599. Email: [email protected]. JAPAN: Advanced RISC Machines K.K., KSP West Bldg, 3F 300D, 3-2-1 Sakado, Takatsu-ku, Kawasaki-shi, Kanagawa 213, Japan. Telephone: +81 44 850 1301. Facsimile: +81 44 850 1308. Email: [email protected]. USA: ARM USA Incorporated, Suite 5, 985 University Avenue, Los Gatos, CA 95030, USA. Telephone: +1 408 399 5199. Facsimile: +1 408 399 8854. Email: [email protected]. World Wide Web address: http://www.arm.com. Partner Confidential - Final Draft. Proprietary Notice: ARM, the ARM Powered logo and EmbeddedICE are trademarks of Advanced RISC Machines Ltd. Neither the whole nor any part of the information contained in, or the product described in, this manual may be adapted or reproduced in any material form except with the prior written permission of the copyright holder. The product described in this manual is subject to continuous developments and improvements. All particulars of the product and its use contained in this manual are given by ARM in good faith.

What Do We Mean by Architecture?

Embedded programming: Comparing the performance and development workflows for architectures. Embedded programming week, FABLAB BRIGHTON 2018. What do we mean by architecture? The architecture of microprocessors and microcontrollers is classified based on the way memory is allocated (memory architecture). There are two main ways of doing this. Von Neumann architecture (also known as Princeton): Von Neumann uses a single unified cache (i.e. the same memory) for both the code (instructions) and the data itself. Under pure von Neumann architecture the CPU can be either reading an instruction or reading/writing data from/to the memory. Both cannot occur at the same time since the instructions and data use the same bus system. Harvard architecture: Harvard architecture uses different memory allocations for the code (instructions) and the data, allowing it to read instructions and perform data memory access simultaneously. The best performance is achieved when both instructions and data are supplied by their own caches, with no need to access external memory at all. How does this relate to microcontrollers/microprocessors? We found this page to be a good introduction to the topic of microcontrollers and microprocessors, the architectures they use and the difference between some of the common types. First though, it’s worth looking at the difference between a microprocessor and a microcontroller. Microprocessors (e.g. ARM) generally consist of just the Central Processing Unit (CPU), which performs all the instructions in a computer program, including arithmetic, logic, control and input/output operations. Microcontrollers (e.g. AVR, PIC or 8051) contain one or more CPUs with RAM, ROM and programmable input/output peripherals.

ARM Architecture

ARM Architecture. Computer Organization and Assembly Languages, Yung-Yu Chuang, with slides by Peng-Sheng Chen, Ville Pietikainen. ARM history • 1983: developed by Acorn computers – To replace 6502 in BBC computers – 4-man VLSI design team – Its simplicity comes from the inexperienced team – Match the needs for generalized SoC for reasonable power, performance and die size – The first commercial RISC implementation • 1990: ARM (Advanced RISC Machine), owned by Acorn, Apple and VLSI. ARM Ltd: designs and licenses ARM core designs but does not fabricate. Why ARM? • One of the most licensed and thus widespread processor cores in the world – Used in PDA, cell phones, multimedia players, handheld game console, digital TV and cameras – ARM7: GBA, iPod – ARM9: NDS, PSP, Sony Ericsson, BenQ – ARM11: Apple iPhone, Nokia N93, N800 – 90% of 32-bit embedded RISC processors till 2009 • Used especially in portable devices due to its low power consumption and reasonable performance. ARM powered products. ARM processors • A simple but powerful design • A whole family of designs sharing similar design principles and a common instruction set. Naming ARM • ARMxyzTDMIEJFS – x: series – y: MMU – z: cache – T: Thumb – D: debugger – M: Multiplier – I: EmbeddedICE (built-in debugger hardware) – E: Enhanced instruction – J: Jazelle (JVM) – F: Floating-point – S: Synthesizable version (source code version for EDA tools). Popular ARM architectures • ARM7TDMI – 3 pipeline stages (fetch/decode/execute) – High code density/low power consumption – One of the most used ARM versions (for low-end systems) – All ARM cores after ARM7TDMI include TDMI even if they do not include TDMI in their labels • ARM9TDMI – Compatible with ARM7 – 5 stages (fetch/decode/execute/memory/write) – Separate instruction and data cache • ARM11. [Table: ARM family comparison, by year: 1995, 1997, 1999, 2003.] ARM is a RISC • RISC: simple but powerful instructions that execute within a single cycle at high clock speed.

The Intel Microprocessors: Architecture, Programming and Interfacing Introduction to the Microprocessor and Computer

Microprocessors (0630371), Fall 2010/2011, Lecture Notes # 1. The Intel Microprocessors: Architecture, Programming and Interfacing. Introduction to the Microprocessor and Computer. Outline of the lecture: Evolution of programming languages. Microcomputer architecture. Instruction execution cycle. Evolution of programming languages: Machine language: the programmer had to remember the machine codes for various operations, and had to remember the locations of the data in the main memory, like: 0101 0011 0111… Assembly language: an instruction is in an easy-to-remember form called a mnemonic code. Example (assembly language = machine language): Load = 100100, ADD = 100101, SUB = 100011. We need a program called an assembler that translates the assembly language instructions into machine language. High-level languages: Fortran, Cobol, Pascal, C++, C# and Java. We need a compiler to translate instructions written in high-level languages into machine code. Microprocessor-based system (microcomputer) architecture: [Figure: a Central Processing Unit (CPU) containing registers, ALU, CU and clock, connected by the data bus, I/O bus, control bus and address bus to the memory storage unit and to I/O devices #1 and #2.] The figure shows the main components of a microprocessor-based system: CPU (Central Processing Unit), where calculations and logic operations are done. The CPU contains registers, a high-frequency clock, a control unit (CU) and an arithmetic logic unit (ALU). Clock: synchronizes the internal operations of the CPU with other system components using clock pulsing at a constant rate (the basic unit of time for machine instructions is a machine cycle or clock cycle). A machine instruction requires at least one clock cycle; some instructions require 50 clocks. Control Unit (CU): generates the needed control signals to coordinate the sequencing of steps involved in executing machine instructions (fetches data and instructions and decodes addresses for the ALU).

Acorn RISC PC 600

ACORN RISC PC 600. Acorn's retort to the PowerMacs is an example of innovative design, with extensive expansion, the promise of better cross-platform compatibility and graphics performance Archimedes owners only dreamed about. Ian Burley gets a slice of the action. Acorn Computers of Cambridge, and not their colleagues from Cupertino, were the first to bring affordable RISC computing to the masses. [Caption: RISC PC 600s get the latest release] [...] and CPU fans as the chip generates less than 1W of heat. Current ARM610s are 0.8 micron parts, and sample 0.6 micron parts are testing at 40MHz. One of the most striking aspects of the new RISC PC is its case, designed under the auspices of Allen Boothroyd, who designed the original BBC Micro and was a force behind hi-fi manufacturer Meridian. It is made of tough Bayer Bayblend ABS/Polycarbonate, which is used to make riot shields. Internal surfaces are coated to reduce radio frequency interference (RFI) but the external surface is an unpainted light grey. There is provision for screw-mounted peripherals inside but devices like CD-ROMs and hard disks will be clip-mounted Apple-style. Two twist-locking pins need to be turned 90° to get the case lid off. These can be padlocked and the case tethered. It takes less than a minute to open the case, swap processor modules and refit the lid, without any tools. Standard models have a slimline base case with a two-expansion slot backplane; the front panel has a spring-loaded door to hide the floppy drive. If you need

Unit 8 : Microprocessor Architecture

Unit 8 : Microprocessor Architecture. Lesson 1 : Microcomputer Structure. 1.1. Learning Objectives. On completion of this lesson you will be able to: ♦ draw the block diagram of a simple computer ♦ understand the function of different units of a microcomputer ♦ learn the basic operation of microcomputer bus system. 1.2. Digital Computer. A digital computer is a multipurpose, programmable machine that reads binary instructions from its memory, accepts binary data as input and processes data according to those instructions, and provides results as output. 1.3. Basic Computer System Organization. Every computer contains five essential parts or units. They are i. the arithmetic logic unit (ALU) ii. the control unit iii. the memory unit iv. the input unit v. the output unit. 1.3.1. The Arithmetic and Logic Unit (ALU). The arithmetic and logic unit (ALU) is that part of the computer that actually performs arithmetic and logical operations on data. All other elements of the computer system (control unit, register, memory, I/O) are there mainly to bring data into the ALU to process and then to take the results back out. An arithmetic and logic unit and, indeed, all electronic components in the computer are based on the use of simple digital logic devices that can store binary digits and perform simple Boolean logic operations. Data are presented to the ALU in registers. These registers are temporary storage locations within the CPU that are connected by signal paths to the ALU.

A Developer's Guide to the POWER Architecture

http://www.ibm.com/developerworks/linux/library/l-powarch/ A developer's guide to the POWER architecture: POWER programming by the book. Brett Olsson, Processor architect, IBM. Anthony Marsala, Software engineer, IBM. Summary: POWER® processors are found in everything from supercomputers to game consoles and from servers to cell phones, and they all share a common architecture. This introduction to the PowerPC application-level programming model will give you an overview of the instruction set, important registers, and other details necessary for developing reliable, high performing POWER applications and maintaining code compatibility among processors. Date: 30 Mar 2004. Level: Intermediate. The POWER architecture and the application-level programming model are common across all branches of the POWER architecture family tree. For detailed information, see the product user's manuals available in the IBM® POWER Web site technical library (see Resources for a link). The POWER architecture is a Reduced Instruction Set Computer (RISC) architecture, with over two hundred defined instructions. POWER is RISC in that most instructions execute in a single cycle and typically perform a single operation (such as loading storage to a register, or storing a register to memory). The POWER architecture is broken up into three levels, or "books." By segmenting the architecture in this way, code compatibility can be maintained across implementations while leaving room for implementations to choose levels of complexity for price/performance trade-offs. The levels are: Book I.