Big-Data-As-A-Self-Service Test and Integration Report

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Progress Software Introduces a New Generation of the Apama Capital Markets Foundation

June 22, 2010 Progress Software Introduces a New Generation of the Apama Capital Markets Foundation Apama Capital Markets Foundation Enhances CEP Platform With Reusable Services That Enable Developers to Help Quant Traders Become More Successful NEW YORK, NY and BEDFORD, MA, Jun 22, 2010 (MARKETWIRE via COMTEX News Network) -- SIFMA - Progress Software Corporation (NASDAQ: PRGS), a leading software provider that enables enterprises to be operationally responsive, today announced the launch and immediate availability of a new generation of its Progress(R) Apama(R) Capital Markets Foundation. The Apama Capital Markets Foundation builds on the award-winning Apama Complex Event Processing platform by providing a powerful set of pre-built capabilities for developers creating a broad range of capital markets trading applications. The new version includes new functionality and across-the-board feature enhancements that deliver significant improvements in performance and productivity. As a result, developers can enable quantitative and systematic traders to more rapidly create trading strategies and platforms that are highly competitive and differentiated, as well as create highly flexible market surveillance and risk management applications. Most importantly, these enhancements help developers significantly improve the way traders can research, design and implement their own strategies. The new Apama Capital Markets Foundation is built in the form of easily configurable building blocks and includes the following capabilities: 1. A new market data architecture, which provides an increase in performance and flexibility in the processing of cross-asset market data. The architecture makes optimal use of the patented Apama parallel event processing engine that can scale to hundreds of thousands of market data updates per second. -

Apama 5.3 Readme

Apama 5.3.0.0 Readme Version 5.3.0.0 Ports: All Date: April 2015 -------------------------------------------------------------------------------- CONTENTS ======== 1. Release Notes for 5.3.0.0 2. Customer Reported Defects Fixed in 5.3.0.0 and previous releases 3. Other Defects Fixed in 5.3.0.0 4. Copyright Notices 5. Support For installation information, see "Installing Apama" (installing_apama.pdf in the downloaded package). Adobe Acrobat Reader version 8.0 or greater is required to view the included PDF documentation. ------------------------------ 1. Release Notes for 5.3.0.0 ------------------------------ a. ADBC PAM-10573 : Some supported databases store empty strings as NULL (since 5.3.0.0) ================================================================================ Some databases such as Oracle are known to store empty strings as NULL values, which can lead to confusion when executing queries where a field is compared to the empty string. For example: select * from table where field = "" Would not match rows where the field was null for such databases. To ensure the desired results are returned when running queries against databases that store empty strings as null, we recommend queries to be written to check for NULL instead of empty string literals. However, despite this limitation in the underlying database, the supplied ODBC driver for Oracle includes special logic to allow queries to use empty strings (though this does not apply to the supplied JDBC driver, or to most other 3rd party Oracle drivers). PAM-21466 : Bundled Oracle JDBC driver can incorrectly report Oracle Advanced Security errors (since 5.2.0.0) ================================================================================ Even when Oracle Advanced Security is not turned on for the server, the following error can occur during connect: [Oracle JDBC Driver]The connect attempt failed because the server requires Oracle Advanced Security. -

Apama 10.7 Supported Platforms.Xlsx

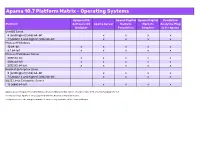

Apama 10.7 Platform Matrix - Operating Systems Apama with Apama Capital Apama Capital Predictive Platform Software AG Apama Server Markets Markets Analytics Plug- Designer Foundation Adapters in for Apama CentOS Linux 8 (and higher) (x86) 64-bit x x x x 7 (Update 2 and higher) (x86) 64-bit x x x x Microsoft Windows 10 64-bit x x x x x 8.1 64-bit x x x x x Microsoft Windows Server 2019 64-bit x x x x x 2016 64-bit x x x x x 2012 R2 64-bit x x x x x Red Hat Enterprise Linux 8 (and higher) (x86) 64-bit x x x x 7 (Update 2 and higher) (x86) 64-bit x x x x SUSE Linux Enterprise Server 12 (x86) 64-bit x x x x Apama does not support Security Enhanced Linux (SELinux). This option should be turned off on Linux for Apama to run. For Oracle Linux, Apama is only supported with the Red Hat Compatible Kernel. For Apama server, the compiled runtime feature is only available on the Linux platforms. Apama 10.7 Platform Matrix - Compilers Microsoft Visual Studio GCC GCC Platform 2015 Update 3 4.8 8.2 (C++) CentOS Linux 8 (and higher) (x86) 64-bit x 7 (Update 2 and higher) (x86) 64-bit x Microsoft Windows 10 64-bit x 8.1 64-bit x Microsoft Windows Server 2019 64-bit x 2016 64-bit x 2012 R2 64-bit x Red Hat Enterprise Linux 8 (and higher) (x86) 64-bit x 7 (Update 2 and higher) (x86) 64-bit x SUSE Linux Enterprise Server 12 (x86) 64-bit x Compilers are relevant for Apama when creating C++ plug-ins and applications using the C++ client API. -

Progress Software Introduces Apama 4.3 for Faster Deployment and Massive Scalability for Event Processing Applications

November 17, 2010 Progress Software Introduces Apama 4.3 for Faster Deployment and Massive Scalability for Event Processing Applications New and Unique Functionality Includes Combination of Discrete and Streaming Events BEDFORD, MA -- (MARKET WIRE) -- 11/17/10 -- Progress Software Corporation (NASDAQ: PRGS), a leading independent enterprise software provider that enables companies to be operationally responsive, today announced the availability of Progress® Apama® 4.3, a major new release of the market leading Progress Apama Event Processing platform. This new version includes a next-generation Event Processing Language (EPL) optimized for the concise expression of business and temporal logic. Moreover, events can be captured as a stream with common attributes, giving developers and business users greater scalability and more control over how they can sense and respond to events. This is the third major product release of the Apama Event Processing platform in two years from Progress Software, demonstrating the company's commitment to leading research, innovation and development in this important area. The Apama development team remains steadfast in its focus on enhancing productivity, improving performance and increasing integration with innovative language features, new dashboard functionality and massive scalability. Enhancements in the Apama 4.3 platform's Event Processing Language (EPL), formerly known as MonitorScript, support sophisticated event stream management, and are the result of more than 10 person years of R&D effort. The new capabilities provide developers and business users with a simplified but extremely powerful syntax that allows "event stream networks" to be defined with common attributes, giving them more direct control over their critical business events. -

A Complex Event Processing Engine Based on Data Stream

ReCEPtor: Sensing Complex Events in Data Streams for Service-Oriented Architectures Mingzhu Wei, Ismail Ari, Jun Li, Mohamed Dekhil Digital Printing and Imaging Laboratory HP Laboratories Palo Alto HPL-2007-176 November 2, 2007* data stream, complex All mission-critical applications read or generate raw data streams and require event processing, real-time processing of these streams to collect statistics, control flow and detect SOA, query plan, abnormal patterns. A trend which has gained strong momentum in different EPL industry sectors is the use of Complex Event Processing (CEP) over streams for all critical business processes, thus pushing its span beyond military and financial applications. A parallel trend has been the re-architecting of existing business processes with Service Oriented Architecture (SOA) principals to provide integration and interoperability. This requires use of middleware that incorporates web services, Business Process Management (BPM) systems or an Enterprise Service Bus (ESB), and in some cases Business Rule Engines (BRE). There is a gap between the new generation of business processes which desperately need CEP and the proposed CEP engines that were not built with SOA in mind. These engines either don’t support the flexible, dynamic and distributed business applications deployed in SOA or they try to merge the middleware with the CEP engine as one big proprietary package. This paper describes the design and implementation of our CEP engine called ReCEPtor, which can sense complex events in data streams in real-time. Our modular architecture describes how to integrate ReCEPtor with different business applications in different platforms either directly or by using an off-the-shelf BPM system and a platform adapter. -

Introduction to Apama

Introduction to Apama 9.9.0 October 2015 This document applies to Apama 9.9.0 and to all subsequent releases. Specifications contained herein are subject to change and these changes will be reported in subsequent release notes or new editions. Copyright © 2013-2015 Software AG, Darmstadt, Germany and/or Software AG USA Inc., Reston, VA, USA, and/or its subsidiaries and/or its affiliates and/or their licensors. The name Software AG and all Software AG product names are either trademarks or registered trademarks of Software AG and/or Software AG USA Inc. and/or its subsidiaries and/or its affiliates and/or their licensors. Other company and product names mentioned herein may be trademarks of their respective owners. Detailed information on trademarks and patents owned by Software AG and/or its subsidiaries is located at http://softwareag.com/licenses. Use of this software is subject to adherence to Software AG's licensing conditions and terms. These terms are part of the product documentation, located at http://softwareag.com/licenses/ and/or in the root installation directory of the licensed product(s). This software may include portions of third-party products. For third-party copyright notices, license terms, additional rights or restrictions, please refer to "License Texts, Copyright Notices and Disclaimers of Third Party Products". For certain specific third-party license restrictions, please refer to section E of the Legal Notices available under "License Terms and Conditions for Use of Software AG Products / Copyright and Trademark Notices of Software AG Products". These documents are part of the product documentation, located at http://softwareag.com/licenses and/or in the root installation directory of the licensed product(s). -

The Forrester Wave™: Streaming Analytics, Q3 2019 the 11 Providers That Matter Most and How They Stack up by Mike Gualtieri September 23, 2019

LICENSED FOR INDIVIDUAL USE ONLY The Forrester Wave™: Streaming Analytics, Q3 2019 The 11 Providers That Matter Most And How They Stack Up by Mike Gualtieri September 23, 2019 Why Read This Report Key Takeaways In our 26-criterion evaluation of streaming Software AG, IBM, Microsoft, Google, And analytics providers, we identified the 11 most TIBCO Software Lead The Pack significant ones — Alibaba, Amazon Web Forrester’s research uncovered a market in which Services, Cloudera, EsperTech, Google, IBM, Software AG, IBM, Microsoft, Google, and TIBCO Impetus, Microsoft, SAS, Software AG, and Software are Leaders; Cloudera, SAS, Amazon TIBCO Software — and researched, analyzed, Web Services, and Impetus are Strong Performers; and scored them. This report shows how each and EsperTech and Alibaba are Contenders. provider measures up and helps application Analytics Prowess, Scalability, And development and delivery (AD&D) professionals Deployment Freedom Are Key Differentiators select the right one for their needs. Depth and breadth of analytics types on streaming data are critical. But that is all for naught if streaming analytics vendors cannot also scale to handle potentially huge volumes of streaming data. Also, it’s critical that streaming analytics can be deployed where it is most needed, such as on-premises, in the cloud, and/ or at the edge. This PDF is only licensed for individual use when downloaded from forrester.com or reprints.forrester.com. All other distribution prohibited. FORRESTER.COM FOR APPLICATION DEVELOPMENT & DELIVERY PROFESSIONALS The Forrester Wave™: Streaming Analytics, Q3 2019 The 11 Providers That Matter Most And How They Stack Up by Mike Gualtieri with Srividya Sridharan and Robert Perdoni September 23, 2019 Table Of Contents Related Research Documents 2 Enterprises Must Take A Streaming-First The Future Of Machine Learning Is Unstoppable Approach To Analytics Predictions 2019: Artificial Intelligence 3 Evaluation Summary Predictions 2019: Business Insights 6 Vendor Offerings 6 Vendor Profiles Leaders Share reports with colleagues. -

Introduction to Apama

Introduction to Apama 5.1.0 November 2013 This document applies to Apama 5.1.0 and to all subsequent releases. Specifications contained herein are subject to change and these changes will be reported in subsequent release notes or new editions. Copyright © 2013 Software AG, Darmstadt, Germany and/or Software AG USA Inc., Reston, VA, USA, and/or Terracotta Inc., San Francisco, CA, USA, and/ or Software AG (Canada) Inc., Cambridge, Ontario, Canada, and/or, Software AG (UK) Ltd., Derby, United Kingdom, and/or Software A.G. (Israel) Ltd., Or- Yehuda, Israel and/or their licensors. The name Software AG and all Software AG product names are either trademarks or registered trademarks of Software AG and/or Software AG USA Inc. and/or its Subsidiaries and/or its Affiliates and/or their licensors. Other company and product names mentioned herein may be trademarks of their respective owners. Detailed information on trademarks and patents owned by Software AG and/or its subsidiaries is located at http://documentation.softwareag.com/legal/. Use of this software is subject to adherence to Software AG′s licensing conditions and terms. These terms are located at http://documentation.softwareag.com/ legal/ and/or in the root installation directory of the licensed product(s). This software may include portions of third-party products. For third-party copyright notices and license terms, please refer to "License Texts, Copyright Notices and Disclaimers of Third Party Products." This document is located at http://documentation.softwareag.com/legal/ and/or -

Complex Event Processing As a Service in Multi-Cloud Environments

Western University Scholarship@Western Electronic Thesis and Dissertation Repository August 2016 Complex Event Processing as a Service in Multi-Cloud Environments Wilson A. Higashino The University of Western Ontario Supervisor Dr. Miriam A. M. Capretz The University of Western Ontario Graduate Program in Electrical and Computer Engineering A thesis submitted in partial fulfillment of the equirr ements for the degree in Doctor of Philosophy © Wilson A. Higashino 2016 Follow this and additional works at: https://ir.lib.uwo.ca/etd Part of the Databases and Information Systems Commons Recommended Citation Higashino, Wilson A., "Complex Event Processing as a Service in Multi-Cloud Environments" (2016). Electronic Thesis and Dissertation Repository. 4016. https://ir.lib.uwo.ca/etd/4016 This Dissertation/Thesis is brought to you for free and open access by Scholarship@Western. It has been accepted for inclusion in Electronic Thesis and Dissertation Repository by an authorized administrator of Scholarship@Western. For more information, please contact [email protected]. Abstract The rise of mobile technologies and the Internet of Things, combined with advances in Web technologies, have created a new Big Data world in which the volume and velocity of data gen- eration have achieved an unprecedented scale. As a technology created to process continuous streams of data, Complex Event Processing (CEP) has been often related to Big Data and used as a tool to obtain real-time insights. However, despite this recent surge of interest, the CEP market is still dominated by solutions that are costly and inflexible or too low-level and hard to operate. To address these problems, this research proposes the creation of a CEP system that can be o↵ered as a service and used over the Internet. -

Distributed Event-Processing Engine† Abstract: the Main Challenges in Complex Event Processing Are Volume and Velocity of Data That Needs to Be Processed in Real-Time

Horizon 2020 Program (2014-2020) Big data PPP Research addressing main technology challenges of the data economy Industrial-Driven Big Data as a Self-Service Solution D4.2: Distributed Event-Processing Engine† Abstract: The main challenges in complex event processing are volume and velocity of data that needs to be processed in real-time. Data can come from different sources in different amounts and different frequencies, e.g. in the I-BiDaaS use cases from the three data providers CAIXA, CRF and TID where the data rates differ. However, not all data is connected, and one needs to divide the data into parts that need to be processed together. This document describes the design of an event-processing architecture where the event-processing engine is divided in separate, independent processing units that can share and exchange information and events. This reduces the load and enhances the performance without leaving out important relations between the data. Contractual Date of Delivery 31/12/2019 Actual Date of Delivery 30/12/2019 Deliverable Security Class Public Editor Dr. Gerald Ristow (SAG) Contributors FORTH, CRF Quality Assurance Dr. Yolanda Becerra (BSC) Dr. Giorgos Vasiliadis (FORTH) Dr. Kostas Lampropoulos (FORTH) † The research leading to these results has received funding from the European Union’s Horizon 2020 research and innovation programme under grant agreement No 780787. I-BIDAAS D4.2 ICT-16-2017/№ 780787 The I-BiDaaS Consortium Foundation for Research and Technology – Coordinator Greece Hellas (FORTH) Barcelona Supercomputing Center (BSC) Principal Contractor Spain IBM Israel – Science and Technology LTD Principal Contractor Israel (IBM) Centro Ricerche FIAT (FCA/CRF) Principal Contractor Italy Software AG (SAG) Principal Contractor Germany Caixabank S.A. -

Installation and Configuration of Sonic Workbench and Openedge Architect

Combining OpenEdge Architect and Sonic Workbench to Enable ABL Drag and Drop in OpenEdge 10.2B OpenEdge® Architect and Sonic® Workbench are both developed on the Eclipse platform. OpenEdge Architect 10.2B is released on Eclipse 3.4.2; Sonic Workbench 7.5 and 7.6 are released on Eclipse 3.2.2. OpenEdge Architect and Sonic Workbench can be configured to share a common Eclipse platform, however, because the two products are based on different Eclipse platforms, you must use the files and directions in the following sections to successfully combine the two products: • Introduction • Required products and downloads • Installation and configuration of Sonic Workbench and OpenEdge Architect • Third party acknowledgements The information contained in this web paper supplements two books: OpenEdge Getting Started: Installation and Configuration and OpenEdge Development: Messaging and ESB. © 2009 Progress Software Corporation and/or its subsidiaries or affiliates. All rights reserved. These materials and all Progress® software products are copyrighted and all rights are reserved by Progress Software Corporation. The information in these materials is subject to change without notice, and Progress Software Corporation assumes no responsibility for any errors that may appear therein. The references in these materials to specific platforms supported are subject to change. Actional, Apama, Apama (and Design), Artix, Business Empowerment, DataDirect (and design), DataDirect Connect, DataDirect Connect64, DataDirect Technologies, DataDirect XML Converters, -

Progress Actional Progress Sonic Progress Apama

Progress® OpenEdge® Progress® Fuse™ ® ® Progress Actional Progress® Orbix ® ® ® D A T A S H E E T Progress Sonic ® Progress Orbacus™ Progress® Apama ® Progress® DataXtend® ® ® ® Progress ObjectStore Progress® Artix ® Data Progress® Artix Services® PROGRESS® ® SONICMQ® Progress DataDirect Connect ENTERPRISE-GRADE MESSAGING HIGHLIGHTS >> Standards-based ® ® Progress SonicMQ delivers a unique combination of high performance, >> High>performance guaranteed message delivery under all conditions, and continuous availability— >> Publish/subscribe>and>point-to-point> providing a foundation for operational responsiveness across the enterprise. SonicMQ messaging ensures system uptime through the patent-pending Sonic Continuous Availability >> Guaranteed>message>delivery ® Architecture and flexibly scales through the Dynamic Routing Architecture (DRA) and >> Load>balancing>and>clustering advanced clustering technologies. The SonicMQ advanced distributed management >> Continuous>availability>with>zero> and deployment infrastructure dramatically simplifies operations and lowers the total downtime cost of ownership for business-critical communication across the enterprise. Superior >> Management>framework authentication, authorization, and encryption support ensures that messages and >> Centralized>installation,>upgrades,> systems are protected inside and outside the firewall. patches,>and>management >> Wide-area>deployability ROBUST ENTERPRISE MESSAGING SYSTEM >> Comprehensive,>out-of-the-box> Industry-leading companies rely on SonicMQ for mission-critical