The Forrester Wave™: Streaming Analytics, Q3 2019 the 11 Providers That Matter Most and How They Stack up by Mike Gualtieri September 23, 2019

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Progress Software Introduces a New Generation of the Apama Capital Markets Foundation

June 22, 2010 Progress Software Introduces a New Generation of the Apama Capital Markets Foundation Apama Capital Markets Foundation Enhances CEP Platform With Reusable Services That Enable Developers to Help Quant Traders Become More Successful NEW YORK, NY and BEDFORD, MA, Jun 22, 2010 (MARKETWIRE via COMTEX News Network) -- SIFMA - Progress Software Corporation (NASDAQ: PRGS), a leading software provider that enables enterprises to be operationally responsive, today announced the launch and immediate availability of a new generation of its Progress(R) Apama(R) Capital Markets Foundation. The Apama Capital Markets Foundation builds on the award-winning Apama Complex Event Processing platform by providing a powerful set of pre-built capabilities for developers creating a broad range of capital markets trading applications. The new version includes new functionality and across-the-board feature enhancements that deliver significant improvements in performance and productivity. As a result, developers can enable quantitative and systematic traders to more rapidly create trading strategies and platforms that are highly competitive and differentiated, as well as create highly flexible market surveillance and risk management applications. Most importantly, these enhancements help developers significantly improve the way traders can research, design and implement their own strategies. The new Apama Capital Markets Foundation is built in the form of easily configurable building blocks and includes the following capabilities: 1. A new market data architecture, which provides an increase in performance and flexibility in the processing of cross-asset market data. The architecture makes optimal use of the patented Apama parallel event processing engine that can scale to hundreds of thousands of market data updates per second. -

Apama 5.3 Readme

Apama 5.3.0.0 Readme Version 5.3.0.0 Ports: All Date: April 2015 -------------------------------------------------------------------------------- CONTENTS ======== 1. Release Notes for 5.3.0.0 2. Customer Reported Defects Fixed in 5.3.0.0 and previous releases 3. Other Defects Fixed in 5.3.0.0 4. Copyright Notices 5. Support For installation information, see "Installing Apama" (installing_apama.pdf in the downloaded package). Adobe Acrobat Reader version 8.0 or greater is required to view the included PDF documentation. ------------------------------ 1. Release Notes for 5.3.0.0 ------------------------------ a. ADBC PAM-10573 : Some supported databases store empty strings as NULL (since 5.3.0.0) ================================================================================ Some databases such as Oracle are known to store empty strings as NULL values, which can lead to confusion when executing queries where a field is compared to the empty string. For example: select * from table where field = "" Would not match rows where the field was null for such databases. To ensure the desired results are returned when running queries against databases that store empty strings as null, we recommend queries to be written to check for NULL instead of empty string literals. However, despite this limitation in the underlying database, the supplied ODBC driver for Oracle includes special logic to allow queries to use empty strings (though this does not apply to the supplied JDBC driver, or to most other 3rd party Oracle drivers). PAM-21466 : Bundled Oracle JDBC driver can incorrectly report Oracle Advanced Security errors (since 5.2.0.0) ================================================================================ Even when Oracle Advanced Security is not turned on for the server, the following error can occur during connect: [Oracle JDBC Driver]The connect attempt failed because the server requires Oracle Advanced Security. -

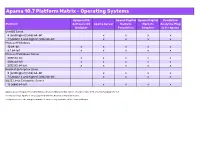

Apama 10.7 Supported Platforms.Xlsx

Apama 10.7 Platform Matrix - Operating Systems Apama with Apama Capital Apama Capital Predictive Platform Software AG Apama Server Markets Markets Analytics Plug- Designer Foundation Adapters in for Apama CentOS Linux 8 (and higher) (x86) 64-bit x x x x 7 (Update 2 and higher) (x86) 64-bit x x x x Microsoft Windows 10 64-bit x x x x x 8.1 64-bit x x x x x Microsoft Windows Server 2019 64-bit x x x x x 2016 64-bit x x x x x 2012 R2 64-bit x x x x x Red Hat Enterprise Linux 8 (and higher) (x86) 64-bit x x x x 7 (Update 2 and higher) (x86) 64-bit x x x x SUSE Linux Enterprise Server 12 (x86) 64-bit x x x x Apama does not support Security Enhanced Linux (SELinux). This option should be turned off on Linux for Apama to run. For Oracle Linux, Apama is only supported with the Red Hat Compatible Kernel. For Apama server, the compiled runtime feature is only available on the Linux platforms. Apama 10.7 Platform Matrix - Compilers Microsoft Visual Studio GCC GCC Platform 2015 Update 3 4.8 8.2 (C++) CentOS Linux 8 (and higher) (x86) 64-bit x 7 (Update 2 and higher) (x86) 64-bit x Microsoft Windows 10 64-bit x 8.1 64-bit x Microsoft Windows Server 2019 64-bit x 2016 64-bit x 2012 R2 64-bit x Red Hat Enterprise Linux 8 (and higher) (x86) 64-bit x 7 (Update 2 and higher) (x86) 64-bit x SUSE Linux Enterprise Server 12 (x86) 64-bit x Compilers are relevant for Apama when creating C++ plug-ins and applications using the C++ client API. -

Progress Software Introduces Apama 4.3 for Faster Deployment and Massive Scalability for Event Processing Applications

November 17, 2010 Progress Software Introduces Apama 4.3 for Faster Deployment and Massive Scalability for Event Processing Applications New and Unique Functionality Includes Combination of Discrete and Streaming Events BEDFORD, MA -- (MARKET WIRE) -- 11/17/10 -- Progress Software Corporation (NASDAQ: PRGS), a leading independent enterprise software provider that enables companies to be operationally responsive, today announced the availability of Progress® Apama® 4.3, a major new release of the market leading Progress Apama Event Processing platform. This new version includes a next-generation Event Processing Language (EPL) optimized for the concise expression of business and temporal logic. Moreover, events can be captured as a stream with common attributes, giving developers and business users greater scalability and more control over how they can sense and respond to events. This is the third major product release of the Apama Event Processing platform in two years from Progress Software, demonstrating the company's commitment to leading research, innovation and development in this important area. The Apama development team remains steadfast in its focus on enhancing productivity, improving performance and increasing integration with innovative language features, new dashboard functionality and massive scalability. Enhancements in the Apama 4.3 platform's Event Processing Language (EPL), formerly known as MonitorScript, support sophisticated event stream management, and are the result of more than 10 person years of R&D effort. The new capabilities provide developers and business users with a simplified but extremely powerful syntax that allows "event stream networks" to be defined with common attributes, giving them more direct control over their critical business events. -

Regeldokument

Master’s degree project Source code quality in connection to self-admitted technical debt Author: Alina Hrynko Supervisor: Morgan Ericsson Semester: VT20 Subject: Computer Science Abstract The importance of software code quality is increasing rapidly. With more code being written every day, its maintenance and support are becoming harder and more expensive. New automatic code review tools are developed to reach quality goals. One of these tools is SonarQube. However, people keep their leading role in the development process. Sometimes they sacrifice quality in order to speed up the development. This is called Technical Debt. In some particular cases, this process can be admitted by the developer. This is called Self-Admitted Technical Debt (SATD). Code quality can also be measured by such static code analysis tools as SonarQube. On this occasion, different issues can be detected. The purpose of this study is to find a connection between code quality issues, found by SonarQube and those marked as SATD. The research questions include: 1) Is there a connection between the size of the project and the SATD percentage? 2) Which types of issues are the most widespread in the code, marked by SATD? 3) Did the introduction of SATD influence the bug fixing time? As a result of research, a certain percentage of SATD was found. It is between 0%–20.83%. No connection between the size of the project and the percentage of SATD was found. There are certain issues that seem to relate to the SATD, such as “Duplicated code”, “Unused method parameters should be removed”, “Cognitive Complexity of methods should not be too high”, etc. -

Trifacta Data Preparation for Amazon Redshift and S3 Must Be Deployed Into an Existing Virtual Private Cloud (VPC)

Install Guide for Data Preparation for Amazon Redshift and S3 Version: 7.1 Doc Build Date: 05/26/2020 Copyright © Trifacta Inc. 2020 - All Rights Reserved. CONFIDENTIAL These materials (the “Documentation”) are the confidential and proprietary information of Trifacta Inc. and may not be reproduced, modified, or distributed without the prior written permission of Trifacta Inc. EXCEPT AS OTHERWISE PROVIDED IN AN EXPRESS WRITTEN AGREEMENT, TRIFACTA INC. PROVIDES THIS DOCUMENTATION AS-IS AND WITHOUT WARRANTY AND TRIFACTA INC. DISCLAIMS ALL EXPRESS AND IMPLIED WARRANTIES TO THE EXTENT PERMITTED, INCLUDING WITHOUT LIMITATION THE IMPLIED WARRANTIES OF MERCHANTABILITY, NON-INFRINGEMENT AND FITNESS FOR A PARTICULAR PURPOSE AND UNDER NO CIRCUMSTANCES WILL TRIFACTA INC. BE LIABLE FOR ANY AMOUNT GREATER THAN ONE HUNDRED DOLLARS ($100) BASED ON ANY USE OF THE DOCUMENTATION. For third-party license information, please select About Trifacta from the Help menu. 1. Quick Start . 4 1.1 Install from AWS Marketplace . 4 1.2 Upgrade for AWS Marketplace . 7 2. Configure . 8 2.1 Configure for AWS . 8 2.1.1 Configure for EC2 Role-Based Authentication . 14 2.1.2 Enable S3 Access . 16 2.1.2.1 Create Redshift Connections 28 3. Contact Support . 30 4. Legal 31 4.1 Third-Party License Information . 31 Page #3 Quick Start Install from AWS Marketplace Contents: Product Limitations Internet access Install Desktop Requirements Pre-requisites Install Steps - CloudFormation template SSH Access Troubleshooting SELinux Upgrade Documentation Related Topics This guide steps through the requirements and process for installing Trifacta® Data Preparation for Amazon Redshift and S3 through the AWS Marketplace. -

A Complex Event Processing Engine Based on Data Stream

ReCEPtor: Sensing Complex Events in Data Streams for Service-Oriented Architectures Mingzhu Wei, Ismail Ari, Jun Li, Mohamed Dekhil Digital Printing and Imaging Laboratory HP Laboratories Palo Alto HPL-2007-176 November 2, 2007* data stream, complex All mission-critical applications read or generate raw data streams and require event processing, real-time processing of these streams to collect statistics, control flow and detect SOA, query plan, abnormal patterns. A trend which has gained strong momentum in different EPL industry sectors is the use of Complex Event Processing (CEP) over streams for all critical business processes, thus pushing its span beyond military and financial applications. A parallel trend has been the re-architecting of existing business processes with Service Oriented Architecture (SOA) principals to provide integration and interoperability. This requires use of middleware that incorporates web services, Business Process Management (BPM) systems or an Enterprise Service Bus (ESB), and in some cases Business Rule Engines (BRE). There is a gap between the new generation of business processes which desperately need CEP and the proposed CEP engines that were not built with SOA in mind. These engines either don’t support the flexible, dynamic and distributed business applications deployed in SOA or they try to merge the middleware with the CEP engine as one big proprietary package. This paper describes the design and implementation of our CEP engine called ReCEPtor, which can sense complex events in data streams in real-time. Our modular architecture describes how to integrate ReCEPtor with different business applications in different platforms either directly or by using an off-the-shelf BPM system and a platform adapter. -

Introduction to Apama

Introduction to Apama 9.9.0 October 2015 This document applies to Apama 9.9.0 and to all subsequent releases. Specifications contained herein are subject to change and these changes will be reported in subsequent release notes or new editions. Copyright © 2013-2015 Software AG, Darmstadt, Germany and/or Software AG USA Inc., Reston, VA, USA, and/or its subsidiaries and/or its affiliates and/or their licensors. The name Software AG and all Software AG product names are either trademarks or registered trademarks of Software AG and/or Software AG USA Inc. and/or its subsidiaries and/or its affiliates and/or their licensors. Other company and product names mentioned herein may be trademarks of their respective owners. Detailed information on trademarks and patents owned by Software AG and/or its subsidiaries is located at http://softwareag.com/licenses. Use of this software is subject to adherence to Software AG's licensing conditions and terms. These terms are part of the product documentation, located at http://softwareag.com/licenses/ and/or in the root installation directory of the licensed product(s). This software may include portions of third-party products. For third-party copyright notices, license terms, additional rights or restrictions, please refer to "License Texts, Copyright Notices and Disclaimers of Third Party Products". For certain specific third-party license restrictions, please refer to section E of the Legal Notices available under "License Terms and Conditions for Use of Software AG Products / Copyright and Trademark Notices of Software AG Products". These documents are part of the product documentation, located at http://softwareag.com/licenses and/or in the root installation directory of the licensed product(s). -

Portable Stateful Big Data Processing in Apache Beam

Portable stateful big data processing in Apache Beam Kenneth Knowles Apache Beam PMC Software Engineer @ Google https://s.apache.org/ffsf-2017-beam-state [email protected] / @KennKnowles Flink Forward San Francisco 2017 Agenda 1. What is Apache Beam? 2. State 3. Timers 4. Example & Little Demo What is Apache Beam? TL;DR (Flink draws it more like this) 4 DAGs, DAGs, DAGs Apache Beam Apache Flink Apache Cloud Hadoop Apache Apache Dataflow Spark Samza MapReduce Apache Apache Apache (paper) Storm Gearpump Apex (incubating) FlumeJava (paper) Heron MillWheel (paper) Dataflow Model (paper) 2004 2005 2006 2007 2008 2009 2010 2011 2012 2013 2014 2015 2016 Apache Flink local, on-prem, The Beam Vision cloud Cloud Dataflow: Java fully managed input.apply( Apache Spark Sum.integersPerKey()) local, on-prem, cloud Sum Per Key Apache Apex Python local, on-prem, cloud input | Sum.PerKey() Apache Gearpump (incubating) ⋮ ⋮ 6 Apache Flink local, on-prem, The Beam Vision cloud Cloud Dataflow: Python fully managed input | KakaIO.read() Apache Spark local, on-prem, cloud KafkaIO Apache Apex ⋮ local, on-prem, cloud Apache Java Gearpump (incubating) class KafkaIO extends UnboundedSource { … } ⋮ 7 The Beam Model PTransform Pipeline PCollection (bounded or unbounded) 8 The Beam Model What are you computing? (read, map, reduce) Where in event time? (event time windowing) When in processing time are results produced? (triggers) How do refinements relate? (accumulation mode) 9 What are you computing? Read ParDo Grouping Composite Parallel connectors to Per element Group -

Scalable and Flexible Middleware for Dynamic Data Flows

SCALABLEANDFLEXIBLEMIDDLEWAREFORDYNAMIC DATAFLOWS stephan boomker Master thesis Computing Science Software Engineering and Distributed Systems Primaray RuG supervisor : Prof. Dr. M. Aiello Secondary RuG supervisor : Prof. Dr. P. Avgeriou Primary TNO supervisor : MSc. E. Harmsma Secondary TNO supervisor : MSc. E. Lazovik August 16, 2016 – version 1.0 [ August 16, 2016 at 11:47 – classicthesis version 1.0 ] Stephan Boomker: Scalable and flexible middleware for dynamic data flows, Master thesis Computing Science, © August 16, 2016 [ August 16, 2016 at 11:47 – classicthesis version 1.0 ] ABSTRACT Due to the concepts of Internet of Things and Big data, the traditional client-server architecture is not sufficient any more. One of the main reasons is wide range of expanding heterogeneous applications, data sources and environments. New forms of data processing require new architectures and techniques in order to be scalable, flexible and able to handle fast dynamic data flows. The backbone of all those objects, applications and users is called the middleware. This research goes about designing and implementing a middle- ware by taking into account different state of the art tools and tech- niques. To come up to a solution which is able to handle a flexible set of sources and models across organizational borders. At the same time it is de-centralized, distributed and, although de-central able to perform semantic based system integration centrally. This is accom- plished by introducing of an architecture containing a combination of data integration patterns, semantic storage and stream processing patterns. A reference implementation is presented of the proposed architec- ture based on Apache Camel framework. This prototype provides the ability to dynamically create and change flexible and distributed data flows during runtime. -

Big Data Analysis Using Hadoop Lecture 4 Hadoop Ecosystem

1/16/18 Big Data Analysis using Hadoop Lecture 4 Hadoop EcoSystem Hadoop Ecosytems 1 1/16/18 Overview • Hive • HBase • Sqoop • Pig • Mahoot / Spark / Flink / Storm • Hadoop & Data Management Architectures Hive 2 1/16/18 Hive • Data Warehousing Solution built on top of Hadoop • Provides SQL-like query language named HiveQL • Minimal learning curve for people with SQL expertise • Data analysts are target audience • Ability to bring structure to various data formats • Simple interface for ad hoc querying, analyzing and summarizing large amountsof data • Access to files on various data stores such as HDFS, etc Website : http://hive.apache.org/ Download : http://hive.apache.org/downloads.html Documentation : https://cwiki.apache.org/confluence/display/Hive/LanguageManual Hive • Hive does NOT provide low latency or real-time queries • Even querying small amounts of data may take minutes • Designed for scalability and ease-of-use rather than low latency responses • Translates HiveQL statements into a set of MapReduce Jobs which are then executed on a Hadoop Cluster • This is changing 3 1/16/18 Hive • Hive Concepts • Re-used from Relational Databases • Database: Set of Tables, used for name conflicts resolution • Table: Set of Rows that have the same schema (same columns) • Row: A single record; a set of columns • Column: provides value and type for a single value • Can can be dived up based on • Partitions • Buckets Hive – Let’s work through a simple example 1. Create a Table 2. Load Data into a Table 3. Query Data 4. Drop a Table 4 1/16/18 -

Researching Algorithmic Institutions Essay

Researching Algorithmic Institutions RESEARCHING ALGORITHMIC governance produces an essentially harnessed to the pursuit of procedures experimental condition of institutionality. That and realization of rules. Experiments lend INSTITUTIONS which becomes available for ‘disruption’ or themselves to the goal-oriented world of Liam Magee and Ned Rossiter ‘innovation’ — both institutional encodings — algorithms. As such, the invention of new Like any research centre that today is equally prescribed within silicon test-beds institutional forms and practices would seem 3 investigates the media conditions of social that propose limits to political possibility. antithetical to experiments in algorithmic organization, the Institute for Culture and Experiments privilege the repeatability and governance. Yet what if we consider Society modulates functional institutional reproducibility of action. This is characteristic experience itself as conditioned and governance with what might be said to be, of algorithmic routines that accommodate made possible by experiments in operatively and with a certain conscientious variation only through the a priori of known algorithmic governance? attention to method, algorithmic experiments. statistical parameters. Innovation, in other Surely enough, the past few decades have In this essay we convolve these terms. words, is merely a variation of the known seen a steady transformation of many As governance moves beyond Weberian within the horizon of fault tolerance. institutional settings. There are many studies proceduralism toward its algorithmic Experiments in algorithmic governance that account for such change as coinciding automation, research life itself becomes are radically dissimilar from the experience with and often directly resulting from the ways subject to institutional experimentation. of politics and culture, which can be in which neoliberal agendas have variously Parametric adjustment generates sine wave- understood as the constitutive outside of impacted organizational values and practices.