Complex Event Processing As a Service in Multi-Cloud Environments

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Large-Scale Learning from Data Streams with Apache SAMOA

Large-Scale Learning from Data Streams with Apache SAMOA Nicolas Kourtellis1, Gianmarco De Francisci Morales2, and Albert Bifet3 1 Telefonica Research, Spain, [email protected] 2 Qatar Computing Research Institute, Qatar, [email protected] 3 LTCI, Télécom ParisTech, France, [email protected] Abstract. Apache SAMOA (Scalable Advanced Massive Online Anal- ysis) is an open-source platform for mining big data streams. Big data is defined as datasets whose size is beyond the ability of typical soft- ware tools to capture, store, manage, and analyze, due to the time and memory complexity. Apache SAMOA provides a collection of dis- tributed streaming algorithms for the most common data mining and machine learning tasks such as classification, clustering, and regression, as well as programming abstractions to develop new algorithms. It fea- tures a pluggable architecture that allows it to run on several distributed stream processing engines such as Apache Flink, Apache Storm, and Apache Samza. Apache SAMOA is written in Java and is available at https://samoa.incubator.apache.org under the Apache Software Li- cense version 2.0. 1 Introduction Big data are “data whose characteristics force us to look beyond the traditional methods that are prevalent at the time” [18]. For instance, social media are one of the largest and most dynamic sources of data. These data are not only very large due to their fine grain, but also being produced continuously. Furthermore, such data are nowadays produced by users in different environments and via a multitude of devices. For these reasons, data from social media and ubiquitous environments are perfect examples of the challenges posed by big data. -

DSP Frameworks DSP Frameworks We Consider

Università degli Studi di Roma “Tor Vergata” Dipartimento di Ingegneria Civile e Ingegneria Informatica DSP Frameworks Corso di Sistemi e Architetture per Big Data A.A. 2017/18 Valeria Cardellini DSP frameworks we consider • Apache Storm (with lab) • Twitter Heron – From Twitter as Storm and compatible with Storm • Apache Spark Streaming (lab) – Reduce the size of each stream and process streams of data (micro-batch processing) • Apache Flink • Apache Samza • Cloud-based frameworks – Google Cloud Dataflow – Amazon Kinesis Streams Valeria Cardellini - SABD 2017/18 1 Apache Storm • Apache Storm – Open-source, real-time, scalable streaming system – Provides an abstraction layer to execute DSP applications – Initially developed by Twitter • Topology – DAG of spouts (sources of streams) and bolts (operators and data sinks) Valeria Cardellini - SABD 2017/18 2 Stream grouping in Storm • Data parallelism in Storm: how are streams partitioned among multiple tasks (threads of execution)? • Shuffle grouping – Randomly partitions the tuples • Field grouping – Hashes on a subset of the tuple attributes Valeria Cardellini - SABD 2017/18 3 Stream grouping in Storm • All grouping (i.e., broadcast) – Replicates the entire stream to all the consumer tasks • Global grouping – Sends the entire stream to a single task of a bolt • Direct grouping – The producer of the tuple decides which task of the consumer will receive this tuple Valeria Cardellini - SABD 2017/18 4 Storm architecture • Master-worker architecture Valeria Cardellini - SABD 2017/18 5 Storm -

Comparative Analysis of Data Stream Processing Systems

Shah Zeb Mian Comparative Analysis of Data Stream Processing Systems Master’s Thesis in Information Technology February 23, 2020 University of Jyväskylä Faculty of Information Technology Author: Shah Zeb Mian Contact information: [email protected] Supervisors: Oleksiy Khriyenko, and Vagan Terziyan Title: Comparative Analysis of Data Stream Processing Systems Työn nimi: Vertaileva analyysi Data Stream-käsittelyjärjestelmistä Project: Master’s Thesis Study line: All study lines Page count: 48+0 Abstract: Big data processing systems are evolving to be more stream oriented where data is processed continuously by processing it as soon as it arrives. Earlier data was often stored in a database, a file system or other form of data storage system. Applications would query the data as needed. Stram processing is the processing of data in motion. It works on continuous data retrieved from different resources. Instead of periodically collecting huge static data, streaming frameworks process data as soon as it becomes available, hence reducing latency. This thesis aims to conduct a comparative analysis of different streaming processors based on selected features. Research focuses on Apache Samza, Apache Flink, Apache Storm and Apache Spark Structured Streaming. Also, this thesis explains Apache Kafka which is a log-based data storage widely used in streaming frameworks. Keywords: Big Data, Stream Processing,Batch Processing,Streaming Engines, Apache Kafka, Apache Samza Suomenkielinen tiivistelmä: Big data-käsittelyjärjestelmät ovat tällä hetkellä kehittymässä stream-orientoituneiksi, eli data käsitellään heti saapuessaan. Perinteisemmin data säilöt- tiin tietokantaan, tiedostopohjaisesti tai muuhun tiedonsäilytysjärjestelmään, ja applikaatiot hakivat datan tarvittaessa. Stream-pohjainen järjestelmä käsittelee liikkuvaa dataa, jatkuva- aikaista dataa useasta lähteestä. Sen sijaan, että haetaan ajoittain dataa, stream-pohjaiset frameworkit pystyvät käsittelemään i dataa heti kun se on saatavilla, täten vähentäen viivettä. -

Network Traffic Profiling and Anomaly Detection for Cyber Security

Network traffic profiling and anomaly detection for cyber security Laurens D’hooge Student number: 01309688 Supervisors: Prof. dr. ir. Filip De Turck, dr. ir. Tim Wauters Counselors: Prof. dr. Bruno Volckaert, dr. ir. Tim Wauters A dissertation submitted to Ghent University in partial fulfilment of the requirements for the degree of Master of Science in Information Engineering Technology Academic year: 2017-2018 Acknowledgements This thesis is the result of 4 months work and I would like to express my gratitude towards the people who have guided me throughout this process. First and foremost I’d like to thank my thesis advisors prof. dr. Bruno Volckaert and dr. ir. Tim Wauters. By virtue of their knowledge and clear communication, I was able to maintain a clear target. Secondly I would like to thank prof. dr. ir. Filip De Turck for providing me the opportunity to conduct research in this field with the IDLab research group. Special thanks to Andres Felipe Ocampo Palacio and dr. Marleen Denert are in order as well. Mr. Ocampo’s Phd research into big data processing for network traffic and the resulting framework are an integral part of this thesis. Ms. Denert has been the go-to member of the faculty staff for general advice and administrative dealings. The final token of gratitude I’d like to extend to my family and friends for their continued support during this process. Laurens D’hooge Network traffic profiling and anomaly detection for cyber security Laurens D’hooge Supervisor(s): prof. dr. ir. Filip De Turck, dr. ir. Tim Wauters Abstract— This article is a short summary of the research findings of a creation of APT2. -

Progress Software Introduces a New Generation of the Apama Capital Markets Foundation

June 22, 2010 Progress Software Introduces a New Generation of the Apama Capital Markets Foundation Apama Capital Markets Foundation Enhances CEP Platform With Reusable Services That Enable Developers to Help Quant Traders Become More Successful NEW YORK, NY and BEDFORD, MA, Jun 22, 2010 (MARKETWIRE via COMTEX News Network) -- SIFMA - Progress Software Corporation (NASDAQ: PRGS), a leading software provider that enables enterprises to be operationally responsive, today announced the launch and immediate availability of a new generation of its Progress(R) Apama(R) Capital Markets Foundation. The Apama Capital Markets Foundation builds on the award-winning Apama Complex Event Processing platform by providing a powerful set of pre-built capabilities for developers creating a broad range of capital markets trading applications. The new version includes new functionality and across-the-board feature enhancements that deliver significant improvements in performance and productivity. As a result, developers can enable quantitative and systematic traders to more rapidly create trading strategies and platforms that are highly competitive and differentiated, as well as create highly flexible market surveillance and risk management applications. Most importantly, these enhancements help developers significantly improve the way traders can research, design and implement their own strategies. The new Apama Capital Markets Foundation is built in the form of easily configurable building blocks and includes the following capabilities: 1. A new market data architecture, which provides an increase in performance and flexibility in the processing of cross-asset market data. The architecture makes optimal use of the patented Apama parallel event processing engine that can scale to hundreds of thousands of market data updates per second. -

Distributed Complex Event Processing in Multiclouds

Distributed Complex Event Processing in Multiclouds Vassilis Stefanidis1, Yiannis Verginadis1, Ioannis Patiniotakis1 and Gregoris Mentzas1 1 Institute of Communications and Computer Systems, National Technical University of Ath- ens, Greece {stefanidis, jverg, ipatini, gmentzas}@mail.ntua.gr Abstract. The last few years, the generation of vast amounts of heterogeneous data with different velocity and veracity and the requirement to process them, has significantly challenged the computational capacity and efficiency of the modern infrastructural resources. The propagation of Big Data among different pro- cessing and storage architectures, has amplified the need for adequate and cost- efficient infrastructures to host them. An overabundance of cloud service offer- ings is currently available and is being rapidly adopted by small and medium enterprises based on its many benefits to traditional computing models. However, at the same time the Big Data computing requirements pose new research chal- lenges that question the adoption of single cloud provider resources. Nowadays, we discuss the emerging data-intensive applications that necessitate the wide adoption of multicloud deployment models, in order to use all the advantages of cloud computing. A key tool for managing such multicloud applications and guarantying their quality of service, even in extreme scenarios of workload fluc- tuations, are adequate distributed monitoring mechanisms. In this work, we dis- cuss a distributed complex event processing architecture that follows automati- cally the big data application deployment in order to efficiently monitor its health status and detect reconfiguration opportunities. This proposal is examined against an illustrative scenario and is preliminary evaluated for revealing its performance results. Keywords: Distributed CEP, Cloud Monitoring, Multiclouds, Big Data. -

Apama 5.3 Readme

Apama 5.3.0.0 Readme Version 5.3.0.0 Ports: All Date: April 2015 -------------------------------------------------------------------------------- CONTENTS ======== 1. Release Notes for 5.3.0.0 2. Customer Reported Defects Fixed in 5.3.0.0 and previous releases 3. Other Defects Fixed in 5.3.0.0 4. Copyright Notices 5. Support For installation information, see "Installing Apama" (installing_apama.pdf in the downloaded package). Adobe Acrobat Reader version 8.0 or greater is required to view the included PDF documentation. ------------------------------ 1. Release Notes for 5.3.0.0 ------------------------------ a. ADBC PAM-10573 : Some supported databases store empty strings as NULL (since 5.3.0.0) ================================================================================ Some databases such as Oracle are known to store empty strings as NULL values, which can lead to confusion when executing queries where a field is compared to the empty string. For example: select * from table where field = "" Would not match rows where the field was null for such databases. To ensure the desired results are returned when running queries against databases that store empty strings as null, we recommend queries to be written to check for NULL instead of empty string literals. However, despite this limitation in the underlying database, the supplied ODBC driver for Oracle includes special logic to allow queries to use empty strings (though this does not apply to the supplied JDBC driver, or to most other 3rd party Oracle drivers). PAM-21466 : Bundled Oracle JDBC driver can incorrectly report Oracle Advanced Security errors (since 5.2.0.0) ================================================================================ Even when Oracle Advanced Security is not turned on for the server, the following error can occur during connect: [Oracle JDBC Driver]The connect attempt failed because the server requires Oracle Advanced Security. -

Efficient Pattern Matching Over Event Streams

Efficient Pattern Matching over Event Streams ∗ Jagrati Agrawal, Yanlei Diao, Daniel Gyllstrom, and Neil Immerman Department of Computer Science University of Massachusetts Amherst Amherst, MA, USA fjagrati, yanlei, dpg, [email protected] ABSTRACT against complex patterns and the events used to match each Pattern matching over event streams is increasingly being pattern are transformed into new events for output. Re- employed in many areas including financial services, RFID- cently, such pattern matching over streams has aroused sig- based inventory management, click stream analysis, and elec- nificant interest in industry [28, 30, 29, 9] due to its wide tronic health systems. While regular expression matching applicability in areas such as financial services [10], RFID- is well studied, pattern matching over streams presents two based inventory management [31], click stream analysis [26], new challenges: Languages for pattern matching over streams and electronic health systems [16]. In financial services, for are significantly richer than languages for regular expression instance, a brokerage customer may be interested in a se- matching. Furthermore, efficient evaluation of these pattern quence of stock trading events that represent a new market queries over streams requires new algorithms and optimiza- trend. In RFID-based tracking and monitoring, applica- tions: the conventional wisdom for stream query processing tions may want to track valid paths of shipments and detect (i.e., using selection-join-aggregation) is inadequate. anomalies such as food contamination in supply chains. In this paper, we present a formal evaluation model that While regular expression matching is a well studied com- offers precise semantics for this new class of queries and a puter science problem [17], pattern matching over streams query evaluation framework permitting optimizations in a presents two new challenges: principled way. -

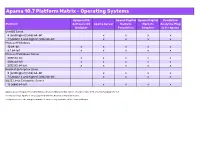

Apama 10.7 Supported Platforms.Xlsx

Apama 10.7 Platform Matrix - Operating Systems Apama with Apama Capital Apama Capital Predictive Platform Software AG Apama Server Markets Markets Analytics Plug- Designer Foundation Adapters in for Apama CentOS Linux 8 (and higher) (x86) 64-bit x x x x 7 (Update 2 and higher) (x86) 64-bit x x x x Microsoft Windows 10 64-bit x x x x x 8.1 64-bit x x x x x Microsoft Windows Server 2019 64-bit x x x x x 2016 64-bit x x x x x 2012 R2 64-bit x x x x x Red Hat Enterprise Linux 8 (and higher) (x86) 64-bit x x x x 7 (Update 2 and higher) (x86) 64-bit x x x x SUSE Linux Enterprise Server 12 (x86) 64-bit x x x x Apama does not support Security Enhanced Linux (SELinux). This option should be turned off on Linux for Apama to run. For Oracle Linux, Apama is only supported with the Red Hat Compatible Kernel. For Apama server, the compiled runtime feature is only available on the Linux platforms. Apama 10.7 Platform Matrix - Compilers Microsoft Visual Studio GCC GCC Platform 2015 Update 3 4.8 8.2 (C++) CentOS Linux 8 (and higher) (x86) 64-bit x 7 (Update 2 and higher) (x86) 64-bit x Microsoft Windows 10 64-bit x 8.1 64-bit x Microsoft Windows Server 2019 64-bit x 2016 64-bit x 2012 R2 64-bit x Red Hat Enterprise Linux 8 (and higher) (x86) 64-bit x 7 (Update 2 and higher) (x86) 64-bit x SUSE Linux Enterprise Server 12 (x86) 64-bit x Compilers are relevant for Apama when creating C++ plug-ins and applications using the C++ client API. -

Progress Software Introduces Apama 4.3 for Faster Deployment and Massive Scalability for Event Processing Applications

November 17, 2010 Progress Software Introduces Apama 4.3 for Faster Deployment and Massive Scalability for Event Processing Applications New and Unique Functionality Includes Combination of Discrete and Streaming Events BEDFORD, MA -- (MARKET WIRE) -- 11/17/10 -- Progress Software Corporation (NASDAQ: PRGS), a leading independent enterprise software provider that enables companies to be operationally responsive, today announced the availability of Progress® Apama® 4.3, a major new release of the market leading Progress Apama Event Processing platform. This new version includes a next-generation Event Processing Language (EPL) optimized for the concise expression of business and temporal logic. Moreover, events can be captured as a stream with common attributes, giving developers and business users greater scalability and more control over how they can sense and respond to events. This is the third major product release of the Apama Event Processing platform in two years from Progress Software, demonstrating the company's commitment to leading research, innovation and development in this important area. The Apama development team remains steadfast in its focus on enhancing productivity, improving performance and increasing integration with innovative language features, new dashboard functionality and massive scalability. Enhancements in the Apama 4.3 platform's Event Processing Language (EPL), formerly known as MonitorScript, support sophisticated event stream management, and are the result of more than 10 person years of R&D effort. The new capabilities provide developers and business users with a simplified but extremely powerful syntax that allows "event stream networks" to be defined with common attributes, giving them more direct control over their critical business events. -

A Complex Event Processing Engine Based on Data Stream

ReCEPtor: Sensing Complex Events in Data Streams for Service-Oriented Architectures Mingzhu Wei, Ismail Ari, Jun Li, Mohamed Dekhil Digital Printing and Imaging Laboratory HP Laboratories Palo Alto HPL-2007-176 November 2, 2007* data stream, complex All mission-critical applications read or generate raw data streams and require event processing, real-time processing of these streams to collect statistics, control flow and detect SOA, query plan, abnormal patterns. A trend which has gained strong momentum in different EPL industry sectors is the use of Complex Event Processing (CEP) over streams for all critical business processes, thus pushing its span beyond military and financial applications. A parallel trend has been the re-architecting of existing business processes with Service Oriented Architecture (SOA) principals to provide integration and interoperability. This requires use of middleware that incorporates web services, Business Process Management (BPM) systems or an Enterprise Service Bus (ESB), and in some cases Business Rule Engines (BRE). There is a gap between the new generation of business processes which desperately need CEP and the proposed CEP engines that were not built with SOA in mind. These engines either don’t support the flexible, dynamic and distributed business applications deployed in SOA or they try to merge the middleware with the CEP engine as one big proprietary package. This paper describes the design and implementation of our CEP engine called ReCEPtor, which can sense complex events in data streams in real-time. Our modular architecture describes how to integrate ReCEPtor with different business applications in different platforms either directly or by using an off-the-shelf BPM system and a platform adapter. -

Introduction to Apama

Introduction to Apama 9.9.0 October 2015 This document applies to Apama 9.9.0 and to all subsequent releases. Specifications contained herein are subject to change and these changes will be reported in subsequent release notes or new editions. Copyright © 2013-2015 Software AG, Darmstadt, Germany and/or Software AG USA Inc., Reston, VA, USA, and/or its subsidiaries and/or its affiliates and/or their licensors. The name Software AG and all Software AG product names are either trademarks or registered trademarks of Software AG and/or Software AG USA Inc. and/or its subsidiaries and/or its affiliates and/or their licensors. Other company and product names mentioned herein may be trademarks of their respective owners. Detailed information on trademarks and patents owned by Software AG and/or its subsidiaries is located at http://softwareag.com/licenses. Use of this software is subject to adherence to Software AG's licensing conditions and terms. These terms are part of the product documentation, located at http://softwareag.com/licenses/ and/or in the root installation directory of the licensed product(s). This software may include portions of third-party products. For third-party copyright notices, license terms, additional rights or restrictions, please refer to "License Texts, Copyright Notices and Disclaimers of Third Party Products". For certain specific third-party license restrictions, please refer to section E of the Legal Notices available under "License Terms and Conditions for Use of Software AG Products / Copyright and Trademark Notices of Software AG Products". These documents are part of the product documentation, located at http://softwareag.com/licenses and/or in the root installation directory of the licensed product(s).