15: Miscellaneous Parsing


Recommended publications

Layout-Sensitive Generalized Parsing

Layout-sensitive Generalized Parsing. Sebastian Erdweg, Tillmann Rendel, Christian Kästner, and Klaus Ostermann. University of Marburg, Germany.

Abstract. The theory of context-free languages is well-understood and context-free parsers can be used as off-the-shelf tools in practice. In particular, to use a context-free parser framework, a user does not need to understand its internals but can specify a language declaratively as a grammar. However, many languages in practice are not context-free. One particularly important class of such languages is layout-sensitive languages, in which the structure of code depends on indentation and whitespace. For example, Python, Haskell, F#, and Markdown use indentation instead of curly braces to determine the block structure of code. Their parsers (and lexers) are not declaratively specified but hand-tuned to account for layout-sensitivity.

To support declarative specifications of layout-sensitive languages, we propose a parsing framework in which a user can annotate layout in a grammar. Annotations take the form of constraints on the relative positioning of tokens in the parsed subtrees. For example, a user can declare that a block consists of statements that all start on the same column. We have integrated layout constraints into SDF and implemented a layout-sensitive generalized parser as an extension of generalized LR parsing. We evaluate the correctness and performance of our parser by parsing 33 290 open-source Haskell files. Layout-sensitive generalized parsing is easy to use, and its performance overhead compared to layout-insensitive parsing is small enough for practical application.

1 Introduction. Most computer languages prescribe a textual syntax.
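The same-column constraint mentioned in the abstract can be illustrated with a minimal Python sketch. It does not use SDF or the authors' parser: `Stmt`, `statements_of`, and `block_is_aligned` are hypothetical names invented for this illustration, and the sketch assumes that each non-blank line is one statement indented with spaces.

```python
from typing import List, NamedTuple, Sequence


class Stmt(NamedTuple):
    """A statement together with the position of its first token (hypothetical type)."""
    text: str
    line: int
    col: int


def block_is_aligned(stmts: Sequence[Stmt]) -> bool:
    """Return True when every statement in the block starts on the same column."""
    return len({s.col for s in stmts}) <= 1


def statements_of(source: str) -> List[Stmt]:
    """Turn each non-blank line into a Stmt, using its indentation as the column.

    Assumption: one statement per line, indentation made of spaces only.
    """
    result = []
    for line_no, raw in enumerate(source.splitlines(), start=1):
        if raw.strip():
            col = len(raw) - len(raw.lstrip(" "))
            result.append(Stmt(raw.strip(), line_no, col))
    return result


good = statements_of("  x = 1\n  y = 2\n  z = x + y")
bad = statements_of("  x = 1\n    y = 2\n  z = x + y")
print(block_is_aligned(good))  # every statement starts on the same column
print(block_is_aligned(bad))   # the second statement is indented further
```

In a layout-sensitive parser, a check of this kind would presumably be evaluated on candidate subtrees rather than on raw lines, so that a parse violating the constraint can be rejected.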

Parse Forest Diagnostics with Dr. Ambiguity

Parse Forest Diagnostics with Dr. Ambiguity. H. J. S. Basten and J. J. Vinju. Centrum Wiskunde & Informatica (CWI), Science Park 123, 1098 XG Amsterdam, The Netherlands. {Jurgen.Vinju,Bas.Basten}@cwi.nl

Abstract. In this paper we propose and evaluate a method for locating causes of ambiguity in context-free grammars by automatic analysis of parse forests. A parse forest is the set of parse trees of an ambiguous sentence. Deducing causes of ambiguity from observing parse forests is hard for grammar engineers because of (a) the size of the parse forests, (b) the complex shape of parse forests, and (c) the diversity of causes of ambiguity. We first analyze the diversity of ambiguities in grammars for programming languages and the diversity of solutions to these ambiguities. Then we introduce Dr. Ambiguity: a parse forest diagnostics tool that explains the causes of ambiguity by analyzing differences between parse trees and proposes solutions. We demonstrate its effectiveness using a small experiment with a grammar for Java 5.

1 Introduction. This work is motivated by the use of parsers generated from general context-free grammars (CFGs). General parsing algorithms such as GLR and derivatives [35,9,3,6,17], GLL [34,22], and Earley [16,32] support parser generation for highly non-deterministic context-free grammars. The advantages of constructing parsers using such technology are that grammars may be modular and that real programming languages (often requiring parser non-determinism) can be dealt with efficiently.

Formal Languages - 3

Formal Languages - 3

- Ambiguity in PLs
  - Problems with if-then-else constructs
  - Harder problems
- Chomsky hierarchy for formal languages
  - Regular and context-free languages
  - Type 1: Context-sensitive languages
  - Type 0 languages and Turing machines

Formal Languages-3, CS314 Fall 01 © BGRyder

Dangling Else Ambiguity (Pascal)

    Start  ::= Stmt
    Stmt   ::= Ifstmt | Astmt
    Ifstmt ::= IF LogExp THEN Stmt | IF LogExp THEN Stmt ELSE Stmt
    Astmt  ::= Id := Digit
    Digit  ::= 0|1|2|3|4|5|6|7|8|9
    LogExp ::= Id = 0
    Id     ::= a|b|c|d|e|f|g|h|i|j|k|l|m|n|o|p|q|r|s|t|u|v|w|x|y|z

How are compound if statements parsed using this grammar?

    IF x = 0 THEN IF y = 0 THEN z := 1 ELSE w := 2;

[Parse Tree 1: the outer Ifstmt is IF LogExp THEN Stmt, and its Stmt is the inner Ifstmt IF y = 0 THEN z := 1 ELSE w := 2, so the ELSE belongs to the inner IF.]

[Parse Tree 2: the outer Ifstmt is IF LogExp THEN Stmt ELSE Stmt, with the inner Ifstmt IF y = 0 THEN z := 1 as its THEN branch and w := 2 as its ELSE branch, so the ELSE belongs to the outer IF.]

Q: which tree is correct?

How to Solve the Dangling Else?

- Algol60: use block structure: if x = 0 then begin if y = 0 then z := 1 end else w := 2
- Algol68: use statement begin/end markers: if x = 0 then if y = 0 then z := 1 fi else w := 2 fi
- Pascal: change the if statement grammar to disallow parse tree 2; that is, always associate an else with the closest if

New Pascal Grammar

    Start  ::= Stmt
    Stmt   ::= Stmt1 | Stmt2
    Stmt1  ::= IF LogExp THEN Stmt1 ELSE Stmt1 | Astmt
    Stmt2  ::= IF LogExp THEN Stmt | IF LogExp THEN Stmt1 ELSE Stmt2
    Astmt  ::= Id := Digit
    Digit  ::= 0|1|2|3|4|5|6|7|8|9
    LogExp ::= Id = 0
    Id     ::= a|b|c|d|e|f|g|h|i|j|k|l|m|n|o|p|q|r|s|t|u|v|w|x|y|z

Note: only if statements with IF..THEN..ELSE are allowed after the THEN clause of an IF-THEN-ELSE statement.
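The effect of the grammar rewrite can be checked mechanically by counting parse trees. The following Python sketch is an illustration only, not part of the original slides: `count_trees` is a hypothetical helper, the token `if` abbreviates "IF LogExp THEN", the token `a` abbreviates an assignment, and the counter assumes a grammar with no empty productions and no left recursion.

```python
from functools import lru_cache


def count_trees(grammar, start, tokens):
    """Count the parse trees of `tokens` for nonterminal `start`.

    `grammar` maps each nonterminal to a list of right-hand sides (tuples).
    Assumption: no empty productions and no left recursion, so every symbol
    covers at least one token and the recursion terminates.
    """
    tokens = tuple(tokens)

    @lru_cache(maxsize=None)
    def sym(symbol, i, j):
        # Number of ways `symbol` derives tokens[i:j].
        if symbol not in grammar:  # terminal symbol
            return 1 if j == i + 1 and tokens[i] == symbol else 0
        return sum(seq(rhs, i, j) for rhs in grammar[symbol])

    @lru_cache(maxsize=None)
    def seq(rhs, i, j):
        # Number of ways the symbol sequence `rhs` derives tokens[i:j].
        if not rhs:
            return 1 if i == j else 0
        head, rest = rhs[0], rhs[1:]
        return sum(sym(head, i, k) * seq(rest, k, j) for k in range(i + 1, j + 1))

    return sym(start, 0, len(tokens))


# Original grammar: the else may attach to either if.
AMBIGUOUS = {
    "Stmt": [("if", "Stmt"), ("if", "Stmt", "else", "Stmt"), ("a",)],
}

# Rewritten grammar: Stmt1 is a statement in which every if has an else.
REWRITTEN = {
    "Stmt": [("Stmt1",), ("Stmt2",)],
    "Stmt1": [("if", "Stmt1", "else", "Stmt1"), ("a",)],
    "Stmt2": [("if", "Stmt"), ("if", "Stmt1", "else", "Stmt2")],
}

# IF x = 0 THEN IF y = 0 THEN z := 1 ELSE w := 2
program = "if if a else a".split()
print(count_trees(AMBIGUOUS, "Stmt", program))  # 2
print(count_trees(REWRITTEN, "Stmt", program))  # 1
```

The two trees counted for the original grammar correspond to Parse Tree 1 and Parse Tree 2; the rewritten grammar keeps only the tree in which the else attaches to the closest if.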

Ordered Sets in the Calculus of Data Structures

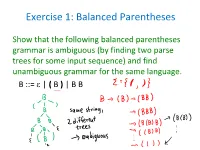

Exercise 1: Balanced Parentheses

Show that the following balanced parentheses grammar is ambiguous (by finding two parse trees for some input sequence) and find an unambiguous grammar for the same language.

    B ::= | ( B ) | B B

Remark

- The same parse tree can be derived using two different derivations, e.g.

      B -> (B) -> (BB) -> ((B)B) -> ((B)) -> (())
      B -> (B) -> (BB) -> ((B)B) -> (()B) -> (())

  This corresponds to different orders in which nodes in the tree are expanded.
- Ambiguity refers to the fact that there are actually multiple parse trees, not just multiple derivations.

Towards Solution

- (Note that we must preserve precisely the set of strings that can be derived)
- This grammar:

      B ::= | A
      A ::= ( ) | A A | (A)

  solves the problem with multiple symbols generating different trees, but it is still ambiguous: string ( ) ( ) ( ) has two different parse trees

Solution

- Proposed solution: B ::= | B (B)
- this is very smart! How to come up with it?
- Clearly, rule B ::= B B generates any sequence of B's. We can also encode it like this:

      B ::= C*
      C ::= (B)

- Now we express the sequence using a recursive rule that does not create ambiguity:

      B ::= | C B
      C ::= (B)

- but now, look, we "inline" C back into the rules for B, so we get exactly the rule B ::= | B (B)

This grammar is not ambiguous and is the solution. We did not prove this fact (we only tried to find ambiguous trees but did not find any).

Exercise 2: Dangling Else

The dangling-else problem happens when the conditional statements are parsed using the following grammar.

    S ::= S ; S
    S ::= id := E
    S ::= if E then S
    S ::= if E then S else S

Find an unambiguous grammar that accepts the same conditional statements and matches the else statement with the nearest unmatched if.
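The claims in Exercise 1 about the string ( ) ( ) ( ) can be checked by counting parse trees directly. The Python sketch below is an illustration rather than part of the exercise: `count_a` follows the intermediate grammar A ::= ( ) | A A | (A), and `count_b` follows the proposed grammar B ::= | B (B). Both function names are hypothetical. As the exercise itself notes, finding a single tree for sample strings is evidence of unambiguity, not a proof.

```python
from functools import lru_cache


@lru_cache(maxsize=None)
def count_a(s: str) -> int:
    """Parse trees of s under A ::= ( ) | A A | (A)."""
    if not s:
        return 0  # A never derives the empty string
    total = 1 if s == "()" else 0                # A ::= ( )
    for k in range(1, len(s)):                   # A ::= A A, split after k characters
        total += count_a(s[:k]) * count_a(s[k:])
    if len(s) >= 2 and s[0] == "(" and s[-1] == ")":
        total += count_a(s[1:-1])                # A ::= (A)
    return total


@lru_cache(maxsize=None)
def count_b(s: str) -> int:
    """Parse trees of s under B ::= | B (B)."""
    if not s:
        return 1  # B ::= (empty)
    if s[-1] != ")":
        return 0
    total = 0
    for i in range(len(s) - 1):                  # position of the "(" that opens the final group
        if s[i] == "(":
            total += count_b(s[:i]) * count_b(s[i + 1:-1])
    return total


print(count_a("()()()"))  # 2: the intermediate grammar is still ambiguous
print(count_b("()()()"))  # 1: the proposed grammar yields a single tree
```

The two trees found by `count_a` differ only in how the three groups are associated, ( ( ) ( ) ) ( ) versus ( ) ( ( ) ( ) ), which is exactly the choice that the left-recursive rule B ::= B (B) removes.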

14 A Simple Compiler - The Front End

Compilers and Compiler Generators © P.D. Terry, 2000

14 A SIMPLE COMPILER - THE FRONT END

At this point it may be of interest to consider the construction of a compiler for a simple programming language, specifically that of section 8.7. In a text of this nature it is impossible to discuss a full-blown compiler, and the value of our treatment may arguably be reduced by the fact that in dealing with toy languages and toy compilers we shall be evading some of the real issues that a compiler writer has to face. However, we hope the reader will find the ensuing discussion of interest, and that it will serve as a useful preparation for the study of much larger compilers. The technique we shall follow is one of slow refinement, supplementing the discussion with numerous asides on the issues that would be raised in compiling larger languages. Clearly, we could opt to develop a completely hand-crafted compiler, or simply to use a tool like Coco/R. We shall discuss both approaches. Even when a compiler is constructed by hand, having an attributed grammar to describe it is very worthwhile.

On the source diskette can be found a great deal of code, illustrating different stages of development of our system. Although some of this code is listed in appendices, its volume precludes printing all of it. Some of it has deliberately been written in a way that allows for simple modification when attempting the exercises, and is thus not really of "production quality". For example, in order to allow components such as the symbol table handler and code generator to be used either with hand-crafted or with Coco/R generated systems, some compromises in design have been necessary.

Ambiguity Detection Methods for Context-Free Grammars

Ambiguity Detection Methods for Context-Free Grammars. H.J.S. Basten. Master's thesis, August 17, 2007. One Year Master Software Engineering, Universiteit van Amsterdam. Thesis and Internship Supervisor: Prof. Dr. P. Klint. Institute: Centrum voor Wiskunde en Informatica. Availability: public domain.

Contents

- Abstract
- Preface
- 1 Introduction
  - 1.1 Motivation
  - 1.2 Scope
  - 1.3 Criteria for practical usability
  - 1.4 Existing ambiguity detection methods
  - 1.5 Research questions
  - 1.6 Thesis overview
- 2 Background and context
  - 2.1 Grammars and languages
  - 2.2 Ambiguity in context-free grammars
  - 2.3 Ambiguity detection for context-free grammars
- 3 Current ambiguity detection methods
  - 3.1 Gorn
  - 3.2 Cheung and Uzgalis
  - 3.3 AMBER
  - 3.4 Jampana
  - 3.5 LR(k) test
  - 3.6 Brabrand, Giegerich and Møller
  - 3.7 Schmitz
  - 3.8 Summary
- 4 Research method
  - 4.1 Measurements
    - 4.1.1 Accuracy
    - 4.1.2 Performance
    - 4.1.3 Termination
    - 4.1.4 Usefulness of the output
  - 4.2 Analysis
    - 4.2.1 Scalability
    - 4.2.2 Practical usability
  - 4.3 Investigated ADM implementations
- 5 Analysis of AMBER
  - 5.1 Accuracy
  - 5.2 Performance
  - 5.3 Termination
  - 5.4 Usefulness of the output
  - 5.5 Analysis
- 6 Analysis of MSTA
  - 6.1 Accuracy
  - 6.2 Performance
  - 6.3 Termination
  - 6.4 Usefulness of the output
  - 6.5 Analysis

Disambiguation Filters for Scannerless Generalized LR Parsers

Disambiguation Filters for Scannerless Generalized LR Parsers. Mark G. J. van den Brand (1,4), Jeroen Scheerder (2), Jurgen J. Vinju (1), and Eelco Visser (3).

(1) Centrum voor Wiskunde en Informatica (CWI), Kruislaan 413, 1098 SJ Amsterdam, The Netherlands. {Mark.van.den.Brand,Jurgen.Vinju}@cwi.nl
(2) Department of Philosophy, Utrecht University, Heidelberglaan 8, 3584 CS Utrecht, The Netherlands. [email protected]
(3) Institute of Information and Computing Sciences, Utrecht University, P.O. Box 80089, 3508TB Utrecht, The Netherlands. [email protected]
(4) LORIA-INRIA, 615 rue du Jardin Botanique, BP 101, F-54602 Villers-lès-Nancy Cedex, France

Abstract. In this paper we present the fusion of generalized LR parsing and scannerless parsing. This combination supports syntax definitions in which all aspects (lexical and context-free) of the syntax of a language are defined explicitly in one formalism. Furthermore, there are no restrictions on the class of grammars, thus allowing a natural syntax tree structure. Ambiguities that arise through the use of unrestricted grammars are handled by explicit disambiguation constructs, instead of implicit defaults that are taken by traditional scanner and parser generators. Hence, a syntax definition becomes a full declarative description of a language. Scannerless generalized LR parsing is a viable technique that has been applied in various industrial and academic projects.

1 Introduction. Since the introduction of efficient deterministic parsing techniques, parsing is considered a closed topic for research, both by computer scientists and by practitioners in compiler construction. Tools based on deterministic parsing algorithms such as LEX & YACC [15,11] (LALR) and JavaCC (recursive descent), are considered adequate for dealing with almost all modern (programming) languages.

Introduction to Programming Languages and Syntax

Programming Languages. Session 1 – Main Theme: Programming Languages Overview & Syntax. Dr. Jean-Claude Franchitti, New York University, Computer Science Department, Courant Institute of Mathematical Sciences. Adapted from course textbook resources: Programming Language Pragmatics (3rd Edition), Michael L. Scott, Copyright © 2009 Elsevier.

Agenda

1. Instructor and Course Introduction
2. Introduction to Programming Languages
3. Programming Language Syntax
4. Conclusion

Who am I? - Profile -

- 27 years of experience in the Information Technology Industry, including thirteen years of experience working for leading IT consulting firms such as Computer Sciences Corporation
- PhD in Computer Science from University of Colorado at Boulder
- Past CEO and CTO
- Held senior management and technical leadership roles in many large IT Strategy and Modernization projects for fortune 500 corporations in the insurance, banking, investment banking, pharmaceutical, retail, and information management industries
- Contributed to several high-profile ARPA and NSF research projects
- Played an active role as a member of the OMG, ODMG, and X3H2 standards committees and as a Professor of Computer Science at Columbia initially and New York University since 1997
- Proven record of delivering business solutions on time and on budget
- Original designer and developer of jcrew.com and the suite of products now known as IBM InfoSphere DataStage
- Creator of the Enterprise

CS4200 | Compiler Construction | September 10, 2020 Disambiguation and Layout-Sensitive Syntax

Disambiguation and Layout-Sensitive Syntax. Eelco Visser. CS4200 | Compiler Construction | September 10, 2020.

Syntax Definition Summary

- Derivations - Generating sentences and trees from context-free grammars
- Ambiguity
- Declarative Disambiguation Rules - Associativity and priority
- Grammar Transformations - Eliminating ambiguity by transformation
- Layout-Sensitive Syntax - Disambiguation using layout constraints

Structure

Syntax = Structure

    module structure
    imports Common
    context-free start-symbols Exp
    context-free syntax
      Exp.Var = ID
      Exp.Int = INT
      Exp.Add = Exp "+" Exp
      Exp.Fun = "function" "(" {ID ","}* ")" "{" Exp "}"
      Exp.App = Exp "(" {Exp ","}* ")"
      Exp.Let = "let" Bnd* "in" Exp "end"
      Bnd.Bnd = ID "=" Exp

Example program:

    let inc = function(x) { x + 1 }
    in inc(3)
    end

Its abstract syntax tree:

    Let(
      [ Bnd( "inc"
           , Fun(["x"], Add(Var("x"), Int("1"))) )
      ]
    , App(Var("inc"), [Int("3")])
    )

Token = Character

    module Common
    lexical syntax
      ID  = [a-zA-Z] [a-zA-Z0-9]*
      INT = [\-]? [0-9]+

Lexical Syntax = Context-Free Syntax (But we don’t care about structure of lexical syntax)

Literal = Non-Terminal

Programming Languages

Programming Languages. CSCI-GA.2110-001, Summer 2011. Dr. Cory Plock.

What this course is

- A study of programming language paradigms
  - Imperative
  - Functional
  - Logical
  - Object-oriented
- Tour of programming language history & roots.
- Introduction to core language design & implementation concepts.
- Exposure to new languages you may not have used before.
- Ability to reason about language benefits/pitfalls.
- A look at programming language implementation.
- Offers an appreciation of language standards.
- Provides the ability to more quickly learn new languages.

What this course isn’t

- A comprehensive study of one or more languages.
- An exercise in learning as many languages as possible.
- A software engineering course.
- A compiler course.

Introduction

The main themes of programming language design and use:

- Paradigm (Model of computation)
- Expressiveness
  - control structures
  - abstraction mechanisms
  - types and their operations
  - tools for programming in the large
- Ease of use: Writeability / Readability / Maintainability

Language as a tool for thought

- Role of language as a communication vehicle among programmers can be just as important as ease of writing
- All general-purpose languages are Turing complete (They can compute the same things)
- But languages can make expression of certain algorithms difficult or easy.
  - Try multiplying two Roman numerals
- Idioms in language A may be useful inspiration when writing in language B.

Idioms

- Copying a string q to p in C: while (*p++ = *q++) ;
- Removing duplicates

Context Free Languages and Pushdown Automata

Context Free Languages and Pushdown Automata. COMP2600: Formal Methods for Software Engineering. Ranald Clouston, Australian National University, Semester 2, 2013.

COMP 2600: Context Free Languages and Pushdown Automata

Parsing

The process of parsing a program is partly about confirming that a given program is well-formed – syntax. But it is also about representing the structure of the program so that it can be executed – semantics. For this purpose, the trail of sentential forms created en route to generating a given sentence is just as important as the question of whether the sentence can be generated or not.

The Semantics of Parses

Take the code

    if e1 then if e2 then s1 else s2

where e1, e2 are boolean expressions and s1, s2 are subprograms. Does this mean

    if e1 then (if e2 then s1 else s2)

or

    if e1 then (if e2 then s1) else s2

We’d better have an unambiguous way to tell which is right, or we cannot know what the program will do at runtime!

Ambiguity

Recall that we can present CFG derivations as parse trees. Until now this was mere pretty presentation; now it will become important. A context-free grammar G is unambiguous iff every string can be derived by at most one parse tree. G is ambiguous iff there exists any word w ∈ L(G) derivable by more than one parse tree.

Example: If-Then and If-Then-Else

Consider the CFG

    S → if bexp then S | if bexp then S else S | prog

where bexp and prog stand for boolean expressions and (if-statement free) programs respectively, defined elsewhere.

A Practical Tutorial on Context Free Grammars

A Practical Tutorial on Context Free Grammars. Robert B. Heckendorn, University of Idaho. September 16, 2020.

Contents

- 1 Some Definitions
- 2 Some Common Idioms and Hierarchical Development
  - 2.1 A grammar for a language that allows a list of X's
  - 2.2 A Language that is a List of X's or Y's
  - 2.3 A Language that is a List of X's Terminated with a Semicolon
  - 2.4 Hierarchical Complexification
  - 2.5 A Language Where There are not Terminal Semicolons but Separating Commas
  - 2.6 A language where each X is followed by a terminating semicolon
  - 2.7 An arg list of X's, Y's and Z's
  - 2.8 A Simple Type Declaration
  - 2.9 Augment the Type Grammar with the Keyword Static
  - 2.10 A Tree Structure as Nested Parentheses
- 3 Associativity and Precedence
  - 3.1 A Grammar for an Arithmetic Expression
  - 3.2 Grouping with Parentheses
  - 3.3 Unary operators
  - 3.4 An Example Problem
- 4 How can Things go Wrong
  - 4.1 Inescapable Productions
  - 4.2 Ambiguity
    - 4.2.1 Ambiguity by Ill-specified Associativity
    - 4.2.2 Ambiguity by Infinite Loop
    - 4.2.3 Ambiguity by Multiple Paths
- 5 The Dangling Else
- 6 The Power of Context Free and incomplete grammars
- 7 Tips for Grammar Writing
- 8 Extended BNF

A grammar is used to specify the syntax of a language. It answers the question: What sentences are in the language and what are not? A sentence is a finite sequence of symbols from the alphabet of the language.