Interpretation and Performance Practice in Realising Stockhausen's

Recommended publications

Expanding Horizons: the International Avant-Garde, 1962-75

452 ROBYNN STILWELL
Joplin, Janis. 'Me and Bobby McGee' (Columbia, 1971); 'Mercedes Benz' (Columbia, 1971)
Little Richard. 'Lucille' (Specialty, 1957); 'Tutti Frutti' (Specialty, 1955)
Lynn, Loretta. 'The Pill' (MCA, 1975); 'You Ain't Woman Enough to Take My Man' (MCA, 1966); 'Your Squaw Is On the Warpath' (Decca, 1969)
The Marvelettes. 'Please Mr. Postman' (Motown, 1961)
Matchbox Twenty. 'Damn' (Atlantic, 1996)
Nelson, Ricky. 'Hello, Mary Lou' (Imperial, 1958); 'Traveling Man' (Imperial, 1959)
Phair, Liz. 'Happy' (live, 1996)
Pickett, Wilson. 'In the Midnight Hour' (Atlantic, 1965)
Presley, Elvis. 'Hound Dog' (RCA, 1956)
The Ravens. 'Rock All Night Long' (Mercury, 1948)
Redding, Otis. 'Dock of the Bay' (Stax, 1968); 'Mr. Pitiful' (Stax, 1964); 'Respect' (Stax, 1965)
Simon and Garfunkel. 'A Simple Desultory Philippic' (Columbia, 1967)
Sinatra, Frank. In the Wee Small Hours (Capitol, 1954); Songs for Swinging Lovers (Capitol, 1955)
Surfaris. 'Wipe Out' (Decca, 1963)
The Temptations. 'Papa Was a Rolling Stone' (Motown, 1972)

17 Expanding horizons: the international avant-garde, 1962-75
RICHARD TOOP

Darmstadt after Steinecke

When Wolfgang Steinecke - the originator of the Darmstadt Ferienkurse - died at the end of 1961, much of the increasingly fragile spirit of collegiality within the Cologne/Darmstadt-centred avant-garde died with him. Boulez and Stockhausen in particular were already fiercely competitive, and when in 1960 Steinecke had assigned direction of the Darmstadt composition course to Boulez, Stockhausen had pointedly stayed away.1 Cage's work and significance was a constant source of acrimonious debate, and Nono's bitter opposition to him2 was one reason for the Italian composer being marginalized by the Cologne inner circle as a structuralist reactionary. -

Sonification As a Means to Generative Music Ian Baxter

Sonification as a means to generative music By: Ian Baxter A thesis submitted in partial fulfilment of the requirements for the degree of Doctor of Philosophy The University of Sheffield Faculty of Arts & Humanities Department of Music January 2020 Abstract This thesis examines the use of sonification (the transformation of non-musical data into sound) as a means of creating generative music (algorithmic music which is evolving in real time and is of potentially infinite length). It consists of a portfolio of ten works where the possibilities of sonification as a strategy for creating generative works are examined. As well as exploring the viability of sonification as a compositional strategy toward infinite work, each work in the portfolio aims to explore the notion of how artistic coherency between data and resulting sound is achieved – rejecting the notion that sonification for artistic means leads to the arbitrary linking of data and sound. In the accompanying written commentary the definitions of sonification and generative music are considered, as both are somewhat contested terms requiring operationalisation to correctly contextualise my own work. Having arrived at these definitions each work in the portfolio is documented. For each work, the genesis of the work is considered, the technical composition and operation of the piece (a series of tutorial videos showing each work in operation supplements this section) and finally its position in the portfolio as a whole and relation to the research question is evaluated. The body of work is considered as a whole in relation to the notion of artistic coherency. This is separated into two main themes: the relationship between the underlying nature of the data and the compositional scheme and the coherency between the data and the soundworld generated by each piece. -

Common Tape Manipulation Techniques and How They Relate to Modern Electronic Music

Common Tape Manipulation Techniques and How They Relate to Modern Electronic Music Matthew A. Bardin Experimental Music & Digital Media Center for Computation & Technology Louisiana State University Baton Rouge, Louisiana 70803 [email protected]

ABSTRACT
The purpose of this paper is to provide a historical context to some of the common schools of thought in regards to tape composition present in the later half of the 20th century. Following this, the author then discusses a variety of the more common techniques utilized to create these and other styles of music in detail as well as provides examples of various tracks in order to show each technique in process. In the following sections, the author then discusses some of the limitations of tape composition technologies and practices. Finally, the author puts the concepts discussed into a modern historical context by comparing the aspects of tape composition of the 20th century discussed previous to the composition done in Digital Audio recording and manipulation practices of the 21st century.

Author Keywords
tape, manipulation, history, hardware, software, music, examples, analog, digital

1.

the 'play head' was utilized to reverse the process and generate the output's audio signal [8]. Looking at figure 1, from museumofmagneticsoundrecording.org (Accessed: 03/20/2020), the locations of the heads can be noticed beneath the rectangular protective cover showing the machine's model in the middle of the hardware. Previous to the development of the reel-to-reel machine, electronic music was only achievable through live performances on instruments such as the Theremin and other early predecessors to the modern synthesizer. [11, p. 173] -

Applied Tape Techniques for Use with Electronic Music Synthesizers. Robert Bruce Greenleaf Louisiana State University and Agricultural & Mechanical College

Louisiana State University LSU Digital Commons LSU Historical Dissertations and Theses Graduate School 1974 Applied Tape Techniques for Use With Electronic Music Synthesizers. Robert Bruce Greenleaf Louisiana State University and Agricultural & Mechanical College Follow this and additional works at: https://digitalcommons.lsu.edu/gradschool_disstheses Part of the Music Commons Recommended Citation Greenleaf, Robert Bruce, "Applied Tape Techniques for Use With Electronic Music Synthesizers." (1974). LSU Historical Dissertations and Theses. 8157. https://digitalcommons.lsu.edu/gradschool_disstheses/8157 This Dissertation is brought to you for free and open access by the Graduate School at LSU Digital Commons. It has been accepted for inclusion in LSU Historical Dissertations and Theses by an authorized administrator of LSU Digital Commons. For more information, please contact [email protected]. APPLIED TAPE TECHNIQUES FOR USE WITH ELECTRONIC MUSIC SYNTHESIZERS A Monograph Submitted to the Graduate Faculty of the Louisiana State University and Agricultural and Mechanical College in partial fulfillment of the Doctor of Musical Arts In The School of Music by Robert Bruce Greenleaf M.M., Louisiana State University, 1972 August, 1974 UMI Number: DP69544 All rights reserved INFORMATION TO ALL USERS The quality of this reproduction is dependent upon the quality of the copy submitted. In the unlikely event that the author did not send a complete manuscript and there are missing pages, these will be noted. Also, if material had to be removed, a note will indicate the deletion. UMI Dissertation Publishing UMI DP69544 Published by ProQuest LLC (2015). Copyright in the Dissertation held by the Author. Microform Edition © ProQuest LLC. -

A Symphonic Poem on Dante's Inferno and a Study on Karlheinz Stockhausen and His Effect on the Trumpet

Louisiana State University LSU Digital Commons LSU Doctoral Dissertations Graduate School 2008 A Symphonic Poem on Dante's Inferno and a study on Karlheinz Stockhausen and his effect on the trumpet Michael Joseph Berthelot Louisiana State University and Agricultural and Mechanical College, [email protected] Follow this and additional works at: https://digitalcommons.lsu.edu/gradschool_dissertations Part of the Music Commons Recommended Citation Berthelot, Michael Joseph, "A Symphonic Poem on Dante's Inferno and a study on Karlheinz Stockhausen and his effect on the trumpet" (2008). LSU Doctoral Dissertations. 3187. https://digitalcommons.lsu.edu/gradschool_dissertations/3187 This Dissertation is brought to you for free and open access by the Graduate School at LSU Digital Commons. It has been accepted for inclusion in LSU Doctoral Dissertations by an authorized graduate school editor of LSU Digital Commons. For more information, please contact [email protected]. A SYMPHONIC POEM ON DANTE’S INFERNO AND A STUDY ON KARLHEINZ STOCKHAUSEN AND HIS EFFECT ON THE TRUMPET A Dissertation Submitted to the Graduate Faculty of the Louisiana State University and Agriculture and Mechanical College in partial fulfillment of the requirements for the degree of Doctor of Philosophy in The School of Music by Michael J Berthelot B.M., Louisiana State University, 2000 M.M., Louisiana State University, 2006 December 2008 Jackie ii ACKNOWLEDGEMENTS I would like to thank Dinos Constantinides most of all, because it was his constant support that made this dissertation possible. His patience in guiding me through this entire process was remarkable. It was Dr. Constantinides who taught me great things about composition, music, and life. -

Latin American NIMEs: Electronic Musical Instruments and Experimental Sound Devices in the Twentieth Century

Latin American NIMEs: Electronic Musical Instruments and Experimental Sound Devices in the Twentieth Century Martín Matus Lerner Desarrollos Tecnológicos Aplicados a las Artes EUdA, Universidad Nacional de Quilmes Buenos Aires, Argentina [email protected]

ABSTRACT
During the twentieth century several Latin American nations (such as Argentina, Brazil, Chile, Cuba and Mexico) have originated relevant antecedents in the NIME field. Their innovative authors have interrelated musical composition, lutherie, electronics and computing. This paper provides a panoramic view of their original electronic instruments and experimental sound practices, as well as a perspective of them regarding other inventions around the World.

Author Keywords
Latin America, music and technology history, synthesizer, drawn sound, luthería electrónica.

CCS Concepts
• Applied computing → Sound and music

2. EARLY EXPERIENCES
2.1 The singing arc in Argentina
In 1900 William du Bois Duddell publishes an article in which he describes his experiments with “the singing arc”, one of the first electroacoustic musical instruments. Based on the carbon arc lamp (in common use until the appearance of the electric light bulb), the singing or speaking arc produces a high volume buzz which can be modulated by means of a variable resistor or a microphone [35]. Its functioning principle is present in later technologies such as plasma loudspeakers and microphones.

In 1909 German physicist Emil Bose assumes direction of the High School of Physics at the Universidad de La Plata. Within two years Bose turns this institution into a first-rate Department of Physics (pioneer in South America). On March 29th 1911 Bose presents the speaking arc at a science event motivated by the purchase of equipment and scientific instruments from the German company Max Kohl. -

AMRC Journal Volume 21

American Music Research Center Journal Volume 21 • 2012 Thomas L. Riis, Editor-in-Chief American Music Research Center College of Music University of Colorado Boulder The American Music Research Center Thomas L. Riis, Director Laurie J. Sampsel, Curator Eric J. Harbeson, Archivist Sister Dominic Ray, O. P. (1913–1994), Founder Karl Kroeger, Archivist Emeritus William Kearns, Senior Fellow Daniel Sher, Dean, College of Music Eric Hansen, Editorial Assistant Editorial Board C. F. Alan Cass Portia Maultsby Susan Cook Tom C. Owens Robert Fink Katherine Preston William Kearns Laurie Sampsel Karl Kroeger Ann Sears Paul Laird Jessica Sternfeld Victoria Lindsay Levine Joanne Swenson-Eldridge Kip Lornell Graham Wood The American Music Research Center Journal is published annually. Subscription rate is $25 per issue ($28 outside the U.S. and Canada). Please address all inquiries to Eric Hansen, AMRC, 288 UCB, University of Colorado, Boulder, CO 80309-0288. Email: [email protected] The American Music Research Center website address is www.amrccolorado.org ISBN 1058-3572 © 2012 by Board of Regents of the University of Colorado Information for Authors The American Music Research Center Journal is dedicated to publishing articles of general interest about American music, particularly in subject areas relevant to its collections. We welcome submission of articles and proposals from the scholarly community, ranging from 3,000 to 10,000 words (excluding notes). All articles should be addressed to Thomas L. Riis, College of Music, University of Colorado Boulder, 301 UCB, Boulder, CO 80309-0301. Each separate article should be submitted in two double-spaced, single-sided hard copies. -

Ferienkurse Für Internationale Neue Musik, 25.8.-29.9. 1946

Ferienkurse für internationale neue Musik, 25 August to 29 September 1946

Seminars by subject group:
Conducting: Carl Mathieu Lange
Composition: Wolfgang Fortner (main course), Hermann Heiß (supplementary course)
Chamber music: Fritz Straub (main course), Kurt Redel (supplementary course)
Piano: Georg Kuhlmann (also supplementary course in chamber music)
Voice: Elisabeth Delseit, Henny Wolff (supplementary course)
Violin: Günter Kehr
Opera directing: Bruno Heyn, Walter Jockisch
Music criticism: Fred Hamel

Joint events and lectures:
The second part of this overview comprises the events of the „Internationale zeitgenössische Musiktage“ (22 to 29 September), which were held at the close of the Ferienkurse by the City of Darmstadt in association with the Landestheater Darmstadt, the „Neue Darmstädter Sezession“ and Süddeutscher Rundfunk, Radio Frankfurt.

Columns: date; event title and programme; performers; place and time.

Sun., 25 Aug., Kst., 11.00: First Schloßhof Serenade
Address: Mayor Julius Reiber
Conrad Beck, Serenade for flute, clarinet and string orchestra (1935)
Wolfgang Fortner, Concerto for string orchestra (1933)
Performers: string orchestra of the Landestheater Darmstadt, conducted by Carl Mathieu Lange; soloists: Kurt Redel (fl.), Michael Mayer (cl.)

Sun., 25 Aug., Kst., 16.00: First Schloß Concert with new chamber music
Addresses: Minister of Education and Cultural Affairs F. Schramm, Lord Mayor Ludwig Metzger
Performers: teaching staff of the Ferienkurse
Paul Hindemith, Sonata for piano four hands (1938): Heinz Schröter, Georg Kuhlmann (pf.)
Hermann Heiß, Sonata for flute and piano (1944-45): Kurt Redel (fl.), Hermann Heiß (pf.)
Heinz Schröter, Altdeutsches Liederspiel, Part II, op. 4 nos. 4-6 (1936-37): Elisabeth Delseit (sop.), Heinz Schröter (pf.)
Wolfgang Fortner, Sonatina for piano (1934): Georg Kuhlmann (pf.)
Igor Stravinsky, Duo concertant for violin and piano (1931-32): Günter Kehr (vn.), Heinz Schröter (pf.)

Mon., 26 Aug., Kst., 16.00: Composers' Self-Portraits I: Helmut Degen
Mon., 26 Aug., Kst., 19.00: Introduction to the piano recital: Georg Kuhlmann
Tue., 27 Aug. -

Holmes Electronic and Experimental Music

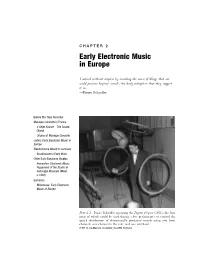

CHAPTER 2 Early Electronic Music in Europe

I noticed without surprise by recording the noise of things that one could perceive beyond sounds, the daily metaphors that they suggest to us. (Pierre Schaeffer)

Before the Tape Recorder
Musique Concrète in France
L’Objet Sonore: The Sound Object
Origins of Musique Concrète
Listen: Early Electronic Music in Europe
Elektronische Musik in Germany
Stockhausen’s Early Work
Other Early European Studios
Innovation: Electronic Music Equipment of the Studio di Fonologia Musicale (Milan, c.1960)
Summary
Milestones: Early Electronic Music of Europe

Plate 2.1 Pierre Schaeffer operating the Pupitre d’espace (1951), the four rings of which could be used during a live performance to control the spatial distribution of electronically produced sounds using two front channels: one channel in the rear, and one overhead. (1951 © Ina/Maurice Lecardent, Ina GRM Archives)

42 EARLY HISTORY – PREDECESSORS AND PIONEERS

A convergence of new technologies and a general cultural backlash against Old World arts and values made conditions favorable for the rise of electronic music in the years following World War II. Musical ideas that met with punishing repression and indifference prior to the war became less odious to a new generation of listeners who embraced futuristic advances of the atomic age. Prior to World War II, electronic music was anchored down by a reliance on live performance. Only a few composers, Varèse and Cage among them, anticipated the importance of the recording medium to the growth of electronic music. This chapter traces a technological transition from the turntable to the magnetic tape recorder as well as the transformation of electronic music from a medium of live performance to that of recorded media. -

Kreuzspiel, Louange À L'éternité De Jésus, and Mashups Three

Kreuzspiel, Louange à l’Éternité de Jésus, and Mashups: Three Analytical Essays on Music from the Twentieth and Twenty-First Centuries
Thomas Johnson
A thesis submitted in partial fulfillment of the requirements for the degree of Master of Arts
University of Washington 2013
Committee: Jonathan Bernard, Chair; Áine Heneghan
Program Authorized to Offer Degree: Music
©Copyright 2013 Thomas Johnson

Johnson, Kreuzspiel, Louange, and Mashups

TABLE OF CONTENTS
Chapter 1: Stockhausen’s Kreuzspiel and its Connection to his Oeuvre
Chapter 2: Harmonic Development and The Theme of Eternity In Messiaen’s Louange à l’Éternité de Jésus
Chapter 3: Meaning and Structure in Mashups
Appendix I: Mashups and Constituent Songs from the Text with Links
Appendix II: List of Ways Charles Ives Used Existing Musical Material
Appendix III: DJ Overdub’s “Five Step” with Constituent Samples
Bibliography

LIST OF EXAMPLES
EXAMPLE 1.1. Phase 1 pitched instruments
EXAMPLE 1.2. Phase 1 tom-toms
EXAMPLE 1.3. Registral rotation with linked pitches in measures 14-91
EXAMPLE 1.4. Tumbas part from measures 7-9, with duration values above
EXAMPLE 1.5. Phase 1 tumba series, measures 7-85
EXAMPLE 1.6. The serial treatment of the tom-toms in Phase 1
EXAMPLE 1.7. Phase two pitched mode
EXAMPLE 1.8. Phase two percussion mode
EXAMPLE 1.9. Pitched instruments section II
EXAMPLE 1.10. Segmental grouping in pitched instruments in section II
EXAMPLE 1.11. -

Christine Anderson: Franco Evangelisti »Ambasciatore Musicale« Tra Italia E Germania Schriftenreihe Analecta Musicologica

Christine Anderson: Franco Evangelisti »ambasciatore musicale« tra Italia e Germania [Franco Evangelisti, "musical ambassador" between Italy and Germany]
Series: Analecta musicologica. Publications of the Music History Department of the German Historical Institute in Rome, Volume 45 (2011). Published by the German Historical Institute in Rome.

Copyright: This digitised copy is made available to you by perspectivia.net, the online publication platform of the Max Weber Stiftung – Deutsche Geisteswissenschaftliche Institute im Ausland. Please note that the digitised copy is subject to the Creative Commons licence Attribution-NonCommercial-NoDerivatives (CC BY-NC-ND 4.0). Reading, printing the text, downloading, and saving the data on your own storage medium are nevertheless permitted, provided that these actions are carried out exclusively for private and non-commercial purposes. The text of the licence is available here: https://creativecommons.org/licenses/by-nc-nd/4.0/legalcode

Franco Evangelisti »ambasciatore musicale« tra Italia e Germania*
Christine Anderson

Indeed, the time is ripe to revise our conception of sound. Yet neither the serial works nor the aleatoric compositions seem to me to renew the world of sound sufficiently. Both are the last offshoots of tradition in search of a logical transition into new territory. Wanting to remain within the tradition does not, properly understood, mean renewing past events once again, but rather handing one's own contemporary events over to memory. That is why renewal means at once renouncing the familiar and setting out boldly into the wholly unfamiliar.1

Franco Evangelisti speaking in German: a rare audio document of the Roman composer, taken from a lecture entitled In der Kürze meiner Zeit (In the Brief Span of My Time). It is a recording made by German radio in Munich in 1963, and thus dates from the period in which Evangelisti was working on Die Schachtel, the music-theatre work that would long remain his last composition. -

The Musical Legacy of Karlheinz Stockhausen

Book Reviews 281

to the composer’s conception of the piece, since it is a “diachronic narrative” of Nativity events (ibid.). Conversely, Messiaen’s Vingt regards centers on the birth of the eternal God in time. His “regards” are synchronic in nature, a jubilant, “‘timeless’ succession of stills” (ibid.), rather than a linear unfolding of Nativity events. To grasp the compositional aesthetics of Vingt regards more thoroughly, Burton turns to the Catholic writer Columba Marmion, whose view of the Nativity is truly more Catholic than that of Toesca. But Burton notes how much Messiaen added to Marmion’s reading of the Nativity in the latter’s Le Christ dans ses mystères, while retaining his essential visual “gazes” (p. 95). Respecting Trois petites liturgies, Burton probes the depths of the inventive collage citation technique characterizing its texts, as Messiaen juxtaposes different images and phrases from the Bible, interspersed with his own images, to generate a metaphorical rainbow of Scriptural associations (p.

intended final chapter (“Conclusion: Between Surrealism and Scripture”), which is unfortunate because it would have been highly welcome, given this book’s fascinating viewpoints.

In spite of the high quality of its insights, this book contains unfortunate content and copy-editing errors. For example, in his discussion of Harawi (p. 182), Burton reverses the medieval color symbolism that Messiaen associated with violet in a 1967 conversation with Claude Samuel (Conversations with Olivier Messiaen, trans. Felix Aprahamian [London: Stainer and Bell, 1976], 20) by stating that reddish violet (purple) suggests the “Truth of Love” and bluish violet (hyacinth) the “Love of Truth.” Although Burton repeats an error in the source he cites (Audrey Ekdahl Davidson, Olivier Messiaen and the Tristan Myth [Westport, CT: Greenwood, 2001], 25), this state of affairs reflects Burton’s tendency to depend too much on secondary