SAVI Objects: Sharing and Virtuality Incorporated

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Virtual Memory

Chapter 4 Virtual Memory

Linux processes execute in a virtual environment that makes it appear as if each process had the entire address space of the CPU available to itself. This virtual address space extends from address 0 all the way to the maximum address. On a 32-bit platform, such as IA-32, the maximum address is 2^32 − 1 or 0xffffffff. On a 64-bit platform, such as IA-64, this is 2^64 − 1 or 0xffffffffffffffff.

While it is obviously convenient for a process to be able to access such a huge address space, there are really three distinct, but equally important, reasons for using virtual memory.

1. Resource virtualization. On a system with virtual memory, a process does not have to concern itself with the details of how much physical memory is available or which physical memory locations are already in use by some other process. In other words, virtual memory takes a limited physical resource (physical memory) and turns it into an infinite, or at least an abundant, resource (virtual memory).
2. Information isolation. Because each process runs in its own address space, it is not possible for one process to read data that belongs to another process. This improves security because it reduces the risk of one process being able to spy on another process and, e.g., steal a password.
3. Fault isolation. Processes with their own virtual address spaces cannot overwrite each other’s memory. This greatly reduces the risk of a failure in one process triggering a failure in another process. That is, when a process crashes, the problem is generally limited to that process alone and does not cause the entire machine to go down.

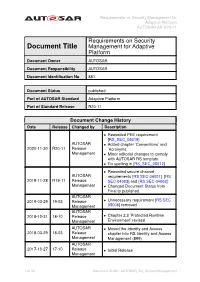

Requirements on Security Management for Adaptive Platform AUTOSAR AP R20-11

Requirements on Security Management for Adaptive Platform AUTOSAR AP R20-11

| Field | Value |
|---|---|
| Document Title | Requirements on Security Management for Adaptive Platform |
| Document Owner | AUTOSAR |
| Document Responsibility | AUTOSAR |
| Document Identification No | 881 |
| Document Status | published |
| Part of AUTOSAR Standard | Adaptive Platform |
| Part of Standard Release | R20-11 |

Document Change History

| Date | Release | Changed by | Description |
|---|---|---|---|
| 2020-11-30 | R20-11 | AUTOSAR Release Management | • Reworded PEE requirement [RS_SEC_05019] • Added chapter ’Conventions’ and ’Acronyms’ • Minor editorial changes to comply with AUTOSAR RS template • Fix spelling in [RS_SEC_05012] |
| 2019-11-28 | R19-11 | AUTOSAR Release Management | • Reworded secure channel requirements [RS SEC 04001], [RS SEC 04003] and [RS SEC 04003] • Changed Document Status from Final to published |
| 2019-03-29 | 19-03 | AUTOSAR Release Management | • Unnecessary requirement [RS SEC 05006] removed |
| 2018-10-31 | 18-10 | AUTOSAR Release Management | • Chapter 2.3 ’Protected Runtime Environment’ revised |
| 2018-03-29 | 18-03 | AUTOSAR Release Management | • Moved the Identity and Access chapter into RS Identity and Access Management (899) |
| 2017-10-27 | 17-10 | AUTOSAR Release Management | • Initial Release |

Document ID 881: AUTOSAR_RS_SecurityManagement

Disclaimer

This work (specification and/or software implementation) and the material contained in it, as released by AUTOSAR, is for the purpose of information only. AUTOSAR and the companies that have contributed to it shall not be liable for any use of the work. The material contained in this work is protected by copyright and other types of intellectual property rights. The commercial exploitation of the material contained in this work requires a license to such intellectual property rights.

Improving Virtual Function Call Target Prediction Via Dependence-Based Pre-Computation

Improving Virtual Function Call Target Prediction via Dependence-Based Pre-Computation

Amir Roth, Andreas Moshovos and Gurindar S. Sohi
Computer Sciences Department, University of Wisconsin-Madison, 1210 W Dayton Street, Madison, WI 53706
{amir, moshovos, sohi}@cs.wisc.edu

Abstract

We introduce dependence-based pre-computation as a complement to history-based target prediction schemes. We present pre-computation in the context of virtual function calls (v-calls), a class of control transfers that is becoming increasingly important and has resisted conventional prediction. Our proposed technique dynamically identifies the sequence of operations that computes a v-call’s target. When the first instruction in such a sequence is encountered, a small execution engine speculatively and aggressively pre-executes the rest. The pre-computed target is stored and subsequently used when a prediction needs to be made. We

In this initial work, we concentrate on pre-computing virtual function call (v-call) targets. The v-call mechanism enables code reuse by allowing a single static function call to transparently invoke one of multiple function implementations based on run-time type information. As shown in Table 1, v-calls are currently used in object-oriented programs with frequencies ranging from 1 in 200 to 1000 instructions (4 to 20 times fewer than direct calls and 30 to 150 times fewer than conditional branches). V-call importance will increase as object-oriented languages and methodologies gain popularity. For now, v-calls make an ideal illustration vehicle for pre-computation techniques since their targets are both difficult to predict and easy to pre-compute.
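To make the v-call mechanism concrete, here is a minimal C++ sketch of a single static call site whose target depends on run-time type information. The `Shape`, `Circle` and `Square` classes and the `describe` function are hypothetical names invented for this illustration; they do not come from the paper. The comment about vtable loads describes how common C++ implementations typically lower such a call, which is an assumption about the compiler rather than something the excerpt states.

```cpp
#include <memory>
#include <string>
#include <vector>

// Hypothetical hierarchy, used only to illustrate a virtual function call.
struct Shape {
    virtual ~Shape() = default;
    virtual std::string name() const { return "shape"; }
};

struct Circle : Shape {
    std::string name() const override { return "circle"; }
};

struct Square : Shape {
    std::string name() const override { return "square"; }
};

// One static call site: s.name(). Its target is chosen at run time from the
// dynamic type of s. Typical implementations (an assumption here) load the
// object's vtable pointer, load the slot for name(), then call indirectly;
// that short dependent chain is the kind of sequence that computes the target.
std::string describe(const Shape& s) {
    return s.name();
}

// Runs the same call site over objects of three different dynamic types.
std::vector<std::string> describeAll() {
    std::vector<std::unique_ptr<Shape>> shapes;
    shapes.push_back(std::make_unique<Circle>());
    shapes.push_back(std::make_unique<Square>());
    shapes.push_back(std::make_unique<Shape>());

    std::vector<std::string> names;
    for (const auto& s : shapes) {
        names.push_back(describe(*s));
    }
    return names;
}
```

Calling `describeAll()` exercises the one call site in `describe` with three different targets, which is why a purely history-based predictor can struggle when the receiver type changes from call to call.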

VAlloy – Virtual Functions Meet a Relational Language

VAlloy – Virtual Functions Meet a Relational Language

Darko Marinov and Sarfraz Khurshid
MIT Laboratory for Computer Science, 200 Technology Square, Cambridge, MA 02139 USA
{marinov,khurshid}@lcs.mit.edu

Abstract. We propose VAlloy, a veneer onto the first order, relational language Alloy. Alloy is suitable for modeling structural properties of object-oriented software. However, Alloy lacks support for dynamic dispatch, i.e., function invocation based on actual parameter types. VAlloy introduces virtual functions in Alloy, which enables intuitive modeling of inheritance. Models in VAlloy are automatically translated into Alloy and can be automatically checked using the existing Alloy Analyzer. We illustrate the use of VAlloy by modeling object equality, such as in Java. We also give specifications for a part of the Java Collections Framework.

1 Introduction

Object-oriented design and object-oriented programming have become predominant software methodologies. An essential feature of object-oriented languages is inheritance. It allows a (sub)class to inherit variables and methods from superclasses. Some languages, such as Java, only support single inheritance for classes. Subclasses can override some methods, changing the behavior inherited from superclasses. We use C++ term virtual functions to refer to methods that can be overridden. Virtual functions are dynamically dispatched: the actual function to invoke is selected based on the dynamic types of parameters. Java only supports single dynamic dispatch, i.e., the function is selected based only on the type of the receiver object.

Alloy [9] is a first order, declarative language based on relations. Alloy is suitable for specifying structural properties of software. Alloy specifications can be analyzed automatically using the Alloy Analyzer (AA) [8].
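Single dynamic dispatch, as described above for Java, can be sketched in C++, whose virtual functions are likewise dispatched on the receiver only. The `Base` and `Derived` classes and the `compare` helper below are hypothetical and written for this illustration; the returned strings simply report which function body ran. The example shows why object equality is a delicate case: the argument's dynamic type plays no part in selecting the function.

```cpp
#include <string>

// Hypothetical classes illustrating single dynamic dispatch.
struct Base {
    virtual ~Base() = default;
    virtual std::string equals(const Base&) const {
        return "Base::equals(Base)";
    }
};

struct Derived : Base {
    // Overrides the virtual function: selected by the receiver's dynamic type.
    std::string equals(const Base&) const override {
        return "Derived::equals(Base)";
    }
    // A separate overload, not an override: selected at compile time, and only
    // when the argument's static type is Derived.
    std::string equals(const Derived&) const {
        return "Derived::equals(Derived)";
    }
};

// Both parameters have static type Base, so overload resolution picks
// equals(const Base&); the receiver's dynamic type then picks the override.
std::string compare(const Base& receiver, const Base& argument) {
    return receiver.equals(argument);
}
```

With two `Derived` objects, `compare` runs `Derived::equals(Base)` rather than the more specific overload, because only the receiver is dispatched dynamically. Calling `equals` directly on variables declared as `Derived` selects the `Derived` overload at compile time instead.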

Inheritance & Polymorphism

6.088 Intro to C/C++
Day 5: Inheritance & Polymorphism
Eunsuk Kang & Jean Yang

In the last lecture...
• Objects: Characteristics & responsibilities
• Declaring and defining classes in C++
• Fields, methods, constructors, destructors
• Creating & deleting objects on stack/heap
• Representation invariant

Today’s topics
• Inheritance
• Polymorphism
• Abstract base classes

Inheritance

Types
A class defines a set of objects, or a type. [Diagram: people at MIT]

Types within a type
Some objects are distinct from others in some ways. [Diagram: MIT professors and MIT students within people at MIT]

Subtype
MIT professor and student are subtypes of MIT people. [Diagram: MIT professors and MIT students within people at MIT]

Type hierarchy
[Diagram: Student extends MIT Person; Professor extends MIT Person]

What characteristics/behaviors do people at MIT have in common?
• name, ID, address
• change address, display profile

What things are special about students?
• course number, classes taken, year
• add a class taken, change course

What things are special about professors?
• course number, classes taught, rank (assistant, etc.)
• add a class taught, promote

Inheritance
A subtype inherits characteristics and behaviors of its base type. e.g. Each MIT student has
• Characteristics: name, ID, address, course number, classes taken, year
• Behaviors: display profile, change address, add a class taken, change course

Base type: MITPerson
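The type hierarchy from these slides can be sketched in C++ roughly as follows. Only the name `MITPerson` and the listed characteristics and behaviors come from the slides; the member names, constructor signatures, field types and the format of the profile string are assumptions made for this illustration, as is the choice to make `displayProfile` virtual.

```cpp
#include <string>
#include <utility>
#include <vector>

// Base type: what all people at MIT have in common.
class MITPerson {
public:
    MITPerson(int id, std::string name, std::string address)
        : id_(id), name_(std::move(name)), address_(std::move(address)) {}
    virtual ~MITPerson() = default;

    void changeAddress(std::string newAddress) { address_ = std::move(newAddress); }

    // Virtual so that subtypes can extend the profile (assumed design choice).
    virtual std::string displayProfile() const {
        return name_ + " (ID " + std::to_string(id_) + "), " + address_;
    }

protected:
    int id_;
    std::string name_;
    std::string address_;
};

// Subtype: inherits name, ID, address and their behaviors, and adds its own.
class Student : public MITPerson {
public:
    Student(int id, std::string name, std::string address, int course, int year)
        : MITPerson(id, std::move(name), std::move(address)),
          course_(course), year_(year) {}

    void addClassTaken(std::string className) {
        classesTaken_.push_back(std::move(className));
    }
    void changeCourse(int newCourse) { course_ = newCourse; }

    std::string displayProfile() const override {
        return MITPerson::displayProfile() +
               ", course " + std::to_string(course_) +
               ", year " + std::to_string(year_) +
               ", " + std::to_string(classesTaken_.size()) + " classes taken";
    }

private:
    int course_;
    int year_;
    std::vector<std::string> classesTaken_;
};
```

A `Student` can be used wherever an `MITPerson` is expected: `changeAddress` is inherited unchanged, while a call to `displayProfile` through an `MITPerson` reference runs the `Student` version.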

CSE 307: Principles of Programming Languages Classes and Inheritance

OOP Introduction Type & Subtype Inheritance Overloading and Overriding

CSE 307: Principles of Programming Languages
Classes and Inheritance
R. Sekar

Topics
1. OOP Introduction
2. Type & Subtype
3. Inheritance
4. Overloading and Overriding

Section 1: OOP Introduction

OOP (Object Oriented Programming)
So far the languages that we encountered treat data and computation separately. In OOP, the data and computation are combined into an “object”.

Benefits of OOP
• more convenient: collects related information together, rather than distributing it. Example: C++ iostream class collects all I/O related operations together into one central place. Contrast with C I/O library, which consists of many distinct functions such as getchar, printf, scanf, sscanf, etc.
• centralizes and regulates access to data. If there is an error that corrupts object data, we need to look for the error only within its class. Contrast with C programs, where access/modification code is distributed throughout the program.

Benefits of OOP (Continued)
• Promotes reuse
  • by separating interface from implementation. We can replace the implementation of an object without changing client code. Contrast with C, where the implementation of a data structure such as a linked list is integrated into the client code.
  • by permitting extension of new objects via inheritance. Inheritance allows a new class to reuse the features of an existing class.
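The claim that an implementation can be replaced without changing client code can be illustrated with a small C++ sketch. The `IntStack` interface, the two implementations and the `sumAndDrain` client are hypothetical names chosen for this example and do not appear in the slides.

```cpp
#include <deque>
#include <vector>

// Hypothetical interface: client code depends only on these operations.
class IntStack {
public:
    virtual ~IntStack() = default;
    virtual void push(int value) = 0;
    virtual int pop() = 0;  // precondition: the stack is not empty
    virtual bool empty() const = 0;
};

// First implementation: top of the stack is the back of a vector.
class VectorStack : public IntStack {
public:
    void push(int value) override { data_.push_back(value); }
    int pop() override {
        int value = data_.back();
        data_.pop_back();
        return value;
    }
    bool empty() const override { return data_.empty(); }

private:
    std::vector<int> data_;
};

// Second implementation: top of the stack is the front of a deque.
class DequeStack : public IntStack {
public:
    void push(int value) override { data_.push_front(value); }
    int pop() override {
        int value = data_.front();
        data_.pop_front();
        return value;
    }
    bool empty() const override { return data_.empty(); }

private:
    std::deque<int> data_;
};

// Client code: written once against the interface, unaware of the storage.
int sumAndDrain(IntStack& stack) {
    int total = 0;
    while (!stack.empty()) {
        total += stack.pop();
    }
    return total;
}
```

Swapping `VectorStack` for `DequeStack` requires no change to `sumAndDrain`, which is the reuse benefit the slide describes.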

Virtual Memory - Paging

Virtual memory - Paging
Johan Montelius, KTH, 2020

The process
[Figure: memory layout for a 32-bit Linux process. Labels: code (.text), data, heap, stack, kernel; addresses 0x00000000, 0xC0000000, 0xffffffff]

Segments - a could-be solution
[Figure: processes in virtual space; address translation by MMU (base and bounds); physical memory]

One problem
External fragmentation: free areas of space that are hard to utilize. Solution: allocate larger segments ... internal fragmentation.

Another problem
We’re reserving physical memory that is not used. [Figure: virtual space with the used code region; physical memory, not used?]

Let’s try again
It’s easier to handle fixed size memory blocks. Can we map a process virtual space to a set of equal size blocks? An address is interpreted as a virtual page number (VPN) and an offset.

Remember the segmented MMU
[Figure: segmented MMU. Labels: virtual addr., index, offset, segment table, within bounds (yes/no), exception, physical address]

The paging MMU
[Figure: paging MMU. Labels: virtual addr., VPN, offset, page table, available?, exception, physical address]

The MMU
[Figure: virtual address, Segmentation (exception unless within bounds), linear address, Paging (exception unless page available), physical address]

A note on the x86 architecture
The x86-32 architecture supports both segmentation and paging. A virtual address is translated to a linear address using a segmentation table. The linear address is then translated to a physical address by paging. Linux and Windows do not use segmentation to separate code, data or stack. The x86-64 (the 64-bit version of the x86 architecture) has dropped many features for segmentation.
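Splitting an address into a virtual page number and an offset can be sketched as follows. This is a simplified model, not how real hardware stores its tables: it assumes 32-bit addresses, 4 KiB pages (12 offset bits) and a flat single-level table held in a hash map, and the names `PageTable` and `translate` are invented for the example. A missing mapping is modeled by an empty `std::optional`, standing in for the MMU exception shown in the slides. The sketch needs C++17.

```cpp
#include <cstdint>
#include <optional>
#include <unordered_map>

// Assumed page size: 4 KiB, so the low 12 bits of an address are the offset.
constexpr std::uint32_t kPageBits = 12;
constexpr std::uint32_t kOffsetMask = (1u << kPageBits) - 1;

// Simplified single-level page table: virtual page number -> physical frame number.
using PageTable = std::unordered_map<std::uint32_t, std::uint32_t>;

// Translates a virtual address, or returns std::nullopt when the page is not
// available (the case in which the MMU would raise an exception).
std::optional<std::uint32_t> translate(const PageTable& table, std::uint32_t vaddr) {
    const std::uint32_t vpn = vaddr >> kPageBits;     // upper 20 bits
    const std::uint32_t offset = vaddr & kOffsetMask; // lower 12 bits

    const auto entry = table.find(vpn);
    if (entry == table.end()) {
        return std::nullopt;
    }
    // The offset is carried over unchanged; only the page number is replaced.
    return (entry->second << kPageBits) | offset;
}
```

For example, with VPN 0x12345 mapped to frame 0x00042, the virtual address 0x12345678 translates to 0x00042678, while any address in an unmapped page yields no result.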

Behavioral Subtyping, Specification Inheritance, and Modular Reasoning

Computer Science Technical Reports, Computer Science, 9-3-2006

Behavioral Subtyping, Specification Inheritance, and Modular Reasoning
Gary T. Leavens, Iowa State University
David A. Naumann, Iowa State University

Recommended Citation: Leavens, Gary T. and Naumann, David A., "Behavioral Subtyping, Specification Inheritance, and Modular Reasoning" (2006). Computer Science Technical Reports. 269. http://lib.dr.iastate.edu/cs_techreports/269

Abstract

Behavioral subtyping is an established idea that enables modular reasoning about behavioral properties of object-oriented programs. It requires that syntactic subtypes are behavioral refinements. It validates reasoning about a dynamically-dispatched method call, say E.m(), using the specification associated with the static type of the receiver expression E. For languages with references and mutable objects the idea of behavioral subtyping has not been rigorously formalized as such, the standard informal notion has inadequacies, and exact definitions are not obvious. This paper formalizes behavioral subtyping and supertype abstraction for a Java-like sequential language with classes, interfaces, exceptions, mutable heap objects, references, and recursive types. Behavioral subtyping is proved sound and semantically complete for reasoning with supertype abstraction. Specification inheritance, as used in the specification language JML, is formalized and proved to entail behavioral subtyping.

Virtual Memory in X86

Fall 2017 :: CSE 306
Virtual Memory in x86
Nima Honarmand

x86 Processor Modes
• Real mode – walks and talks like a really old x86 chip
  • State at boot
  • 20-bit address space, direct physical memory access
  • 1 MB of usable memory
  • No paging
  • No user mode; processor has only one protection level
• Protected mode – Standard 32-bit x86 mode
  • Combination of segmentation and paging
  • Privilege levels (separate user and kernel)
  • 32-bit virtual address
  • 32-bit physical address
    • 36-bit if Physical Address Extension (PAE) feature enabled
• Long mode – 64-bit mode (aka amd64, x86_64, etc.)
  • Very similar to 32-bit mode (protected mode), but bigger address space
  • 48-bit virtual address space
  • 52-bit physical address space
  • Restricted segmentation use
• Even more obscure modes we won’t discuss today

xv6 uses protected mode w/o PAE (i.e., 32-bit virtual and physical addresses)

Virt. & Phys. Addr. Spaces in x86
• Both RAM and hardware devices (disk, NIC, etc.) connected to system bus
  • Mapped to different parts of the physical address space by the BIOS
• You can talk to a device by performing read/write operations on its physical addresses
  • Devices are free to interpret reads/writes in any way they want (driver knows)

[Figure labels: Processor, Core, Virtual Addr, MMU, Data, Physical Addr, Cache, System Interconnect (Bus), DRAM (Memory), Network Card, …, Disk. Legend: all addrs virtual; all addrs physical]

Virt-to-Phys Translation in x86
[Figure: 0xdeadbeef (Virtual Address), Segmentation, 0x0eadbeef (Linear Address), Paging, 0x6eadbeef (Physical Address). Note: Protected/Long mode only]
• Segmentation cannot be disabled!
  • But can be made a no-op (a.k.a.

Advances in Programming Languages Lecture 18: Concurrency and More in Rust

Advances in Programming Languages
Lecture 18: Concurrency and More in Rust
Ian Stark, School of Informatics, The University of Edinburgh
Friday 24 November 2016, Semester 1 Week 10
https://blog.inf.ed.ac.uk/apl16

Topic: Programming for Memory Safety
The final block of lectures cover features used in the Rust programming language.
• Introduction: Zero-Cost Abstractions (and their cost)
• Control of Memory: Ownership
• Concurrency and more
This section of the course is entirely new (Rust itself is not that old) and I apologise for any consequent lack of polish.

Ian Stark Advances in Programming Languages / Lecture 18: Concurrency and More in Rust 2016-11-24

The Rust Programming Language
The Rust language is intended as a tool for safe systems programming. Three key objectives contribute to this.
• Zero-cost abstractions
• Memory safety
• Safe concurrency
Basic References: https://www.rust-lang.org and https://blog.rust-lang.org
The “systems programming” motivation resonates with that for imperative C/C++. The “safe” part draws extensively on techniques developed for functional Haskell and OCaml. Sometimes these align more closely than you might expect, often by overlap between two aims:
• Precise control for the programmer;
• Precise information for the compiler.

Previous Homework: Watch This
Peter O’Hearn: Reasoning with Big Code
https://is.gd/reasoning_big_code
Talk at the Alan Turing Institute about how Facebook is using automatic verification at scale to check code and give feedback to programmers.

Guest Speaker!
Probabilistic Programming: What is it and how do we make it better?
Maria Gorinova, University of Edinburgh
3.10pm Tuesday 29 November 2016

Optional: Review and Exam Preparation!
The final lecture will be an exam review, principally for single-semester visiting students.

Virtual Memory

CSE 410: Systems Programming
Virtual Memory
Ethan Blanton, Department of Computer Science and Engineering, University at Buffalo

Introduction Address Spaces Paging Summary References

Virtual Memory
Virtual memory is a mechanism by which a system divorces the address space in programs from the physical layout of memory. Virtual addresses are locations in program address space. Physical addresses are locations in actual hardware RAM. With virtual memory, the two need not be equal.

© 2018 Ethan Blanton / CSE 410: Systems Programming

Process Layout
As previously discussed:
• Every process has unmapped memory near NULL
• Processes may have access to the entire address space
• Each process is denied access to the memory used by other processes
Some of these statements seem contradictory. Virtual memory is the mechanism by which this is accomplished. Every address in a process’s address space is a virtual address.

Physical Layout
The physical layout of hardware RAM may vary significantly from machine to machine or platform to platform.
• Sometimes certain locations are restricted
• Devices may appear in the memory address space
• Different amounts of RAM may be present
Historically, programs were aware of these restrictions. Today, virtual memory hides these details. The kernel must still be aware of physical layout.

The Memory Management Unit
The Memory Management Unit (MMU) translates addresses. It uses a per-process mapping structure to transform virtual addresses into physical addresses. The MMU is physical hardware between the CPU and the memory bus.

Developing Rust on Bare-Metal

RustyGecko - Developing Rust on Bare-Metal
An experimental embedded software platform

Håvard Wormdal Høiby
Sondre Lefsaker

Master of Science in Computer Science
Submission date: June 2015
Supervisor: Magnus Lie Hetland, IDI
Co-supervisor: Antonio Garcia Guirado, IDI; Marius Grannæs, Silicon Labs
Norwegian University of Science and Technology, Department of Computer and Information Science

Preface

This report is submitted to the Norwegian University of Science and Technology in fulfillment of the requirements for master thesis. This work has been performed at the Department of Computer and Information Science, NTNU, with Prof. Magnus Lie Hetland as the supervisor, Antonio Garcia Guirado (ARM), and Marius Grannæs (Silicon Labs) as co-supervisors. The initial problem description was our own proposal and further developed in co-operation with our supervisors.

Acknowledgements

Thanks to Magnus Lie Hetland, Antonio Garcia Guirado, and Marius Grannæs for directions and guidance on technical issues and this report. Thanks to Silicon Labs for providing us with development platforms for us to develop and test our implementation on. Thanks to Antonio Garcia Guirado for the implementation of the CircleGame application for us to port to Rust and use in our benchmarks. Thanks to Itera w/Tommy Ryen for office space. A special thanks to Leslie Ho and Siri Aagedal for all the support and for proofreading the thesis.

Sondre Lefsaker and Håvard Wormdal Høiby
2015-06-14

Project Description

The Rust programming language is a new system language developed by Mozilla. With the language being statically compiled and built on the LLVM compiler infrastructure, and because of features like low-level control and zero-cost abstractions, the language is a candidate for use in bare-metal systems.