Musical Use of a General and Expressive Plucked-String

Recommended publications

Synchronous Programming in Audio Processing Karim Barkati, Pierre Jouvelot

Karim Barkati, Pierre Jouvelot. Synchronous programming in audio processing. ACM Computing Surveys, Association for Computing Machinery, 2013, 46 (2), pp.24. 10.1145/2543581.2543591. hal-01540047. HAL Id: hal-01540047, https://hal-mines-paristech.archives-ouvertes.fr/hal-01540047, submitted on 15 Jun 2017. Synchronous Programming in Audio Processing: A Lookup Table Oscillator Case Study. KARIM BARKATI and PIERRE JOUVELOT, CRI, Mathématiques et systèmes, MINES ParisTech, France. The adequacy of a programming language to a given software project or application domain is often considered a key factor of success in software development and engineering, even though little theoretical or practical information is readily available to help make an informed decision. In this paper, we address a particular version of this issue by comparing the adequacy of general-purpose synchronous programming languages to more domain-specific -

ToscA: An OSC Communication Plugin for Object-Oriented Spatialization Authoring

ICMC 2015 – Sept. 25 - Oct. 1, 2015 – CEMI, University of North Texas. ToscA: An OSC Communication Plugin for Object-Oriented Spatialization Authoring. Thibaut Carpentier, UMR 9912 STMS IRCAM-CNRS-UPMC, 1, place Igor Stravinsky, 75004 Paris. [email protected] ABSTRACT The paper presents ToscA, a plugin that uses the OSC protocol to transmit automation parameters between a digital audio workstation and a remote application. A typical use case is the production of an object-oriented spatialized mix independently of the limitations of the host applications. 1. INTRODUCTION There is today a growing interest in sound spatialization. The movie industry seems to be shifting towards “3D” sound systems; mobile devices have radically changed our listening habits and multimedia broadcast companies are evolving accordingly, showing substantial interest in binaural techniques and interactive content; massively multichannel equipments and transmission protocols are available and they facilitate the installation of ambitious speaker setups in concert halls [1] or exhibition venues. [...] or consensus on the representation of spatialization metadata (in spite of several initiatives [11, 12]) complicates the interexchange between applications. This paper introduces ToscA, a plugin that allows to communicate automation parameters between a digital audio workstation and a remote application. The main use case is the production of massively multichannel object-oriented spatialized mixes. 2. WORKFLOW We propose a workflow where the spatialization rendering engine lies outside of the workstation (Figure 1). This architecture requires (bi-directional) communication between the DAW and the remote renderer, and both the audio streams and the automation data shall be conveyed. After being processed by the remote renderer, the spatialized signals could either feed the reproduction setup (loudspeakers or headphones), be -

Resonance and Resonators

Dept of Speech, Music and Hearing ACOUSTICS FOR VIOLIN AND GUITAR MAKERS Erik Jansson Chapter II: Resonance and Resonators Fourth edition 2002 http://www.speech.kth.se/music/acviguit4/part2.pdf Index of chapters Preface/Chapter I Sound and hearing Chapter II Resonance and resonators Chapter III Sound and the room Chapter IV Properties of the violin and guitar string Chapter V Vibration properties of the wood and tuning of violin plates Chapter VI The function, tone, and tonal quality of the guitar Chapter VII The function of the violin Chapter VIII The tone and tonal quality of the violin Chapter IX Sound examples and simple experimental material – under preparation Webpage: http://www.speech.kth.se/music/acviguit4/index.html ACOUSTICS FOR VIOLIN AND GUITAR MAKERS Chapter 2 – Fundamentals of Acoustics RESONANCE AND RESONATORS Part 1: RESONANCE 2.1. Resonance 2.2. Vibration sensitivity 2.3. The mechanical and acoustical measures of the resonator 2.4. Summary 2.5. Key words Part 2: RESONATORS 2.6. The hole-volume resonator 2.7. Complex resonators 2.8. Measurements of resonances in bars, plates and shells 2.9. Summary 2.10. Key words Chapter 2. FUNDAMENTALS OF ACOUSTICS - RESONANCE AND RESONATORS First part: RESONANCE INTRODUCTION In chapter 1, I presented the fundamental properties of sound and how these properties can be measured. Fundamental hearing sensations were connected to measurable sound properties. In this, the second chapter, the concept of RESONANCE and of RESONATORS will be introduced. Resonators are fundamental building blocks of sound generating systems such as the violin and the guitar. -

Proceedings of the Fourth International Csound Conference

Proceedings of the Fourth International Csound Conference. Edited by: Luis Jure [email protected]. Published by: Escuela Universitaria de Música, Universidad de la República, Av. 18 de Julio 1772, CP 11200 Montevideo, Uruguay. ISSN 2393-7580. © 2017 International Csound Conference. Conference Chairs: Luis Jure (Chair), Martín Rocamora (Co-Chair). Paper Review Committee: Øyvind Brandtsegg, Pablo Di Liscia, John ffitch, Michael Gogins, Joachim Heintz, Alex Hofmann, Tarmo Johannes, Victor Lazzarini, Iain McCurdy, Rory Walsh. Organization Team: Jimena Arruti, Pablo Cancela, Guillermo Carter, Guzmán Calzada, Ignacio Irigaray, Lucía Chamorro, Felipe Lamolle, Juan Martín López, Gustavo Sansone, Sofía Scheps. Music Review Committee: Pablo Cetta, Joel Chadabe, Ricardo Dal Farra, Pablo Di Liscia, Folkmar Hein, Joachim Heintz, Clara Maïda, Iain McCurdy, Flo Menezes, Daniel Oppenheim, Juan Pampin, Carmelo Saitta, Rodrigo Sigal, Clemens von Reusner. Sessions Chairs: Pablo Cancela, Pablo Di Liscia, Michael Gogins, Joachim Heintz, Luis Jure, Iain McCurdy, Martín Rocamora, Steven Yi. Music Curator: Luis Jure. Index: Preface. Keynote talks: The 60 years leading to Csound 6.09 (Victor Lazzarini); Don Quijote, the Island and the Golden Age (Joachim Heintz); The ATS technique in Csound: theoretical background, present state and prospective (Oscar Pablo Di Liscia); Csound – The Swiss Army Synthesiser (Iain McCurdy); How and Why I Use Csound Today (Steven Yi). Conference papers: Working with pch2csd – Clavia NM G2 to Csound Converter (Gleb Rogozinsky, Eugene Cherny and Michael Chesnokov); Daria: A New Framework for Composing, Rehearsing and Performing Mixed Media Music (Guillermo Senna and Juan Nava Aroza); Interactive Csound Coding with Emacs (Hlöðver Sigurðsson); Chunking: A new Approach to Algorithmic Composition of Rhythm and Metre for Csound (Georg Boenn); Interactive Visual Music with Csound and HTML5 (Michael Gogins); Spectral and 3D spatial granular synthesis in Csound (Oscar Pablo Di Liscia). Preface: The International Csound Conference (ICSC) is the principal biennial meeting for members of the Csound community and typically attracts worldwide attendance. -

Computer Music

THE OXFORD HANDBOOK OF COMPUTER MUSIC Edited by ROGER T. DEAN OXFORD UNIVERSITY PRESS Oxford University Press, Inc., publishes works that further Oxford University's objective of excellence in research, scholarship, and education. Oxford New York Auckland Cape Town Dar es Salaam Hong Kong Karachi Kuala Lumpur Madrid Melbourne Mexico City Nairobi New Delhi Shanghai Taipei Toronto With offices in Argentina Austria Brazil Chile Czech Republic France Greece Guatemala Hungary Italy Japan Poland Portugal Singapore South Korea Switzerland Thailand Turkey Ukraine Vietnam Copyright © 2009 by Oxford University Press, Inc. First published as an Oxford University Press paperback. Published by Oxford University Press, Inc. 198 Madison Avenue, New York, New York 10016 www.oup.com Oxford is a registered trademark of Oxford University Press All rights reserved. No part of this publication may be reproduced, stored in a retrieval system, or transmitted, in any form or by any means, electronic, mechanical, photocopying, recording, or otherwise, without the prior permission of Oxford University Press. Library of Congress Cataloging-in-Publication Data The Oxford handbook of computer music / edited by Roger T. Dean. p. cm. Includes bibliographical references and index. ISBN 978-0-19-979103-0 (alk. paper) 1. Computer music—History and criticism. I. Dean, R. T. Printed in the United States of America on acid-free paper CHAPTER 12 SENSOR-BASED MUSICAL INSTRUMENTS AND INTERACTIVE MUSIC ATAU TANAKA MUSICIANS, composers, and instrument builders have been fascinated by the expressive potential of electrical and electronic technologies since the advent of electricity itself. -

Implementing Stochastic Synthesis for SuperCollider and iPhone

Implementing stochastic synthesis for SuperCollider and iPhone Nick Collins Department of Informatics, University of Sussex, UK N [dot] Collins ]at[ sussex [dot] ac [dot] uk - http://www.cogs.susx.ac.uk/users/nc81/index.html Proceedings of the Xenakis International Symposium Southbank Centre, London, 1-3 April 2011 - www.gold.ac.uk/ccmc/xenakis-international-symposium This article reflects on Xenakis' contribution to sound synthesis, and explores practical tools for music making touched by his ideas on stochastic waveform generation. Implementations of the GENDYN algorithm for the SuperCollider audio programming language and in an iPhone app will be discussed. Some technical specifics will be reported without overburdening the exposition, including original directions in computer music research inspired by his ideas. The mass exposure of the iGendyn iPhone app in particular has provided a chance to reach a wider audience. Stochastic construction in music can apply at many timescales, and Xenakis was intrigued by the possibility of compositional unification through simultaneous engagement at multiple levels. In General Dynamic Stochastic Synthesis Xenakis found a potent way to extend stochastic music to the sample level in digital sound synthesis (Xenakis 1992, Serra 1993, Roads 1996, Hoffmann 2000, Harley 2004, Brown 2005, Luque 2006, Collins 2008, Luque 2009). In the central algorithm, samples are specified as a result of breakpoint interpolation synthesis (Roads 1996), where breakpoint positions in time and amplitude are subject to probabilistic perturbation. Random walks (up to second order) are followed with respect to various probability distributions for perturbation size. Figure 1 illustrates this for a single breakpoint; a full GENDYN implementation would allow a set of breakpoints, with each breakpoint in the set updated by individual perturbations each cycle. -

The Viability of the Web Browser As a Computer Music Platform

Lonce Wyse and Srikumar Subramanian, Communications and New Media Department, National University of Singapore, Blk AS6, #03-41, 11 Computing Drive, Singapore 117416. [email protected] [email protected] The Viability of the Web Browser as a Computer Music Platform. Abstract: The computer music community has historically pushed the boundaries of technologies for music-making, using and developing cutting-edge computing, communication, and interfaces in a wide variety of creative practices to meet exacting standards of quality. Several separate systems and protocols have been developed to serve this community, such as Max/MSP and Pd for synthesis and teaching, JackTrip for networked audio, MIDI/OSC for communication, as well as Max/MSP and TouchOSC for interface design, to name a few. With the still-nascent Web Audio API standard and related technologies, we are now, more than ever, seeing an increase in these capabilities and their integration in a single ubiquitous platform: the Web browser. In this article, we examine the suitability of the Web browser as a computer music platform in critical aspects of audio synthesis, timing, I/O, and communication. We focus on the new Web Audio API and situate it in the context of associated technologies to understand how well they together can be expected to meet the musical, computational, and development needs of the computer music community. We identify timing and extensibility as two key areas that still need work in order to meet those needs. To date, despite the work of a few intrepid musical explorers, the Web browser platform has not been widely considered as a viable platform for the development of computer music. Why would musicians care about working in the browser, a platform not specifically designed for computer music? Max/MSP is an example of a -

Interactive Csound Coding with Emacs

Interactive Csound coding with Emacs Hlöðver Sigurðsson Abstract. This paper will cover the features of the Emacs package csound-mode, a new major-mode for Csound coding. The package is for the most part a typical Emacs major mode where indentation rules, completions, docstrings and syntax highlighting are provided, with the extra feature of a REPL that is based on running a Csound instance through the csound-api. Similar to csound-repl.vim[1], csound-mode strives to give the Csound user a faster feedback loop by offering a REPL instance inside of the text editor, making the gap between development and the final output reachable within a real-time interaction. 1 Introduction After reading the changelog of Emacs 25.1[2] I discovered a new Emacs feature of dynamic modules, enabling the possibility of a Foreign Function Interface (FFI). Being inspired by Gogins’s recent Common Lisp FFI for the CsoundAPI[3], I decided to use this new feature and develop an FFI for Csound. I made the dynamic module which ports the greater part of the C-lang’s csound-api and wrote some of Steven Yi’s csound-api examples for Elisp, which can be found on the GitHub page for CsoundAPI-emacsLisp[4]. This sparked my idea of creating a new REPL based Csound major mode for Emacs. As a composer using Csound, I feel the need to be close to that which I’m composing at any given moment. From previous Csound front-end tools I’ve used, the time between writing a Csound statement and hearing its output has been for me a too long process of mouseclicking and/or changing windows. -

Expanding the Power of Csound with Integrated HTML and JavaScript

Michael Gogins. Expanding the Power of Csound with Integrated HTML and JavaScript EXPANDING THE POWER OF CSOUND WITH INTEGRATED HTML AND JAVASCRIPT Michael Gogins [email protected] https://michaelgogins.tumblr.com This paper presents recent developments integrating Csound [1] with HTML [2] and JavaScript [3, 4]. For those new to Csound, it is a “MUSIC N” style, user-programmable software sound synthesizer, one of the first yet still being extended, written mostly in the C language. No synthesizer is more powerful. Csound can now run in an interactive Web page, using all the capabilities of current Web browsers: custom widgets, 2- and 3-dimensional animated and interactive graphics canvases, video, data storage, WebSockets, Web Audio, mathematics typesetting, etc. See the whole list at HTML5 TEST [5]. Above all, the JavaScript programming language can be used to control Csound, extend its capabilities, generate scores, and more. JavaScript is the “glue” that binds together the components and capabilities of HTML5. JavaScript is a full-featured, dynamically typed language that supports functional programming and prototype-based object-oriented programming. In most browsers, the JavaScript virtual machine includes a just-in-time compiler that runs about 4 times slower than compiled C, very fast for a dynamic language. JavaScript has limitations. It is single-threaded, and in standard browsers, is not permitted to access the local file system outside the browser's sandbox. But most musical applications can use an embedded browser, which bypasses the sandbox and accesses the local file system. HTML Environments for Csound There are two approaches to integrating Csound with HTML and JavaScript. -

Musical Acoustics - Wikipedia, the Free Encyclopedia

Musical acoustics, from Wikipedia, the free encyclopedia. Musical acoustics or music acoustics is the branch of acoustics concerned with researching and describing the physics of music – how sounds employed as music work. Examples of areas of study are the function of musical instruments, the human voice (the physics of speech and singing), computer analysis of melody, and the clinical use of music in music therapy. Contents: 1 Methods and fields of study; 2 Physical aspects; 3 Subjective aspects; 4 Pitch ranges of musical instruments; 5 Harmonics, partials, and overtones; 6 Harmonics and non-linearities; 7 Harmony; 8 Scales; 9 See also; 10 External links. Methods and fields of study: Frequency range of music; Frequency analysis; Computer analysis of musical structure; Synthesis of musical sounds; Music cognition, based on physics (also known as psychoacoustics). Physical aspects: Whenever two different pitches are played at the same time, their sound waves interact with each other – the highs and lows in the air pressure reinforce each other to produce a different sound wave. As a result, any given sound wave which is more complicated than a sine wave can be modelled by many different sine waves of the appropriate frequencies and amplitudes (a frequency spectrum). In humans the hearing apparatus (composed of the ears and brain) can usually isolate these tones and hear them distinctly. When two or more tones are played at once, a variation of air pressure at the ear "contains" the pitches of each, and the ear and/or brain isolate and decode them into distinct tones. -

62 Years and Counting: MUSIC N and the Modular Revolution

62 Years and Counting: MUSIC N and the Modular Revolution By Brian Lindgren MUSC 7660X - History of Electronic and Computer Music Fall 2019 24 December 2019 © Copyright 2020 Brian Lindgren Abstract. MUSIC N by Max Mathews had two profound impacts in the world of music synthesis. The first was the implementation of modularity to ensure a flexibility as a tool for the user; with the introduction of the unit generator, the instrument and the compiler, composers had the building blocks to create an unlimited range of sounds. The second was the impact of this implementation in the modular analog synthesizers developed a few years later. While Jean-Claude Risset, a well known Mathews associate, asserts this, Mathews actually denies it. They both are correct in their perspectives. Introduction Over 76 years have passed since the invention of the first electronic general purpose computer, the ENIAC. Today, we carry computers in our pockets that can perform millions of times more calculations per second. With the amazing rate of change in computer technology, it's hard to imagine that any development of yesteryear could maintain a semblance of relevance today. However, in the world of music synthesis, the foundations that were laid six decades ago not only spawned a breadth of multifaceted innovation but continue to function as the bedrock of important digital applications used around the world today. Not only did a new modular approach implemented by its creator, Max Mathews, ensure that the MUSIC N lineage would continue to be useful in today’s world (in one of its descendants, Csound) but this approach also likely inspired the analog synthesizer engineers of the day, impacting their designs. -

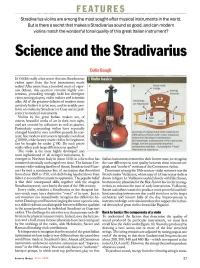

Science and the Stradivarius

FEATURES Stradivarius violins are among the most sought-after musical instruments in the world. But is there a secret that makes a Stradivarius sound so good, and can modern violins match the wonderful tonal quality of this great Italian instrument? Science and the Stradivarius Colin Gough Is there really a lost secret that sets Stradivarius violins apart from the best instruments made today? After more than a hundred years of vigorous debate, this question remains highly contentious, provoking strongly held but divergent views among players, violin makers and scientists alike. All of the greatest violinists of modern times certainly believe it to be true, and invariably perform on violins by Stradivari or Guarneri in preference to modern instruments. Violins by the great Italian makers are, of course, beautiful works of art in their own right, and are coveted by collectors as well as players. Particularly outstanding violins have reputedly changed hands for over a million pounds. In contrast, fine modern instruments typically cost about £10 000, while factory-made violins for beginners can be bought for under £100. Do such prices really reflect such large differences in quality? The violin is the most highly developed and most sophisticated of all stringed instruments. [Figure 1: Violin basics. (a) A copy of a Guarnerius violin made by the 19th-century French violin maker Vuillaume, shown from the player's perspective. (b) A schematic cross-section of the violin at the bridge, with the acoustically important components labelled - including the "f-hole" Helmholtz air resonance. Labels: bowing direction; force rocks bridge.]