Expanding the Power of Csound with Integrated HTML and JavaScript

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Synchronous Programming in Audio Processing Karim Barkati, Pierre Jouvelot

Synchronous programming in audio processing. Karim Barkati, Pierre Jouvelot. To cite this version: Karim Barkati, Pierre Jouvelot. Synchronous programming in audio processing. ACM Computing Surveys, Association for Computing Machinery, 2013, 46 (2), pp.24. 10.1145/2543581.2543591. hal-01540047. HAL Id: hal-01540047 https://hal-mines-paristech.archives-ouvertes.fr/hal-01540047 Submitted on 15 Jun 2017. HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers. Synchronous Programming in Audio Processing: A Lookup Table Oscillator Case Study. KARIM BARKATI and PIERRE JOUVELOT, CRI, Mathématiques et systèmes, MINES ParisTech, France. The adequacy of a programming language to a given software project or application domain is often considered a key factor of success in software development and engineering, even though little theoretical or practical information is readily available to help make an informed decision. In this paper, we address a particular version of this issue by comparing the adequacy of general-purpose synchronous programming languages to more domain-specific -

Proceedings of the Fourth International Csound Conference

Proceedings of the Fourth International Csound Conference. Edited by: Luis Jure [email protected] Published by: Escuela Universitaria de Música, Universidad de la República, Av. 18 de Julio 1772, CP 11200 Montevideo, Uruguay. ISSN 2393-7580. © 2017 International Csound Conference.

Conference Chairs: Luis Jure (Chair), Martín Rocamora (Co-Chair).

Organization Team: Jimena Arruti, Pablo Cancela, Guillermo Carter, Guzmán Calzada, Ignacio Irigaray, Lucía Chamorro, Felipe Lamolle, Juan Martín López, Gustavo Sansone, Sofía Scheps.

Sessions Chairs: Pablo Cancela, Pablo Di Liscia, Michael Gogins, Joachim Heintz, Luis Jure, Iain McCurdy, Martín Rocamora, Steven Yi.

Music Curator: Luis Jure.

Paper Review Committee: Øyvind Brandtsegg, Pablo Di Liscia, John ffitch, Michael Gogins, Joachim Heintz, Alex Hofmann, Tarmo Johannes, Victor Lazzarini, Iain McCurdy, Rory Walsh.

Music Review Committee: Pablo Cetta, Joel Chadabe, Ricardo Dal Farra, Pablo Di Liscia, Folkmar Hein, Joachim Heintz, Clara Maïda, Iain McCurdy, Flo Menezes, Daniel Oppenheim, Juan Pampin, Carmelo Saitta, Rodrigo Sigal, Clemens von Reusner.

Index: Preface. Keynote talks: The 60 years leading to Csound 6.09 (Victor Lazzarini); Don Quijote, the Island and the Golden Age (Joachim Heintz); The ATS technique in Csound: theoretical background, present state and prospective (Oscar Pablo Di Liscia); Csound – The Swiss Army Synthesiser (Iain McCurdy); How and Why I Use Csound Today (Steven Yi). Conference papers: Working with pch2csd – Clavia NM G2 to Csound Converter (Gleb Rogozinsky, Eugene Cherny and Michael Chesnokov); Daria: A New Framework for Composing, Rehearsing and Performing Mixed Media Music (Guillermo Senna and Juan Nava Aroza); Interactive Csound Coding with Emacs (Hlöðver Sigurðsson); Chunking: A new Approach to Algorithmic Composition of Rhythm and Metre for Csound (Georg Boenn); Interactive Visual Music with Csound and HTML5 (Michael Gogins); Spectral and 3D spatial granular synthesis in Csound (Oscar Pablo Di Liscia).

Preface: The International Csound Conference (ICSC) is the principal biennial meeting for members of the Csound community and typically attracts worldwide attendance. -

Computer Music

THE OXFORD HANDBOOK OF COMPUTER MUSIC Edited by ROGER T. DEAN OXFORD UNIVERSITY PRESS OXFORD UNIVERSITY PRESS Oxford University Press, Inc., publishes works that further Oxford University's objective of excellence in research, scholarship, and education. Oxford New York Auckland Cape Town Dar es Salaam Hong Kong Karachi Kuala Lumpur Madrid Melbourne Mexico City Nairobi New Delhi Shanghai Taipei Toronto With offices in Argentina Austria Brazil Chile Czech Republic France Greece Guatemala Hungary Italy Japan Poland Portugal Singapore South Korea Switzerland Thailand Turkey Ukraine Vietnam Copyright © 2009 by Oxford University Press, Inc. First published as an Oxford University Press paperback ion Published by Oxford University Press, Inc. 198 Madison Avenue, New York, New York 10016 www.oup.com Oxford is a registered trademark of Oxford University Press All rights reserved. No part of this publication may be reproduced, stored in a retrieval system, or transmitted, in any form or by any means, electronic, mechanical, photocopying, recording, or otherwise, without the prior permission of Oxford University Press. Library of Congress Cataloging-in-Publication Data The Oxford handbook of computer music / edited by Roger T. Dean. p. cm. Includes bibliographical references and index. ISBN 978-0-19-979103-0 (alk. paper) 1. Computer music--History and criticism. I. Dean, R. T. MI T 1.80.09 1009 i 1008046594 789.99 OXF tin Printed in the United States of America on acid-free paper CHAPTER 12 SENSOR-BASED MUSICAL INSTRUMENTS AND INTERACTIVE MUSIC ATAU TANAKA MUSICIANS, composers, and instrument builders have been fascinated by the expressive potential of electrical and electronic technologies since the advent of electricity itself. -

Interactive Csound Coding with Emacs

Interactive Csound coding with Emacs. Hlöðver Sigurðsson. Abstract. This paper covers the features of the Emacs package csound-mode, a new major mode for Csound coding. The package is for the most part a typical Emacs major mode, providing indentation rules, completions, docstrings and syntax highlighting, with the extra feature of a REPL that is based on running a Csound instance through the csound-api. Similar to csound-repl.vim[1], csound-mode strives to give the Csound user a faster feedback loop by offering a REPL instance inside the text editor, bringing the gap between development and the final output within reach of real-time interaction. 1 Introduction. After reading the changelog of Emacs 25.1[2] I discovered a new Emacs feature, dynamic modules, which enables a Foreign Function Interface (FFI). Inspired by Gogins's recent Common Lisp FFI for the CsoundAPI[3], I decided to use this new feature and develop an FFI for Csound. I made the dynamic module, which ports the greater part of the C language's csound-api, and wrote some of Steven Yi's csound-api examples in Elisp, which can be found on the GitHub page for CsoundAPI-emacsLisp[4]. This sparked my idea of creating a new REPL-based Csound major mode for Emacs. As a composer using Csound, I feel the need to be close to that which I'm composing at any given moment. With the previous Csound front-end tools I've used, the time between writing a Csound statement and hearing its output has been, for me, too long a process of mouse-clicking and/or changing windows. -

DVD Program Notes

DVD Program Notes. Part One: Thor Magnusson, Alex McLean, Nick Collins, Curators. Curators' Note [Editor's note: The curators attempted to write their Note in a collaborative, improvisatory fashion reminiscent of live coding, and have left the document open for further interaction from readers. See the following URL: https://docs.google.com/document/d/ 1ESzQyd9vdBuKgzdukFNhfAAnGEg LPgLlCe Mw8zf1Uw/edit?hl=en GB &authkey=CM7zg90L&pli=1.] Click Nilson is a Swedish avant garde codisician and code-jockey. He has explored the live coding of human performers since such early self-modifying algorithmic text pieces as An Instructional Game for One to Many Musicians (1975). He is now actively involved with Testing the Oxymoronic Potency of Language Articulation Programmes (TOPLAP), after being in the right bar (in Hamburg) at the right time (2 AM, 15 February 2004). He previously curated for Leonardo Music Journal and the Swedish Journal of Berlin Hot Drink Outlets. Alex McLean is a researcher in the area of programming languages for the arts, writing his PhD within the Intelligent Sound and Music Systems group at Goldsmiths College, and also working within the OAK group, University of Sheffield. He is one-third of the live-coding ambient-gabba-skiffle band Slub, who have been making [...] Figure 1. Sam Aaron. 1. Overtone (Sam Aaron). In this video Sam gives a fast-paced introduction to a number of key live-programming techniques such as triggering instruments, scheduling future events, and synthesizer design. [...] more effectively and efficiently. He has successfully applied these ideas and techniques in both industry and academia. Currently, Sam leads Improcess, a collaborative -

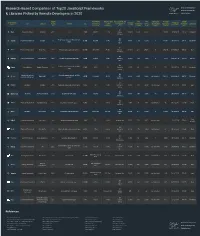

Research-Based Comparison of Top20 JavaScript Frameworks & Libraries Picked by Remote Developers in 2020

Research-Based Comparison of Top20 JavaScript Frameworks & Libraries Picked by Remote Developers in 2020

| JS Framework/Library | Type | Founded by | Original Release Year | Ideal for | Size | # of Websites Powered by a Framework | # of Devs Used It (2019, Worldwide) | Top 3 Countries with the Highest % of Usage | # of Stars on GitHub | # of Contributors on GitHub | # of Forks on GitHub | Ranking on Stack Overflow (2019) | # of Open Vacancies on LinkedIn (USA) | Average Dev Salary (USA) | Average Hourly Rate | Learnability |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| React | JavaScript library | Facebook | 2011 | UI | 133KB | 380,164 | 71.7% | US, Russia, Germany | 146,000 | 1,373 | 28,300 | 1 | 130,300 | $91,000.00 | $35.19 | Moderate |
| Angular | Front-end framework | Google | 2016 | Single-page apps and dynamic web apps | 566KB | 706,489 | 21.9% | US, UK, Brazil | 59,000 | 1,104 | 16,300 | 2 | 94,282 | $84,000.00 | $39.14 | Moderate |
| Vue.js | Front-end framework | Evan You | 2014 | UI and single-page applications | 58.8KB | 241,615 | 40.5% | US, Russia, Germany | 161,000 | 291 | 24,300 | 5 | 27,395 | $116,562.00 | $42.08 | Easy |
| Ember.js | Front-end framework | Yehuda Katz | 2011 | Scalable single-page web apps | 435KB | 20,985 | 3.6% | US, Germany, UK | 21,400 | 782 | 4,200 | 8 | 3,533 | $105,315.00 | $51.00 | Difficult |
| Meteor | App platform | Meteor Software | 2012 | Scalable web, mobile and desktop apps | 4.2MB | 8,674 | 4% | US, Germany, France | 41,700 | 426 | 5,100 | 7 | 256 | $96,687.00 | $27.92 | Moderate |
| Node.js | JavaScript runtime (server) environment | Ryan Dahl | 2009 | Network programs, such as Web servers | 141KB | 1,610,630 | 49.9% | US, UK, Germany | 69,000 | 2,676 | 16,600 | not included | 52,919 | $104,964.00 | $33.78 | Moderate |

Polymer: JS library; Google; 2015; Web apps using web components -

Readme for 3rd Party Software FIN Framework

Smart Infrastructure Note to Resellers: Please pass on this document to your customer to avoid license breach and copyright infringements. Third-Party Software Information for FIN Framework 5.0.7 J2 Innovations Inc. is a Siemens company and is referenced herein as SIEMENS. This product, solution or service ("Product") contains third-party software components listed in this document. These components are Open Source Software licensed under a license approved by the Open Source Initiative (www.opensource.org) or similar licenses as determined by SIEMENS ("OSS") and/or commercial or freeware software components. With respect to the OSS components, the applicable OSS license conditions prevail over any other terms and conditions covering the Product. The OSS portions of this Product are provided royalty-free and can be used at no charge. If SIEMENS has combined or linked certain components of the Product with/to OSS components licensed under the GNU LGPL version 2 or later as per the definition of the applicable license, and if use of the corresponding object file is not unrestricted ("LGPL Licensed Module", whereas the LGPL Licensed Module and the components that the LGPL Licensed Module is combined with or linked to is the "Combined Product"), the following additional rights apply, if the relevant LGPL license criteria are met: (i) you are entitled to modify the Combined Product for your own use, including but not limited to the right to modify the Combined Product to relink modified versions of the LGPL Licensed Module, and (ii) you may reverse-engineer the Combined Product, but only to debug your modifications. -

Secrets of the JavaScript Ninja

Secrets of the JavaScript Ninja. JOHN RESIG, BEAR BIBEAULT. MANNING, SHELTER ISLAND. For online information and ordering of this and other Manning books, please visit www.manning.com. The publisher offers discounts on this book when ordered in quantity. For more information, please contact Special Sales Department, Manning Publications Co., 20 Baldwin Road, PO Box 261, Shelter Island, NY 11964. Email: [email protected] ©2013 by Manning Publications Co. All rights reserved. No part of this publication may be reproduced, stored in a retrieval system, or transmitted, in any form or by means electronic, mechanical, photocopying, or otherwise, without prior written permission of the publisher. Many of the designations used by manufacturers and sellers to distinguish their products are claimed as trademarks. Where those designations appear in the book, and Manning Publications was aware of a trademark claim, the designations have been printed in initial caps or all caps. Recognizing the importance of preserving what has been written, it is Manning’s policy to have the books we publish printed on acid-free paper, and we exert our best efforts to that end. Recognizing also our responsibility to conserve the resources of our planet, Manning books are printed on paper that is at least 15 percent recycled and processed without the use of elemental chlorine. Manning Publications Co., 20 Baldwin Road, PO Box 261, Shelter Island, NY 11964. Development editors: Jeff Bleiel, Sebastian Stirling. Technical editor: Valentin Crettaz. Copyeditor: Andy Carroll. Proofreader: Melody Dolab. Typesetter: Dennis Dalinnik. Cover designer: Leslie Haimes. ISBN: 978-1-933988-69-6. Printed in the United States of America 1 2 3 4 5 6 7 8 9 10 – MAL – 18 17 16 15 14 13 12 brief contents PART 1 PREPARING FOR TRAINING. -

Algorithmic Composition with Open Music and Csound: Two Examples

Algorithmic Composition with Open Music and Csound: two examples. Fabio De Sanctis De Benedictis, ISSM "P. Mascagni", Leghorn (Italy) [email protected] Abstract. In this paper, after a concise and non-exhaustive review of GUI software related to Csound, and brief notes about Algorithmic Composition, two examples of Open Music patches will be illustrated, taken from the pre-compositional work for some of the author's compositions. These patches are used for sound generation and spatialization with Csound as the synthesis engine. Very specific and thorough Csound programming examples will not be discussed here, although automatically generated .csd file examples will be shown, nor will it be possible to explain the Open Music patches in detail; however, we believe that what is described can stimulate the reader towards further study. Keywords: Csound, Algorithmic Composition, Open Music, Electronic Music, Sound Spatialization, Music Composition. 1 Introduction. As is well known, "Csound is a sound and music computing system" ([1], p. 35), founded on a text file containing synthesis instructions and the score, which the software reads and transforms into sound. By its very nature Csound can be supported by other software that facilitates the writing of code, or that, provided with a graphic interface, can use Csound itself as a synthesis engine. Dave Phillips has mentioned several in his book ([2]): hYdraJ by Malte Steiner; Cecilia by Jean Piche and Alexander Burton; Silence by Michael Gogins; the family of HPK software, by Didiel Debril and Jean-Pierre Lemoine. The book by Bianchini and Cipriani encloses Windows software for interfacing Csound, and various utilities ([6]). -

WELLE - a Web-Based Music Environment for the Blind

WELLE - a web-based music environment for the blind. Jens Vetter, Tangible Music Lab, University of Art and Design Linz, Austria [email protected] ABSTRACT This paper presents WELLE, a web-based music environment for blind people, and describes its development, design, notation syntax and first experiences. WELLE is intended to serve as a collaborative, performative and educational tool to quickly create and record musical ideas. It is pattern-oriented, based on textual notation and focuses on accessibility, playful interaction and ease of use. WELLE was developed as part of the research project Tangible Signals and will also serve as a platform for the integration of upcoming new interfaces. Author Keywords: Musical Interfaces, Accessibility, Livecoding, Web-based In- [...] To improve the process of music production on the computer for the blind, several attempts have been made in the past focusing on assistive external hardware, such as the Haptic Wave [14], the HaptEQ [6] or the Moose [5]. All these external interfaces, however, are based on the integration into a music production environment. But only very few audio software platforms support screen readers like JAWS. Amongst them is the cross-platform audio software Audacity. Yet in terms of music production, Audacity is only suitable for editing single audio files, but not for more complex editing of multiple audio tracks or even for composing computer music. DAWs (Digital Audio Workstations) like Sonar or Reaper allow more complex audio processing and also offer screen reader integration, but using them with the screen reader requires extensive training. -

John Resig About Me

Coder Day of Service John Resig About Me • Lots of Open Source work • jQuery, Processing.js, other projects! • Worked at Mozilla (Non-profit, Open Source) • Working at Khan Academy (Non-profit, free content, some Open Source) jQuery Beginnings • Library for making JavaScript easier to write. • Created it in 2005 • While I was in college! • I hated cross-browser inconsistencies • Also really disliked the DOM jQuery’s Growth • Released in 2006 • Slowly grew for many years • Started to explode in 2009 • Drupal, Microsoft, Wordpress, Adobe all use jQuery • Currently used by 70% of the top 10,000 web sites on the web. Full-time • From 2009 to 2011 got funding from Mozilla (my employer) • Worked on jQuery full-time! • Built up the jQuery Foundation (non-profit) Letting Go • Decided to step down to focus on building applications • Moved to Khan Academy! • jQuery Foundation runs everything now • They do a really good job :) Open Source/Open Data Tips Don’t try to be perfect. Non-code things are just as important as code. Your community will mimic you. Benefits of Open Source/Open Data You use Open Source, why not give back? People will do projects you don’t have time for. You’ll attract interesting developers. ...which can lead to hiring good developers! Khan Academy Stats • 3 million problems practiced per day • 300 million lessons delivered • 100,000 educators around the world • 10 million students per month Khan API • Access to all data and user info • (with proper authentication) • You can access: • Videos + video data • All nicely organized • Exercise data • Student logs Data Analysis iPhone Application Exercises Exercises Exercise Racer Museums and Libraries Museums and Libraries • Cultural institutions • (Typically) Free for all • (Typically) Non-profits • They can use our help! Ukiyo-e.org Helping Public Institutions • Brooklyn Museum • New York Public Library • Digital Public Library of America • ...and many more! • All have public, free, open APIs! -

Secrets of the JavaScript Ninja.pdf

MEAP Edition Manning Early Access Program Copyright 2008 Manning Publications For more information on this and other Manning titles go to www.manning.com Contents Preface Part 1 JavaScript language Chapter 1 Introduction Chapter 2 Functions Chapter 3 Closures Chapter 4 Timers Chapter 5 Function prototype Chapter 6 RegExp Chapter 7 with(){} Chapter 8 eval Part 2 Cross-browser code Chapter 9 Strategies for cross-browser code Chapter 10 CSS Selector Engine Chapter 11 DOM modification Chapter 12 Get/Set attributes Chapter 13 Get/Set CSS Chapter 14 Events Chapter 15 Animations Part 3 Best practices Chapter 16 Unit testing Chapter 17 Performance analysis Chapter 18 Validation Chapter 19 Debugging Chapter 20 Distribution 1 Introduction Authorgroup John Resig Legalnotice Copyright 2008 Manning Publications Introduction In this chapter: Overview of the purpose and structure of the book; Overview of the libraries of focus; Explanation of advanced JavaScript programming; Theory behind cross-browser code authoring; Examples of test suite usage. There is nothing simple about creating effective, cross-browser JavaScript code. In addition to the normal challenge of writing clean code, you have the added complexity of dealing with obtuse browser complexities. To counteract this, JavaScript developers frequently construct some set of common, reusable functionality in the form of a JavaScript library. These libraries frequently vary in content and complexity, but one constant remains: they need to be easy to use, be constructed with the least amount of overhead, and be able to work in all browsers that you target. It stands to reason, then, that understanding how the very best JavaScript libraries are constructed and maintained can provide great insight into how your own code can be constructed.