Two Cheers for Corporate Experimentation: the A/B Illusion and the Virtues of Data-Driven Innovation

Total pages: 16

File type: PDF, Size: 1020 KB


Recommended publications

-

Show Code: #11-35 Show Date: Weekend of August 27-28, 2011 Disc One/Hour One Opening Billboard: None Seg

Show Code: #11-35 Show Date: Weekend of August 27-28, 2011 Disc One/Hour One Opening Billboard: None Seg. 1 Content: #40 “HELLO” – Martin Solveig & Dragonette #39 “EVERY TEARDROP IS A WATERFALL” – Coldplay #38 “TONIGHT” – Enrique Iglesias f/Ludacris Commercials: :60 Proactiv :30 NHTSA :30 Relativity Medi Outcue: “...under 13. Starts Friday.” Segment Time: 14:48 Local Break 2:00 Seg. 2 Content: #37 “SMILE” – Avril Lavigne #36 “DYNAMITE” – Taio Cruz #35 “FORGET YOU” – Cee Lo Green Extra: “MOTIVATION” – Kelly Rowland f/Lil Wayne Commercials: :30 Subway/Fresh Bu :30 Lowe's/Pre-Labo :60 California Psyc Outcue: “...psychics dot com.” Segment Time: 18:07 Local Break 2:00 Seg. 3 Content: #34 “FOR THE FIRST TIME” – The Script #33 “PRETTY GIRLS” – Iyaz f/Travie McCoy #32 “BLOW” – Ke$ha #31 “S&M” – Rihanna Commercials: :30 Kleenex :30 Autotrader.com Outcue: “...car market place.” Segment Time: 18:00 Local Break 1:00 Seg. 4 ***This is an optional cut - Stations can opt to drop song for local inventory*** Content: AT40 Extra: “DIRTY LITTLE SECRET” – The All-American Rejects Outcue: “…forward to that.” (sfx) Segment Time: 3:15 Hour 1 Total Time: 59:10 END OF DISC ONE Show Code: #11-35 Show Date: Weekend of August 27-28, 2011 Disc Two/Hour Two Opening Billboard Autotrader Seg. 1 Content: #30 “ON THE FLOOR” – Jennifer Lopez f/Pitbull #29 “DON'T STOP THE PARTY” – The Black Eyed Peas #28 “CHEERS (DRINK TO THAT)” – Rihanna Commercials: :30 Subway/Fresh Bu :30 Relativity Medi :60 Proactiv Outcue: “...1-800-360-9200.” Segment Time: 14:42 Local Break 2:00 Seg. -

Section 10: Outdoor Action Song Guide

Section 10: Outdoor Action Song Guide Songs, just like games, can be a useful and fun addition to a trip. Whether used to get the group warmed up on a chilly morning or to lighten the mood on the trail, songs should be a part of your leader bag of tricks. Most of these songs represent relatively low interpersonal risk except for songs that single out individuals such as Little Sally Walker. As for any activity, use your leader radar to determine when the individual and group dynamics are appropriate for a particular song. Enjoy! 10-1 Old Nassau Tune every heart and every voice, bid every care withdraw; Let all with one accord rejoice, in praise of Old Nassau. In praise of Old Nassau we sing, Hurrah! hurrah! hurrah! Our hearts will give while we shall live, three cheers for Old Nassau. 10-2 10-3 10-4 10-5 10-6 10-7 10-8 Go Bananas CHORUS (With Actions) LEADER: Bananas of the world…UNITE! Thumbs up! Elbows back! LEADER: Bananas…SPLIT! Thumbs up! Elbows back! Knees together! Toes together ALL: Peel bananas, peel peel bananas Butt out Slice bananas… Head out Mash bananas… Spinning round Dice bananas… Tongue out Whip bananas… Jump bananas… GO BANANAS… The Jellyfish (Jellyfish should be yelled in silly voice to yield its full comedic effect.) CHORUS Penguin Song ALL: The jellyfish, the jellyfish, the jellyfish (while (With Actions) moving like a jellyfish). CHORUS ALL: Have you ever seen a penguin come to tea? LEADER: Feet together!! Take a look at me, a penguin you will see ALL: Feet together!! Penguins attention! Penguins begin! (Salute here) -

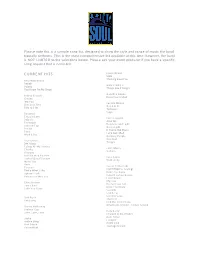

Bad Habit Song List

BAD HABIT SONG LIST Artist Song 4 Non Blondes Whats Up Alanis Morissette You Oughta Know Alanis Morissette Crazy Alanis Morissette You Learn Alanis Morissette Uninvited Alanis Morissette Thank You Alanis Morissette Ironic Alanis Morissette Hand In My Pocket Alice Merton No Roots Billie Eilish Bad Guy Bobby Brown My Prerogative Britney Spears Baby One More Time Bruno Mars Uptown Funk Bruno Mars 24K Magic Bruno Mars Treasure Bruno Mars Locked Out of Heaven Chris Stapleton Tennessee Whiskey Christina Aguilera Fighter Corey Hart Sunglasses at Night Cyndi Lauper Time After Time David Guetta Titanium Deee-Lite Groove Is In The Heart Dishwalla Counting Blue Cars DNCE Cake By the Ocean Dua Lipa One Kiss Dua Lipa New Rules Dua Lipa Break My Heart Ed Sheeran Blow BAD HABIT SONG LIST Artist Song Elle King Ex’s & Oh’s En Vogue Free Your Mind Eurythmics Sweet Dreams Fall Out Boy Beat It George Michael Faith Guns N’ Roses Sweet Child O’ Mine Hailee Steinfeld Starving Halsey Graveyard Imagine Dragons Whatever It Takes Janet Jackson Rhythm Nation Jessie J Price Tag Jet Are You Gonna Be My Girl Jewel Who Will Save Your Soul Jo Dee Messina Heads Carolina, Tails California Jonas Brothers Sucker Journey Separate Ways Justin Timberlake Can’t Stop The Feeling Justin Timberlake Say Something Katy Perry Teenage Dream Katy Perry Dark Horse Katy Perry I Kissed a Girl Kings Of Leon Sex On Fire Lady Gaga Born This Way Lady Gaga Bad Romance Lady Gaga Just Dance Lady Gaga Poker Face Lady Gaga Yoü and I Lady Gaga Telephone BAD HABIT SONG LIST Artist Song Lady Gaga Shallow Letters to Cleo Here and Now Lizzo Truth Hurts Lorde Royals Madonna Vogue Madonna Into The Groove Madonna Holiday Madonna Border Line Madonna Lucky Star Madonna Ray of Light Meghan Trainor All About That Bass Michael Jackson Dirty Diana Michael Jackson Billie Jean Michael Jackson Human Nature Michael Jackson Black Or White Michael Jackson Bad Michael Jackson Wanna Be Startin’ Something Michael Jackson P.Y.T. -

Cheers, Yells, and Applauses Compiled by Daniel R

Cheers, Yells, and Applauses Compiled by Daniel R. Mott: Roundtable Staff Beaver: Cut a tree by tapping front teeth together, District 23, West Jordan, Utah slap your tail by slapping a palm against your With a couple contributions by Gary Hendra, Pack thigh, then yell, "TIMBER!" and Troop 92, Milpitas, CA Bear: Growl like a bear four times, turning halfway Applause stunts are a great way to recognize around each time. a person or den/patrol in a troop/pack meeting for some accomplishment they have performed. Be Bee: Put arms straight out and pretend to fly, sure before you start that everyone knows and while going "Buzz-z-z-z, Buzz-z-z-z." understands the applause stunt and how to do it. Applause stunts serve more then one purpose Ben Franklin: Hold both hands out in front of you they not only provide recognition but also help as if flying a kite. Jerk back suddenly while saying, liven up a meeting. Applause stunts need to be "Zap, Zap, Zap."(Lightening) fun. Strive for quality of performance in your stunts. Bicycle Cheer: Pump, Pump, Pump. How to Make a Cheers Box Big Hand: Leader says, "let's give them a big Find a Cheer laundry detergent box. Print out hand" everybody in the audience holds up one of these cheers, yells and applauses on card stock. their hands with the palm up. Cut the card stock up so that one cheer is on each piece of paper. Put them all in the Cheer box. Big Sneeze: Cup hands in front of nose and Take the box to your meetings or campfires. -

To Download a Sample Song List

Please note this is a sample song list, designed to show the style and range of music the band typically performs. This is the most comprehensive list available at this time; however, the band is NOT LIMITED to the selections below. Please ask your event producer if you have a specific song request that is not listed. CURRENT HITS Frank Ocean Slide Thinking Bout You Amy Winehouse Rehab Gary Clark Jr. Valerie Things Are Changin' You Know I’m No Good Guordan Banks Ariana Grande Keep You in Mind Greedy Into You Janelle Monae One Last Time Q.U.E.E.N. Side to Side Tightrope Yoga Beyonce Crazy in Love John Legend Deja Vu All of Me Formation Darkness and Light Love on Top Green Light Rocket If You’re Out There Sorry Let’s Get Lifted Work it Out Ordinary People Overload Bruno Mars Tonight 24K Magic Calling All My Lovelies John Mayer Chunky Vultures Grenade Just the Way You Are Joss Stone Locked Out of Heaven Walk on By Marry You Perm Justin Timberlake Treasure Can’t Stop the Feeling! That’s What I Like Drink You Away Uptown Funk FutureSex/LoveSound Versace on the Floor LoveStoned My Love Chris Brown Pusher Love Girl Fine China Rock Your Body Take You Down Senorita Suit & Tie Summer Love Daft Punk That Girl Get Lucky Until the End of Time What Goes Around...Comes Around Donny Hathaway Jealous Guy Katy Perry Love, Love, Love Chained to the Rhythm Dark Horse Drake Firework Hotline Bling Hot N Cold One Dance Teenage Dream Passionfruit Leon Bridges Brian McKnight Better Man Back At One Coming Home Don’t Stop Maroon 5 Chaka Khan Makes Me Wonder Tell -

Skyline Orchestras

PRESENTS… SKYLINE Thank you for joining us at our showcase this evening. Tonight, you’ll be viewing the band Skyline, led by Ross Kash. Skyline has been performing successfully in the wedding industry for over 10 years. Their experience and professionalism will ensure a great party and a memorable occasion for you and your guests. In addition to the music you’ll be hearing tonight, we’ve supplied a song playlist for your convenience. The list is just a part of what the band has done at prior affairs. If you don’t see your favorite songs listed, please ask. Every concern and detail for your musical tastes will be held in the highest regard. Please inquire regarding the many options available. Skyline Members: • VOCALS AND MASTER OF CEREMONIES…………………………..…….…ROSS KASH • VOCALS……..……………………….……………………………….….BRIDGET SCHLEIER • VOCALS AND KEYBOARDS..………….…………………….……VINCENT FONTANETTA • GUITAR………………………………….………………………………..…….JOHN HERRITT • SAXOPHONE AND FLUTE……………………..…………..………………DAN GIACOMINI • DRUMS, PERCUSSION AND VOCALS……………………………….…JOEY ANDERSON • BASS GUITAR, VOCALS AND UKULELE………………….……….………TOM MCGUIRE • TRUMPET…….………………………………………………………LEE SCHAARSCHMIDT • TROMBONE……………………………………………………………………..TIM CASSERA • ALTO SAX AND CLARINET………………………………………..ANTHONY POMPPNION www.skylineorchestras.com (631) 277 – 7777 DANCE: 24K — BRUNO MARS A LITTLE PARTY NEVER KILLED NOBODY — FERGIE A SKY FULL OF STARS — COLD PLAY LONELY BOY — BLACK KEYS AIN’T IT FUN — PARAMORE LOVE AND MEMORIES — O.A.R. ALL ABOUT THAT BASS — MEGHAN TRAINOR LOVE ON TOP — BEYONCE BAD ROMANCE — LADY GAGA MANGO TREE — ZAC BROWN BAND BANG BANG — JESSIE J, ARIANA GRANDE & NIKKI MARRY YOU — BRUNO MARS MINAJ MOVES LIKE JAGGER — MAROON 5 BE MY FOREVER — CHRISTINA PERRI FT. ED SHEERAN MR. SAXOBEAT — ALEXANDRA STAN BEST DAY OF MY LIFE — AMERICAN AUTHORS NO EXCUSES — MEGHAN TRAINOR BETTER PLACE — RACHEL PLATTEN NOTHING HOLDING ME BACK — SHAWN MENDES BLOW — KE$HA ON THE FLOOR — J. -

Most Requested Songs of 2012

Top 200 Most Requested Songs Based on millions of requests made through the DJ Intelligence® music request system at weddings & parties in 2012 RANK ARTIST SONG 1 Journey Don't Stop Believin' 2 Black Eyed Peas I Gotta Feeling 3 Lmfao Feat. Lauren Bennett And Goon Rock Party Rock Anthem 4 Lmfao Sexy And I Know It 5 Cupid Cupid Shuffle 6 AC/DC You Shook Me All Night Long 7 Diamond, Neil Sweet Caroline (Good Times Never Seemed So Good) 8 Bon Jovi Livin' On A Prayer 9 Maroon 5 Feat. Christina Aguilera Moves Like Jagger 10 Morrison, Van Brown Eyed Girl 11 Beyonce Single Ladies (Put A Ring On It) 12 DJ Casper Cha Cha Slide 13 B-52's Love Shack 14 Rihanna Feat. Calvin Harris We Found Love 15 Pitbull Feat. Ne-Yo, Afrojack & Nayer Give Me Everything 16 Def Leppard Pour Some Sugar On Me 17 Jackson, Michael Billie Jean 18 Lady Gaga Feat. Colby O'donis Just Dance 19 Pink Raise Your Glass 20 Beatles Twist And Shout 21 Cruz, Taio Dynamite 22 Lynyrd Skynyrd Sweet Home Alabama 23 Sir Mix-A-Lot Baby Got Back 24 Jepsen, Carly Rae Call Me Maybe 25 Usher Feat. Ludacris & Lil' Jon Yeah 26 Outkast Hey Ya! 27 Isley Brothers Shout 28 Clapton, Eric Wonderful Tonight 29 Brooks, Garth Friends In Low Places 30 Sister Sledge We Are Family 31 Train Marry Me 32 Kool & The Gang Celebration 33 Sinatra, Frank The Way You Look Tonight 34 Temptations My Girl 35 ABBA Dancing Queen 36 Loggins, Kenny Footloose 37 Flo Rida Good Feeling 38 Perry, Katy Firework 39 Houston, Whitney I Wanna Dance With Somebody (Who Loves Me) 40 Jackson, Michael Thriller 41 James, Etta At Last 42 Timberlake, Justin Sexyback 43 Lopez, Jennifer Feat. -

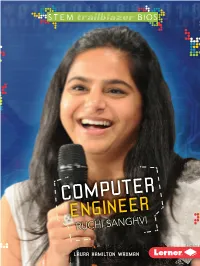

Computer Engineer Ruchi Sanghvi

STEM TRAILBLAZER BIOS COMPUTER ENGINEER RUCHI SANGHVI THIS PAGE LEFT BLANK INTENTIONALLY STEM TRAILBLAZER BIOS COMPUTER ENGINEER RUCHI SANGHVI LAURA HAMILTON WAXMAN Lerner Publications Minneapolis For Caleb, my computer wizard Copyright © 2015 by Lerner Publishing Group, Inc. All rights reserved. International copyright secured. No part of this book may be reproduced, stored in a retrieval system, or transmitted in any form or by any means—electronic, mechanical, photocopying, recording, or otherwise—without the prior written permission of Lerner Publishing Group, Inc., except for the inclusion of brief quotations in an acknowledged review. Lerner Publications Company A division of Lerner Publishing Group, Inc. 241 First Avenue North Minneapolis, MN 55401 USA For reading levels and more information, look up this title at www.lernerbooks.com. Content Consultant: Robert D. Nowak, PhD, McFarland-Bascom Professor in Engineering, Electrical and Computer Engineering, University of Wisconsin–Madison Library of Congress Cataloging-in-Publication Data Waxman, Laura Hamilton. Computer engineer Ruchi Sanghvi / Laura Hamilton Waxman. pages cm. — (STEM trailblazer bios) Includes index. ISBN 978–1–4677–5794–2 (lib. bdg. : alk. paper) ISBN 978–1–4677–6283–0 (eBook) 1. Sanghvi, Ruchi, 1982–—Juvenile literature. 2. Computer engineers—United States— Biography—Juvenile literature. 3. Women computer engineers—United States—Biography— Juvenile literature. I. Title. QA76.2.S27W39 2015 621.39092—dc23 [B] 2014015878 Manufactured in the United States of America 1 – PC – 12/31/14 The images in this book are used with the permission of: picture alliance/Jan Haas/Newscom, p. 4; © Dinodia Photos/Alamy, p. 5; © Tadek Kurpaski/flickr.com (CC BY 2.0), p. -

Riaa Gold & Platinum Awards

6/1/2015 — 6/30/2015 In June 2015, RIAA certified 126 Digital Single Awards and 6 Album Awards. Complete lists of all album, single and video awards dating all the way back to 1958 can be accessed at riaa.com. RIAA GOLD & JUNE 2015 PLATINUM AWARDS DIGITAL MULTI-PLATINUM SINGLE (53) Cert Date Title Artist Label Plat Level Rel. Date 6/19/2015 ALL I DO IS WIN (FEAT. T-PAIN, DJ KHALED E1 ENTERTAINMENT 3 2/9/2010 LUDACRIS, SNOOP DOGG & RICK ROSS 6/12/2015 RAP GOD EMINEM SHADY/AFTERMATH/ 2 10/15/2013 INTERSCOPE 6/12/2015 RAP GOD EMINEM SHADY/AFTERMATH/ 3 10/15/2013 INTERSCOPE 6/11/2015 GDFR FLO RIDA ATLANTIC RECORDS 2 10/21/2014 6/15/2015 DOG DAYS ARE OVER FLORENCE AND UNIVERSAL RECORDS 3 10/20/2009 THE MACHINE 6/15/2015 DOG DAYS ARE OVER FLORENCE AND UNIVERSAL RECORDS 4 10/20/2009 THE MACHINE 6/17/2015 ROUND HERE FLORIDA GEORGIA REPUBLIC NASHVILLE 2 12/4/2012 LINE 6/15/2015 PROBLEM GRANDE, ARIANA REPUBLIC RECORDS 6 4/28/2014 6/15/2015 PURSUIT OF HAPPINESS KID CUDI UNIVERSAL RECORDS 2 9/15/2009 (NIGHTMARE) 6/15/2015 PURSUIT OF HAPPINESS KID CUDI UNIVERSAL RECORDS 3 9/15/2009 (NIGHTMARE) 6/2/2015 ADORN MIGUEL RCA RECORDS 2 7/31/2012 6/30/2015 ONLY GIRL (IN THE WORLD) RIHANNA DEF JAM 5 10/13/2010 6/30/2015 DISTURBIA RIHANNA DEF JAM 5 6/17/2008 6/30/2015 DIAMONDS RIHANNA DEF JAM 5 11/16/2012 6/30/2015 POUR IT UP RIHANNA DEF JAM 2 11/19/2012 6/30/2015 WHAT’S MY NAME RIHANNA DEF JAM 4 11/16/2010 www.riaa.com GoldandPlatinum @RIAA @riaa_awards JUNE 2015 DIGITAL MULTI-PLATINUM SINGLE (53) continued.. -

Rihanna Cheers Drink to That Karaoke

Rihanna cheers drink to that karaoke Download Link: - Cheers. Cheers (Drink to That) (Explicit) karaoke video originally performed by Rihanna. Music Licensing, Backing. Rihanna - Cheers, Drink To That World`s finest Karaoke Playbacks from your favorite Stars. Sing the. (Lyrics) Cheers to the freakin' weekend I drink to that, yeah yeah Oh let the Jameson sink in I drink to that. Mix - Rihanna-Cheers(Drink to that) Official instrumental/karaoke(Lyrics onscreen)YouTube · Cheers (Drink. Rihanna - Cheers (Drink To That) (Karaoke Version) #Rihanna #CheersDrinkToThat. Cheers (Drink to That) (Explicit) - Karaoke HD (In the style of Rihanna) - Duration: singsongsmusic Rihanna Cheers - Drink to That (Lyrics). Top Rap Cheers (Drink to That) (Explicit) - Karaoke HD (In. Videoklip, překlad a text písně Cheers (Drink To That) od Rihanna. Life's too short to be sitting around miserable People gonna talk whether you doing ba. Cheers Drink To That Karaoke Hd In The Style Of Rihanna mp3 high quality download at MusicEel. Choose from several source of music. Discover Cheers (Drink To That) Instrumental MP3 as made famous by Rihanna. Download the best MP3 Karaoke Songs on Karaoke Version. Buscar resultados para: Cheers Drink To That Karaoke Version With Hook Cheers. Rihanna - Cheers (Drink To That) (Karaoke without Vocal). Cheers (Drink To That) - Originally by Rihanna [Ka. By Master Q Karaoke. • 1 song. Play on Spotify. 1. Cheers (Drink To that) (Originally Performed by Ri. Cheers (Drink to That) [Clean] [In the Style of Rihanna] [Karaoke Version]. By Ameritz - Karaoke. • 1 song, Play on Spotify. 1. Cheers (Drink to That). Search results for: Cheers Drink To That Karaoke Version With Hook Cheers. -

Rihanna Loud Deluxe Edition Zip

Rihanna loud deluxe edition zip El Album contiene 13 Canciones · Espero que te guste · Agrega a Tracklist: · S&M · What's My Name (feat. Drake) · Ch Album|Loud (Deluxe. Rihanna – Loud (Deluxe Edition) (Dirty) – - kbps Download Link: Code: Code. Rihanna – Loud (Bonus Track Version), Itunes Plus Full, Rihanna – Loud (Bonus Track Avril Lavigne - Goodbye Lullaby (Special Deluxe Edition) David Guetta. Download CD Loud: Deluxe Edition. by Camilo Fenty 5 comentários. Formato: mp3. Tamanho: 88 MB. S&M What's My Name? feat. Drake Cheers (Drink To That). Rihanna Loud Deluxe Album Free Download Zip > acer aspire one windows xp home edition ulcpc. Aguirre furore di dio. Play and Download all songs on Loud, a music album by Barbados artist Rihanna on rihanna loud full album download zip Download Link . Rihanna - Loud (Deluxe Edition) (). Download Album Rihanna - Loud (Deluxe Edition). Rihanna - Loud (Deluxe - iTunes).7z. MB American Idiot (Deluxe Version) MB .. Keane | Hopes and Fears (Deluxe Edition) [заказ 58]. Zip rihanna loud album boo deluxe Rihanna – ANTi (Album) Zip Results of eminem recovery deluxe edition viperial: Free download software. Download Descargar Cd De Rihanna Loud Deluxe Edition. 7/6/ Download File Rihanna Unapologetic (Deluxe Edition) zip. Login; Sign Up Forgot your. Listen to songs from the album Loud, including "S&M", "What's My Name? (feat. Drake)", "Cheers The Hits Collection, Vol. One (Deluxe Edition with Videos). Escucha canciones del álbum Loud, incluyendo "S&M", "What's My Name? (feat. Drake)" Love the Way You Lie (feat. Rihanna). Recovery (Deluxe Edition). Rihanna keeps on looking Filename: Rihanna - Loud - (Deluxe Edition).zip: Size: MB ( bytes) Report abuse: Uploaded by. -

Coders-Noten.Indd 1 16-05-19 09:20 1

Noten coders-noten.indd 1 16-05-19 09:20 1. DE SOFTWARE-UPDATE DIE DE WERKELIJKHEID HEEFT VERANDERD 1 Adam Fisher, Valley of Genius: The Uncensored History of Silicon Valley (As Told by the Hackers, Founders, and Freaks Who Made It Boom, (New York: Twelve, 2017), 357. 2 Fisher, Valley of Genius, 361. 3 Dit segment is gebaseerd op een interview van mij met Sanghvi, en op verscheidene boeken, artikelen en video’s over de begindagen van Facebook, waaronder: Daniela Hernandez, ‘Facebook’s First Female Engineer Speaks Out on Tech’s Gender Gap’, Wired, 12 december 2014, https://www.wired.com/2014/12/ruchi-qa/; Mark Zucker berg, ‘Live with the Original News Feed Team’, Facebookvideo, 25:36, 6 september 2016, https://www.facebook.com/zuck/ videos/10103087013971051; David Kirkpatrick, The Facebook Effect: The Inside Story of the Company That Is Connecting the World (New York: Simon & Schuster, 2011); INKtalksDirector, ‘Ruchi Sanghvi: From Facebook to Facing the Unknown’, YouTube, 11:50, 20 maart 2012, https://www.youtube.com/watch?v=64AaXC00bkQ; TechCrunch, ‘TechFellow Awards: Ruchi Sanghvi’, TechCrunch-video, 4:40, 4 maart 2012, https://techcrunch.com/video/techfellow-awards-ruchi- coders-noten.indd 2 16-05-19 09:20 sanghvi/517287387/; FWDus2, ‘Ruchi’s Story’, YouTube, 1:24, 10 mei 2013, https://www.youtube.com/watch?v=i86ibVt1OMM; alle video’s geraadpleegd op 16 augustus 2018. 4 Clare O’Connor, ‘Video: Mark Zucker berg in 2005, Talking Facebook (While Dustin Moskovitz Does a Keg Stand)’, Forbes, 15 augustus 2011, geraadpleegd op 7 oktober 2018, https://www.forbes.com/sites/ clareoconnor/2011/08/15/video-mark-Zucker berg-in-2005-talking- facebook-while-dustin-moskovitz-does-a-keg-stand/#629cb86571a5.