A Methodology for the Cryptanalysis of Classical Ciphers with Search

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


To What Extent Did British Advancements in Cryptanalysis During World War II Influence the Development of Computer Technology?

Portland State University, PDXScholar. Young Historians Conference 2016, Apr 28th, 9:00 AM - 10:15 AM. To What Extent Did British Advancements in Cryptanalysis During World War II Influence the Development of Computer Technology? Hayley A. LeBlanc, Sunset High School. Follow this and additional works at: https://pdxscholar.library.pdx.edu/younghistorians Part of the European History Commons, and the History of Science, Technology, and Medicine Commons. Let us know how access to this document benefits you. LeBlanc, Hayley A., "To What Extent Did British Advancements in Cryptanalysis During World War II Influence the Development of Computer Technology?" (2016). Young Historians Conference. 1. https://pdxscholar.library.pdx.edu/younghistorians/2016/oralpres/1 This Event is brought to you for free and open access. It has been accepted for inclusion in Young Historians Conference by an authorized administrator of PDXScholar. Please contact us if we can make this document more accessible: [email protected]. To what extent did British advancements in cryptanalysis during World War 2 influence the development of computer technology? Hayley LeBlanc. 1936 words. Table of Contents: Section A: Plan of Investigation; Section B: Summary of Evidence; Section C: Evaluation of Sources; Section D: Analysis; Section E: Conclusion; Section F: List of Sources; Appendix A: Explanation of the Enigma Machine; Appendix B: Glossary of Cryptology Terms. Section A: Plan of Investigation. This investigation will focus on the advancements made in the field of computing by British codebreakers working on German ciphers during World War 2 (1939-1945). -

Introduction to Cryptography Teachers' Reference

Introduction to Cryptography Teachers’ Reference. Dear Teachers! Here are some guidelines so that your students can benefit from these modules. I am very hopeful that you will enjoy conducting the session. After Module-1: 1. Scytale Decryption. In your class, ask your students to devise a method to decrypt the message that has just been encrypted using the scytale. Encourage them to look around to find a decryption tool. Ask your students to cut a strip of paper some 4mm wide and 32cm long, with light pencil lines drawn on it at 4mm intervals, to give it the following shape. Now write the cipher text on the paper strip in the following way. t i t i r s a e e e e e b h y w i t i l r f d n m g o e e o h v n t t r a a e c a g o o w d m h Encourage them once again to find a tool around them to decrypt it. Ask them to look for a very familiar thing they must have been using since their early school days to do their work. By now many of them will have figured out that you are asking for a pencil. Now ask them to use the pencil to decrypt the cipher text; the only reasonable way is to wrap the paper strip around the pencil. The plain text will be visible along the six side faces of the pencil. Encourage them to reason about how it worked. There is a connection between the six rows of the table in which the plain text was filled and the six faces of the pencil. -
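The link between the six table rows and the six pencil faces can be sketched in a few lines of Python. This is a minimal illustration, not the module's own material: the function names `scytale_encrypt` and `scytale_decrypt` are hypothetical, and it assumes the message length is an exact multiple of the number of faces, that the plain text is written into the table row by row, and that the strip is read off column by column.

```python
def scytale_encrypt(plaintext: str, faces: int = 6) -> str:
    """Write the text into `faces` rows, then read it off column by column."""
    if len(plaintext) % faces != 0:
        raise ValueError("message length must be a multiple of the face count")
    width = len(plaintext) // faces
    # Each row corresponds to one face of the pencil.
    rows = [plaintext[r * width:(r + 1) * width] for r in range(faces)]
    return "".join(rows[r][c] for c in range(width) for r in range(faces))


def scytale_decrypt(ciphertext: str, faces: int = 6) -> str:
    """Every `faces`-th letter on the strip lands on the same pencil face."""
    if len(ciphertext) % faces != 0:
        raise ValueError("message length must be a multiple of the face count")
    return "".join(ciphertext[r::faces] for r in range(faces))


if __name__ == "__main__":
    # Small example with three faces so the rows are easy to follow:
    # rows ABCD / EFGH / IJKL are read column-wise.
    print(scytale_encrypt("ABCDEFGHIJKL", faces=3))  # AEIBFJCGKDHL
    print(scytale_decrypt("AEIBFJCGKDHL", faces=3))  # ABCDEFGHIJKL
```

Decryption needs nothing more than the face count, which is why any pencil with the right number of faces serves as the key.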

Breaking the Enigma Cipher

Marian Rejewski, An Application of the Theory of Permutations in Breaking the Enigma Cipher. Applicationes Mathematicae, 16, No. 4, Warsaw 1980. Received on 13.5.1977. Introduction. Cryptology, i.e., the science of ciphers, has applied some mathematical methods since the very beginning, mainly the elements of probability theory and statistics. Mechanical and electromechanical ciphering devices, introduced into practice in the twenties of our century, considerably broadened the field of applications of mathematics in cryptology. This is particularly true for the theory of permutations, known for over a hundred years (1) and formerly called the theory of substitutions. Its application by Polish cryptologists made it possible, at the turn of 1932–33, to break the German Enigma cipher, which subsequently exerted a considerable influence on the course of the 1939–1945 war operations in the European and African as well as the Far East war theatres (see [1]–[4]). The present paper is intended to show, necessarily in great brevity and simplification, some aspects of the Enigma cipher breaking, in particular those which used the theory of permutations. This paper is not a systematic outline of the process of breaking the Enigma cipher, but it presents an important part of it. It should be mentioned that the present paper is the first publication on the mathematical background of the Enigma cipher breaking. There exist, however, several reports related to this topic by the same author: one, written in 1942, can be found in the General Wladyslaw Sikorski Historical Institute in London, and the other, written in 1967, is deposited in the Military Historical Institute in Warsaw. -

Classical Encryption Techniques

CPE 542: CRYPTOGRAPHY & NETWORK SECURITY. Chapter 2: Classical Encryption Techniques. Dr. Lo’ai Tawalbeh, Computer Engineering Department, Jordan University of Science and Technology, Jordan. Dr. Lo’ai Tawalbeh, Fall 2005. Introduction: Basic Terminology • plaintext - the original message • ciphertext - the coded message • key - information used in encryption/decryption, and known only to sender/receiver • encipher (encrypt) - converting plaintext to ciphertext using key • decipher (decrypt) - recovering plaintext from ciphertext using key • cryptography - study of encryption principles/methods/designs • cryptanalysis (code breaking) - the study of principles/methods of deciphering ciphertext. Cryptographic Systems: Cryptographic systems are categorized according to: 1. The operation used in transforming plaintext to ciphertext: • Substitution: each element in the plaintext is mapped into another element • Transposition: the elements in the plaintext are re-arranged. 2. The number of keys used: • Symmetric (private-key): both the sender and receiver use the same key • Asymmetric (public-key): sender and receiver use different keys. 3. The way the plaintext is processed: • Block cipher: inputs are processed one block at a time, producing a corresponding output block. • Stream cipher: inputs are processed continuously, producing one element at a time (bit, Cryptographic Systems: Symmetric Encryption Model. Cryptographic Systems Requirements • two requirements for secure use of symmetric encryption: 1. a strong encryption algorithm 2. a secret key known only to sender / receiver • Y = E_k(X), where X: the plaintext, Y: the ciphertext • X = D_k(Y) • assume encryption algorithm is known • implies a secure channel to distribute key -

Enhancing the Security of Caesar Cipher Substitution Method Using a Randomized Approach for More Secure Communication

International Journal of Computer Applications (0975 – 8887), Volume 129 – No. 13, November 2015. Enhancing the Security of Caesar Cipher Substitution Method using a Randomized Approach for more Secure Communication. Atish Jain, Ronak Dedhia, Abhijit Patil, Dept. of Computer Engineering, D.J. Sanghvi College of Engineering, Mumbai University, Mumbai, India. ABSTRACT: Caesar cipher is an ancient, elementary method of encrypting plain text message into cipher text protecting it from adversaries. However, with the advent of powerful computers, there is a need for increasing the complexity of such techniques. This paper contributes in the area of classical cryptography by providing a modified and expanded version for Caesar cipher using knowledge of mathematics and computer science. To increase the strength of this classical encryption technique, the proposed modified algorithm uses the concepts of affine ciphers, transposition ciphers and randomized substitution techniques to create a cipher text which is nearly impossible to decode. [...] communication can be encoded to prevent their contents from being disclosed through various techniques like interception of message or eavesdropping. Different ways include using ciphers, codes, substitution, etc. so that only the authorized people can view and interpret the real message correctly. Cryptography concerns itself with four main objectives, namely, 1) Confidentiality, 2) Integrity, 3) Non-repudiation and 4) Authentication. [1] Cryptography is divided into two types, Symmetric key and Asymmetric key cryptography. In Symmetric key cryptography a single key is shared between sender and receiver. The sender uses the shared key and encryption -

Simple Substitution Cipher Evelyn Guo

Simple Substitution Cipher, Evelyn Guo. Topic: Data Frequency Analysis, Logic. Curriculum Competencies: • Develop thinking strategies to solve puzzles and play games • Think creatively and with curiosity and wonder when exploring problems • Apply flexible and strategic approaches to solve problems • Solve problems with persistence and a positive disposition. Grade Levels: G3 - G12. Resource: University of Cambridge Millennium Mathematics Project - NRICH https://nrich.maths.org/4957 Cipher Challenge Toolkit https://nrich.maths.org/7983 Practical Cryptography website http://practicalcryptography.com/ciphers/classical-era/simple-substitution/ Materials: iPad and laptop that can run an Excel spreadsheet. Flip chart with tips and hints for different levels of players. Booklet flyers for anyone to take home. Printed coded message and work sheet (help sheet) with plain alphabet and cipher alphabet (left blank). Pencils. Extension: • Depending on the individual player’s interest and math abilities, introduce easier (Atbash Cipher, Caesar Cipher) or harder (AutoKey Cipher) ways to encrypt messages. • Show students how to use the Practical Cryptography website to create their own encrypted message instantly. • Allow players to create their own cipher method. • Understand that in any language some letters tend to appear more often than other letters. Activity Sheet for Substitution Cipher. Opening Question: Which letters do you think are the most common in English? Start by performing a frequency analysis on some selected text to see which letters appear most often. It is better to use longer texts, as a short text might have an unusual distribution of letters, like "the quick brown fox jumps over the lazy dog". Introduce the Problem: In the coded text attached, every letter in the original message was switched with another letter. -
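The frequency analysis suggested in the opening question can be tried with a short Python sketch. It is an illustration only, not part of the activity sheet: the function name `letter_frequencies` is hypothetical, and it assumes only the 26 unaccented English letters should be counted, ignoring case.

```python
from collections import Counter


def letter_frequencies(text: str) -> list[tuple[str, float]]:
    """Return (letter, relative frequency) pairs, most common first."""
    letters = [ch for ch in text.lower() if "a" <= ch <= "z"]
    total = len(letters)
    counts = Counter(letters)
    # most_common() sorts by count, highest first; an empty text gives [].
    return [(ch, n / total) for ch, n in counts.most_common()]


if __name__ == "__main__":
    pangram = "the quick brown fox jumps over the lazy dog"
    # A pangram uses every letter at least once, so its distribution is
    # far flatter than ordinary English prose of the same length.
    for letter, share in letter_frequencies(pangram)[:5]:
        print(f"{letter}: {share:.3f}")
```

Running the same function on a longer passage of ordinary English and on the coded message gives two rankings that players can line up when guessing the cipher alphabet.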

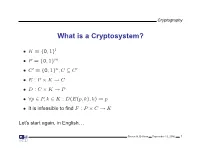

What Is a Cryptosystem?

Cryptography: What is a Cryptosystem? • K = {0, 1}^l • P = {0, 1}^m • C′ = {0, 1}^n, C ⊆ C′ • E : P × K → C • D : C × K → P • ∀p ∈ P, k ∈ K : D(E(p, k), k) = p • It is infeasible to find F : P × C → K. Let’s start again, in English. Steven M. Bellovin, September 13, 2006. What is a Cryptosystem? A cryptosystem is a pair of algorithms that take a key and convert plaintext to ciphertext and back. Plaintext is what you want to protect; ciphertext should appear to be random gibberish. The design and analysis of today’s cryptographic algorithms is highly mathematical. Do not try to design your own algorithms. A Tiny Bit of History • Encryption goes back thousands of years • Classical ciphers encrypted letters (and perhaps digits), and yielded all sorts of bizarre outputs. • The advent of military telegraphy led to ciphers that produced only letters. Codes vs. Ciphers • Ciphers operate syntactically, on letters or groups of letters: A → D, B → E, etc. • Codes operate semantically, on words, phrases, or sentences, per this 1910 codebook. A 1910 Commercial Codebook. Commercial Telegraph Codes • Most were aimed at economy • Secrecy from casual snoopers was a useful side-effect, but not the primary motivation • That said, a few such codes were intended for secrecy; I have some in my collection, including one intended for union use -
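The correctness condition D(E(p, k), k) = p can be checked exhaustively for a toy instance. The sketch below is only an illustration under loud assumptions: it takes l = m = n, represents bit strings as small integers, and uses XOR for both E and D. XOR is chosen because it is easy to verify, not because the slides use it, and it does not satisfy the infeasibility requirement on F, since k = p XOR c. The names `encrypt`, `decrypt`, and `round_trip_holds` are hypothetical.

```python
def encrypt(p: int, k: int) -> int:
    """Toy E : P x K -> C, here a bitwise XOR (illustration only, not secure)."""
    return p ^ k


def decrypt(c: int, k: int) -> int:
    """Toy D : C x K -> P, the inverse of encrypt for the same key."""
    return c ^ k


def round_trip_holds(n_bits: int) -> bool:
    """Check D(E(p, k), k) == p for every p and k in {0, 1}^n_bits."""
    size = 2 ** n_bits
    return all(
        decrypt(encrypt(p, k), k) == p
        for p in range(size)
        for k in range(size)
    )


if __name__ == "__main__":
    print(round_trip_holds(4))  # True
```

The check covers only the round-trip property; the last bullet, that recovering the key from plaintext and ciphertext is infeasible, is exactly what this toy fails and what real designs must provide.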

A Covert Encryption Method for Applications in Electronic Data Interchange

Technological University Dublin, ARROW@TU Dublin. Articles, School of Electrical and Electronic Engineering, 2009-01-01. A Covert Encryption Method for Applications in Electronic Data Interchange. Jonathan Blackledge, Technological University Dublin, [email protected]; Dmitry Dubovitskiy, Oxford Recognition Limited, [email protected]. Follow this and additional works at: https://arrow.tudublin.ie/engscheleart2 Part of the Digital Communications and Networking Commons, Numerical Analysis and Computation Commons, and the Software Engineering Commons. Recommended Citation: Blackledge, J., Dubovitskiy, D.: A Covert Encryption Method for Applications in Electronic Data Interchange. ISAST Journal on Electronics and Signal Processing, vol: 4, issue: 1, pages: 107-128, 2009. doi:10.21427/D7RS67 This Article is brought to you for free and open access by the School of Electrical and Electronic Engineering at ARROW@TU Dublin. It has been accepted for inclusion in Articles by an authorized administrator of ARROW@TU Dublin. For more information, please contact [email protected], [email protected]. This work is licensed under a Creative Commons Attribution-Noncommercial-Share Alike 4.0 License. A Covert Encryption Method for Applications in Electronic Data Interchange. Jonathan M Blackledge, Fellow, IET, Fellow, BCS and Dmitry A Dubovitskiy, Member IET. Abstract: A principal weakness of all encryption systems is that the output data can be ‘seen’ to be encrypted. In other words, encrypted data provides a ‘flag’ on the potential value of the information that has been encrypted. [...] to make sure that the ciphertext is relatively strong (but not too strong!) and that the information extracted is of good quality in terms of providing the attacker with ‘intelligence’ -

Amy Bell Abilene, TX December 2005

Compositional Cryptology. Thesis Presented to the Honors Committee of McMurry University In partial fulfillment of the requirements for Undergraduate Honors in Math, By Amy Bell, Abilene, TX, December 2005. Acknowledgements: I could not have completed this thesis without all the support of my professors, family, and friends. Dr. McCoun especially deserves many thanks for helping me to develop the idea of compositional cryptology and for all the countless hours spent discussing new ideas and ways to expand my thesis. Because of his persistence and dedication, I was able to learn and go deeper into the subject matter than I ever expected. My committee members, Dr. Rittenhouse and Dr. Thornburg, were also extremely helpful in giving me great advice for presenting my thesis. I also want to thank my family for always supporting me through everything. Without their love and encouragement I would never have been able to complete my thesis. Thanks also should go to my wonderful roommates who helped to keep me motivated during the final stressful months of my thesis. I especially want to thank my fiancé, Gian Falco, who has always believed in me and given me so much love and support throughout my college career. There are many more professors, coaches, and friends that I want to thank not only for encouraging me with my thesis, but also for helping me through all my pursuits at school. Thank you to all of my McMurry family! Preface: The goal of this research was to gain a deeper understanding of some existing cryptosystems, to implement these cryptosystems in a computer programming language of my choice, and to discover whether the composition of cryptosystems leads to greater security. -

Hagelin) by Williaj-1 F

REF ID: A2436259. Declassified and approved for release by NSA on 07-22-2014 pursuant to E.O. 13526. TOP SECRET. REPORT OF VISIT TO CRYPTO A.G. (HAGELIN) BY WILLIAM F. FRIEDMAN, SPECIAL ASSISTANT TO THE DIRECTOR, NATIONAL SECURITY AGENCY, 21 - 28 FEBRUARY 1955. I. INTRODUCTION. 1. In accordance with Letter Orders 273 dated 27 January 1955, as modified by L.O. 273-A dated [illegible] February 1955, I left Washington via MATS at 1500 hours on 18 February 1955, arrived at Orly Field, Paris, at 1430 hours on 19 February, and at Zug, Switzerland, at 1830 the same day. I spent the next few days [illegible] Boris Hagelin, Junior, for the purpose of learning the status of their new developments in crypto-apparatus and of making an approach and a proposal to Mr. Hagelin Sr., as was recently authorized by USCIB and concurred in by LSIB. Upon completion of that part of my mission, I left Zug at 1400 hours on 28 February and proceeded by [illegible] to Zurich, [illegible] to London, arriving in London at 1845 that evening [illegible]. 2. The following report is based upon notes made of the substance of several talks with the Hagelins, at times in separate meetings with each of them and at other times in meetings with both of them. -

A Cipher Based on the Random Sequence of Digits in Irrational Numbers

https://doi.org/10.48009/1_iis_2016_14-25 Issues in Information Systems, Volume 17, Issue I, pp. 14-25, 2016. A CIPHER BASED ON THE RANDOM SEQUENCE OF DIGITS IN IRRATIONAL NUMBERS. J. L. González-Santander, [email protected], Universidad Católica de Valencia “san Vicente mártir”; G. Martín González, [email protected], Universidad Católica de Valencia “san Vicente mártir”. ABSTRACT: An encryption method combining a transposition cipher with a one-time pad cipher is proposed. The transposition cipher prevents the malleability of the messages, and the randomness of the one-time pad cipher is based on the normality of "almost" all irrational numbers. Further, authentication and perfect forward secrecy are implemented. This method is quite suitable for communication within groups of people who know each other in advance, such as mobile chat groups. Keywords: One-time Pad Cipher, Transposition Ciphers, Chat Mobile Groups Privacy, Forward Secrecy. INTRODUCTION: In cryptography, a cipher is a procedure for encoding and decoding a message in such a way that only authorized parties can write and read information about the message. Generally speaking, there are two main cipher methods, transposition and substitution ciphers, both known from Antiquity. For instance, the Caesar cipher consists of substituting each letter of the plaintext with the letter some fixed number of positions further down the alphabet. The name of this cipher comes from Julius Caesar, because he used this method with a shift of three to communicate with his generals (Suetonius, c. 69-122 AD). In ancient Sparta, the transposition cipher entailed the use of a simple device, the scytale (skytálē), to encrypt and decrypt messages (Plutarch, c. -
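The Caesar substitution described in the introduction, with its shift of three, can be written out as a short Python sketch. This is an illustration rather than anything taken from the paper: the function name `caesar` is hypothetical, and it assumes the 26-letter English alphabet, wraps around at the end of the alphabet, and leaves every other character unchanged.

```python
import string


def caesar(text: str, shift: int = 3) -> str:
    """Shift each English letter `shift` places down the alphabet."""
    result = []
    for ch in text:
        if ch in string.ascii_uppercase:
            base = ord("A")
        elif ch in string.ascii_lowercase:
            base = ord("a")
        else:
            result.append(ch)  # digits, spaces, punctuation stay as they are
            continue
        # Modulo 26 wraps X, Y, Z back around to A, B, C.
        result.append(chr((ord(ch) - base + shift) % 26 + base))
    return "".join(result)


if __name__ == "__main__":
    secret = caesar("ABC xyz")       # shift of three
    print(secret)                    # DEF abc
    print(caesar(secret, shift=-3))  # ABC xyz
```

Decryption is the same function with the shift negated, which is also why the cipher offers so little protection: there are only 25 non-trivial shifts to try.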

The Mathematics of Secrets.Pdf

THE MATHEMATICS OF SECRETS: CRYPTOGRAPHY FROM CAESAR CIPHERS TO DIGITAL ENCRYPTION. JOSHUA HOLDEN. PRINCETON UNIVERSITY PRESS, PRINCETON AND OXFORD. Copyright © 2017 by Princeton University Press. Published by Princeton University Press, 41 William Street, Princeton, New Jersey 08540. In the United Kingdom: Princeton University Press, 6 Oxford Street, Woodstock, Oxfordshire OX20 1TR. press.princeton.edu Jacket image courtesy of Shutterstock; design by Lorraine Betz Doneker. All Rights Reserved. Library of Congress Cataloging-in-Publication Data. Names: Holden, Joshua, 1970– author. Title: The mathematics of secrets : cryptography from Caesar ciphers to digital encryption / Joshua Holden. Description: Princeton : Princeton University Press, [2017] | Includes bibliographical references and index. Identifiers: LCCN 2016014840 | ISBN 9780691141756 (hardcover : alk. paper) Subjects: LCSH: Cryptography--Mathematics. | Ciphers. | Computer security. Classification: LCC Z103 .H664 2017 | DDC 005.8/2—dc23 LC record available at https://lccn.loc.gov/2016014840 British Library Cataloging-in-Publication Data is available. This book has been composed in Linux Libertine. Printed on acid-free paper. ∞ Printed in the United States of America. 1 3 5 7 9 10 8 6 4 2. To Lana and Richard for their love and support. CONTENTS: Preface; Acknowledgments; Introduction to Ciphers and Substitution; 1.1 Alice and Bob and Carl and Julius: Terminology and Caesar Cipher; 1.2 The Key to the Matter: Generalizing the Caesar Cipher; 1.3 Multiplicative Ciphers