Engaging with London's Diverse Communities

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

WEEK in BRUSSELS Week Ending Friday 23 January

WEEK IN BRUSSELS Week ending Friday 23 January SMMT strengthens UK auto EU to support studies on dialogue with EU electric vehicle traffic SMMT held its reception in Brussels ‘The UK development automotive industry in Europe’ this week. The reception brought together senior automotive industry The EU’s TEN-T Programme will back a market study executives, UK and EU government officials, MEPs and a pilot on the deployment of electric vehicles and and representatives of the European Commission. A their charging infrastructure along the main highways keynote speech was delivered by Lord Hill, in southern Sweden, Denmark and northern Germany. Commissioner for Financial Stability, Financial The €1 million project aims to contribute to removing Services and Capital Markets Union, in which he barriers for long distance ‘green’ travel across announced the re-launch of the European borders. One of the main barriers to the widespread Commission’s CARS 2020 initiative. Also speaking at use of electric vehicles on European roads is lack of the event were Glenis Willmott MEP, Syed Kamall convenient service stations and their incompatibility MEP and Mike Hawes, SMMT Chief Executive. The with other kinds of vehicles. This project will carry out event presented a key opportunity to demonstrate the feasibility studies on consumer preferences and user strength and importance of the UK automotive acceptance of electric vehicles and the related industry to European colleagues, and to emphasise charging infrastructure, as well as the supporting how having a strong voice in Europe is critical to the consumer services. It will also make a pilot continuing success of the UK automotive sector. -

A Guide to the EU Referendum Debate

‘What country, friends, is this?’ A guide to the EU referendum debate ‘What country, friends, is this?’ A guide to the EU referendum debate Foreword 4 Professor Nick Pearce, Director of the Institute for Policy Research Public attitudes and political 6 discourses on the EU in the Brexit referendum 7 ‘To be or not to be?’ ‘Should I stay or should I go?’ and other clichés: the 2016 UK referendum on EU membership Dr Nicholas Startin, Deputy Head of the Department of Politics, Languages & International Studies 16 The same, but different: Wales and the debate over EU membership Dr David Moon, Lecturer, Department of Politics, Languages & International Studies 21 The EU debate in Northern Ireland Dr Sophie Whiting, Lecturer, Department of Politics, Languages & International Studies 24 Will women decide the outcome of the EU referendum? Dr Susan Milner, Reader, Department of Politics, Languages & International Studies 28 Policy debates 29 Brexit and the City of London: a clear and present danger Professor Chris Martin, Professor of Economics, Department of Economics 33 The economics of the UK outside the Eurozone: what does it mean for the UK if/when Eurozone integration deepens? Implications of Eurozone failures for the UK Dr Bruce Morley, Lecturer in Economics, Department of Economics 38 Security in, secure out: Brexit’s impact on security and defence policy Professor David Galbreath, Professor of International Security, Associate Dean (Research) 42 Migration and EU membership Dr Emma Carmel, Senior Lecturer, Department of Social & Policy Sciences 2 ‘What country, friends, is this?’ A guide to the EU referendum debate 45 Country perspectives 46 Debating the future of Europe is essential, but when will we start? The perspective from France Dr Aurelien Mondon, Senior Lecturer, Department of Politics, Languages & International Studies 52 Germany versus Brexit – the reluctant hegemon is not amused Dr Alim Baluch, Teaching Fellow, Department of Politics, Languages & International Studies 57 The Brexit referendum is not only a British affair. -

Proposed Cycling and Pedestrian Improvements on A21 Farnborough Way at Green Street Green

Proposed cycling and pedestrian improvements on A21 Farnborough Way at Green Street Green Consultation Report October 2013 1 Contents 1. Introduction……………………………………………….….……………………..2 2. The Consultation.................………………………………………………………4 3. Results of the consultation…......………………………………………….......... 4 4. Conclusion………………………………………………………………………….10 Appendix A – copy of consultation letter…………………………..……...….…11 Appendix B – list of stakeholders consulted.……………………………..…….13 2 1 Introduction Background In 2005 a petition with 100 signatures was received by Transport for London (TfL) from Bob Neill (London Assembly Member), asking us to provide a signal controlled crossing on the A21 near the junction with Cudham Lane North. An investigation into providing improved crossing facilities was initiated the same year resulting in a design consisting of a staggered signal controlled crossing which was consulted on in January 2010. Subsequently it was decided that the scheme was not deliverable as collision data for the road was not sufficient to justify the cost of the scheme or impact on general traffic movements. An alternative scheme for addressing pedestrian improvements was subsequently designed (as detailed below). Objectives The proposals are designed to improve pedestrian and cycling accessibility and connectivity around the A21 Green Street Green roundabout and in particular to provide improved pedestrian and cycle links between A21 Farnborough Way and A21 Sevenoaks Way as well as the residential developments in Cudham Lane North and the centre of Green Street Green, without significant impact on general traffic movements. General Scope of proposals The proposals would see the introduction of a new wide uncontrolled pedestrian and cycle crossing facility across A21 Farnborough Way, improvements to the existing crossing facility across Cudham Lane North, and improved shared pedestrian and cycle routes. -

View Call List: Chamber PDF File 0.08 MB

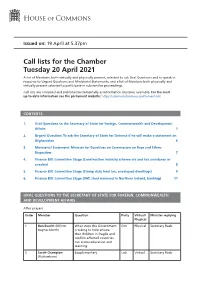

Issued on: 19 April at 5.37pm Call lists for the Chamber Tuesday 20 April 2021 A list of Members, both virtually and physically present, selected to ask Oral Questions and to speak in response to Urgent Questions and Ministerial Statements; and a list of Members both physically and virtually present selected to participate in substantive proceedings. Call lists are compiled and published incrementally as information becomes available. For the most up-to-date information see the parliament website: https://commonsbusiness.parliament.uk/ CONTENTS 1. Oral Questions to the Secretary of State for Foreign, Commonwealth and Development Affairs 1 2. Urgent Question: To ask the Secretary of State for Defence if he will make a statement on Afghanistan 6 3. Ministerial Statement: Minister for Equalities on Commission on Race and Ethnic Disparities 7 4. Finance Bill: Committee Stage (Construction industry scheme etc and tax avoidance or evasion) 8 5. Finance Bill: Committee Stage (Stamp duty land tax, enveloped dwellings) 9 6. Finance Bill: Committee Stage (VAT, steel removed to Northern Ireland, banking) 11 ORAL QUESTIONS TO THE SECRETARY OF STATE FOR FOREIGN, COMMONWEALTH AND DEVELOPMENT AFFAIRS After prayers Order Member Question Party Virtual/ Minister replying Physical 1 Ben Everitt (Milton What steps the Government Con Physical Secretary Raab Keynes North) is taking to help ensure that children in fragile and conflict-affected countries can access education and learning. 2 Sarah Champion Supplementary Lab Virtual Secretary Raab (Rotherham) 2 Tuesday 20 April 2021 Order Member Question Party Virtual/ Minister replying Physical 3 Chris Law (Dundee Supplementary SNP Virtual Secretary Raab West) 4 + 5 Rachel Hopkins (Luton What recent assessment he Lab Virtual S+B5:F21ecretary South) has made of the (a) human- Raab itarian and (b) human rights situation in Tigray, Ethiopia. -

Your Darwin News Updates

News from Darwin Conservatives Spring 2020 YOUR DARWIN NEWS UPDATES From Councillor Scoates. "I like to keep in regular contact with local residents. I value discussing local issues with people who are involved or affected by them." "I'm very pleased to have been places of interest in the Village invited to Residents’ Meeting at and local areas. Downe, Cudham, Blackness • The Permit Parking scheme Lane and Beechwood estate. introduced on the Beechwood The updates I get from these Estate has concluded meetings raise issues that I try satisfactorily and residents are my best to resolve. The many happy with the current Residents’ Associations are a arrangements. great conduit for issues that are not raised with me personally. • The over-flow car park for Christmas Tree Farm has now • There’s good news for been made official. The car Downe Village. Along with park has proven to reduce funds raised by the Downe parking problems surrounding Residents’ Association, Bromley the Farm during busy times. Council have agreed to fund an Christmas Tree Farm have also ‘Information Board’ to be allowed it to be used for erected in the centre of the community events and is very Village. This will point out much run for the Village." Darwin Ward Councillor, Richard Scoates CRIME-FIGHTING HOUSING AND YOUTH WORKER PLANNING Crime across London is on the rise. In Since his election in 2010, Councillor fact, knife crime is the highest it’s been in Richard Scoates has worked tirelessly to 11 years. It has risen by 39%. That’s 4500 protect our environment and our precious more offences since Sadiq Khan became Green Belt land Mayor. -

On Faithfulness and Factuality in Abstractive Summarization

On Faithfulness and Factuality in Abstractive Summarization Joshua Maynez∗ Shashi Narayan∗ Bernd Bohnet Ryan McDonald Google Research fjoshuahm,shashinarayan,bohnetbd,[email protected] Abstract understanding how maximum likelihood training and approximate beam-search decoding in these It is well known that the standard likelihood models lead to less human-like text in open-ended training and approximate decoding objectives text generation such as language modeling and in neural text generation models lead to less human-like responses for open-ended tasks story generation (Holtzman et al., 2020; Welleck such as language modeling and story gener- et al., 2020; See et al., 2019). In this paper we ation. In this paper we have analyzed limi- investigate how these models are prone to gener- tations of these models for abstractive docu- ate hallucinated text in conditional text generation, ment summarization and found that these mod- specifically, extreme abstractive document summa- els are highly prone to hallucinate content that rization (Narayan et al., 2018a). is unfaithful to the input document. We con- Document summarization — the task of produc- ducted a large scale human evaluation of sev- eral neural abstractive summarization systems ing a shorter version of a document while preserv- to better understand the types of hallucinations ing its information content (Mani, 2001; Nenkova they produce. Our human annotators found and McKeown, 2011) — requires models to gener- substantial amounts of hallucinated content in ate text that is not only human-like but also faith- all model generated summaries. However, our ful and/or factual given the document. The exam- analysis does show that pretrained models are ple in Figure1 illustrates that the faithfulness and better summarizers not only in terms of raw factuality are yet to be conquered by conditional metrics, i.e., ROUGE, but also in generating text generators. -

Report Title Appointments and Urgency Committee – Appointment of Chair

Report title Appointments and Urgency Committee – Appointment of Chair Meeting Date Authority 26 March 2015 Report by Document Number Clerk to the Authority FEP 2431 Public Summary The Mayor has appointed Gareth Bacon AM as Chairman of the London Fire and Emergency Planning Authority, with effect from 27 March 2015.This report seeks approval for the proposed Chairman to be appointed Chairman of the Authority’s Appointments and Urgency Committee, with effect from 27 March 2015. Recommendation 1. That Gareth Bacon AM be appointed Chairman of the Appointments and Urgency Committee with effect from 27 March 2015. Background 2. At its meeting on 29 January 2015, the Chairman announced that he had written to the Mayor and the Clerk of the Authority to inform them of his intention to stand down as Chairman of the Authority immediately after the full Authority meeting on 26 March 2015. 3. On 3 February 2015, the Mayor wrote to the Chairman of the London Assembly to inform the Assembly of his decision to nominate Gareth Bacon AM as Chairman of LFEPA from 27 March 2015 to 16 June 2015. On 23 February, the London Assembly held a Confirmation Hearings Committee meeting to consider this appointment and to put questions to the nominated Chairman. At the rise of the meeting, the Chair of the Confirmation Hearings Committee wrote to the Mayor to confirm that Gareth Bacon AM should be appointed to the role. The Mayor confirmed on 2 March 2015 that he had appointed Gareth Bacon to the office of Chairman of the Authority, with effect from 27 March 2015. -

Bromley CAMRA June 2019 Newsletter Dear CAMRA Member

This email was sent from your CAMRA Branch Bromley CAMRA June 2019 Newsletter Dear CAMRA member, Items of interest this month include: Branch Social Events Beckenham Beer and Cider Festival 2019 Branch Committee Vacancies Beer Scoring Using the Good Beer Guide App Summer of Pub Bromley Cramble 2019 An introduction to Maggie Hopgood “Its not all Lager” Branch Social Events 11 Jun. Bromley North Social. 7.30pm Crown & Anchor, 19 Park Rd, BR1 3HJ; 8.30pm Freelands Tavern, 31 Freelands Rd, BR1 3HZ; 9.30pm Red Lion, 10 North Rd BR1 3LG. 18 Jun. Hayes Social. 7.30pm Royal British Legion, 14 Station Hill, Hayes, BR2 7DJ; 8.30pm Real Ale Way, 55 Station Approach, BR2 7EB. 25 Jun. Committee Meeting. 7.30pm Queen’s Head, 25 High St. Downe, BR6 7US. All members are welcome to attend 29 Jun. The Annual Crystal Palace Triangle Social. with Croydon & Sutton & SEL Branches. Starts 12.00 noon at Douglas Fir, 144 Anerley Rd, Anerley SE20 8DL; 1.10pm; Alma, 95 Church Rd, Crystal Palace SE19 2TA; 2pm White Hart, 96 Church Rd, Upper Norwood SE19 2EZ; 2.50pm Postal Order, 33 Westow St. SE19 3RW; 3.50pm Sparrowhawk, 2 Westow Hill, Upper Norwood SE19 1RX; 4.40pm Walker Briggs, 23 Westow Hill SE19 1TQ; 5.30pm Faber Fox, 25-27 Westow Hill SE19 1TQ; 6.20pm Westow House, 79 Westow Hill SE19 1TX. 6 Jul. Branch Social at SIBA Beer Fest, The Slade, Tonbridge TN9 1HR. Meet Orpington Stn. at 10.50am for 11.12 Train to Tonbridge. Venue about 10min walk from Tonbridge Stn. -

The European Conservatives and Reformists in the European Parliament

The European Conservatives and Reformists in the European Parliament Standard Note: SN/IA/6918 Last updated: 16 June 2014 Author: Vaughne Miller Section International Affairs and Defence Section Parties are continuing negotiations to form political groups in the European Parliament (EP) following European elections on 22-25 May 2014. This note looks at the future composition of the European Conservatives and Reformists group (ECR), which was established by UK Conservatives when David Cameron took them out of the centre-right EPP-ED group in 2009. It also considers what the entry of the German right-wing Alternative für Deutschland group into the ECR group might mean for Anglo-German relations. The ECR might become the third largest political group in the EP, although this depends on support for the Liberals and Democrats group. The final number and composition of EP political groups will not be known until after 24 June 2014, which is the deadline for registering groups. For detailed information on the EP election results, see Research paper 14/32, European Parliament Elections 2014, 11 June 2014. This information is provided to Members of Parliament in support of their parliamentary duties and is not intended to address the specific circumstances of any particular individual. It should not be relied upon as being up to date; the law or policies may have changed since it was last updated; and it should not be relied upon as legal or professional advice or as a substitute for it. A suitably qualified professional should be consulted if specific advice or information is required. -

Xerox University Microfilms 300 North Zoab Road Ann Arbor, Michigan 46106 7619623

INFORMATION TO USERS This material was produced from a microfilm copy of the original document. While the most advanced technological means to photograph and reproduce this document have been used, the quality is heavily dependent upon the quality of the original submitted. The following explanation of techniques is provided to help you understand markings or patterns which may appear on this reproduction. 1. The sign or "target" for pages apparently lacking from the document photographed is "Missing Page(s)". If it was possible to obtain the missing page(s) dr section, they are spliced into the film along with adjacent pages, This may have necessitated cutting thru an image and duplicating adjacent pages to insure you complete continuity. 2. When an image on the film is obliterated with a large round black mark, it is an indication that the photographer suspected that the copy may have moved during exposure and thus cause a blurred image. You will find a good image of the page in the adjacent frame. 3. When a map, drawing or chart, etc., was part of the material being photographed the photographer followed a definite method in "sectioning" the material. It is customary to begin photoing at the upper left hand corner of a large sheet and to continue photoing from left to right in equal sections with a small overlap. If necessary, sectioning is continued again — beginning below the first row and continuing on until complete. 4. The majority of users indicate that the textual content is of greatest value, however, a somewhat higher quality reproduction could be made from "photographs" if essential to the understanding of the dissertation. -

Exclusive Interview with Dr

State: A Last Resort for People Exclusive interview with Dr. Syed Kamall Conducted by Bardia Garshasbi in London– July 2014 Syed Kamall is a phenomenon in his own right. He comes from an ordinary Guyanese family who migrated to London in mid-1950s. His father was a bus driver, and now at the age of 47, he is the leader of the Britain’s Conservative Party in the European Parliament, representing the people of London in that parliament since 2005, when he was only 38! Having degrees from some of the world’s most prestigious universities (London School of Economics and City University London) Dr. Kamall also chairs the European Conservatives and Reformist Group, the EU Parliament’s third largest group with 70 MEPs who are campaigning for urgent reform of the EU and of whom Syed is, as he proudly puts it, “the first group leader from an ethnic minority and the first Muslim ever”. Dr. Kamall is one of the few successful and highly educated politicians in Europe who does not come from an elite background or rich family. His path to success has been uphill and demanding. He has firsthand knowledge of the challenges low-income families are facing in their daily lives. That is why the relief of poverty has always been a top priority for him. He works tirelessly to find ways to tackle poverty by educating the young and by creating incentives and equal opportunities for hard- working people. His constituents in London and his peers in both Britain and Europe know him for his intelligence, decency, and eloquence. -

Members of the House of Commons December 2019 Diane ABBOTT MP

Members of the House of Commons December 2019 A Labour Conservative Diane ABBOTT MP Adam AFRIYIE MP Hackney North and Stoke Windsor Newington Labour Conservative Debbie ABRAHAMS MP Imran AHMAD-KHAN Oldham East and MP Saddleworth Wakefield Conservative Conservative Nigel ADAMS MP Nickie AIKEN MP Selby and Ainsty Cities of London and Westminster Conservative Conservative Bim AFOLAMI MP Peter ALDOUS MP Hitchin and Harpenden Waveney A Labour Labour Rushanara ALI MP Mike AMESBURY MP Bethnal Green and Bow Weaver Vale Labour Conservative Tahir ALI MP Sir David AMESS MP Birmingham, Hall Green Southend West Conservative Labour Lucy ALLAN MP Fleur ANDERSON MP Telford Putney Labour Conservative Dr Rosena ALLIN-KHAN Lee ANDERSON MP MP Ashfield Tooting Members of the House of Commons December 2019 A Conservative Conservative Stuart ANDERSON MP Edward ARGAR MP Wolverhampton South Charnwood West Conservative Labour Stuart ANDREW MP Jonathan ASHWORTH Pudsey MP Leicester South Conservative Conservative Caroline ANSELL MP Sarah ATHERTON MP Eastbourne Wrexham Labour Conservative Tonia ANTONIAZZI MP Victoria ATKINS MP Gower Louth and Horncastle B Conservative Conservative Gareth BACON MP Siobhan BAILLIE MP Orpington Stroud Conservative Conservative Richard BACON MP Duncan BAKER MP South Norfolk North Norfolk Conservative Conservative Kemi BADENOCH MP Steve BAKER MP Saffron Walden Wycombe Conservative Conservative Shaun BAILEY MP Harriett BALDWIN MP West Bromwich West West Worcestershire Members of the House of Commons December 2019 B Conservative Conservative