What Is a Codec?

Recommended publications

Sony Recorder

Sony Recorder. www.ctlny.com, page 24. All prices subject to change. DVCAM, J-Series, Portable & Betacam Recorders. Dimensions given in the featured panel: 8-3/8 x 5-1/8 x 16-5/8 and 16-7/8 x 7-5/8 x 19-3/8.

| Category | Model # | Description | List | Sales price |
| --- | --- | --- | --- | --- |
| DVCAM Recorders | DSR1500A | Editing recorder, also plays DVCPRO; SDI-YUV optional | $7,245.00 | $5,680.08 |
| DVCAM Recorders | DSR1600 | Edit Player w/ DVCPRO playback, RS-422 & DV Output | $6,975.00 | $5,468.40 |
| DVCAM Recorders | DSR1800 | Edit Recorder w/ DVCPRO playback, RS422 & DV Output | $9,970.00 | $7,816.48 |
| DVCAM Recorders | DSR2000 | DVCAM/DVCPRO Recorder w/ Motion Control, SDI/RS422 | $15,750.00 | $13,229.44 |
| DVCAM Recorders | DSR2000P | PAL DVCAM/DVCPRO - | $1,770.00 | $14,868.0 |
| J-Series Betacam Recorders | J1/901 | Beta/SP/SX Player w/ Component Output | $6,025.00 | $5,735.80 |
| J-Series Betacam Recorders | J1/902 | Beta/SP/SX Editing Player w/ SDI Output | $7,050.00 | $6,711.60 |
| J-Series Betacam Recorders | J2/901 | IMX/SP/SX Editing Player w/ Component Output | $10,175.00 | $9,686.60 |
| J-Series Betacam Recorders | J2/902 | IMX/SP/SX Editing Player w/ SDI Output | $11,400.00 | $10,852.80 |
| J-Series Betacam Recorders | J3/901 | - | $12,400.00 | $11,804.8 |
| SP Betacam Recorders | PVW2600 | Betacam SP Video Editing Player with TBC & TC | $15,540.00 | $12,183.36 |
| SP Betacam Recorders | PVW2650 | Betacam SP Editing Player w. Dynamic Tracking, TBC & TC | $22,089.00 | $17,317.78 |
| SP Betacam Recorders | PVW2800 | Betacam SP Video Editing Recorder with TBC & TC | $23,199.00 | $18,188.02 |
| SP Betacam Recorders | UVW1200 | Betacam Player w/ RGB & Auto Repeat Function | $6,634.00 | $5,572.56 |
| SP Betacam Recorders | UVW1400A | - | $8,988.00 | $7,549.92 |

V-Tune Pro 4K AV Tuner Delivers Exceptional HD Tuning Solutions

V-Tune Pro 4K AV Tuner Delivers Exceptional HD Tuning Solutions. Corporate • Hotels • Conference Centers • Higher Education. 800.245.4964 | [email protected] | www.westpennwire.com. Tune Into HDTV: 4K Distribution AV Tuner Provides HDMI 2.0, 1G BaseT Port & 3-Year Warranty.

The V-Tune Pro 4K offers a 4K2K high definition solution for integrated systems that conform to NTSC (North America) or PAL (Europe) standards, or QAM schemes, and feature: ATSC Receivers, DVB Devices, IPTV Systems. The tuner is capable of decoding video in these formats with resolutions up to 4K@60Hz via RF & LAN: H.264, H.265, MPEG2, MPEG4, VC-1. The V-Tune Pro-4K AV Tuner may be integrated with these types of controls: IP-Based, IR, RS-232.

Video outputs are HDMI 2.0 to reflect the latest in connectivity technology, and composite YPbPr video to accommodate legacy systems. Audio is provided through unbalanced stereo RCA connectors, as well as through S/PDIF and optical fiber connectors. The V-Tune Pro 4K’s versatility enables it to fit with almost any integrated system’s topology. It’s the only tuner on the market which can support a wide range of standards, formats, controls and connectivity technologies cost-effectively.

More Features to Maximize Functionality: The V-Tune Pro-4K AV Tuner has many characteristics that make it ideal for any environment requiring superior HD tuning solutions.

| Feature | V-TUNEPRO-4K | 232-ATSC-4 |
| --- | --- | --- |
| 4k x 2k Resolution | YES | NO (1080P Max) |
| PAL | YES | NO |
| DVB-T | YES | NO |
| ISDB-T | YES | NO |
| IPTV | YES (UDP/RTP/RTSP Multicast & Unicast) | NO |

1G LAN PORT -

Avid Supported Video File Formats

Avid Supported Video File Formats, 4/7/2021. Table of Contents: Common Industry Formats; Application & Device-Generated Formats; Stereoscopic 3D Video Formats. Quick Lookup of Common File Formats: ARRI -

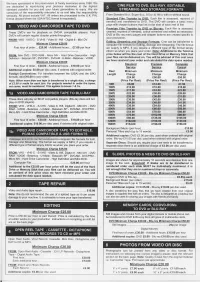

Minimum Charge F25.00 Minimum Charge €20.00

We have specialised in the preservation of family memories since 1988. We are dedicated to reproducing your precious memories to the highest standard possible for you and your future generations to enjoy. We are conscious of the responsibility entrusted to us and take this responsibility seriously. All prices include VAT. All orders are processed to the U.K. PAL format (except where the USA NTSC format is requested).

From Standard 8mm, Super 8mm, 9.5mm & 16mm, with or without sound. Standard Film Transfer to DVD: Each film is assessed, repaired (if needed) and transferred to DVD. The DVD will contain a basic menu page with chapter buttons that link to the start of each reel of film. Premium Film Transfer to DVD or Blu-ray: Each film is assessed, cleaned, repaired (if needed), colour corrected and edited as necessary. DVD or Blu-ray menu pages and chapter buttons are created specific to your production. Editing, Streaming and Storage Formats: Cine can be transferred to computer file formats for Editing, Storage and Streaming. The file format we supply is MP4; if you require a different type of file format, simply inform us when you place your order. Note: Additional to the Telecine price below will be the cost of the Hard Drive or Memory Stick that your files can be delivered on. You will be advised of this cost once we have received your order and calculated the data space needed.

These DVDs are for playback on DVD-R compatible players. Your DVDs will contain regular chapter points throughout. FROM: VHS - VHS-C - S-VHS - Video8 - Hi8 - Digital8 - MiniDV. Minimum Charge €20.00. First hour of order: €20.00. Additional hours: €7.00 per hour. FROM: Mini DVD - DVD RAM - MicroMV - Hard Drive Camcorder - High Definition - Betacam SP - DVCPRO - DVCAM - U-Matic - Betamax - V2000. -

Fast Cosine Transform to Increase Speed-Up and Efficiency of Karhunen-Loève Transform for Lossy Image Compression

Fast Cosine Transform to increase speed-up and efficiency of Karhunen-Loève Transform for lossy image compression. Mario Mastriani and Juliana Gambini.

Abstract: In this work, we present a comparison between two techniques of image compression. In the first case, the image is divided in blocks which are collected according to zig-zag scan. In the second one, we apply the Fast Cosine Transform to the image, and then the transformed image is divided in blocks which are collected according to zig-zag scan too. Later, in both cases, the Karhunen-Loève transform is applied to mentioned blocks. On the other hand, we present three new metrics based on eigenvalues for a better comparative evaluation of the techniques. Simulations show that the combined version is the best, with minor Mean Absolute Error (MAE) and Mean Squared Error (MSE), higher Peak Signal to Noise Ratio (PSNR) and better image quality.

Several authors have tried to combine the DCT with the KLT but with questionable success [1], with particular interest to multispectral imagery [30, 32, 34]. In all cases, the KLT is used to decorrelate in the spectral domain. All images are first decomposed into blocks, and each block uses its own KLT instead of one single matrix for the whole image. In this paper, we use the KLT for a decorrelation between sub-blocks resulting of the applications of a DCT with zig-zag scan, that is to say, in the spectral domain. We introduce in this paper an appropriate sequence, decorrelating first the data in the spatial domain using the DCT -
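The error metrics named in this abstract (MAE, MSE, PSNR) have standard definitions, which the following minimal sketch computes for two images given as flat lists of pixel values. The function names and the default peak value of 255 (8-bit pixels) are assumptions made for illustration; the paper's three eigenvalue-based metrics are not reproduced here.

```python
import math


def mae(original, decoded):
    """Mean Absolute Error between two equal-length pixel sequences."""
    return sum(abs(a - b) for a, b in zip(original, decoded)) / len(original)


def mse(original, decoded):
    """Mean Squared Error between two equal-length pixel sequences."""
    return sum((a - b) ** 2 for a, b in zip(original, decoded)) / len(original)


def psnr(original, decoded, peak=255.0):
    """Peak Signal to Noise Ratio in dB; infinite when the images are identical."""
    err = mse(original, decoded)
    if err == 0:
        return math.inf
    return 10.0 * math.log10(peak ** 2 / err)


if __name__ == "__main__":
    reference = [0, 0, 0, 0]
    compressed = [0, 0, 0, 2]
    print(mae(reference, compressed), mse(reference, compressed))
    print(psnr(reference, compressed))
```

Lower MAE and MSE and higher PSNR indicate a reconstruction closer to the original, which is the sense in which the abstract compares the two techniques.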

Shop Fullcompass.Com Today! for Expert Advice - Call: 800-356-5844 M-F: 9:00-5:30 Central 412 VIDEO PLAYERS / RECORDERS

DVD / BLU-RAY PLAYERS AND RECORDERS. VIDEO PLAYERS / RECORDERS 411.

NEW! ATOMOS NINJA STAR POCKET-SIZE APPLE PRORES RECORDER AND DECK. Designed for action cameras, drones and mirrorless DSLR’s, this pocket-size Apple ProRes recorder delivers better quality (no MPEG compression) and huge workflow timesavings. Ultra-long battery life with tiny size and weight make this unit ideal for situations requiring portability, reliability and long power draws. Captures video in select HD/SD recording formats via HDMI, with 2-channel audio from camera and/or analog input. Edit-ready media files are recorded directly to CFast 1.0 memory card. Standard mounting plate included. Items (item, description, price): ATO-NINJA-STAR, Pocket-Size ProRes Recorder & Deck, 295.00; ATO-MCFT064, 64GB CFast Memory Card, 159.00; ATO-MCFT128 -

TASCAM DV-D01U RACKMOUNTABLE SINGLE DISC DVD PLAYER. The DV-D01U is a rackmountable single-disc player with a powered loading tray. The player reads DVD video, DVD audio (2-channel only), video CD, CD-DA, MP3, WMA, .WAV, JPEG, ASF, MPEG-2/MPEG-1, and DivX. It is capable of playing back properly finalized DVD +R/+RW discs. Outputs consist of HDMI audio/video out and composite, S-Video, and component video outputs. Rear panel stereo outputs are unbalanced (RCA) and digital outputs are optical. Digital outputs can pass Dolby Digital and DTS surround streams for later decoding. The DV-D01U provides extensive bi-directional RS232 serial control and an I/R remote control is included. Items (item, description, price): DVD01U, Rackmountable DVD player, 1RU, 369.99.

Prices 06-2021

Prices (exc. VAT), June 2021. Contents: 4K HDR color grading; 5.1 mixing; Subtitling; 2K/4K/3D DCPs; Broadcast-ready files / IMF Package / Dolby E; DVD & 2D/3D/4K Blu-ray authoring; DVD duplication; Blu-ray duplication; Dailies management / 3D & HDR encoding; LTO archiving and restore. Have a question or want a quote? Write to us: [email protected] Registration: 492432703 - VAT ID: FR52492432703

4K HDR color grading

| Color grading station | Half-day (4h) | Full day (8h) | Week (5d) |
| --- | --- | --- | --- |
| Rental of 4K HDR DaVinci Resolve station with senior colorist's services | € 550 | € 990 | € 4 900 |
| Rental of 4K HDR DaVinci Resolve station without senior colorist's services | € 270 | € 500 | € 2 450 |

Delivery of DaVinci Resolve source project to customer: Included. Video export Apple ProRes or DNxHR: Included.

| Color grading peripherals | Week (5d) |
| --- | --- |
| Rental of HDR Eizo CG3145 Prominence monitor | € 900 |
| Rental of DaVinci Resolve Mini Panel | € 300 |
| Transport costs (both ways, mandatory, only available in Paris, Hauts-de-Seine, Seine-Saint-Denis and Val-de-Marne) | € 100 |

Roundtrip workflow (Final Cut Pro, Media Composer, Premiere Pro), € 90 / hour: organisation of the timeline and export to Final Cut Pro 7/X, Avid Media Composer or Adobe Premiere Pro; offline reference clip with burned-in timecode after export; project import and check on DaVinci Resolve.

The powerful color grading station: Blackmagic Design's DaVinci Resolve Studio 17 on Linux (+Neat plugin). AMD Ryzen Threadripper 64 cores / nVidia RTX 3090 24 GB. Best 4K DCI 10-bit HDR mastering display monitor on an Eizo CG3145 Prominence (meeting Dolby Vision and Netflix standards). Display calibration by a VTCAM expert to Rec. -

Capabilities of the Horchow Auditorium and the Orientation

Performance Capabilities of Horchow Auditorium and Atrium at the Dallas Museum of Art

Horchow Auditorium. Capacity and Stage: The auditorium seats 333 people (with an option of 12 removable chairs in the back, maxing out the capacity at 345). The stage is 45’ x 18’ and the screen is 27’ x 14’. A height-adjustable podium, microphone, podium clock and light are standard equipment available.

Installed/Available Equipment. Sound: 24 channel sound board; 4 stage monitors (with up to 4 Mixes); 6 hardwired microphones; 4 wireless lavaliere microphones; 2 handheld wireless microphones (with headphone option); 9-foot Steinway Concert Grand Piano; 3 Bose towers (these have been requested by Acoustic performers before and work very well); Music stands. Lighting: 24 fixed lights; 5 movers (these give a wide array of lighting looks). Projection: Panasonic PTRQ32 4K 20,000 Lumen Laser Projector.

Preferred Video Formats in Horchow: Blu Ray; DVD; Apple ProRes 4:2:2 Standard in a .mov wrapper; H.264 in a .mov wrapper. Formats we can use, but are not optimal: MPEG-1/2; Dirac / VC-2; DivX® (1/2/3/4/5/6); MJPEG (A/B); MPEG-4 ASP; WMV 1/2; XviD; WMV 3 / WMV-9 / VC-1; 3ivX D4; Sorenson 1/3; H.261/H.263 / H.263i; DV; H.264 / MPEG-4 AVC; On2 VP3/VP5/VP6; Cinepak; Indeo Video v3 (IV32); Theora; Real Video (1/2/3/4).

Atrium. Capacity and Stage: The Atrium seats up to 500 people (chair rental required). The stage available to be installed in the Atrium is 16’ x 12’ x 1’. -

Reviewer's Guide

Episode® 6.5: Affordable transcoding for individuals and workgroups. Multiformat encoding software with uncompromising quality, speed and control. The Episode Product Guide is designed to provide an overview of the features and functions of Telestream’s Episode products. This guide also provides product information, helpful encoding scenarios and other relevant information to assist in the product review process. Please review this document along with the associated Episode User Guide, which provides complete product details. Telestream provides this guide for informational purposes only; it is not a product specification. The information in this document is subject to change at any time. Contents: Episode Overview (Episode, $495 USD; Episode Pro, $995 USD; Episode Engine, $4995 USD); Key Benefits; Features (Highest quality -

(A/V Codecs) REDCODE RAW (.R3D) ARRIRAW

What is a Codec? Codec is a portmanteau of either "Compressor-Decompressor" or "Coder-Decoder," and describes a device or program capable of performing transformations on a data stream or signal. Codecs encode a stream or signal for transmission, storage or encryption and decode it for viewing or editing. They are often used in videoconferencing and streaming media solutions. A video codec converts analog video signals from a video camera into digital signals for transmission, then converts the digital signals back to analog for display. An audio codec does the same for analog audio signals from a microphone, converting the digital signals back to analog for playback. The raw encoded form of audio and video data is often called essence, to distinguish it from the metadata that, together with the essence, makes up the information content of the stream, and from any "wrapper" data that is then added to aid access to or improve the robustness of the stream. Most codecs are lossy, in order to get a reasonably small file size. There are lossless codecs as well, but for most purposes the almost imperceptible increase in quality is not worth the considerable increase in data size. The main exception is data that will undergo more processing in the future, in which case repeated lossy encoding would damage the eventual quality too much. Many multimedia data streams need to contain both audio and video data, and often some form of metadata that permits synchronization of the audio and video. Each of these three streams may be handled by different programs, processes, or hardware; but for the multimedia data stream to be useful in stored or transmitted form, they must be encapsulated together in a container format. -
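The lossy/lossless distinction described above can be illustrated with a toy sketch. This is not a real audio or video codec: the function names, the use of signed 16-bit samples, and the 8-bit truncation step are all assumptions made for illustration, with Python's `zlib` standing in for a lossless codec.

```python
import struct
import zlib


def lossless_encode(samples):
    """Pack signed 16-bit samples and compress them without discarding data."""
    raw = struct.pack(f"<{len(samples)}h", *samples)
    return zlib.compress(raw)


def lossless_decode(blob):
    """Invert lossless_encode exactly."""
    raw = zlib.decompress(blob)
    return list(struct.unpack(f"<{len(raw) // 2}h", raw))


def lossy_encode(samples):
    """Toy lossy step: keep only the top 8 bits of each 16-bit sample."""
    return bytes((s >> 8) & 0xFF for s in samples)


def lossy_decode(blob):
    """Rebuild 16-bit samples; the discarded low 8 bits come back as zeros."""
    decoded = []
    for byte in blob:
        signed = byte - 256 if byte >= 128 else byte
        decoded.append(signed << 8)
    return decoded


if __name__ == "__main__":
    samples = [0, 1000, -1000, 32767, -32768, 12345]
    print(lossless_decode(lossless_encode(samples)) == samples)  # exact round trip
    print(lossy_decode(lossy_encode(samples)))  # close to, but not equal to, the input
```

The lossy path stores one byte per sample instead of two, at the cost of an error of up to 255 per sample that decoding cannot undo; the lossless path returns the input exactly.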

Adobe Trademark Database for General Distribution

Adobe Trademark List for General Distribution As of May 17, 2021 Please refer to the Permissions and trademark guidelines on our company web site and to the publication Adobe Trademark Guidelines for third parties who license, use or refer to Adobe trademarks for specific information on proper trademark usage. Along with this database (and future updates), they are available from our company web site at: https://www.adobe.com/legal/permissions/trademarks.html Unless you are licensed by Adobe under a specific licensing program agreement or equivalent authorization, use of Adobe logos, such as the Adobe corporate logo or an Adobe product logo, is not allowed. You may qualify for use of certain logos under the programs offered through Partnering with Adobe. Please contact your Adobe representative for applicable guidelines, or learn more about logo usage on our website: https://www.adobe.com/legal/permissions.html Referring to Adobe products Use the full name of the product at its first and most prominent mention (for example, “Adobe Photoshop” in first reference, not “Photoshop”). See the “Preferred use” column below to see how each product should be referenced. Unless specifically noted, abbreviations and acronyms should not be used to refer to Adobe products or trademarks. Attribution statements Marking trademarks with ® or TM symbols is not required, but please include an attribution statement, which may appear in small, but still legible, print, when using any Adobe trademarks in any published materials—typically with other legal lines such as a copyright notice at the end of a document, on the copyright page of a book or manual, or on the legal information page of a website. -

Opus, a Free, High-Quality Speech and Audio Codec

Opus, a free, high-quality speech and audio codec. Jean-Marc Valin, Koen Vos, Timothy B. Terriberry, Gregory Maxwell. 29 January 2014. Xiph.Org & Mozilla.

What is Opus?
● New highly-flexible speech and audio codec
– Works for most audio applications
● Completely free
– Royalty-free licensing
– Open-source implementation
● IETF RFC 6716 (Sep. 2012)

Why a New Audio Codec? http://xkcd.com/927/ http://imgs.xkcd.com/comics/standards.png

Why Should You Care?
● Best-in-class performance within a wide range of bitrates and applications
● Adaptability to varying network conditions
● Will be deployed as part of WebRTC
● No licensing costs
● No incompatible flavours

History
● Jan. 2007: SILK project started at Skype
● Nov. 2007: CELT project started
● Mar. 2009: Skype asks IETF to create a WG
● Feb. 2010: WG created
● Jul. 2010: First prototype of SILK+CELT codec
● Dec. 2011: Opus surpasses Vorbis and AAC
● Sep. 2012: Opus becomes RFC 6716
● Dec. 2013: Version 1.1 of libopus released

Applications and Standards (2010), application: codec
● VoIP with PSTN: AMR-NB
● Wideband VoIP/videoconference: AMR-WB
● High-quality videoconference: G.719
● Low-bitrate music streaming: HE-AAC
● High-quality music streaming: AAC-LC
● Low-delay broadcast: AAC-ELD
● Network music performance

Applications and Standards (2013), application: codec
● VoIP with PSTN: Opus
● Wideband VoIP/videoconference: Opus
● High-quality videoconference: Opus
● Low-bitrate music streaming: Opus
● High-quality music streaming: Opus
● Low-delay