Imagery Fundamentals

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

New Capabilities for All-Weather Microwave Atmospheric Sensing Using CubeSats and Constellations

SSC18-II-07 New Capabilities for All-Weather Microwave Atmospheric Sensing Using CubeSats and Constellations W. Blackwell, K. Clark, D. Cousins, D. Crompton, A. Cunningham, M. Diliberto, L. Fuhrman, R. Leslie, I. Osaretin, and S. Michael MIT Lincoln Laboratory [email protected] ABSTRACT Three MIT Lincoln Laboratory nanosatellite missions flying microwave radiometers for high-resolution atmospheric sensing are in varying stages of development. Microwave instrumentation is particularly well suited for implementation on a very small satellite, as the sensor requirements for power, pointing, and spatial resolution (aperture size) can be accommodated by a nanosatellite platform. The Microsized Microwave Atmospheric Satellite Version 2a (MicroMAS-2a) was launched on January 11, 2018 and has demonstrated temperature sounding using channels near 118 GHz and humidity sounding using channels near 183 GHz. A second MicroMAS-2 flight unit (MicroMAS-2b) will be launched in Fall 2018 as part of ELANA-XX. The Time-Resolved Observations of Precipitation structure and storm Intensity with a Constellation of Smallsats (TROPICS) mission was selected by NASA in 2016 as part of the Earth Venture–Instrument (EVI-3) program. The overarching goal for TROPICS is to provide nearly all-weather observations of 3-D temperature and humidity, as well as cloud ice and precipitation horizontal structure, at high temporal resolution to conduct high-value science investigations of tropical cyclones. TROPICS will provide rapid-refresh microwave measurements (median refresh rate of approximately 40 minutes for the baseline mission) over the tropics that can be used to observe the thermodynamics of the troposphere and precipitation structure for storm systems at the mesoscale and synoptic scale over the entire storm lifecycle.

Use of Hyperspectral/Multispectral Imaging in Gastroenterology

Journal of Clinical Medicine Review Use of Hyperspectral/Multispectral Imaging in Gastroenterology. Shedding Some–Different–Light into the Dark Samuel Ortega 1,* , Himar Fabelo 1 , Dimitris K. Iakovidis 2, Anastasios Koulaouzidis 3 and Gustavo M. Callico 1 1 Institute for Applied Microelectronics (IUMA), University of Las Palmas de Gran Canaria (ULPGC), Las Palmas de Gran Canaria 35017, Spain; [email protected] (H.F.); [email protected] (G.M.C.) 2 Dept. of Computer Science and Biomedical Informatics, University of Thessaly, 35131 Lamia, Greece; [email protected] 3 Endoscopy Unit, The Royal Infirmary of Edinburgh, Edinburgh EH16 4SA, UK; [email protected] * Correspondence: [email protected]; Tel.: +34-928-451-220 Received: 26 November 2018; Accepted: 26 December 2018; Published: 1 January 2019 Abstract: Hyperspectral/Multispectral imaging (HSI/MSI) technologies are able to sample from tens to hundreds of spectral channels within the electromagnetic spectrum, exceeding the capabilities of human vision. These spectral techniques are based on the principle that every material has a different response (reflection and absorption) to different wavelengths. Thereby, this technology facilitates the discrimination between different materials. HSI has demonstrated good discrimination capabilities for materials in fields such as remote sensing, pollution monitoring, field surveillance, food quality, agriculture, astronomy, geological mapping, and currently, also in medicine. HSI technology allows tissue observation beyond the limitations of the human eye. Moreover, many researchers are using HSI as a new diagnosis tool to analyze optical properties of tissue. Recently, HSI has shown good performance in identifying human diseases in a non-invasive manner. In this paper, we show the potential use of these technologies in the medical domain, with emphasis on the current advances in gastroenterology.

Multispectral Imaging for Medical and Industrial Machine Vision Systems

Tech Guide: Multispectral imaging for medical and industrial machine vision systems

Table of contents: Introduction; Chapter 1: What is multispectral imaging?; Chapter 2: Multispectral imaging applications; Chapter 3: Multispectral camera technologies; Chapter 4: Key considerations when selecting camera technology for multispectral imaging; Chapter 5: Hyperspectral and the future of multispectral imaging

Introduction: Just as machine vision systems have evolved from traditional monochrome cameras to many systems that now utilize full color imaging information, there has also been an evolution from systems that only captured broadband images in the visible spectrum, to those that can utilize targeted spectral bands in both visible and non-visible spectral regions to perform more sophisticated inspection and analysis. The color output of the cameras used in the machine vision industry today is largely based on Bayer-pattern or trilinear sensor technology. But imaging is moving well beyond conventional color where standard RGB is not enough to carry out inspection tasks. Some applications demand unconventional RGB wavelength bands while others demand a combination of visible and non-visible wavelengths. Others require exclusively non-visible wavelengths such as UV, NIR or SWIR, with no wavebands in the visible spectrum. Complex metrology and imaging applications are beginning to demand higher numbers of spectral channels or possibilities to select application-specific spectral filtering at high inspection throughputs. With the traditional machine vision industry merging with intricate measurement technologies, consistent, reliable, high-fidelity color and multispectral imaging are playing key roles in industrial quality control.

User's Manual for Nuance 3.0.2 Notice

Nuance MULTISPECTRAL IMAGING SYSTEM User's Manual for Nuance 3.0.2

Notice: The information in this document is subject to change without notice and should not be construed as a commitment by PerkinElmer, Inc. PerkinElmer assumes no responsibility for any errors that may appear in this document. This manual is believed to be complete and accurate at the time of publication. In no event shall PerkinElmer be liable for incidental or consequential damages in connection with or arising from the use of this manual. This manual describes system operation using Nuance version 3.0.2 software. For more information contact: PerkinElmer, Inc., 68 Elm Street, Hopkinton, MA 01748, USA. Phone: 800-762-4000 or +1 203-925-4602. Fax: +1 203-944-4904. Email: [email protected] Web site: http://www.perkinelmer.com US Patent 5,892,612; 5,953,087; 7,655,898; and patents pending. Document Part No. 130765 Rev. 01

Table of Contents: Part I Welcome To Nuance; Part II Introduction to Multispectral Imaging: 1 Light; 2 Human Perception of Light Intensity and of Color; 3 Light Absorbance and Reflection; 4 Fluorescence

Classification of Compressed Remote Sensing Multispectral Images Via

Journal of Imaging Article Classification of Compressed Remote Sensing Multispectral Images via Convolutional Neural Networks Michalis Giannopoulos 1,2,*,† , Anastasia Aidini 1,2,† , Anastasia Pentari 1,2 , Konstantina Fotiadou 1,2 and Panagiotis Tsakalides 1,2 1 Signal Processing Lab (SPL), Institute of Computer Science, Foundation for Research and Technology-Hellas (FORTH), 70013 Crete, Greece; [email protected] (A.A.); [email protected] (A.P.); [email protected] (K.F.); [email protected] (P.T.) 2 Computer Science Department, University of Crete, 70013 Crete, Greece * Correspondence: [email protected] † These authors contributed equally to this work. Received: 17 January 2020; Accepted: 15 April 2020; Published: 18 April 2020 Abstract: Multispectral sensors constitute a core Earth observation image technology generating massive high-dimensional observations. To address the communication and storage constraints of remote sensing platforms, lossy data compression becomes necessary, but it unavoidably introduces unwanted artifacts. In this work, we consider the encoding of multispectral observations into high-order tensor structures which can naturally capture multi-dimensional dependencies and correlations, and we propose a resource-efficient compression scheme based on quantized low-rank tensor completion. The proposed method is also applicable to the case of missing observations due to environmental conditions, such as cloud cover. To quantify the performance of compression, we consider both typical image quality metrics as well as the impact on state-of-the-art deep learning-based land-cover classification schemes. Experimental analysis on observations from the ESA Sentinel-2 satellite reveals that even minimal compression can have negative effects on classification performance which can be efficiently addressed by our proposed recovery scheme.

Post-Processing of VIS, NIR, and SWIR Multispectral Images of Paintings

heritage Article Post-Processing of VIS, NIR, and SWIR Multispectral Images of Paintings. New Discovery on The Drunkenness of Noah, Painted by Andrea Sacchi, Stored at Palazzo Chigi (Ariccia, Rome) Lucilla Pronti 1,*,†, Martina Romani 1,*,†, Gianluca Verona-Rinati 2,*,†, Ombretta Tarquini 3,*,†, Francesco Colao 4,†, Marcello Colapietro 3,†, Augusto Pifferi 3,†, Mariangela Cestelli-Guidi 1,† and Marco Marinelli 2,† 1 INFN-Laboratori Nazionali di Frascati, Via E. Fermi 40, 00044 Frascati, Italy 2 INFN-Dipartimento di Ingegneria Industriale, Università degli Studi di Roma Tor Vergata, Department of Industrial Engineering, Via del Politecnico 1, 00133 Roma, Italy 3 C.N.R. Istituto di Cristallografia - Area della Ricerca Roma 1, Via Salaria Km 29300, 00015 Monterotondo (RM), Italy 4 ENEA, Italian National Agency for New Technologies, Energy and Sustainable Economic Development, Via E. Fermi 45, 00044 Frascati (RM), Italy * Correspondence: [email protected] (L.P.); [email protected] (M.R.); [email protected] (G.V.-R.); [email protected] (O.T.) † These authors contributed equally to this work. Received: 30 June 2019; Accepted: 30 July 2019; Published: 2 August 2019 Abstract: IR Reflectography applied to the identification of hidden details of paintings is extremely useful for authentication purposes and for revealing technical hidden features. Recently, multispectral imaging has replaced traditional imaging techniques thanks to the possibility to select specific spectral ranges bringing out interesting details of the paintings. VIS–NIR–SWIR images of one of the versions of The Drunkenness of Noah painted by Andrea Sacchi, acquired with a modified reflex and InGaAs cameras, are presented in this research.

Fundamentals of Remote Sensing

Fundamentals of Remote Sensing. A Canada Centre for Remote Sensing Remote Sensing Tutorial. Natural Resources Canada / Ressources naturelles Canada

Table of Contents

1. Introduction: 1.1 What is Remote Sensing?; 1.2 Electromagnetic Radiation; 1.3 Electromagnetic Spectrum; 1.4 Interactions with the Atmosphere; 1.5 Radiation - Target; 1.6 Passive vs. Active Sensing; 1.7 Characteristics of Images; 1.8 Endnotes; Did You Know; Whiz Quiz and Answers

2. Sensors: 2.1 On the Ground, In the Air, In Space; 2.2 Satellite Characteristics; 2.3 Pixel Size, and Scale; 2.4 Spectral Resolution; 2.5 Radiometric Resolution; 2.6 Temporal Resolution; 2.7 Cameras and Aerial Photography; 2.8 Multispectral Scanning; 2.9 Thermal Imaging; 2.10 Geometric Distortion; 2.11 Weather Satellites; 2.12 Land Observation Satellites; 2.13 Marine Observation Satellites; 2.14 Other Sensors; 2.15 Data Reception; 2.16 Endnotes; Did You Know; Whiz Quiz and Answers

3. Microwaves: 3.1 Introduction; 3.2 Radar Basic; 3.3 Viewing Geometry & Spatial Resolution; 3.4 Image distortion; 3.5 Target interaction; 3.6 Image Properties; 3.7 Advanced Applications; 3.8 Polarimetry; 3.9 Airborne vs Spaceborne; 3.10 Airborne & Spaceborne Systems; 3.11 Endnotes; Did You Know; Whiz Quiz and Answers

4.

Multispectral Image Feature Points

Sensors 2012, 12, 12661-12672; doi:10.3390/s120912661 OPEN ACCESS sensors ISSN 1424-8220 www.mdpi.com/journal/sensors Article Multispectral Image Feature Points Cristhian Aguilera 1,*, Fernando Barrera 2, Felipe Lumbreras 2,3, Angel D. Sappa 2 and Ricardo Toledo 2,3 1 Department of Electrical and Electronics Engineering, Collao 1202, University of Bío-Bío, 4051381 Concepción, Chile 2 Computer Vision Center, Edifici O, Campus UAB, 08193 Bellaterra, Barcelona, Spain; E-Mails: [email protected] (F.B.); [email protected] (F.L.); [email protected] (A.D.S.); [email protected] (R.T.) 3 Computer Science Department, Edifici O, Campus UAB, 08193 Bellaterra, Barcelona, Spain * Author to whom correspondence should be addressed; E-Mail: [email protected]; Tel.: +56-41-311-1287; Fax: +56-41-311-1013. Received: 6 July 2012; in revised form: 24 August 2012 / Accepted: 30 August 2012 / Published: 17 September 2012 Abstract: This paper presents a novel feature point descriptor for the multispectral image case: Far-Infrared and Visible Spectrum images. It allows matching interest points on images of the same scene but acquired in different spectral bands. Initially, points of interest are detected on both images through a SIFT-like based scale space representation. Then, these points are characterized using an Edge Oriented Histogram (EOH) descriptor. Finally, points of interest from multispectral images are matched by finding nearest couples using the information from the descriptor. The provided experimental results and comparisons with similar methods show both the validity of the proposed approach as well as the improvements it offers with respect to the current state-of-the-art.

Outcome Budget of the Department of Space Government of India 2013-2014 Contents

OUTCOME BUDGET OF THE DEPARTMENT OF SPACE GOVERNMENT OF INDIA 2013-2014

CONTENTS: Executive Summary; Chapter I: Introduction (Organisational Set-up, Major Projects/Programmes of Department of Space, Overview of 12th Five Year Plan 2012-2017 proposals, Mandate and Policy framework of Department of Space); Chapter II: Outcome Budget 2013-2014; Chapter III: Reform measures and Policy initiatives; Chapter IV: Review of Performance of the Major ongoing Projects/Programmes/Centres of DOS/ISRO; Chapter V: Financial Review; Chapter VI: Autonomous Bodies of DOS/ISRO; Annexure: Major Indian Space Missions

EXECUTIVE SUMMARY

i. The primary objective of the Indian Space Programme is to achieve self-reliance in Space Technology and to evolve application programmes to meet the developmental needs of the country. Towards meeting this objective, two major operational space systems have been established: the Indian National Satellite (INSAT) for telecommunication, television broadcasting and meteorological service, and the Indian Remote Sensing Satellite (IRS) for natural resource monitoring and management. Two operational launch vehicles, Polar Satellite Launch Vehicle (PSLV) and Geosynchronous Satellite Launch Vehicle (GSLV), provide self-reliance in launching IRS and INSAT Satellites respectively.

ii. The Department of Space (DOS) and the Space Commission were set up in 1972 to formulate and implement Space policies and programmes in the country. The Indian Space Research Organisation (ISRO) is the research and development wing of the Department of Space and is responsible for executing the programmes and schemes of the Department in accordance with the directives and policies laid down by the Space Commission and the DOS.

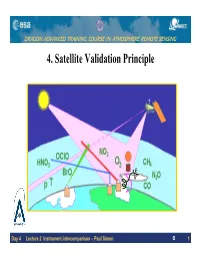

Instrument Intercomparison – Paul Simon, DRAGON Advanced Training Course in Atmosphere Remote Sensing: Atmospheric Remote Sensing Measurements

DRAGON ADVANCED TRAINING COURSE IN ATMOSPHERE REMOTE SENSING. Day 4 Lecture 2: Instrument intercomparison – Paul Simon

4. Satellite Validation Principle

Atmospheric Remote Sensing Measurements
• Atmosphere is continuously changing in time and space. => No repeated measurements of the same quantity.
• Radiation field measurements are direct, all others are indirect.
• Measurements probe large atmospheric volume => Large averaging, and validation by in situ measurements is difficult.
Courtesy Erkki Kyrola

Atmospheric Satellite Sensors
We launch a satellite sensor for dedicated purposes: Meteorology, dynamical tracer, surface UV monitoring; Global climatology; Montreal and Kyoto Protocol related issues; Atmospheric processes: polar chemistry, etc.; Tropospheric issues like pollution, biomass burning, oxidizing capacity…; Radiative Transfer and Chemical Transport modelling.

Satellite Validation Principles
Ideally, the observations of atmospheric constituents must not be dependent on:
• the atmospheric temperature;
• the abundance of other species;
• the Sun elevation;
• the presence of clouds;
• the instrument degradation (aging);
• etc…

Helsinki University of Technology

Aalto University School of Science Degree Programme in Industrial Engineering and Management Timo Tuomivirta Energy management in neighbourhoods Master’s Thesis Espoo, 07/06/2016 Supervisor: Professor Jan Holmström Instructor: M.Sc. Olli Nummelin Title of thesis Energy management in neighbourhoods Degree programme Industrial Engineering and Management Major Industrial Management Code of major TU-22 Supervisor Professor Jan Holmström Thesis advisor M.Sc. Jan Holmström Date 07.06.2016 Number of pages 115+67 Language English The topic of the thesis was to structure, refine, combine, analyse, and model data from real estate. Possibilities to adjust the relationship of utilities demand and supply were evaluated. The information collected from real estate was composed of real estate level and apartment level data. Real estate level data included weather, heating circuit, cooling circuit and domestic water. The apartment level data included room temperature switch, room operating panel and domestic hot and cold water consumption. The apartment specific data were aggregated on real estate level. First the interrelation of sub meters and utility companies' billing was formed. After that the differences between measurements and company billing were evaluated. Then system specifically on certain time resolution the temperature of supply and return water, electricity consumption, heat energy consumption and cool energy consumption were determined. Hot water, cold water and overall water consumption on certain time resolution were determined. Furthermore, the seasonal ratio of hot and cold water was evaluated. From cold water station related data sample width specific energy efficiency ratio were calculated. From room temperature switch and room operating panel real estate level analysis were aggregated.

The Technologies That Could Prevent More Mysteries Like That of Missing Malaysia Airlines Flight 370 Page 20

July-August 2014 The technologies that could prevent more mysteries like that of missing Malaysia Airlines Flight 370 Page 20 Hypersonics after WaveRider p. 10 40 tons on a dime at Mars p. 36 A PUBLICATION OF THE AMERICAN INSTITUTE OF AERONAUTICS AND ASTRONAUTICS AIAA Progress in Astronautics and Aeronautics AIAA’s popular book series Progress in Astronautics and Aeronautics features books that present a particular, well-defined subject reflecting advances in the fields of aerospace science, engineering, and/or technology. POPULAR TITLES Tactical and Strategic Missile Guidance, Sixth Edition Paul Zarchan 1026 pages This best-selling title provides an in-depth look at tactical and strategic missile guidance using common language, notation, and perspective. The sixth edition includes six new chapters on topics related to improving missile guidance system performance and understanding key design concepts and tradeoffs. ISBN: 978-1-60086-894-8 List Price: $134.95 “AIAA Best Seller” AIAA Member Price: $104.95 Morphing Aerospace Vehicles and Structures John Valasek 286 pages Morphing Aerospace Vehicles and Structures is a synthesis of the relevant disciplines and applications involved in the morphing of fixed wing flight vehicles. The book is organized into three major sections: Bio-Inspiration; Control and Dynamics; and Smart Materials and Structures. Most chapters are both tutorial and research-oriented in nature, covering elementary concepts through advanced – and in many cases novel – methodologies. ISBN: 978-1-60086-903-7 “Features the