A Trillion Coral Reef Colors: Deeply Annotated Underwater Hyperspectral Images for Automated Classification and Habitat Mapping

Total Pages: 16

File Type: pdf, Size: 1020 Kb


Recommended publications


Reef Sponges of the Genus Agelas (Porifera: Demospongiae) from the Greater Caribbean

Zootaxa 3794 (3): 301–343. ISSN 1175-5326 (print edition), ISSN 1175-5334 (online edition). www.mapress.com/zootaxa/ Article. Copyright © 2014 Magnolia Press. http://dx.doi.org/10.11646/zootaxa.3794.3.1 http://zoobank.org/urn:lsid:zoobank.org:pub:51852298-F299-4392-9C89-A6FD14D3E1D0 Reef sponges of the genus Agelas (Porifera: Demospongiae) from the Greater Caribbean. FERNANDO J. PARRA-VELANDIA1,2, SVEN ZEA2,4 & ROB W. M. VAN SOEST3. 1St John's Island Marine Laboratory, Tropical Marine Science Institute (TMSI), National University of Singapore, 18 Kent Ridge Road, Singapore 119227. E-mail: [email protected] 2Universidad Nacional de Colombia, Sede Caribe, Centro de Estudios en Ciencias del Mar (CECIMAR); c/o INVEMAR, Calle 25 2-55, Rodadero Sur, Playa Salguero, Santa Marta, Colombia. E-mail: [email protected] 3Netherlands Centre for Biodiversity Naturalis, P.O. Box 9517, 2300 RA Leiden, The Netherlands. E-mail: [email protected] 4Corresponding author. Table of contents: Abstract; Introduction; The genus Agelas in the Greater Caribbean; Material and methods; Classification; Phylum Porifera Grant, 1835; Class Demospongiae Sollas, 1875; Order Agelasida Hartman, 1980; Family Agelasidae Verrill, 1907; Genus Agelas Duchassaing & Michelotti, 1864; Agelas dispar Duchassaing & Michelotti, 1864; Agelas cervicornis (Schmidt, 1870); Agelas wiedenmayeri Alcolado, 1984; Agelas sceptrum (Lamarck, 1815); Agelas dilatata Duchassaing & Michelotti, 1864; Agelas conifera (Schmidt, 1870); Agelas tubulata Lehnert & van Soest, 1996; Agelas repens Lehnert & van Soest, 1998; Agelas cerebrum Assmann, van Soest & Köck, 2001; Agelas schmidti Wilson, 1902

Near Vermilion Sands: the Context and Date of Composition of an Abandoned Literary Draft by J. G. Ballard

Near Vermilion Sands: The Context and Date of Composition of an Abandoned Literary Draft by J. G. Ballard Chris Beckett ‘We had entered an inflamed landscape’1 When Raine Channing – ‘sometime international model and epitome of eternal youthfulness’2 – wanders into ‘Topless in Gaza’, a bio-fabric boutique in Vermilion Sands, and remarks that ‘Nothing in Vermilion Sands ever changes’, she is uttering a general truth about the fantastic dystopian world that J. G. Ballard draws and re-draws in his collection of stories set in and around the tired, flamboyant desert resort, a resort where traumas flower and sonic sculptures run to seed.3 ‘It’s a good place to come back to,’4 she casually continues. But, like many of the female protagonists in these stories – each a femme fatale – she is a captive of her past: ‘She had come back to Lagoon West to make a beginning, and instead found that events repeated themselves.’5 Raine has murdered her ‘confidant and impresario, the brilliant couturier and designer of the first bio-fabric fashions, Gavin Kaiser’.6 Kaiser has been killed – with grim, pantomime karma – by a constricting gold lamé shirt of his own design: ‘Justice in a way, the tailor killed by his own cloth.’7 But Kaiser’s death has not resolved her trauma. Raine is a victim herself, a victim of serial plastic surgery, caught as a teenager in Kaiser’s doomed search for perpetual gamin youth: ‘he kept me at fifteen,’ she says, ‘but not because of the fashion-modelling. He wanted me for ever when I first loved him.’8 She hopes to find in Vermilion Sands, in its localized curvature of time and space, the parts of herself she has lost on a succession of operating tables. -

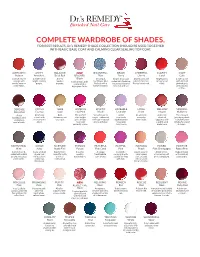

Complete Wardrobe of Shades. For Best Results, Dr.’s Remedy Shade Collection Should Be Used Together with Basic Base Coat and Calming Clear Sealing Top Coat

COMPLETE WARDROBE OF SHADES. FOR BEST RESULTS, Dr.’s REMEDY SHADE COLLECTION SHOULD BE USED TOGETHER WITH BASIC BASE COAT AND CALMING CLEAR SEALING TOP COAT. ALTRUISTIC (Auburn): A playful burnt orange with earthy, autumn undertones; AMITY (Amethyst): A moderately bright, smokey purple; BALANCE (Brick Red): A deep modern maroon; BELOVED (Blush, NEW): Cool cotton candy crème with a high-gloss finish; BOUNTIFUL (Blue): A tranquil, cornflower blue flecked with a hint of shimmer; BRAVE (Berry): Bright, fresh and undeniably feminine; the perfect blend of romance and fun; CHEERFUL (Cherry): A bold, juicy and upbeat shimmer-flecked candy red; CLARITY (Coral): Bright pinky orangey and matte; COZY (Cafe): A cafe au lait with hints of pinkish grey and a splash of lilac; DEFENSE (Deep Red): A rich bordeaux with a luxurious matte finish; FOCUS (Fuchsia): A hot pink classic with rich, romantic allure; GLEE (Gold): Rich, shimmery and luxurious; HOPEFUL (Hot Pink): The perfect ultra bright pink, almost neon and perfectly matte; KINETIC (Khaki): Versatile warm taupe, enhanced with cool tinges of green and gray; LOVEABLE (Lavender): A lilac that lends sophistication to springs flirty frocks; LOYAL (Linen): An ultimate everyday sheer nude; MELLOW (Mauve): A delicate shade of eggplant, with a subtle pink undertone; MINDFUL (Mulberry): This renewed juicy berry shade is stylishly tart yet playful sweet & classic; MOTIVATING (Mink): A muted mink, spiked with subtle purple and cocoa [...]; NOBLE (Navy): A sea-at-dusk shade that reflects light [...]; NURTURE (Nude Pink): Barely there beautiful with a hint of [...]; PASSION (Purple): A subtle, sparkly boysenberry; PEACEFUL (Pink Coral): A poppy, fresh bubble- [...]; PLAYFUL (Pink): A cheerful candy pink with [...]; PLEASING (Peach): A pale, peachy-coral creme [...]; POISED (Pink Champagne): A high-shine, shimmering soft [...]; POSITIVE (Pastel Pink): Baby girl pink with swirls of [...]

The Coral Trait Database, a Curated Database of Trait Information for Coral Species from the Global Oceans

www.nature.com/scientificdata OPEN. The Coral Trait Database, a curated database of trait information for coral species from the global oceans. SUBJECT CATEGORIES: Community ecology; Marine biology; Biodiversity; Biogeography; Coral reefs. Joshua S. Madin, Kristen D. Anderson, Magnus Heide Andreasen, Tom C.L. Bridge, Stephen D. Cairns, Sean R. Connolly, Emily S. Darling, Marcela Diaz, Daniel S. Falster, Erik C. Franklin, Ruth D. Gates, Mia O. Hoogenboom, Danwei Huang, Sally A. Keith, Matthew A. Kosnik, Chao-Yang Kuo, Janice M. Lough, Catherine E. Lovelock, Osmar Luiz, Julieta Martinelli, Toni Mizerek, John M. Pandolfi, Xavier Pochon, Morgan S. Pratchett, Hollie M. Putnam, T. Edward Roberts, Michael Stat, Carden C. Wallace, Elizabeth Widman & Andrew H. Baird. Received: 06 October 2015. Accepted: 28 January 2016. Published: 29 March 2016. Trait-based approaches advance ecological and evolutionary research because traits provide a strong link to an organism’s function and fitness. Trait-based research might lead to a deeper understanding of the functions of, and services provided by, ecosystems, thereby improving management, which is vital in the current era of rapid environmental change. Coral reef scientists have long collected trait data for corals; however, these are difficult to access and often under-utilized in addressing large-scale questions. We present the Coral Trait Database initiative that aims to bring together physiological, morphological, ecological, phylogenetic and biogeographic trait information into a single repository.

MARINE FAUNA and FLORA of BERMUDA a Systematic Guide to the Identification of Marine Organisms

MARINE FAUNA AND FLORA OF BERMUDA. A Systematic Guide to the Identification of Marine Organisms. Edited by WOLFGANG STERRER, Bermuda Biological Station, St. George's, Bermuda, in cooperation with Christiane Schoepfer-Sterrer and 63 text contributors. A Wiley-Interscience Publication. JOHN WILEY & SONS, New York, Chichester, Brisbane, Toronto, Singapore. [...] sucker) on the exumbrella. Color variable, mostly greenish gray-blue, the greenish color due to zooxanthellae embedded in the mesoglea. Polyp slender; strobilation of the monodisc type. Medusae are found, upside down and usually in large congregations, on the muddy bottoms of inshore bays and ponds. W. STERRER. Class Anthozoa (Corals, anemones). CHARACTERISTICS: Exclusively polypoid, solitary or colonial CNIDARIA. Oral end expanded into oral disc which bears the mouth and one or more rings of hollow tentacles. Stomodeum well developed, often with 1 or 2 siphonoglyphs. Gastrovascular cavity compartmentalized by radially arranged mesenteries. [...] many Actiniaria and Ceriantharia can move if exposed to unfavorable conditions. Actiniaria can creep along on their pedal discs at 8-10 cm/hr, pull themselves by their tentacles, move by peristalsis through loose sediment, float in currents, and even swim by coordinated tentacular motion. Both subclasses are represented in Bermuda. Because the orders are so diverse morphologically, they are often discussed separately. In some classifications the anthozoan orders are grouped into 3 (not the 2 considered here) subclasses, splitting off the Ceriantharia and Antipatharia into a separate subclass, the Ceriantipatharia. Corallimorpharia are sometimes considered a suborder of Scleractinia. Approximately 6,500 species of Anthozoa are known. Of 93 species reported from Ber

Taxonomy and Diversity of the Sponge Fauna from Walters Shoal, a Shallow Seamount in the Western Indian Ocean Region

Taxonomy and diversity of the sponge fauna from Walters Shoal, a shallow seamount in the Western Indian Ocean region. By Robyn Pauline Payne. A thesis submitted in partial fulfilment of the requirements for the degree of Magister Scientiae in the Department of Biodiversity and Conservation Biology, University of the Western Cape. Supervisors: Dr Toufiek Samaai, Prof. Mark J. Gibbons, Dr Wayne K. Florence. The financial assistance of the National Research Foundation (NRF) towards this research is hereby acknowledged. Opinions expressed and conclusions arrived at, are those of the author and are not necessarily to be attributed to the NRF. December 2015. Keywords: Indian Ocean; Seamount; Walters Shoal; Sponges; Taxonomy; Systematics; Diversity; Biogeography. Abstract: Seamounts are poorly understood ubiquitous undersea features, with less than 4% sampled for scientific purposes globally. Consequently, the fauna associated with seamounts in the Indian Ocean remains largely unknown, with less than 300 species recorded. One such feature within this region is Walters Shoal, a shallow seamount located on the South Madagascar Ridge, which is situated approximately 400 nautical miles south of Madagascar and 600 nautical miles east of South Africa. Even though it penetrates the euphotic zone (summit is 15 m below the sea surface) and is protected by the Southern Indian Ocean Deep-Sea Fishers Association, there is a paucity of biodiversity and oceanographic data.

Microbiomes of Gall-Inducing Copepod Crustaceans from the Corals Stylophora Pistillata (Scleractinia) and Gorgonia Ventalina

www.nature.com/scientificreports OPEN. Microbiomes of gall-inducing copepod crustaceans from the corals Stylophora pistillata (Scleractinia) and Gorgonia ventalina (Alcyonacea). Received: 26 February 2018. Accepted: 18 July 2018. Published: xx xx xxxx. Pavel V. Shelyakin1,2, Sofya K. Garushyants1,3, Mikhail A. Nikitin4, Sofya V. Mudrova5, Michael Berumen5, Arjen G. C. L. Speksnijder6, Bert W. Hoeksema6, Diego Fontaneto7, Mikhail S. Gelfand1,3,4,8 & Viatcheslav N. Ivanenko6,9. Corals harbor complex and diverse microbial communities that strongly impact host fitness and resistance to diseases, but these microbes themselves can be influenced by stresses, like those caused by the presence of macroscopic symbionts. In addition to directly influencing the host, symbionts may transmit pathogenic microbial communities. We analyzed two coral gall-forming copepod systems by using 16S rRNA gene metagenomic sequencing: (1) the sea fan Gorgonia ventalina with copepods of the genus Sphaerippe from the Caribbean and (2) the scleractinian coral Stylophora pistillata with copepods of the genus Spaniomolgus from the Saudi Arabian part of the Red Sea. We show that bacterial communities in these two systems were substantially different with Actinobacteria, Alphaproteobacteria, and Betaproteobacteria more prevalent in samples from Gorgonia ventalina, and Gammaproteobacteria in Stylophora pistillata. In Stylophora pistillata, normal coral microbiomes were enriched with the common coral symbiont Endozoicomonas and some unclassified bacteria, while copepod and gall-tissue microbiomes were highly enriched with the family ME2 (Oceanospirillales) or Rhodobacteraceae. In Gorgonia ventalina, no bacterial group had significantly different prevalence in the normal coral tissues, copepods, and injured tissues. The total microbiome composition of polyps injured by copepods was different.

Long-Term Recruitment of Soft-Corals (Octocorallia: Alcyonacea) on Artificial Substrata at Eilat (Red Sea)

MARINE ECOLOGY - PROGRESS SERIES (Mar. Ecol. Prog. Ser.) Vol. 38: 161-167, 1987. Published June 18. Long-term recruitment of soft-corals (Octocorallia: Alcyonacea) on artificial substrata at Eilat (Red Sea). Y. Benayahu & Y. Loya. Department of Zoology, The George S. Wise Center for Life Sciences, Tel Aviv University, Tel Aviv 69978, Israel. ABSTRACT: Recruitment of soft corals (Octocorallia: Alcyonacea) on concrete plates was studied in the reefs of the Nature Reserve of Eilat at depths of 17 to 29 m over 12 yr. Xenia macrospiculata was the pioneering species, appearing on the vast majority of the plates before any other spat. This species remained the most conspicuous inhabitant of the substrata throughout the whole study. Approximately 10 % of the plates were very extensively colonized by X. macrospiculata, resembling the percentage of living coverage by the species in the surrounding reef, thus suggesting that during the study X. macrospiculata populations reached their maximal potential to capture the newly available substrata. The successive appearance of an additional 11 soft coral species was recorded. The species composition of the recruits and their abundance corresponded with the soft coral community in the natural reef, indicating that the established spat were progeny of the local populations. Soft coral recruits utilized the edges and lower surfaces of the plates most successfully, rather than the exposed upper surfaces. Such preferential settling of alcyonaceans allows the spat to escape from unfavourable conditions and maintains their high survival in the established community. INTRODUCTION: Studies on processes and dynamics of reef benthic [...] determine the role played by alcyonaceans in the course of reef colonization and in the reef's space allocation.

Appendix: Some Important Early Collections of West Indian Type Specimens, with Historical Notes

Appendix: Some important early collections of West Indian type specimens, with historical notes. Duchassaing & Michelotti, 1864. A biography of Placide Duchassaing de Fonbressin was published by his friend Sagot (1873). Although an aristocrat by birth, as we learn from Michelotti's last extant letter to van Breda, Duchassaing did not add de Fonbressin to his name until 1864. Duchassaing was born around 1819 on Guadeloupe, in a French-Creole family of planters. He was sent to school in Paris, first to the Lycee Louis-le-Grand, then to University. He finished his studies in 1844 with a doctorate in medicine and two additional theses in geology and zoology. He then settled on Guadeloupe as physician. Because of social unrest after the freeing of native labor, he left Guadeloupe around 1848, and visited several islands of the Antilles (notably Nevis, Sint Eustatius, St. [...] between 1841 and 1864, we gain additional information concerning the sponge memoir, starting with the letter dated 8 May 1855. Jacob Gysbert Samuel van Breda (1788-1867) was professor of botany in Franeker (Holland), of botany and zoology in Gent (Belgium), and then of zoology and geology in Leyden. Later he went to Haarlem, where he was secretary of the Hollandsche Maatschappij der Wetenschappen, curator of its cabinet of natural history, and director of Teyler's Museum of minerals, fossils and physical instruments. Van Breda traveled extensively in Europe collecting fossils, especially in Italy. Michelotti exchanged collections of fossils with him over a long period of time, and was received as foreign member of the Hollandsche Maatschappij der Wetenschappen in 1842. The two chief papers of Michelotti on fossils were published by the Hollandsche Maat

St. Kitts Final Report

ReefFix: An Integrated Coastal Zone Management (ICZM) Ecosystem Services Valuation and Capacity Building Project for the Caribbean. ST. KITTS AND NEVIS FIRST DRAFT REPORT, JUNE 2013. PREPARED BY PATRICK I. WILLIAMS, CONSULTANT, CLEVERLY HILL, SANDY POINT, ST. KITTS. PHONE: 1 (869) 765-3988. E-MAIL: [email protected] TABLE OF CONTENTS: List of Figures; List of Tables; Glossary of Terms; Acronyms; Executive Summary; Part 1: Situational analysis; 1.1 Introduction; 1.2 Physical attributes; 1.2.1 Location; 1.2.2 Area; 1.2.3 Physical landscape; 1.2.4 Coastal zone management; 1.2.5 Vulnerability of coastal transportation system; 1.2.6 Climate; 1.3 Socio-economic context; 1.3.1 Population; 1.3.2 General economy; 1.3.3 Poverty; 1.4 Policy frameworks of relevance to marine resource protection and management in St. Kitts and Nevis; 1.4.1 National Environmental Action Plan (NEAP); 1.4.2 National Physical Development Plan (2006); 1.4.3 National Environmental Management Strategy (NEMS); 1.4.4 National Biodiversity Strategy and Action Plan (NABSAP); 1.4.5 Medium Term Economic Strategy Paper (MTESP); 1.5 Legislative instruments of relevance to marine protection and management in St. Kitts and Nevis; 1.5.1 Development Control and Planning Act (DCPA), 2000; 1.5.2 National Conservation and Environmental Protection Act (NCEPA), 1987; 1.5.3 Public Health Act (1969); 1.5.4 Solid Waste Management Corporation Act (1996); 1.5.5 Water Courses and Water Works Ordinance (Cap.

Preliminary Report on the Octocorals (Cnidaria: Anthozoa: Octocorallia) from the Ogasawara Islands

Mem. Natl. Mus. Nat. Sci., Tokyo, (52), pp. 65–94, March 28, 2018. Preliminary Report on the Octocorals (Cnidaria: Anthozoa: Octocorallia) from the Ogasawara Islands. Yukimitsu Imahara1* and Hiroshi Namikawa2. 1Wakayama Laboratory, Biological Institute on Kuroshio, 300–11 Kire, Wakayama, Wakayama 640–0351, Japan. *E-mail: [email protected] 2Showa Memorial Institute, National Museum of Nature and Science, 4–1–1 Amakubo, Tsukuba, Ibaraki 305–0005, Japan. Abstract. Approximately 400 octocoral specimens were collected from the Ogasawara Islands by SCUBA diving during 2013–2016 and by dredging surveys by the R/V Koyo of the Tokyo Metropolitan Ogasawara Fisheries Center in 2014 as part of the project “Biological Properties of Biodiversity Hotspots in Japan” at the National Museum of Nature and Science. Here we report on 52 lots of these octocoral specimens that have been identified to 42 species thus far. The specimens include seven species of three genera in two families of Stolonifera, 25 species of ten genera in two families of Alcyoniina, one species of Scleraxonia, and nine species of four genera in three families of Pennatulacea. Among them, three species of Stolonifera: Clavularia cf. durum Hickson, C. cf. margaritiferae Thomson & Henderson and C. cf. repens Thomson & Henderson, and five species of Alcyoniina: Lobophytum variatum Tixier-Durivault, L. cf. mirabile Tixier-Durivault, Lohowia koosi Alderslade, Sarcophyton cf. boletiforme Tixier-Durivault and Sinularia linnei Ofwegen, are new to Japan. In particular, Lohowia koosi is the first discovery since the original description from the east coast of Australia.

Review on Hard Coral Recruitment (Cnidaria: Scleractinia) in Colombia

Universitas Scientiarum, 2011, Vol. 16 N° 3: 200-218. Available online at: www.javeriana.edu.co/universitas_scientiarum SICI: 2027-1352(201109/12)16:3<200:RHCRCSIC>2.0.TS;2-W Invited review. Review on hard coral recruitment (Cnidaria: Scleractinia) in Colombia. Alberto Acosta1, Luisa F. Dueñas2, Valeria Pizarro3. 1 Unidad de Ecología y Sistemática, Departamento de Biología, Facultad de Ciencias, Pontificia Universidad Javeriana, Bogotá, D.C., Colombia. 2 Laboratorio de Biología Molecular Marina - BIOMMAR, Departamento de Ciencias Biológicas, Facultad de Ciencias, Universidad de los Andes, Bogotá, D.C., Colombia. 3 Programa de Biología Marina, Facultad de Ciencias Naturales, Universidad Jorge Tadeo Lozano, Santa Marta, Colombia. * [email protected] Received: 28-02-2011; Accepted: 11-05-2011. Abstract: Recruitment, defined and measured as the incorporation of new individuals (i.e. coral juveniles) into a population, is a fundamental process for ecologists, evolutionists and conservationists due to its direct effect on population structure and function. Because most coral populations are self-feeding, a breakdown in recruitment would lead to local extinction. Recruitment indirectly affects both renewal and maintenance of existing and future coral communities, coral reef biodiversity (bottom-up effect) and therefore coral reef resilience. This process has been used as an indirect measure of individual reproductive success (fitness) and is the final stage of larval dispersal leading to population connectivity. As a result, recruitment has been proposed as an indicator of coral-reef health in marine protected areas, as well as a central aspect of the decision-making process concerning management and conservation.