Spectral Density Estimation for Nonstationary Data with Nonzero Mean Function Anna E

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Kalman and Particle Filtering

Abstract: The Kalman and Particle filters are algorithms that recursively update an estimate of the state and find the innovations driving a stochastic process given a sequence of observations. The Kalman filter accomplishes this goal by linear projections, while the Particle filter does so by a sequential Monte Carlo method. With the state estimates, we can forecast and smooth the stochastic process. With the innovations, we can estimate the parameters of the model. The article discusses how to set a dynamic model in a state-space form, derives the Kalman and Particle filters, and explains how to use them for estimation.

Since both filters start with a state-space representation of the stochastic processes of interest, section 1 presents the state-space form of a dynamic model. Then, section 2 introduces the Kalman filter and section 3 develops the Particle filter. For extended expositions of this material, see Doucet, de Freitas, and Gordon (2001), Durbin and Koopman (2001), and Ljungqvist and Sargent (2004).

1. The state-space representation of a dynamic model

A large class of dynamic models can be represented by a state-space form:

$$X_{t+1} = \varphi(X_t, W_{t+1}; \gamma) \tag{1}$$

$$Y_t = g(X_t, V_t; \gamma). \tag{2}$$

This representation handles a stochastic process by finding three objects: a vector that describes the position of the system (a state, $X_t \in X \subset \mathbb{R}^l$) and two functions, one mapping the state today into the state tomorrow (the transition equation, (1)) and one mapping the state into observables, $Y_t$ (the measurement equation, (2)).
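To make the recursion concrete, here is a minimal sketch of the Kalman filter for the simplest linear-Gaussian special case of the state-space form, $x_{t+1} = a x_t + w_{t+1}$ and $y_t = x_t + v_t$. The scalar model, the function name `kalman_filter`, and the parameters `a`, `q`, `r`, `x0`, `p0` are assumptions chosen for illustration; they do not come from the article.

```python
def kalman_filter(ys, a, q, r, x0=0.0, p0=1.0):
    """Scalar Kalman filter (illustrative sketch, not the article's code).

    Assumed model: x[t+1] = a * x[t] + w,  w ~ N(0, q)   (transition)
                   y[t]   = x[t] + v,      v ~ N(0, r)   (measurement)
    x0, p0 are the prior mean and variance of the first state.
    Returns the filtered state means and the innovations.
    """
    x_pred, p_pred = x0, p0
    means, innovations = [], []
    for y in ys:
        innov = y - x_pred              # innovation: observation minus its forecast
        s = p_pred + r                  # innovation variance
        k = p_pred / s                  # Kalman gain (linear projection coefficient)
        x_filt = x_pred + k * innov     # updated (filtered) state mean
        p_filt = (1.0 - k) * p_pred     # updated (filtered) state variance
        means.append(x_filt)
        innovations.append(innov)
        x_pred = a * x_filt             # one-step-ahead state forecast
        p_pred = a * a * p_filt + q     # variance of that forecast
    return means, innovations
```

For example, `kalman_filter([1.0, 1.0], a=1.0, q=0.0, r=1.0)` treats the state as a constant with a standard normal prior, so the filtered means are the usual posterior means after one and two unit-variance observations. The returned innovations are the quantities one would plug into a Gaussian likelihood to estimate `a`, `q`, and `r`.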

Lecture 19: Wavelet Compression of Time Series and Images

Lecture 19: Wavelet compression of time series and images. © Christopher S. Bretherton, Winter 2014. Ref: Matlab Wavelet Toolbox help.

19.1 Wavelet compression of a time series

The last section of wavelet leleccum notoolbox.m demonstrates the use of wavelet compression on a time series. The idea is to keep the wavelet coefficients of largest amplitude and zero out the small ones.

19.2 Wavelet analysis/compression of an image

Wavelet analysis is easily extended to two-dimensional images or datasets (data matrices), by first doing a wavelet transform of each column of the matrix, then transforming each row of the result (see wavelet image). The wavelet coefficient matrix has the highest level (largest-scale) averages in the first rows/columns, then successively smaller detail scales further down the rows/columns. The example also shows fine results with 50-fold data compression.

19.3 Continuous Wavelet Transform (CWT)

Given a continuous signal $u(t)$ and an analyzing wavelet $\psi(x)$, the CWT has the form

$$W(\lambda, t) = \int_{-\infty}^{\infty} \lambda^{-1/2}\, \psi\!\left(\frac{s - t}{\lambda}\right) u(s)\, ds \tag{19.3.1}$$

Here $\lambda$, the scale, is a continuous variable. We insist that $\psi$ have mean zero and that its square integrates to 1. The continuous Haar wavelet is defined:

$$\psi(t) = \begin{cases} 1 & 0 < t < 1/2 \\ -1 & 1/2 < t < 1 \\ 0 & \text{otherwise} \end{cases} \tag{19.3.2}$$

$W(\lambda, t)$ is proportional to the difference of running means of $u$ over successive intervals of length $\lambda/2$. In practice, for a discrete time series, the integral is evaluated as a Riemann sum using the Matlab wavelet toolbox function cwt.
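The Riemann-sum evaluation of (19.3.1) with the Haar wavelet (19.3.2) can be sketched in a few lines. This is an illustrative stand-in written in plain Python, not the Matlab `cwt` function referred to in the lecture; the midpoint sampling convention and the names `haar` and `haar_cwt` are assumptions.

```python
def haar(x):
    """Continuous Haar wavelet: +1 on (0, 1/2), -1 on (1/2, 1), 0 otherwise."""
    if 0.0 < x < 0.5:
        return 1.0
    if 0.5 < x < 1.0:
        return -1.0
    return 0.0


def haar_cwt(u, dt, scale, t):
    """Riemann-sum approximation of W(scale, t) for samples u.

    Assumption: u[k] is the signal value at the midpoint s = (k + 0.5) * dt
    of the k-th sampling interval.
    """
    total = 0.0
    for k, u_k in enumerate(u):
        s = (k + 0.5) * dt
        total += haar((s - t) / scale) * u_k * dt
    return total / scale ** 0.5


# A constant signal has no detail at any scale; a step located where the
# wavelet changes sign gives the largest response at that scale.
flat = [1.0] * 100
step = [1.0 if k < 45 else 0.0 for k in range(100)]
w_flat = haar_cwt(flat, dt=0.01, scale=0.5, t=0.2)
w_step = haar_cwt(step, dt=0.01, scale=0.5, t=0.2)
```

Here `w_flat` vanishes up to rounding, while `w_step` equals the integral of the signal over the first half-interval divided by the square root of the scale, which is the "difference of running means" interpretation with the second mean equal to zero.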

Measurement Techniques of Ultra-Wideband Transmissions

Rec. ITU-R SM.1754-0

RECOMMENDATION ITU-R SM.1754-0*

Measurement techniques of ultra-wideband transmissions (2006)

Scope: Taking into account that there are two general measurement approaches (time domain and frequency domain) this Recommendation gives the appropriate techniques to be applied when measuring UWB transmissions.

Keywords: Ultra-wideband (UWB), international transmissions, short-duration pulse

The ITU Radiocommunication Assembly,

considering
a) that intentional transmissions from devices using ultra-wideband (UWB) technology may extend over a very large frequency range;
b) that devices using UWB technology are being developed with transmissions that span numerous radiocommunication service allocations;
c) that UWB technology may be integrated into many wireless applications such as short-range indoor and outdoor communications, radar imaging, medical imaging, asset tracking, surveillance, vehicular radar and intelligent transportation;
d) that a UWB transmission may be a sequence of short-duration pulses;
e) that UWB transmissions may appear as noise-like, which may add to the difficulty of their measurement;
f) that the measurements of UWB transmissions are different from those of conventional radiocommunication systems;
g) that proper measurements and assessments of power spectral density are key issues to be addressed for any radiation,

noting
a) that terms and definitions for UWB technology and devices are given in Recommendation ITU-R SM.1755;
b) that there are two general measurement approaches, time domain and frequency domain, with each having a particular set of advantages and disadvantages,

recommends
1 that techniques described in Annex 1 to this Recommendation should be considered when measuring UWB transmissions.

* Radiocommunication Study Group 1 made editorial amendments to this Recommendation in the years 2018 and 2019 in accordance with Resolution ITU-R 1.

Alternative Tests for Time Series Dependence Based on Autocorrelation Coefficients

Alternative Tests for Time Series Dependence Based on Autocorrelation Coefficients. Richard M. Levich and Rosario C. Rizzo*. Current Draft: December 1998.

Abstract: When autocorrelation is small, existing statistical techniques may not be powerful enough to reject the hypothesis that a series is free of autocorrelation. We propose two new and simple statistical tests (RHO and PHI) based on the unweighted sum of autocorrelation and partial autocorrelation coefficients. We analyze a set of simulated data to show the higher power of RHO and PHI in comparison to conventional tests for autocorrelation, especially in the presence of small but persistent autocorrelation. We show an application of our tests to data on currency futures to demonstrate their practical use. Finally, we indicate how our methodology could be used for a new class of time series models (the Generalized Autoregressive, or GAR models) that take into account the presence of small but persistent autocorrelation.

An earlier version of this paper was presented at the Symposium on Global Integration and Competition, sponsored by the Center for Japan-U.S. Business and Economic Studies, Stern School of Business, New York University, March 27-28, 1997. We thank Paul Samuelson and the other participants at that conference for useful comments.

* Stern School of Business, New York University and Research Department, Bank of Italy, respectively.

1. Introduction

Economic time series are often characterized by positive

Time Series: Co-Integration

Time Series: Co-integration

Series: Economic Forecasting; Time Series: General; Time Series: Nonstationary Distributions and Unit Roots; Time Series: Seasonal Adjustment

Bibliography

Banerjee A, Dolado J J, Galbraith J W, Hendry D F 1993 Co-Integration, Error Correction, and the Econometric Analysis of Non-stationary Data. Oxford University Press, Oxford, UK
Box G E P, Tiao G C 1977 A canonical analysis of multiple time series. Biometrika 64: 355–65
Chan N H, Tsay R S 1996 On the use of canonical correlation analysis in testing common trends. In: Lee J C, Johnson W O, Zellner A (eds.) Modelling and Prediction: Honoring S. Geisser. Springer-Verlag, New York, pp. 364–77
Chan N H, Wei C Z 1988 Limiting distributions of least squares estimates of unstable autoregressive processes. Annals of Statistics 16: 367–401
Engle R F, Granger C W J 1987 Cointegration and error correction: Representation, estimation, and testing. Econometrica 55: 251–76
Watson M W 1994 Vector autoregressions and co-integration. In: Engle R F, McFadden D L (eds.) Handbook of Econometrics Vol. IV. Elsevier, The Netherlands

N. H. Chan

Time Series: Cycles

Time series data in economics and other fields of social science often exhibit cyclical behavior. For example, aggregate retail sales are high in November and December and follow a seasonal cycle; voter registrations are high before each presidential election and follow an election cycle; and aggregate macroeconomic activity falls into recession every six to eight years and follows a business cycle. In spite of this cyclicality, these series are not perfectly predictable, and the cycles are not strictly periodic.

Generating Time Series with Diverse and Controllable Characteristics

GRATIS: GeneRAting TIme Series with diverse and controllable characteristics. Yanfei Kang,∗ Rob J Hyndman,† and Feng Li‡

Abstract: The explosion of time series data in recent years has brought a flourish of new time series analysis methods, for forecasting, clustering, classification and other tasks. The evaluation of these new methods requires either collecting or simulating a diverse set of time series benchmarking data to enable reliable comparisons against alternative approaches. We propose GeneRAting TIme Series with diverse and controllable characteristics, named GRATIS, with the use of mixture autoregressive (MAR) models. We simulate sets of time series using MAR models and investigate the diversity and coverage of the generated time series in a time series feature space. By tuning the parameters of the MAR models, GRATIS is also able to efficiently generate new time series with controllable features. In general, as a costless surrogate to the traditional data collection approach, GRATIS can be used as an evaluation tool for tasks such as time series forecasting and classification. We illustrate the usefulness of our time series generation process through a time series forecasting application.

Keywords: Time series features; Time series generation; Mixture autoregressive models; Time series forecasting; Simulation.

1 Introduction

With the widespread collection of time series data via scanners, monitors and other automated data collection devices, there has been an explosion of time series analysis methods developed in the past decade or two. Paradoxically, the large datasets are often also relatively homogeneous in the industry domain, which limits their use for evaluation of general time series analysis methods (Keogh and Kasetty, 2003; Muñoz et al., 2018; Kang et al., 2017).

Windowing Techniques, the Welch Method for Improvement of Power Spectrum Estimation

Computers, Materials & Continua, Tech Science Press, DOI:10.32604/cmc.2021.014752, Article

Windowing Techniques, the Welch Method for Improvement of Power Spectrum Estimation

Dah-Jing Jwo1,*, Wei-Yeh Chang1 and I-Hua Wu2
1 Department of Communications, Navigation and Control Engineering, National Taiwan Ocean University, Keelung, 202-24, Taiwan
2 Innovative Navigation Technology Ltd., Kaohsiung, 801, Taiwan
* Corresponding Author: Dah-Jing Jwo. Email: [email protected]
Received: 01 October 2020; Accepted: 08 November 2020

Abstract: This paper revisits the characteristics of windowing techniques with various window functions involved, and successively investigates spectral leakage mitigation utilizing the Welch method. The discrete Fourier transform (DFT) is ubiquitous in digital signal processing (DSP) for the spectrum analysis and can be efficiently realized by the fast Fourier transform (FFT). The sampling signal will result in distortion and thus may cause unpredictable spectral leakage in discrete spectrum when the DFT is employed. Windowing is implemented by multiplying the input signal with a window function and windowing amplitude modulates the input signal so that the spectral leakage is evened out. Therefore, windowing processing reduces the amplitude of the samples at the beginning and end of the window. In addition to selecting appropriate window functions, a pretreatment method, such as the Welch method, is effective to mitigate the spectral leakage. Due to the noise caused by imperfect, finite data, the noise reduction from Welch’s method is a desired treatment. The nonparametric Welch method is an improvement on the periodogram spectrum estimation method where the signal-to-noise ratio (SNR) is high and mitigates noise in the estimated power spectra in exchange for frequency resolution reduction.
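The combination described in the abstract (multiply each segment by a window, take its periodogram, then average over overlapping segments) can be sketched as follows. This is a minimal pure-Python illustration under stated assumptions, not the authors' code: it uses a periodic Hann window, a direct O(n²) DFT instead of an FFT, and a normalization by the window energy only, so the result is a power spectrum up to a scaling convention. The names `hann`, `periodogram`, and `welch` are hypothetical.

```python
import cmath
import math


def hann(n):
    """Periodic Hann window of length n."""
    return [0.5 - 0.5 * math.cos(2.0 * math.pi * k / n) for k in range(n)]


def periodogram(segment, window):
    """Windowed periodogram of one segment for bins 0 .. n//2 (direct DFT)."""
    n = len(segment)
    energy = sum(w * w for w in window)          # compensates the window's power loss
    xw = [x * w for x, w in zip(segment, window)]
    spectrum = []
    for j in range(n // 2 + 1):
        acc = sum(xw[k] * cmath.exp(-2j * math.pi * j * k / n) for k in range(n))
        spectrum.append(abs(acc) ** 2 / energy)
    return spectrum


def welch(x, seg_len, overlap):
    """Average the windowed periodograms of overlapping segments.

    Requires 0 <= overlap < seg_len <= len(x).
    """
    step = seg_len - overlap
    window = hann(seg_len)
    starts = range(0, len(x) - seg_len + 1, step)
    spectra = [periodogram(x[s:s + seg_len], window) for s in starts]
    return [sum(column) / len(spectra) for column in zip(*spectra)]
```

Averaging over more, shorter segments lowers the variance of the estimate, while the frequency resolution drops with the segment length; that is the trade-off the abstract refers to. For a sinusoid with exactly 8 cycles per 64-sample segment, `welch(x, seg_len=64, overlap=32)` peaks at bin 8, with the Hann window spreading some power into the two neighbouring bins.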

Windowing Design and Performance Assessment for Mitigation of Spectrum Leakage

E3S Web of Conferences 94, 03001 (2019), https://doi.org/10.1051/e3sconf/20199403001, ISGNSS 2018

Windowing Design and Performance Assessment for Mitigation of Spectrum Leakage

Dah-Jing Jwo 1,*, I-Hua Wu 2 and Yi Chang 1
1 Department of Communications, Navigation and Control Engineering, National Taiwan Ocean University, 2 Pei-Ning Rd., Keelung 202, Taiwan
2 Bison Electronics, Inc., Neihu District, Taipei 114, Taiwan

Abstract. This paper investigates the windowing design and performance assessment for mitigation of spectral leakage. A pretreatment method to reduce the spectral leakage is developed. In addition to selecting appropriate window functions, the Welch method is introduced. Windowing is implemented by multiplying the input signal with a windowing function. The periodogram technique based on Welch method is capable of providing good resolution if data length samples are selected optimally. Windowing amplitude modulates the input signal so that the spectral leakage is evened out. Thus, windowing reduces the amplitude of the samples at the beginning and end of the window, altering leakage. The influence of various window functions on the Fourier transform spectrum of the signals was discussed, and the characteristics and functions of various window functions were explained. In addition, we compared the differences in the influence of different data lengths on spectral resolution and noise levels caused by the traditional power spectrum estimation and various window-function-based Welch power spectrum estimations.

1 Introduction

The spectrum analysis [1-4] is a critical concern in many military and civilian applications, such as performance test of electric power systems and communication [...] knowledge. Due to computer capacity and limitations on processing time, only limited time samples can actually be processed during spectrum analysis and data processing of signals, i.e., the original signals need to be cut off, which induces leakage errors.

Quantum Noise and Quantum Measurement

Quantum noise and quantum measurement
Aashish A. Clerk, Department of Physics, McGill University, Montreal, Quebec, Canada H3A 2T8

Contents
1 Introduction
2 Quantum noise spectral densities: some essential features
  2.1 Classical noise basics
  2.2 Quantum noise spectral densities
  2.3 Brief example: current noise of a quantum point contact
  2.4 Heisenberg inequality on detector quantum noise
3 Quantum limit on QND qubit detection
  3.1 Measurement rate and dephasing rate
  3.2 Efficiency ratio
  3.3 Example: QPC detector
  3.4 Significance of the quantum limit on QND qubit detection
  3.5 QND quantum limit beyond linear response
4 Quantum limit on linear amplification: the op-amp mode
  4.1 Weak continuous position detection
  4.2 A possible correlation-based loophole?
  4.3 Power gain
  4.4 Simplifications for a detector with ideal quantum noise and large power gain
  4.5 Derivation of the quantum limit
  4.6 Noise temperature
  4.7 Quantum limit on an "op-amp" style voltage amplifier
5 Quantum limit on a linear-amplifier: scattering mode
  5.1 Caves-Haus formulation of the scattering-mode quantum limit
  5.2 Bosonic Scattering Description of a Two-Port Amplifier
References

1 Introduction

The fact that quantum mechanics can place restrictions on our ability to make measurements is something we all encounter in our first quantum mechanics class. One is typically presented with the example of the Heisenberg microscope (Heisenberg, 1930), where the position of a particle is measured by scattering light off it.

Effect of Data Gaps: Comparison of Different Spectral Analysis Methods

Ann. Geophys., 34, 437–449, 2016, www.ann-geophys.net/34/437/2016/, doi:10.5194/angeo-34-437-2016, © Author(s) 2016. CC Attribution 3.0 License.

Effect of data gaps: comparison of different spectral analysis methods

Costel Munteanu1,2,3, Catalin Negrea1,4,5, Marius Echim1,6, and Kalevi Mursula2
1 Institute of Space Science, Magurele, Romania
2 Astronomy and Space Physics Research Unit, University of Oulu, Oulu, Finland
3 Department of Physics, University of Bucharest, Magurele, Romania
4 Cooperative Institute for Research in Environmental Sciences, Univ. of Colorado, Boulder, Colorado, USA
5 Space Weather Prediction Center, NOAA, Boulder, Colorado, USA
6 Belgian Institute of Space Aeronomy, Brussels, Belgium
Correspondence to: Costel Munteanu ([email protected])
Received: 31 August 2015 – Revised: 14 March 2016 – Accepted: 28 March 2016 – Published: 19 April 2016

Abstract. In this paper we investigate quantitatively the effect of data gaps for four methods of estimating the amplitude spectrum of a time series: fast Fourier transform (FFT), discrete Fourier transform (DFT), Z transform (ZTR) and the Lomb–Scargle algorithm (LST). We devise two tests: the single-large-gap test, which can probe the effect of a single data gap of varying size and the multiple-small-gaps test, used to study the effect of numerous small gaps of variable size distributed within the time series.

1 Introduction

Spectral analysis is a widely used tool in data analysis and processing in most fields of science. The technique became very popular with the introduction of the fast Fourier transform algorithm which allowed for an extremely rapid computation of the Fourier Transform. In the absence of modern supercomputers, this was not just useful, but also the only
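A single-large-gap experiment of the kind described in the abstract can be imitated with a direct Fourier sum evaluated at the actual sample times, which needs no interpolation across the gap. The sketch below is an assumption-laden illustration rather than the paper's implementation: the function name `amplitude_at`, the normalization by the number of available samples, and the synthetic 5 Hz test signal are all chosen here for demonstration.

```python
import cmath
import math


def amplitude_at(times, values, freq):
    """Amplitude estimate at one frequency from (possibly gapped) samples.

    Direct Fourier sum over the samples that are actually available,
    normalized by their count so a unit-amplitude sinusoid gives about 1.
    """
    n = len(times)
    acc = sum(v * cmath.exp(-2j * math.pi * freq * t) for t, v in zip(times, values))
    return 2.0 * abs(acc) / n


# Synthetic series: unit-amplitude 5 Hz sine sampled every 0.01 s for 2 s,
# with one large gap (samples 60..99 missing).
dt = 0.01
kept = [k for k in range(200) if not 60 <= k < 100]
times = [k * dt for k in kept]
values = [math.sin(2.0 * math.pi * 5.0 * t) for t in times]

amp_signal = amplitude_at(times, values, 5.0)    # at the true frequency
amp_leak = amplitude_at(times, values, 12.0)     # away from it: gap-induced leakage
```

In this particular setup the gap removes a whole number of signal periods, so `amp_signal` recovers the unit amplitude essentially exactly, while `amp_leak` is small but nonzero because the gap breaks the orthogonality that the complete, evenly sampled record would have. Gaps that cut through a period, or many small gaps, distort the estimate more.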

Monte Carlo Smoothing for Nonlinear Time Series

Monte Carlo Smoothing for Nonlinear Time Series. Simon J. GODSILL, Arnaud DOUCET, and Mike WEST

We develop methods for performing smoothing computations in general state-space models. The methods rely on a particle representation of the filtering distributions, and their evolution through time using sequential importance sampling and resampling ideas. In particular, novel techniques are presented for generation of sample realizations of historical state sequences. This is carried out in a forward-filtering backward-smoothing procedure that can be viewed as the nonlinear, non-Gaussian counterpart of standard Kalman filter-based simulation smoothers in the linear Gaussian case. Convergence in the mean squared error sense of the smoothed trajectories is proved, showing the validity of our proposed method. The methods are tested in a substantial application for the processing of speech signals represented by a time-varying autoregression and parameterized in terms of time-varying partial correlation coefficients, comparing the results of our algorithm with those from a simple smoother based on the filtered trajectories.

KEY WORDS: Bayesian inference; Non-Gaussian time series; Nonlinear time series; Particle filter; Sequential Monte Carlo; State-space model.

1. INTRODUCTION

In this article we develop Monte Carlo methods for smoothing in general state-space models. To fix notation, consider the standard Markovian state-space model (West and Harrison 1997)

$$x_{t+1} \sim f(x_{t+1} \mid x_t) \quad \text{(state evolution density)},$$

[...] and

$$p(x_{t+1} \mid y_{1:t+1}) = \frac{g(y_{t+1} \mid x_{t+1})\, p(x_{t+1} \mid y_{1:t})}{p(y_{t+1} \mid y_{1:t})}.$$

Similarly, smoothing can be performed recursively backward in time using the smoothing formula

$$p(x_t \mid y_{1:T}) = \int p(x_{t+1} \mid y_{1:T})\, \frac{p(x_t \mid y_{1:t})\, f(x_{t+1} \mid x_t)}{p(x_{t+1} \mid y_{1:t})}\, dx_{t+1}.$$

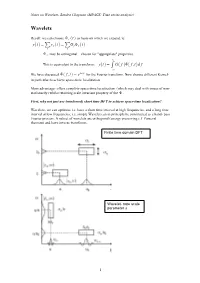

Wavelets

Notes on Wavelets - Sandra Chapman (MPAGS: Time series analysis)

Wavelets

Recall: we can choose $\psi_f(t)$ as a basis on which we expand, i.e.

$$y(t) = \sum_f y_f(t) = \sum_f G_f\, \psi_f(t),$$

where the $\psi_f$ may be orthogonal, chosen for "appropriate" properties. This is equivalent to the transform

$$y(t) = \int_{-\infty}^{\infty} G(f)\, \psi(f, t)\, df.$$

We have discussed $\psi(f, t) = e^{2\pi i f t}$ for the Fourier transform. Now choose a different kernel, in particular to achieve space-time localization. Main advantage: it offers complete space-time localization (which may deal with issues of non-stationarity) whilst retaining the scale-invariant property of the $\psi$.

First, why not just use a (windowed) short-time DFT to achieve space-time localization? With wavelets we can optimize, i.e. have a short time interval at high frequencies and a long time interval at low frequencies; a simple wavelet can in principle be constructed as a band-pass Fourier process. A subset of wavelets are orthogonal (energy preserving, c.f. Parseval's theorem) and have inverse transforms.

[Figure: finite time domain DFT compared with a wavelet; note the scale parameter s.]

So at its simplest, a wavelet transform is simply a collection of windowed band-pass filters applied to the Fourier transform, and this is how wavelet transforms are often computed (as in Matlab). However, we will want to impose some desirable properties: invertibility (orthogonality) and completeness.

Continuous Fourier transform:

$$x(t) = \sum_{m=-\infty}^{\infty} S_m\, e^{2\pi i f_m t}, \qquad f_m = \frac{m}{T}, \qquad S_m = \frac{1}{T} \int_{-T/2}^{T/2} x(t)\, e^{-2\pi i f_m t}\, dt,$$

with orthogonality

$$\int_{-\pi}^{\pi} e^{i(n-m)x}\, dx = 2\pi\, \delta_{mn},$$

and the continuous Fourier transform pair

$$x(t) = \int_{-\infty}^{\infty} S(f)\, e^{2\pi i f t}\, df, \qquad S(f) = \int_{-\infty}^{\infty} x(t)\, e^{-2\pi i f t}\, dt.$$

Continuous wavelet transform:

$$W(\tau, a) = \int_{-\infty}^{\infty} x(t)\, \psi^{*}_{\tau,a}(t)\, dt, \qquad x(t) = \frac{1}{C_\psi} \int_{0}^{\infty} \left[ \int_{-\infty}^{\infty} W(\tau, a)\, \psi_{\tau,a}(t)\, d\tau \right] \frac{da}{a^2},$$

where the mother wavelet is

$$\psi_{\tau,a}(t) = \frac{1}{\sqrt{a}}\, \psi\!\left(\frac{t - \tau}{a}\right),$$

$\tau$ is the shift parameter and $a$ is the scale (dilation) parameter (we can generalize to have a scaling function $a(t)$).