National Hockey League Data: Analysis and Prediction

Total pages: 16

File type: PDF, size: 1020Kb


Recommended publications


Ed Meagher Arena Unveiling

Ed Meagher Arena Unveiling, November 2013 news release. Renovated Ed Meagher Arena unveiled: Concordia Stingers hit the ice NHL style. Montreal, November 20, 2013. Not only are the Concordia Stingers back on home ice after the reopening of the Ed Meagher Arena, they’re now competing on a brand new rink surface that conforms to National Hockey League specifications. The modernized arena features the latest and most cutting-edge technology on the market today: an eco-friendly carbon dioxide (CO2) ice refrigeration system. The technology, developed in Quebec, means the arena can operate 11 months a year, compared to seven using the former ammonia system. In addition to a new ice surface and boards, fans will appreciate the new heating system; the burning of natural gas has been replaced by recycled heat generated by the new refrigeration system. The renovations, made possible by a joint investment of $7.75 million from the Government of Quebec and Concordia, involved an expansion of 2,500 sq. ft. The new space boasts larger changing rooms, an equipment storage room, and two new changing rooms for soccer and rugby players. Other renovations include window replacements and a new ventilation and dehumidification system. About the Ed Meagher Arena and its athletes: The Ed Meagher Arena plays host to approximately 40 Stingers men’s and women’s hockey games a year. The Concordia hockey players proudly represent the university at an elite level, competing against some of the best teams in North America. Over the years, many talented athletes, including Olympians and NHLers, have developed their skills as members of the Stingers or its founding institutions’ teams.

Goal Scoring in Small-Sided Games in Ice Hockey in Comparison to 5V5 -Game

Goal scoring in small-sided games in ice hockey in comparison to 5v5 game. Antti Niiranen. Bachelor Thesis, Degree Programme in Sport and Leisure Management, 2015. Abstract, 6.5.2015. Author: Antti Niiranen. Group or year of entry: DP10. Title of thesis: Goal scoring in small-sided games in ice hockey in comparison to 5v5 game. Number of pages and appendices: 30. Supervisor: Pasi Mustonen. The purpose of this thesis is to show how small-sided games can be a really good way to practise goal scoring in ice hockey. The aim is to show how big the difference is between small-sided games and the 5v5 game from the scoring chance point of view. You can’t get more game-like scoring situations than by actually playing a drill that resembles the real game. The thesis consists of a literature review, which discusses the characteristics of ice hockey and ice hockey statistics. It also goes into studies of small-sided games in other sports. The last part of the thesis is about how this research was planned and implemented. In this research-based thesis I have first analysed three different kinds of small-sided games with even strength and then compared the statistics from them to the statistics from five-against-five games played with even strength. Small-sided games were first compared as one unit and then separately to the 5v5 game. There were six times more scoring chances and over seven times more goals in small-sided games. Within all small-sided games, 3v3 had the most goals and scoring chances.

Defenseman Goal Scoring Analysis from the 2013-2014 National Hockey League Season

Defenseman goal scoring analysis from the 2013-2014 National Hockey League season. Michael Marshall. Bachelor’s Thesis, Degree Programme in Sport and Leisure Management, May 2015. Abstract. Author: Michael Marshall. Group or year of entry: XI. Title of report: Defenseman goal scoring analysis from the 2013-2014 National Hockey League season. Number of report pages and attachment pages: 48 + 6. Teacher(s) or supervisor(s): Pasi Mustonen. The purpose of this thesis is to determine how defensemen score goals. Scoring goals is an important part of the game but is often overlooked by defensemen. The main objective is to see how the goals are scored and whether there are certain ways in which most goals are scored. Previous research has been done on goal scoring as a whole, but very little, if any, has been done on defenseman goal scoring. The work and findings are based on the 2013/2014 NHL season. Using different variables, the goals were recorded to show what was involved in how each goal was scored. The results for defenseman scoring are that quick, accurate shots are the best shooting type to score. Getting to the middle of the ice and shooting right away was also a key finding. For defensemen, it was important, when the puck is received, either to shoot right away or to hold onto the puck and wait until a play develops in front of the net. Keywords: Defenseman, Scoring, Shooting. Table of contents: 1 Introduction; 2 Literature Review; 2.1 How the goal was scored; 2.2 Scoring Area; 2.3 Scoring type

Implication of Puck Possession on Scoring Chances in Ice Hockey

Implication of Puck Possession on Scoring Chances in Ice Hockey. Laura Rollins. Bachelor's Thesis, Degree Programme in Sports and Leisure Management, 2010. Abstract, 2010. Author: Laura Rollins. Group: DP VI. Title: Implications of Puck Possession on Scoring Chances in Ice Hockey. Number of pages and appendices: 38 + 1. Supervisors: Antti Pennanen, Kari Savolainen. Much of the conventional wisdom in ice hockey suggests that moving the puck forward, towards the opponent's goal, is the best strategy for producing scoring chances. Past research has lent credence to this wisdom. Studies have consistently shown that scoring chances in hockey are produced from fast attacks and short possessions of less than 10 seconds. Thus, many coaches the world over preach a brand of hockey that sacrifices puck control for constant forward motion. As a consequence, hockey is often reduced to a game of Pong: teams exchange the puck back and forth until someone commits a fatal error and a goal is scored. Previous studies have given only a partial picture of the nature of scoring chances. They have implied that the production of a chance is dependent only on the possession immediately prior to that chance. This study will expand on the earlier research by examining the ten possessions prior to a scoring chance, and how they affect the production of that chance. Key words: Puck possession, Scoring chances, Scoring efficiency, Possession, Hockey Tactics, Hockey Offense. Table of contents: 1 Introduction; 2 Theoretical framework; 2.1 Clarification of terms; 2.1.1 Possession; 2.1.2 Possession vs dump and chase

Official Bank of the Buffalo Sabres

Official Bank of the Buffalo Sabres. Make great memories. Invest today for the goals of tomorrow. First Niagara Bank, N.A. Visit us at firstniagara.com. Table of Contents. Sabres Personnel: Allaire, J.T.; Babcock, George; Benson, Cliff; Black, Theodore N. Record Book: Record by Day/Month; Regular Season Overtime Goals; Sabres Streaks; Season Openers

Hockey Penalty Kill Percentage

Hockey Penalty Kill Percentage. Penalty-kill percentage is the number of shorthanded situations that resulted in no goals out of the total number of shorthanded situations. Statistical abbreviations: +/- Plus/Minus; PIM Penalty Minutes; SOG Shots on Goal; S Shooting Percentage; MSS Missed Shots; GWG Game-Winning Goals; PPG Power Play.
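The penalty-kill definition in the excerpt above can be written as a one-line ratio. The sketch below is illustrative only: the function and parameter names are hypothetical, and it makes the simplifying assumption that each shorthanded situation yields at most one goal against, so killed penalties equal situations minus power-play goals allowed.

```python
def penalty_kill_percentage(times_shorthanded: int, goals_against: int) -> float:
    """Share of shorthanded situations that ended with no goal against, in percent.

    Assumes (for illustration) at most one goal against per shorthanded situation.
    """
    if times_shorthanded <= 0:
        raise ValueError("times_shorthanded must be positive")
    if not 0 <= goals_against <= times_shorthanded:
        raise ValueError("goals_against must be between 0 and times_shorthanded")
    killed = times_shorthanded - goals_against
    return 100.0 * killed / times_shorthanded


# Made-up inputs: 100 shorthanded situations, 18 goals allowed.
print(penalty_kill_percentage(100, 18))  # 82.0
```

Official league statistics may count situations differently (for example, major penalties during which several goals are scored), so published figures can deviate slightly from this ratio.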

Model Trees for Identifying Exceptional Players in the NHL Draft

Model Trees for Identifying Exceptional Players in the NHL Draft. Oliver Schulte, Yejia Liu, and Chao Li, Department of Computing Science, Simon Fraser University, {oschulte, yejial, chao_li_2}@sfu.ca. February 27, 2018. Abstract: Drafting strong players is crucial for a team’s success. We describe a new data-driven interpretable approach for assessing draft prospects in the National Hockey League. Successful previous approaches have 1) built a predictive model based on player features (e.g. Schuckers 2017 [ESSC16]), or 2) derived performance predictions from the observed performance of comparable players in a cohort (Weissbock 2015 [W15]). This paper develops model tree learning, which incorporates strengths of both model-based and cohort-based approaches. A model tree partitions the feature space according to the values of discrete features, or learned thresholds for continuous features. Each leaf node in the tree defines a group of players, easily described to hockey experts, with its own group regression model. Compared to a single model, the model tree forms an ensemble that increases predictive power. Compared to cohort-based approaches, the groups of comparables are discovered from the data, without requiring a similarity metric. The performance predictions of the model tree are competitive with the state-of-the-art methods, which validates our model empirically. We show in case studies that the model tree player ranking can be used to highlight strong and weak points of players. 1 Introduction. Player ranking is one of the most studied subjects in sports analytics [AGSK13]. In this paper we consider predicting success in the National Hockey League (NHL) from junior league data, with the goal of supporting draft decisions.
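To make the model-tree idea in the abstract above concrete, here is a deliberately tiny sketch: players are partitioned by one discrete feature, and each resulting leaf gets its own one-variable least-squares line. It is not the authors' algorithm. The split feature is fixed by hand rather than learned, the feature names (`position`, `junior_points`, `nhl_games`) are hypothetical, and the rows are synthetic toy values chosen only so the arithmetic is easy to follow.

```python
from collections import defaultdict


def fit_line(xs, ys):
    """Ordinary least squares for y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:  # all x equal: fall back to predicting the mean
        return 0.0, mean_y
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def fit_model_tree(rows, split_key, x_key, y_key):
    """One-level 'tree': a leaf per value of split_key, a regression per leaf."""
    groups = defaultdict(list)
    for row in rows:
        groups[row[split_key]].append(row)
    return {
        leaf: fit_line([r[x_key] for r in members], [r[y_key] for r in members])
        for leaf, members in groups.items()
    }


def predict(tree, row, split_key, x_key):
    """Route the row to its leaf, then apply that leaf's regression."""
    slope, intercept = tree[row[split_key]]
    return slope * row[x_key] + intercept


# Synthetic toy rows (not real player data).
rows = [
    {"position": "F", "junior_points": 10, "nhl_games": 5},
    {"position": "F", "junior_points": 20, "nhl_games": 10},
    {"position": "F", "junior_points": 30, "nhl_games": 15},
    {"position": "D", "junior_points": 10, "nhl_games": 12},
    {"position": "D", "junior_points": 20, "nhl_games": 14},
    {"position": "D", "junior_points": 30, "nhl_games": 16},
]
tree = fit_model_tree(rows, "position", "junior_points", "nhl_games")
prospect = {"position": "D", "junior_points": 40}
print(predict(tree, prospect, "position", "junior_points"))
```

Because each leaf has its own slope and intercept, the same junior scoring total leads to different predictions for forwards and defensemen, which is the interpretability benefit the abstract points to. A full model tree would also choose the splits, and thresholds for continuous features, from the data.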

Goal Scoring Analysis Based on Team Level in National Hockey League in the Season 2006/2007

Goal scoring analysis based on team level in National Hockey League in the season 2006/2007. Niels Garbe. Bachelor Thesis, DP in Sport & Leisure Management, 2013. Author: Niels Garbe. Group or year of entry: DP VIII. Title of thesis: Goal scoring analysis based on team level in the National Hockey League in the season 2006/2007. Number of report pages and attachment pages: 43 + 1. Thesis advisor: Kari Savolainen. The purpose of this thesis is to determine how top-ranked teams score differently from other teams. In ice hockey the aim is to score more goals than the other team in order to win hockey games. The thesis examines the differences in goal scoring on a team level. Therefore the goals of the 2006/2007 season of the National Hockey League were collected and categorized by predetermined and approved variables. The teams were combined into three groups: top-, middle- and bottom-ranked teams. The groups were based on position in the final ranking. All the scored goals of each group were analyzed with the statistics programme SPSS 17.0. The results for the three groups are all close to one another, and the top-ranked teams differ only narrowly in the researched categories. The results show that the top teams have high-scoring centers. Furthermore, top-ranked teams score about 27.3 percent of all their goals in power-play situations. Top teams also score more often than the other two groups from a breakout started in the defensive side of the ice. Even so, the top teams are not leading in every category of the analysis variables.

Weighted Analytics – What Do the Numbers Suggest?

Weighted Analytics – What Do the Numbers Suggest? Craig Peterson, Vinodh K. Chellamuthu, & Joseph Lovell, Dixie State University. Critical Commentary. Journal of Emerging Sport Studies, Volume 3 (2020): London, Ontario. All Rights Reserved, 2019. ISSN 2562-3184. Introduction. The growing popularity, as well as the profit-oriented business of sports, has recently led to an increased need to understand player and team performance. The expansion of statistical analysis for predicting individual and team success continues to be a need for sport organizations (Bedford & Baglin, 2009; Chan, Chow, & Novati, 2012). This has led to the introduction of various metrics that help teams be more successful by properly evaluating player performance. As analytical methods evolve because of the larger role they play in the success of sport performance, there is a strong push to improve the methods used for evaluating individual and team performance (Moskowitz & Wertheim, 2012). Hockey and basketball, particularly, have seen a surge in the use of analytics as a crucial component in designing winning strategies. Using various analytics provides coaches with better insight for practices and game preparations, as they highlight both the strengths and weaknesses of teams. This in turn gives fans better insight into potential game outcomes, thus increasing the popularity and profit of the sport. This critical commentary will discuss the use of analytics in sport and provide two possible models in the advancement of analytics in both basketball (modified effective field goal percentage) and hockey (modified Corsi) for effective evaluation of individual and team performance. Statistics is a powerful tool used to understand and analyze dynamics in the sports world.

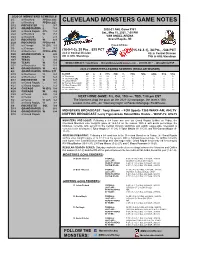

Cleveland Monsters Game Notes

Cleveland Monsters Game Notes. 2020-21 AHL Game #381, Sat., May 15, 2021, 7:00 PM, Van Andel Arena, Grand Rapids, MI. Team records shown: (16-9-1-2), 35 Pts., .625 PCT, 2nd in Central Division, 8th in AHL Standings; (15-12-3-1), 34 Pts., .548 PCT, 4th in Central Division, 15th in AHL Standings. Media contact: Tony Brown - [email protected] - 216.630.8617 - @TonyBrownPxP

2020-21 Monsters schedule (home games in capitals): 2/5 at Rockford PPD to 2/22; 2/6 at Rockford PPD to 2/23; 2/12 ROCHESTER L 3-7; 2/13 GRAND RAPIDS L 1-3; 2/20 at Grand Rapids OTL 1-2; 2/22 at Rockford W 7-3; 2/23 at Rockford L 2-3; 2/27 ROCKFORD W 6-3; 2/28 ROCKFORD PPD to 4/9; 3/5 at Chicago W (SO) 4-3; 3/6 at Chicago W 3-1; 3/12 at Rochester PPD to 4/10; 3/20 GRAND RAPIDS L 2-5; 3/25 TEXAS W 3-1; 3/27 TEXAS L 3-4; 3/28 TEXAS W 5-2; 3/31 at Rochester W 5-1; 4/3 GRAND RAPIDS W 4-2; 4/9 GRAND RAPIDS PPD to 5/11; 4/10 at Rochester W 9-2; 4/14 at Rochester W 5-3; 4/17 ROCHESTER W 6-3; 4/20 at Grand Rapids L 3-5; 4/21 at Grand Rapids L 1-2; 4/24 CHICAGO W (OT) 5-4; 4/25 CHICAGO W 4-2; 4/29 at Texas SOL 1-2; 5/1 at Texas L 2-5. NEXT HOME GAME: Fri., Oct.

2020-21 Monsters leading scorers (regular season):

| # | Player | GP | G | A | PTS | PIM | +/- | PPG | SHG | GWG | OTG | SOG |
|---|--------|----|---|---|-----|-----|-----|-----|-----|-----|-----|-----|
| 21 | Tyler Angle | 22 | 11 | 13 | 24 | 2 | +11 | 3 | 1 | 3 | 1 | 0 |
| 16 | Tyler Sikura [A] | 28 | 11 | 10 | 21 | 8 | -17 | 5 | 1 | 2 | 0 | 0 |
| 17 | Carson Meyer | 25 | 9 | 11 | 20 | 13 | 0 | 5 | 0 | 0 | 0 | 0 |
| 26 | Thomas Schemitsch | 27 | 2 | 16 | 18 | 19 | -3 | 0 | 1 | 0 | 0 | 0 |
| 18 | Dillon Simpson [A] | 22 | 6 | 10 | 16 | 4 | -1 | 2 | 0 | 1 | 0 | 0 |
| 91 | Liam Foudy | 12 | 3 | 13 | 16 | 0 | +7 | 1 | 1 | 0 | 0 | 0 |
| 22 | Jake Christiansen | 27 | 3 | 12 | 15 | 14 | +4 | 1 | 0 | 0 | 0 | 0 |
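The points totals and PCT figures quoted with the two team records in the game notes above can be reproduced with a short calculation. The sketch assumes the four-part record reads wins, regulation losses, overtime losses, shootout losses, with two points per win and one point per overtime or shootout loss. The excerpt does not spell this out, but the assumption matches both printed lines. Function names are hypothetical.

```python
def standings_points(wins: int, losses: int, ot_losses: int, so_losses: int) -> int:
    """Two points per win, one per overtime or shootout loss (assumed scheme)."""
    return 2 * wins + ot_losses + so_losses


def points_percentage(wins: int, losses: int, ot_losses: int, so_losses: int) -> float:
    """Points earned divided by the maximum available (two per game played)."""
    games_played = wins + losses + ot_losses + so_losses
    if games_played == 0:
        raise ValueError("no games played")
    points = standings_points(wins, losses, ot_losses, so_losses)
    return points / (2 * games_played)


# The two records quoted in the game notes.
for record in [(16, 9, 1, 2), (15, 12, 3, 1)]:
    print(record, standings_points(*record), f"{points_percentage(*record):.3f}")
# (16, 9, 1, 2) 35 0.625
# (15, 12, 3, 1) 34 0.548
```

Ranking by points percentage rather than raw points is the natural choice when teams have played different numbers of games, as in a season with many postponements.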

2014 NATIONAL HOCKEY COACHES SYMPOSIUM a Celebration with American Hockey Coaches

2014 National Hockey Coaches Symposium: A Celebration with American Hockey Coaches. The USA Hockey Coaching Education Program is presented by. Symposium information. Date: August 21-24, 2014. Location: Las Vegas, Nevada. Who Can Attend? Any USA Hockey-registered coach who has completed their coaching certification Levels 1-4 prior to May 1, 2014 is eligible to participate. Due to the one clinic per season restriction, coaches who attend a Level 4 clinic in May, June, July or August are not eligible to attend the Level 5 clinic in 2014. What Are The USA Hockey Level 5 Requirements? Candidates for Level 5 Certification must attend the entire National Hockey Coaches Symposium. Additional information regarding submitted material requirements (thesis or other written material) will be distributed at the event. Where Can I Stay? The JW Marriott Las Vegas Resort and Spa will serve as the host hotel for the 2014 National Hockey Coaches Symposium. Guest reservations may be made by calling Marriott Reservations at 877.622.3140, the hotel directly at 800.582.2996, or online. Attendees will receive a special group rate of $109 per night plus tax (single or double occupancy). Be sure to mention that you are part of the USA Hockey 2014 National Hockey Coaches Symposium in order to receive this group rate. Reservations must be made by August 1, 2014. JW Marriott Las Vegas Resort and Spa, 221 N. Rampart Boulevard, Las Vegas, NV 89145. Reservations: 877.622.3140 (toll free) | 800.582.2996 (local). Forum, General Sessions: Each presentation will be an hour in length. The topics presented will deal with all aspects of the game of hockey.

Catapult Signs League-Wide Video Analytics Agreement with National Hockey League

ASX Market Announcement, 26 April 2017. Catapult signs league-wide video analytics agreement with National Hockey League. Catapult Group International (ASX: CAT) today announced that its video analytics division, XOS Digital (XOS), has signed a league-wide agreement with North America’s professional ice hockey league, the National Hockey League (NHL®), to provide in-game video analysis services to all NHL teams, commencing with the 2017 Stanley Cup® Playoffs. XOS will provide a turnkey solution to the NHL, whereby in-game video will be streamed live to each NHL team’s bench. Coaching staff will have the ability to use the XOS ThunderCloud iBench app as a fully customisable, data-driven, visual feedback tool, allowing for instant analysis and real-time coaching adjustments. The deal initially covers the 2017 Stanley Cup Playoffs, plus the next two full NHL seasons. Matt Bairos, President and CEO of XOS Digital, commented: “We’re delighted to announce the first video-based league-wide agreement for Catapult. The solution we have established for the NHL is a real game-changer in terms of how teams can access and analyse player performance in-game, improving the speed with which coaching feedback and adjustments can be made.” “Our existing NHL clients, 23 of the 31 teams in the NHL, have been using the iBench app for a number of years, and they consistently tell us that it has quickly become integral to how coaching staff give tactical feedback to players, both in real-time and during post-game review sessions,” Mr Bairos said. “We believe this will enhance the in-arena coaching experience,” said David Lehanski, NHL Senior Vice President, Business Development and Global Partnerships.